Design Considerations for High Bandwidth Memory Controller

Atul Dhamba, Anand V Kulkarni (Atria Logic Pvt Ltd)

INTRODUCTION

High Bandwidth Memory (HBM) is a high-performance 3D-stacked DRAM. It stacks DRAM chips (memory dies) vertically on a high-speed logic layer and connects them with a vertical interconnect technology called TSV (through-silicon via). TSVs reduce the connection impedance and therefore the total power consumption. The HBM DRAM uses a wide-interface architecture to achieve high-speed, low-power operation. It is optimized for high-bandwidth operation to a stack of multiple DRAM devices across a number of independent interfaces called channels. It is anticipated that each DRAM stack will support up to 8 channels. Each channel provides access to an independent set of DRAM banks, and requests from one channel may not access data attached to a different channel. Channels are independently clocked and need not be synchronous.

HBM DRAM v/s TRADITIONAL DRAM

Every memory, whether SRAM or DRAM, faces three major constraints on further improvement: area, power and bandwidth. As processor operating frequencies increase, limited memory bandwidth becomes the bottleneck that stops a system from reaching its maximum performance. HBM offers a solution to this ‘memory bandwidth wall’. In addition, its close-proximity interposer layer gives high yield and its 3D structure gives a reduced form factor.

BANDWIDTH

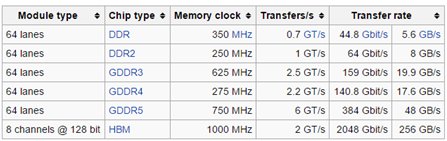

Compared to traditional DDR DRAMs, HBM has a 128-bit-wide data bus on each channel. This gives much higher bandwidth, about 256 GB/s for 8 channels per stack.
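Peak bandwidth is the total data-bus width multiplied by the per-pin data rate, which gives a quick check on the 256 GB/s figure. The Python sketch below assumes 2 Gb/s per pin. The text gives only the 128-bit channel width and the 8 channels, and 2 Gb/s is the per-pin rate at which those numbers produce 256 GB/s.

```python
# Peak-bandwidth arithmetic for one HBM stack.
# Assumption: 2 Gb/s per data pin; the article states only the 128-bit
# channel width and the number of channels.

CHANNEL_WIDTH_BITS = 128   # data bits per channel (from the text)
CHANNELS = 8               # channels per stack (from the text)
PIN_RATE_GBPS = 2.0        # assumed per-pin data rate in Gb/s

def peak_bandwidth_gbytes(width_bits: int, channels: int, pin_rate_gbps: float) -> float:
    """Return peak bandwidth in GB/s: total data pins x per-pin rate / 8 bits per byte."""
    return width_bits * channels * pin_rate_gbps / 8

print(peak_bandwidth_gbytes(CHANNEL_WIDTH_BITS, CHANNELS, PIN_RATE_GBPS))  # 256.0
```

At 1 Gb/s per pin, the same arithmetic gives 128 GB/s.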

Table 1: Memory Bandwidth Comparison

*The table above is taken from Wikipedia.

POWER

According to data analysis provided by SK Hynix, HBM DRAM consumes up to 42% less power than GDDR5 DRAM.

SMALL FORM FACTOR

Adding more GDDR5 memory to a board takes more board space, because each die must be placed separately. HBM uses a 3D-stacked physical architecture and sits close to the processor core. As a result, it reduces the board-area footprint by about 90%.

HBM MEMORY PROTOCOL OVERVIEW

HBM memory is controlled by commands issued on separate row and column address buses. Each HBM channel is divided into multiple banks, each bank has multiple rows, and each row has multiple columns. To access a memory location, its row must be opened first. The row address bus controls the opening and closing of rows, while the column address bus controls write/read operations on the open row. Only one row can be open in a bank at a time. To access a location in a different row of the same bank, the current row must first be closed by issuing a precharge on the row address bus.

The updated HBM specification (JESD235A) supports a pseudo-channel mode (pseudo-mode) in which a new row can be activated in a bank that already has an open row. In that case the HBM memory internally closes the current row and opens the new one. In this mode the 128-bit data bus is divided into two separate 64-bit data buses that share the row and column address buses. Each half forms a pseudo-channel within the same channel, so a memory access on pseudo-channel 0 does not affect a memory access on pseudo-channel 1. This considerably reduces wait states (dead cycles) and further increases memory bandwidth.
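The row-management rules above can be summarized in a small Python sketch. The function name is hypothetical and timing is ignored. Setting `implicit_precharge=True` models the pseudo-mode behaviour, in which the memory closes the open row itself when a new row is activated.

```python
# Which row/column commands an access needs, given one bank's open-row state.
# Simplified sketch: only command ordering is modelled, not timing.
from typing import List, Optional

def commands_for_access(open_row: Optional[int], target_row: int,
                        implicit_precharge: bool = False) -> List[str]:
    """Return the command sequence needed to read/write target_row in one bank.

    open_row is None when the bank is closed (precharged).
    implicit_precharge models the pseudo-mode behaviour where an ACTIVATE to a
    bank with an open row makes the memory close that row internally.
    """
    if open_row == target_row:
        return ["RD/WR"]                 # row hit: column command only
    if open_row is None or implicit_precharge:
        return ["ACT", "RD/WR"]          # bank closed, or memory precharges internally
    return ["PRE", "ACT", "RD/WR"]       # row conflict: close the old row first
```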

The HBM memory can be put into low-power modes through commands on the row address bus, which saves power in the I/O drivers. To reduce power consumption further, clocks can be gated while the memory is in power-down or self-refresh mode.

ATRIA LOGIC HBM MEMORY CONTROLLER IP

The Atria Logic HBM Memory Controller (AL-HBMMC) IP lets the user communicate with HBM memory at high speed. Every channel is independent of the others, so it makes sense to have one memory controller per channel. This gives the user complete control over data transactions on a particular channel. The AL-HBMMC supports legacy mode and pseudo-channel mode, with channel densities from 1Gb to 8Gb. Its standout feature is the ‘2-command compare and issue’ algorithm, which increases bandwidth by considerably reducing dead cycles between row and column commands. The memory controller reference design is available in Verilog HDL. Table 2 lists the features supported by the AL-HBMMC:

| Feature | Description |
| --- | --- |
| Addressing Mode | Supports Legacy Mode and Pseudo-Channel Mode |
| Burst Length | 2, 4 |
| User-Interface | FIFO-Based |
| User-Interface Transfer Rate | Full Rate |
| MC-PHY Transfer Rate | Full Rate |
| Number of Banks Supported | 8, 16, 32 |
| Channels Supported | 1 Channel per MC |
| Densities Supported | 1Gb, 2Gb, 4Gb, 8Gb |
| Address Parity | Yes (enabled/disabled using MRS registers) |
| Data Parity | Yes (enabled/disabled using MRS registers for read/write data) |
| Data DBI | Yes (enabled/disabled using MRS registers for read/write data) |
| Data DM | Yes (enabled/disabled using MRS registers for write data) |
| Data ECC | Yes (enabled/disabled using MRS registers for read/write data) |
| MRS Mode | Yes |
| Refresh Mode | Yes |
| Power-Down Mode | Yes |
| Self-Refresh Mode | Yes |
| Single-Bank Refresh Mode | Not Supported |
| Number of commands compared for maximum bandwidth | 2 (the memory controller compares the current and next commands and decides which one to issue to reduce dead cycles and increase bandwidth) |
| Write-Write or Read-Read | Non-seamless, with a minimum of 2 clock cycles between consecutive writes or consecutive reads |
| Clock Gating | Not Supported |
| Clock Frequency Change | Not Supported |
| Timing Parameters | User-configurable (default values as per the Samsung specification for HBM) |
| Burst Re-ordering | Yes (for write and read data in legacy mode with BL=4) |

Table 2: Features List

AL-HBMMC ARCHITECTURE

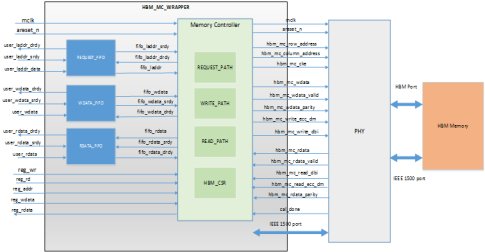

The HBM memory controller communicates with a single independent channel of the HBM memory. The user interface is FIFO-based, with independent FIFOs for logical addresses, write data and read data. The memory controller performs three tasks:

- It maps logical addresses to physical addresses.
- It reads from the write data FIFO when a write command is issued.
- It stores read data in the read data FIFO when the memory returns data for a read request.

By default, the timing parameters follow the Samsung specification. For memories from other vendors, the user can edit the timing parameters through the CSR registers.

The PHY interface is a custom interface that can be connected to a modified DFI interface. The memory controller is divided into 4 parts: command, write data, read data and register interface. The architecture minimizes communication between these parts, which helps the user optimize area and performance during ASIC layout.

Figure 1: HBM MC Architecture

AL-HBMMC FLOW DIAGRAM

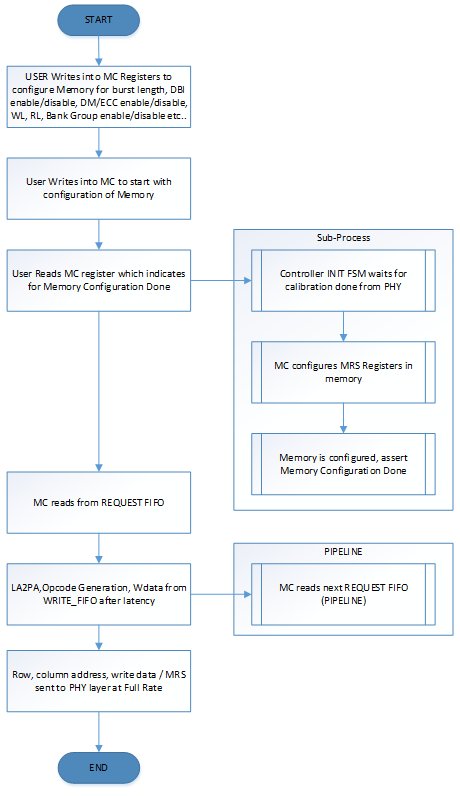

The flow diagram in Figure 2 shows how the memory controller behaves in any mode and density:

1. After the asynchronous active-low reset is de-asserted, the memory controller waits for the calibration-done signal from the PHY. Until then, the user can program the MRS and timing parameters into the CSR registers through the register interface.
2. The user instructs the memory controller to start its initialization FSM. The controller checks for the calibration-done signal, then runs the initialization FSM, which configures the memory with the MRS settings written by the user.
3. After initialization, the memory controller starts normal write/read operation by reading from the FIFO interface.
4. During refresh and power-down modes, the memory controller stops reading from the FIFO interface until it exits these modes.
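The Python sketch below models the flow in Figure 2. The state and signal names are hypothetical, and refresh and power-down are merged into a single low-power state. It shows the order of reset, calibration, initialization and normal operation; it is not the actual RTL.

```python
# Simplified model of the AL-HBMMC top-level flow (Figure 2).
# State and signal names are hypothetical; only the ordering follows the text.
from enum import Enum, auto

class State(Enum):
    RESET = auto()
    WAIT_CAL = auto()      # waiting for PHY calibration done; CSRs may be programmed
    INIT = auto()          # initialization FSM writes the user's MRS settings
    NORMAL = auto()        # FIFO-driven reads and writes
    LOW_POWER = auto()     # refresh / power-down: user FIFOs are not read

def next_state(state: State, reset_n: bool, cal_done: bool, start_init: bool,
               init_done: bool, low_power_req: bool) -> State:
    if not reset_n:                      # asynchronous active-low reset
        return State.RESET
    if state is State.RESET:
        return State.WAIT_CAL
    if state is State.WAIT_CAL:
        # Initialization starts only when the user requested it AND the PHY is calibrated.
        return State.INIT if (cal_done and start_init) else State.WAIT_CAL
    if state is State.INIT:
        return State.NORMAL if init_done else State.INIT
    if state is State.NORMAL:
        return State.LOW_POWER if low_power_req else State.NORMAL
    # LOW_POWER: return to normal operation once the request is withdrawn.
    return State.LOW_POWER if low_power_req else State.NORMAL

def reads_user_fifo(state: State) -> bool:
    """The controller pops the user FIFOs only during normal operation."""
    return state is State.NORMAL
```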

Figure 2: AL-HBMMC Flow Diagram

INTERFACE

The HBM memory controller has a FIFO-based interface on the user side and a custom PHY interface on the PHY side. Write data, logical addresses and read data have separate FIFOs. A separate register interface configures the CSR and MRS registers of the memory controller.

Figure 3: Memory Controller Interface

The MC-to-PHY interface shown in Figure 3 is a custom interface, simplified to have separate ports for the write path and the read path. The memory controller delays the write data, write ECC/DM and write DBI buses to match the write latency configured by the user. The memory controller sends commands and write data at SDR (single data rate) and also expects read data at SDR.
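The write-latency alignment works like a shift register that is WL stages deep. The sketch below is a Python behavioral model. It assumes the full-rate interface listed in Table 2 (one controller cycle per tCK), and the class name is hypothetical.

```python
# Delaying SDR write data by the configured write latency (WL), in clock cycles.
# Behavioral sketch only; the class and port names are hypothetical.
from collections import deque
from typing import Deque, Optional

class WriteLatencyDelay:
    def __init__(self, wl_cycles: int) -> None:
        if not 1 <= wl_cycles <= 8:      # WL range of 1..8 tCK given in the text
            raise ValueError("write latency must be 1..8 cycles")
        # WL pipeline stages, initially empty (None = no data driven this cycle).
        self.pipe: Deque[Optional[int]] = deque([None] * wl_cycles)

    def tick(self, wdata: Optional[int]) -> Optional[int]:
        """Advance one clock: accept this cycle's data, return the data leaving the pipe."""
        self.pipe.append(wdata)
        return self.pipe.popleft()
```

With WL = 3, data accepted in cycle 0 appears on the PHY write port in cycle 3.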

LOGICAL TO PHYSICAL ADDRESSING

Logical-to-physical address mapping follows a simple approach. The controller reads each entry from the logical address FIFO, converts it to a physical address and stores it in an internal physical address FIFO. The physical address is then read from this FIFO, and HBM commands such as ACTIVATE, PRECHARGE, WRITE and READ are generated and issued to the memory.

The logical-to-physical address mapping depends on the channel mode and density.

Physical addressing uses the BRC (bank-row-column) format. With BRC, transactions on consecutive logical addresses stay within one bank. With the RBC (row-bank-column) format, they would be spread across separate banks. BRC therefore arranges the banks in logical address order.

RBC is generally faster than BRC because it spreads accesses across multiple open banks. However, the ‘2-command compare and issue’ algorithm helps make BRC access faster.
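The two formats differ only in the order of the bit fields within the logical address. The Python sketch below assumes hypothetical widths of 4 bank bits, 14 row bits and 6 column bits (64 columns per row, as described under command generation). The real widths depend on the channel mode and density.

```python
# Logical-to-physical mapping in BRC (bank-row-column) and RBC (row-bank-column) order.
# Field widths are hypothetical placeholders; real widths depend on mode and density.
from typing import Tuple

BANK_BITS, ROW_BITS, COL_BITS = 4, 14, 6   # assumed: 16 banks, 16K rows, 64 columns

def _field(value: int, lsb: int, width: int) -> int:
    """Extract `width` bits of `value` starting at bit `lsb`."""
    return (value >> lsb) & ((1 << width) - 1)

def map_brc(addr: int) -> Tuple[int, int, int]:
    """addr = {bank, row, col}: consecutive addresses stay inside one bank."""
    col = _field(addr, 0, COL_BITS)
    row = _field(addr, COL_BITS, ROW_BITS)
    bank = _field(addr, COL_BITS + ROW_BITS, BANK_BITS)
    return bank, row, col

def map_rbc(addr: int) -> Tuple[int, int, int]:
    """addr = {row, bank, col}: each new row's worth of data moves to the next bank."""
    col = _field(addr, 0, COL_BITS)
    bank = _field(addr, COL_BITS, BANK_BITS)
    row = _field(addr, COL_BITS + BANK_BITS, ROW_BITS)
    return bank, row, col
```

Logical address 64 is the first address past the end of a row. BRC maps it to row 1 of bank 0, while RBC maps it to row 0 of bank 1.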

COMMAND GENERATION

A memory location in DRAM is accessed in 3 steps:

1. Open the row. The row containing the address is opened with an ACTIVATE command, and the memory takes a fixed time (tRCD) to open it. Each row has 64 column locations, each able to store 256 bits of data.
2. Access a column to read or write. The column address and a write or read command (WR/RD) are issued on the column address bus.
3. Close the row. Once the access is complete, the row is closed with a PRE command, which may be issued no earlier than tRAS after the ACTIVATE.

A new row can be opened in the same bank only after tRC has elapsed since the previous ACTIVATE.
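These constraints fix the earliest cycle at which each command can be issued to a single bank. The Python sketch below uses the Table 10 defaults (tRCDRD = 14, tRAS = 33, tRP = 14, tRC = 47 cycles). It deliberately ignores other constraints such as tRTP, tWR, tRRD and tFAW.

```python
# Earliest issue cycles in one bank for ACT -> RD -> PRE -> next ACT.
# Defaults are the Table 10 values in clock cycles; tRTP, tWR, tRRD and
# tFAW are ignored in this sketch.
T_RCDRD, T_RAS, T_RP, T_RC = 14, 33, 14, 47

def bank_cycle(act_at: int = 0) -> dict:
    rd_at = act_at + T_RCDRD                        # column command no earlier than tRCD
    pre_at = act_at + T_RAS                         # row stays open at least tRAS
    next_act = max(act_at + T_RC, pre_at + T_RP)    # both tRC and tRP must be met
    return {"ACT": act_at, "RD": rd_at, "PRE": pre_at, "next ACT": next_act}

print(bank_cycle())  # {'ACT': 0, 'RD': 14, 'PRE': 33, 'next ACT': 47}
```

With these defaults, tRAS + tRP equals tRC (33 + 14 = 47), so the precharge-based limit and the ACT-to-ACT limit coincide.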

| Command | MSB | LSB |
| --- | --- | --- |
| WR | 0 | 0 |
| RD | 0 | 1 |
| NOP | 1 | 0 |
| NOP | 1 | 1 |

Table 3: Command Generation

Even a simple transaction on a memory location involves multiple steps and timing constraints. The AL-HBMMC handles these steps and timings, so the user does not have to. The user supplies only an address and a command (write or read), and the memory controller carries out the DRAM protocol needed to access the memory location.
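From the user's side, a request is just a command and a logical address. The Python sketch below decodes the 2-bit command field of Table 3. The `pack_request` layout, which places the command above a 24-bit logical address in one word, is a hypothetical illustration and not the documented FIFO format.

```python
# Decoding the 2-bit user command field of Table 3.
# pack_request uses a HYPOTHETICAL word layout {cmd[1:0], addr[addr_bits-1:0]}.
CMD_WR, CMD_RD = 0b00, 0b01

def decode_cmd(cmd: int) -> str:
    """MSB = 1 means NOP regardless of the LSB (Table 3)."""
    if cmd & 0b10:
        return "NOP"
    return "RD" if cmd & 0b01 else "WR"

def pack_request(cmd: int, logical_addr: int, addr_bits: int = 24) -> int:
    """Place the 2-bit command above the logical address (illustrative layout)."""
    return ((cmd & 0b11) << addr_bits) | (logical_addr & ((1 << addr_bits) - 1))
```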

The AL-HBMMC decides when to open a row, issue a column command and close a row. It also decides when to issue the refresh commands that recharge the DRAM cells so they do not lose their data. These scheduling decisions aim to reduce dead cycles and increase efficiency.

‘2-COMMAND COMPARE AND ISSUE’ ALGORITHM

The memory controller becomes more efficient when it has fewer dead cycles. Instead of waiting for the timing constraint of a previously issued row or column command to expire, it issues another command. This is done through command re-ordering: the memory controller compares the current and next requests and forwards the best possible command to the memory.

For example, suppose a row command is issued to open row 0 in bank 1. The write/read to bank 1 must then wait tRCD, the time from opening a row to issuing a column command. If the next request is a write/read to the already open row 0 in bank 2, the memory controller issues that request first instead of waiting. This simple example shows how the algorithm reduces dead cycles and increases the efficiency of the memory controller.
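The compare-and-issue idea can be sketched in Python as follows. This is a hypothetical, simplified model and not the AL-HBMMC implementation. Only tRCD is tracked, using the Table 10 default of 14 cycles. The scheduler prefers the current request, falls back to the next request, and otherwise issues a NOP.

```python
# Hypothetical sketch: compare the current and next request and issue whichever
# can go now. Only tRCD is modelled; the real AL-HBMMC policy may differ.
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

T_RCD = 14  # Table 10 default tRCDRD, in clock cycles

@dataclass
class Bank:
    open_row: Optional[int] = None
    col_ready_at: int = 0          # earliest cycle a RD/WR may be issued

@dataclass
class Request:
    bank: int
    row: int
    op: str                        # "RD" or "WR"

def needed_cmd(req: Request, banks: Dict[int, Bank], now: int) -> Tuple[str, bool]:
    """Return (next command this request needs, whether it may issue at `now`)."""
    b = banks[req.bank]
    if b.open_row is None:
        return "ACT", True
    if b.open_row != req.row:
        return "PRE", True         # tRAS/tRP not modelled in this sketch
    return req.op, now >= b.col_ready_at

def pick(current: Request, nxt: Request, banks: Dict[int, Bank],
         now: int) -> Tuple[str, Optional[Request]]:
    """Prefer the current request, fall back to the next one, else NOP.

    A real scheduler must also avoid precharging a row that the current
    request still needs; that check is omitted here.
    """
    for req in (current, nxt):
        cmd, ok = needed_cmd(req, banks, now)
        if ok:
            return cmd, req
    return "NOP", None

def issue(cmd: str, req: Request, banks: Dict[int, Bank], now: int) -> None:
    """Update bank state for row commands; column commands leave it unchanged."""
    b = banks[req.bank]
    if cmd == "ACT":
        b.open_row, b.col_ready_at = req.row, now + T_RCD
    elif cmd == "PRE":
        b.open_row = None
```

The test below replays the example from the text:

1. Cycle 0: the ACT for bank 1 is issued.
2. Cycle 1: the write to bank 1 is still waiting out tRCD, so the read to the open row in bank 2 is issued instead of idling.
3. Cycle 14: tRCD has elapsed and the write to bank 1 is issued.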

Write Data Path

The user writes 288 bits into the write data FIFO: 32 bits for data masking and 256 bits of write data. When data masking is enabled, the 32 MSBs are used as mask bits, and each bit masks 8 bits of write data, as shown in Table 4. The LSBs of the write data ([127:0]) are sent to the memory on the rising edge of the clock, and the MSBs ([255:128]) on the falling edge. The memory controller generates the ECC bits if ECC is enabled and the DBI bits if write DBI is enabled.

Legacy mode supports write data burst re-ordering for odd column addresses at burst length 4. Bursts {0,1,2,3} are re-ordered and sent to the memory as {2,3,0,1}. A write request with an odd column address raises a flag indicating that the current write data must be re-ordered before it is sent. Write latency is configurable in the MRS registers from 1 to 8 tCK. This block delays the write data according to the write latency configured in MRS register 2 OP[2:0].
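The edge split and the BL4 re-ordering are simple bit and list operations. The Python sketch below assumes a BL4 transfer is represented as four 128-bit beats, two per 256-bit SDR word. The function names are hypothetical.

```python
# Splitting a 256-bit SDR write word into rising/falling-edge beats, and the
# legacy-mode BL4 re-order for odd column addresses (bursts 0,1,2,3 -> 2,3,0,1).
# Sketch only; function names are hypothetical.
from typing import List, Tuple

MASK128 = (1 << 128) - 1

def split_edges(word256: int) -> Tuple[int, int]:
    """[127:0] is sent on the rising edge, [255:128] on the falling edge."""
    return word256 & MASK128, (word256 >> 128) & MASK128

def reorder_bl4(beats: List[int], odd_column: bool) -> List[int]:
    """beats = [b0, b1, b2, b3]; odd column addresses are sent as b2, b3, b0, b1."""
    if len(beats) != 4:
        raise ValueError("BL4 needs exactly four beats")
    return beats[2:] + beats[:2] if odd_column else list(beats)
```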

The ECC generation function is enabled or disabled through MRS register 4 OP[0]. As per the HBM specification, ECC and data masking are mutually exclusive: if ECC is enabled, data masking is disabled, and vice versa. If the user enables both, ECC is disabled and data masking is enabled.

The HBM specification does not define an ECC polynomial and leaves the choice to the user. The HBM memory does not check ECC; it stores the ECC bits alongside the data and returns them during reads. The memory controller performs ECC correction on the read data path. On the write data path, it generates an 8-bit ECC value for every 64 bits of data, which gives 32 ECC bits for each 256-bit SDR write word.
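Because the code is left to the user, one common choice for 8 check bits per 64 data bits is an extended Hamming SECDED (72,64) code. It corrects any single-bit error and detects double-bit errors. The Python sketch below uses this code purely as an illustration; the text does not say which code the AL-HBMMC uses. The sketch also encodes the ECC/DM precedence rule described above.

```python
# Illustrative extended Hamming SECDED (72,64): 7 Hamming check bits + 1 overall
# parity bit per 64 data bits. NOT necessarily the code used by AL-HBMMC.
from typing import Tuple

# Codeword positions 1..71; Hamming check bits sit at powers of two.
DATA_POS = [p for p in range(1, 72) if p & (p - 1)]   # the 64 data positions

def _data_syndrome(data: int) -> int:
    """XOR of the codeword positions of all set data bits."""
    s = 0
    for i, pos in enumerate(DATA_POS):
        if (data >> i) & 1:
            s ^= pos
    return s

def ecc_encode(data: int) -> int:
    """Return 8 check bits for 64 data bits: [6:0] Hamming, [7] overall parity."""
    check = _data_syndrome(data)          # check bit i lives at position 2**i
    overall = (bin(data).count("1") + bin(check).count("1")) & 1
    return check | (overall << 7)

def ecc_decode(data: int, check: int) -> Tuple[int, str]:
    """Return (data, status); status is 'ok', 'corrected' or 'uncorrectable'."""
    s = (check & 0x7F) ^ _data_syndrome(data)
    odd = (bin(data).count("1") + bin(check & 0xFF).count("1")) & 1
    if s == 0 and not odd:
        return data, "ok"
    if odd:                               # odd number of flips: single-bit error
        if s in DATA_POS:
            data ^= 1 << DATA_POS.index(s)   # repair the flipped data bit
        return data, "corrected"          # otherwise a check bit was flipped
    return data, "uncorrectable"          # even flips with s != 0: double-bit error

def ecc_256(word: int) -> int:
    """32 ECC bits for a 256-bit word: one 8-bit code per 64-bit slice."""
    return sum(ecc_encode((word >> (64 * k)) & ((1 << 64) - 1)) << (8 * k)
               for k in range(4))

def resolve_ecc_dm(ecc_en: bool, dm_en: bool) -> Tuple[bool, bool]:
    """ECC and DM are exclusive; if both are requested, DM wins (per the text)."""
    return ecc_en and not dm_en, dm_en
```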

Write data bus inversion is a configurable function, enabled or disabled through MRS register 0 OP[1] (DBIac Write in Table 6). Each 8 bits of write data have a single data-inversion bit that indicates whether the byte is inverted.
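The Python sketch below shows per-byte inversion. It assumes an AC-style rule, as the DBIac name suggests: a byte is inverted when more than 4 of its 8 lanes would toggle relative to the byte previously transmitted on the same lanes. The exact rule should be checked against JESD235A, and the function names are hypothetical.

```python
# Per-byte data bus inversion, sketched as an ASSUMED AC-style rule: invert a
# byte when more than 4 of its 8 lanes would toggle versus the previous byte.
from typing import Tuple

def dbi_encode(data: int, prev_tx: int) -> Tuple[int, int]:
    """Return (byte to transmit, DBI bit) given the previously transmitted byte."""
    toggles = bin((data ^ prev_tx) & 0xFF).count("1")
    if toggles > 4:
        return (~data) & 0xFF, 1
    return data & 0xFF, 0

def dbi_decode(tx: int, dbi: int) -> int:
    """Receiver side: undo the inversion when the DBI bit is set."""
    return (~tx) & 0xFF if dbi else tx & 0xFF
```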

Parity is calculated for every 32 bits of data and sent to the memory. Even parity is computed by XORing all the bits of each 32-bit slice.
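Even parity over each 32-bit slice is the XOR of the slice's bits, which is the same as the low bit of its count of ones. In the Python sketch below (hypothetical function name), a 256-bit word produces 8 parity bits. This matches the 8 read-parity bits that the PHY returns.

```python
# Even parity per 32-bit slice: the parity bit is the XOR of the slice's bits,
# so data plus parity always contains an even number of ones.
def even_parity_per_dword(word: int, width_bits: int = 256) -> int:
    """Return one parity bit per 32-bit slice; bit k covers word[32k+31:32k]."""
    parity = 0
    for k in range(width_bits // 32):
        dword = (word >> (32 * k)) & 0xFFFFFFFF
        parity |= (bin(dword).count("1") & 1) << k
    return parity
```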

| Data Mask Bit (FIFO Bit) | Write Data Bits |
| --- | --- |
| [31] => [287] | [255:248] |
| [30] => [286] | [247:240] |
| [29] => [285] | [239:232] |
| ... | ... |
| [2] => [258] | [23:16] |
| [1] => [257] | [15:8] |
| [0] => [256] | [7:0] |

Table 4: Data Masking to Write Data Relation
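In Table 4, byte k of the write data is controlled by FIFO bit 256 + k. The Python sketch below assumes that a set mask bit means the byte is not written. It models the effect by merging the new data into the previous memory contents. The function names are hypothetical.

```python
# Applying the data-mask bits of a 288-bit write-FIFO entry (Table 4):
# FIFO bit 256+k controls write-data byte k, i.e. bits [8k+7:8k].
# ASSUMPTION: a set mask bit means "do not write this byte".
from typing import Tuple

def split_fifo_entry(entry288: int) -> Tuple[int, int]:
    """Return (dm[31:0], wdata[255:0]) from one write-FIFO entry."""
    return (entry288 >> 256) & 0xFFFFFFFF, entry288 & ((1 << 256) - 1)

def apply_mask(old: int, new: int, dm: int) -> int:
    """Merge `new` into `old`, skipping every byte whose mask bit is set."""
    result = old
    for k in range(32):
        if not (dm >> k) & 1:                    # mask bit clear: byte is written
            byte_mask = 0xFF << (8 * k)
            result = (result & ~byte_mask) | (new & byte_mask)
    return result
```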

Read Data Path

The PHY must send the read data from the memory to the memory controller at SDR. It returns:

- 256 bits of SDR read data
- 2 bits of read data valid
- 8 bits of read data parity
- 32 bits of DBI
- 32 bits of ECC

The read data path stores 258 bits in the read data FIFO: 256 bits of read data and 2 error-flag bits. In legacy mode, the 2 error flags indicate whether the whole data word is invalid. In pseudo-channel mode, they indicate whether the read data for pseudo-channel 0 (error flag[0]) or pseudo-channel 1 (error flag[1]) is invalid. Before sending read data to the user, the memory controller performs parity checking, data bus inversion, ECC checking and read data re-ordering (for legacy mode with burst length 4).

Read data is presented to the user as shown in Table 5.

| Channel Mode | Read Data Bit Position | Read Data Error Bit Position |
| --- | --- | --- |
| Legacy Mode | [255:0] | [257:256] |
| Pseudo Mode: Pseudo-Channel 0 | [63:0] and [191:128] | [256] |
| Pseudo Mode: Pseudo-Channel 1 | [127:64] and [255:192] | [257] |

Table 5: Read Data to User
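Each row of Table 5 amounts to two bit-slice operations per pseudo-channel. In the Python sketch below (hypothetical function name), the two 64-bit halves of a pseudo-channel are concatenated with the upper slice in the high bits. That ordering is an assumption.

```python
# Extracting per-pseudo-channel data and error flags from a 258-bit read-FIFO
# entry per Table 5 (PC0: [63:0], [191:128]; PC1: [127:64], [255:192]).
from typing import Tuple

M64 = (1 << 64) - 1

def pseudo_channel_data(entry258: int, pc: int) -> Tuple[int, bool]:
    """Return (128-bit data for the pseudo-channel, error flag)."""
    if pc not in (0, 1):
        raise ValueError("pseudo-channel must be 0 or 1")
    lo = (entry258 >> (64 * pc)) & M64            # [63:0] or [127:64]
    hi = (entry258 >> (128 + 64 * pc)) & M64      # [191:128] or [255:192]
    err = bool((entry258 >> (256 + pc)) & 1)      # flag [256] or [257]
    return (hi << 64) | lo, err                   # assumed ordering: upper slice high
```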

REFRESH MODE

A DRAM cell stores each bit as charge on a capacitor. This charge leaks away over time, so the stored data would eventually be lost. To prevent this, the capacitors must be recharged at regular intervals, which is called refreshing the DRAM.

A refresh to all banks in a channel is issued with the REF command. A REF is issued only after the requests already sent to the memory have completed and all banks are closed. Back-to-back refreshes are separated by at least tRFC.

The specification provides two options:

- Pull in refresh commands in advance (up to 8), then issue the next REF up to 9 × tREFI later.
- Postpone refresh commands (up to 8), then issue 9 back-to-back refresh commands separated by tRFC.

The memory controller uses the second option. It postpones up to 8 refresh commands and then asserts a flag indicating that the memory must be put into refresh mode.
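The postpone-then-burst policy can be modelled with a single counter of owed refreshes. The Python sketch below uses the Table 10 defaults (tREFI = 3900, tRFC = 350 cycles). The class and method names are hypothetical. The other refresh conditions (requests complete, banks closed) are not modelled.

```python
# Refresh bookkeeping with postponement: each tREFI interval adds one owed REF.
# After 8 postponements the controller must stop traffic and issue all 9 owed
# REFs back-to-back, tRFC apart. Simplified sketch; names are hypothetical.
from typing import List

T_REFI, T_RFC, MAX_POSTPONED = 3900, 350, 8

class RefreshTracker:
    def __init__(self) -> None:
        self.owed = 0                       # REF commands currently owed

    def interval_elapsed(self) -> bool:
        """Call once per tREFI. Returns True when refresh can no longer wait."""
        self.owed += 1
        return self.owed > MAX_POSTPONED    # 8 postponed + the one now due

    def burst(self, start: int) -> List[int]:
        """Issue cycles for all owed REFs, back-to-back with tRFC spacing."""
        times = [start + i * T_RFC for i in range(self.owed)]
        self.owed = 0
        return times
```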

Figure 4: Advanced Refresh Commands*

*NOTE: The figure above is taken from the JEDEC JESD235A standard for HBM.

POWER-DOWN MODE

The HBM memory runs at high frequency with a wide I/O interface. When no normal transactions are in progress, the memory can be put into power-down mode. The user enters and exits power-down mode by writing the CSR register. The CKE (clock enable) signal is de-asserted on entry into this mode and asserted on exit. The HBM memory's I/O drivers are turned off in this mode. Power can be saved further by gating the high-frequency clock, and the clock frequency can even be changed while in this mode.

SELF-REFRESH MODE

The self-refresh FSM is similar to the power-down FSM. The only difference is that the memory is put into the self-refresh state, in which it refreshes itself at least once. The CKE (clock enable) signal is de-asserted on entry into this mode and asserted on exit. The I/O drivers are switched off to save power. In addition, the clocks can be gated or the memory's operating frequency changed during self-refresh mode. The refresh timing counters hold their current value and resume incrementing only after the memory exits this mode.

MRS MODE

The user can write the MRS register address space specified in Table 6. The user then asserts the start_issue_mrs bit (CSR register 16 OP[1]). The memory controller puts the memory into MRS mode once the currently issued request has completed and all banks are closed. It determines which MRS registers have changed since the previous configuration and writes only those registers to the memory. Skipping the unchanged registers saves dead cycles. Each MRS register in the memory controller is updated tMOD after its MRS command is issued, which keeps the controller in sync with the memory's configuration.

| Register Addr | Field | Size | Access | Default | Description |
| --- | --- | --- | --- | --- | --- |
| 0 | OP[7] = Test Mode | 1b | W/R | 1'b0 | Test mode not supported by MC |
|  | OP[6] = ADD/CMD Parity | 1b | W/R | 1'b0 | Enable/disable address/command parity: 0 = Disable; 1 = Enable |
|  | OP[5] = DQ Write Parity | 1b | W/R | 1'b0 | Enable/disable write data parity: 0 = Disable; 1 = Enable |
|  | OP[4] = DQ Read Parity | 1b | W/R | 1'b0 | Enable/disable read data parity: 0 = Disable; 1 = Enable |
|  | OP[3] = Reserved | 1b | R | 1'b0 | NA |
|  | OP[2] = TCSR | 1b | W/R | 1'b0 |  |
|  | OP[1] = DBIac Write | 1b | W/R | 1'b0 | Enable/disable write data inversion: 0 = Disable; 1 = Enable |
|  | OP[0] = DBIac Read | 1b | W/R | 1'b0 | Enable/disable read data inversion: 0 = Disable; 1 = Enable |
| 1 | OP[7:5] = Drive Strength | 3b | W/R | 3'b000 |  |
|  | OP[4:0] = Write Recovery (WR) | 5b | W/R | 5'b00011 | Write recovery time (nCK) |
| 2 | OP[7:3] = Read Latency (RL) | 5b | W/R | 5'b00100 | Read latency (nCK) |
|  | OP[2:0] = Write Latency (WL) | 3b | W/R | 3'b011 | Write latency (nCK) |
| 3 | OP[7] = Burst Length (BL) | 1b | W/R | 1'b0 | Burst length: 0 = 2; 1 = 4 |
|  | OP[6] = Bank Group | 1b | W/R | 1'b1 | Bank group: 0 = Disable; 1 = Enable |
|  | OP[5:0] = Active to Precharge (RAS) | 6b | W/R | 6'b001000 | tRAS: minimum time a row must stay open |
| 4 | OP[7:4] = Reserved | 4b | R | 4'b0000 | NA |
|  | OP[3:2] = Parity Latency | 2b | W/R | 2'b00 | Parity latency (nCK) |
|  | OP[1] = Write DM | 1b | W/R | 1'b0 | Enable/disable write data masking: 0 = Disable; 1 = Enable |
|  | OP[0] = ECC | 1b | W/R | 1'b0 | Enable/disable write ECC: 0 = Disable; 1 = Enable |
| 5 | OP[7] = TRR | 1b | W/R | 1'b0 | Feature not present in MC |
|  | OP[6] = TRR-PS Select | 1b | W/R | 1'b0 | Feature not present in MC |
|  | OP[5:4] = Reserved | 2b | R | 2'b00 | NA |
|  | OP[3:0] = TRR Mode BAn | 4b | W/R | 4'b0000 | Feature not present in MC |
| 6 | OP[7:3] = ImPre tRP Value | 5b | W/R | 5'b01110 | Pseudo-mode precharge time (nCK) |
|  | OP[2:0] = Reserved | 3b | W/R | 3'b000 | NA |
| 7 | OP[7] = CATTRIP | 1b | W/R | 1'b0 | Feature not present in MC |
|  | OP[6] = Reserved | 1b | R | 1'b0 | NA |
|  | OP[5:3] = MISR Control | 3b | W/R | 3'b000 |  |
|  | OP[2:1] = Read Mux Control | 2b | W/R | 2'b01 |  |
|  | OP[0] = Loopback | 1b | W/R | 1'b0 |  |
| 8 | OP[7:1] = Reserved | 7b | R | 7'd0 | NA |
|  | OP[0] = DA[28] Lockout | 1b | W/R | 1'b0 | Feature not present in MC |
| 9 | OP[7:0] = Reserved | 8b | R | 8'd0 | NA |
| 10 | OP[7:0] = Reserved | 8b | R | 8'd0 | NA |
| 11 | OP[7:0] = Reserved | 8b | R | 8'd0 | NA |
| 12 | OP[7:0] = Reserved | 8b | R | 8'd0 | NA |
| 13 | OP[7:0] = Reserved | 8b | R | 8'd0 | NA |
| 14 | OP[7:0] = Reserved | 8b | R | 8'd0 | NA |
| 15 | OP[7:3] = Reserved | 5b | R | 5'b0 | NA |
|  | OP[2:0] = Internal Vref | 3b | W/R | 3'b000 |  |

Table 6: MRS Register Configurations
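The MRS FSM writes only the registers that changed, which amounts to a diff between the controller's shadow copy and the new values. In the Python sketch below, the function name is hypothetical. Spacing consecutive MRS commands by tMRD (Table 10 default: 15 cycles) is an assumption about how the commands are paced.

```python
# Issue MRS commands only for mode registers whose value changed.
# Hypothetical sketch; pacing consecutive MRS commands by tMRD is an assumption.
from typing import Dict, List, Tuple

T_MRD = 15   # Table 10 default, in clock cycles

def mrs_updates(shadow: Dict[int, int], pending: Dict[int, int],
                start: int = 0) -> List[Tuple[int, int, int]]:
    """Return (issue_cycle, mr_addr, value) for each register that changed."""
    changed = [(a, v) for a, v in sorted(pending.items()) if shadow.get(a) != v]
    return [(start + i * T_MRD, a, v) for i, (a, v) in enumerate(changed)]
```

For example, if only MR0 (enabling DBIac) and MR4 (enabling ECC) differ from the shadow copy, only two MRS commands are issued.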

AL-HBMMC CSR SETTINGS

The AL-HBMMC can be configured with various parameters and targeted at various channel densities and channel modes. This section describes the compiler directives that set the memory controller's channel mode and density. It also describes the MRS and CSR register definitions used to configure the memory controller and the HBM memory. A separate register interface is used to configure the CSR and MRS registers. The user can write any of these registers during normal operation or immediately after reset is de-asserted.

The AL-HBMMC supports legacy mode and pseudo mode with channel densities from 1Gb to 8Gb, but not both channel modes at the same time. The memory controller must be configured to run in either legacy mode or pseudo mode using the compiler directives described in Table 7.

| Type | Compiler Directive | Description |
| --- | --- | --- |
| Channel Mode | `LEGACY_MODE | Sets the memory controller in legacy mode |
| Channel Mode | `PSEUDO_MODE | Sets the memory controller in pseudo-mode |
| Channel Mode | `PSEUDO_MODE_HIGH | Sets the memory controller in pseudo-mode-High |
| Channel Density | `DENSITY_GB_1 | Sets density to 1Gb |
| Channel Density | `DENSITY_GB_2 | Sets density to 2Gb |
| Channel Density | `DENSITY_GB_4 | Sets density to 4Gb |
| Channel Density | `DENSITY_GB_8 | Sets density to 8Gb |
| Channel Density | `DENSITY_GB_8_HIGH | Sets density to 8Gb-High |

Table 7: Compiler Directives for Channel Mode and Channel Density

The channel mode and channel density tell the memory controller what type of HBM memory it is communicating with. Some blocks of the memory controller are present only in legacy mode or only in pseudo mode. The channel density also tells the memory controller how many banks the memory has, so that it can generate physical addresses and commands accordingly.

The user starts the memory controller's internal initialization FSM by asserting the Start Initialization bit, OP[0] of register 16. The user must then poll the Initialization Done bit, OP[7]. This bit indicates that the PHY has calibrated the memory and that the MRS settings in the memory controller match those in the memory. The initialization and calibration register details are shown in Table 8.

| Register Addr | Field | Size | Access | Default | Description |
| --- | --- | --- | --- | --- | --- |
| 16 | OP[7] = Initialization Done | 1b | R | 1'b0 | Indicates the initialization FSM has completed and the memory is updated with the MC's MRS settings |
|  | OP[6] = Calibration Done | 1b | R | 1'b0 | PHY calibration completed |
|  | OP[5:3] = Reserved | 3b | R | 3'b0 | NA |
|  | OP[2] = HBM MRS Configuration Done | 1b | R | 1'b0 | MRS settings updated in memory (during normal operation) |
|  | OP[1] = Issue Memory MRS | 1b | W/R | 1'b0 | Issue MRS to memory for updated MRS/CSR registers (during normal operation) |
|  | OP[0] = Start Initialization | 1b | W/R | 1'b0 | Start the initialization FSM (only once after reset is de-asserted) |

Table 8: Initialization and Calibration Register Configurations

The user can also write new MRS settings during normal operation and start the MRS FSM by asserting OP[1]. The user must then poll OP[2], which indicates that both the memory and the memory controller have been updated with the new settings. New MRS settings written to the memory controller do not change its behaviour until the memory has also been updated. For example, if the user enables data bus inversion, the memory controller does not start inverting data until the memory has been configured for data bus inversion through MRS commands.
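The two register-16 handshakes can be written as short polling routines. The Python sketch below uses the bit positions from Table 8. It relies on a hypothetical register-access object with `read(addr)` and `write(addr, value)` methods, because the register-interface bus protocol is not described here.

```python
# Register-16 handshakes from Table 8. `regs` is a HYPOTHETICAL object providing
# read(addr) -> int and write(addr, value); the real bus protocol is not shown.
REG_INIT = 16
START_INIT, ISSUE_MRS = 1 << 0, 1 << 1                    # W/R control bits
MRS_DONE, CAL_DONE, INIT_DONE = 1 << 2, 1 << 6, 1 << 7    # read-only status bits

def wait_for(regs, addr: int, mask: int, max_polls: int = 10_000) -> None:
    """Poll until any bit in `mask` is set, or give up after max_polls reads."""
    for _ in range(max_polls):
        if regs.read(addr) & mask:
            return
    raise TimeoutError(f"mask {mask:#x} in register {addr} never set")

def initialize(regs) -> None:
    """Run once after reset, after the MRS and timing registers are programmed."""
    regs.write(REG_INIT, regs.read(REG_INIT) | START_INIT)
    wait_for(regs, REG_INIT, INIT_DONE)   # calibration done and MRS settings applied

def apply_new_mrs(regs) -> None:
    """During normal operation: push updated MRS values to the memory."""
    regs.write(REG_INIT, regs.read(REG_INIT) | ISSUE_MRS)
    wait_for(regs, REG_INIT, MRS_DONE)
```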

MRS REGISTERS

The MRS registers use the same address locations and register definitions as the HBM specification. The user can therefore configure them without consulting a separate memory map for the memory controller. The MRS registers are mapped to addresses 0 to 15 (Table 6). Registers 9 to 14 are read-only; they are kept only to mirror the address map given in the HBM specification.

The user can update the registers after reset is de-asserted and before the memory controller is put into initialization mode. The user can also update MRS registers during normal read/write operation. New values are stored in temporary registers. They take effect in the memory controller once the corresponding MRS commands have been issued to the memory and tMOD has elapsed.

POWER-DOWN / SELF REFRESH ENTRY/EXIT CONTROL CSR REGISTERS

The memory is put into power-down or self-refresh mode using OP[0] and OP[1] respectively (Table 9). The user has full control over entering and exiting the PDE/SRE modes.

The refresh FSM outputs, Refresh to be issued (OP[6]) and Refresh State (OP[7]), are read-only bits. If the user has put the memory into power-down mode and a refresh becomes due, OP[6] tells the user to bring the memory out of power-down mode. The user can poll OP[7] to check whether the memory is still in the refresh state (logic 1) or has left it (logic 0).

The user can also poll the state of the memory after requesting PDE/SRE entry or exit. OP[2] indicates that the memory is in PDE mode, and OP[3] that it is in SRE mode. The user exits PDE/SRE mode by asserting OP[4], and OP[5] is set high once the memory is out of PDE/SRE mode.

| Register Addr | Field | Size | Access | Default | Description |
| --- | --- | --- | --- | --- | --- |
| 17 | OP[7] = Refresh State | 1b | R | 0 | Indicates memory is in refresh mode |
|  | OP[6] = Refresh to be issued | 1b | R | 0 | Indicates memory needs to be put in refresh mode (during PDE) |
|  | OP[5] = PDE/SRE Done | 1b | R | 0 | Indicates memory has come out of PDE/SRE mode |
|  | OP[4] = PDE/SRE Exit | 1b | W/R | 0 | Exit memory from PDE/SRE mode |
|  | OP[3] = SRE State | 1b | R | 0 | Indicates memory is in SRE mode |
|  | OP[2] = PDE State | 1b | R | 0 | Indicates memory is in PDE mode |
|  | OP[1] = Issue SRE | 1b | W/R | 0 | Issue SRE to memory |
|  | OP[0] = Issue PDE | 1b | W/R | 0 | Issue PDE to memory |

Table 9: PDE/SRE/Refresh Register Configurations
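Power-down entry and exit also reduce to writing one control bit in register 17 and polling a status bit. The Python sketch below uses the Table 9 bit positions with a hypothetical register object providing `read` and `write`. It writes one control bit at a time, because the text does not say whether the control bits self-clear.

```python
# Power-down entry/exit through register 17 (Table 9). `regs` is a HYPOTHETICAL
# object providing read(addr) and write(addr, value).
REG_PWR = 17
ISSUE_PDE, ISSUE_SRE, PDE_STATE, SRE_STATE = 1 << 0, 1 << 1, 1 << 2, 1 << 3
PDE_SRE_EXIT, PDE_SRE_DONE, REF_PENDING, REF_STATE = 1 << 4, 1 << 5, 1 << 6, 1 << 7

def poll(regs, mask: int, want_set: bool, max_polls: int = 10_000) -> None:
    """Poll register 17 until the bits in `mask` reach the wanted state."""
    for _ in range(max_polls):
        if bool(regs.read(REG_PWR) & mask) == want_set:
            return
    raise TimeoutError(f"register {REG_PWR} mask {mask:#x} did not reach {want_set}")

def enter_power_down(regs) -> None:
    regs.write(REG_PWR, ISSUE_PDE)
    poll(regs, PDE_STATE, True)

def exit_low_power(regs) -> None:
    regs.write(REG_PWR, PDE_SRE_EXIT)
    poll(regs, PDE_SRE_DONE, True)

def service_power_down(regs) -> bool:
    """If a refresh is due while in power-down, exit so the controller can refresh.

    Returns True when an exit was performed; the caller may re-enter later.
    """
    if regs.read(REG_PWR) & REF_PENDING:
        exit_low_power(regs)
        return True
    return False
```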

CSR REGISTERS FOR TIMING PARAMETERS

The memory controller's timing parameters are configured through a set of timing-parameter registers implemented within the IP (Table 10). The default values follow the Samsung specification for HBM memory with a density of 8Gb. The memory controller uses these timing parameters for all operations. The user should configure them only once, before issuing ‘start initialization’ in register 16.

NOTE: These parameters can be changed during clock gating or clock-frequency change modes; however, the current version does not support these modes.

| Register Addr | Field | Size | Access | Default | Description (see the JEDEC specification for HBM) |
| --- | --- | --- | --- | --- | --- |
| 21 | OP[7:0] = tRC | 8b | W/R | 47 |  |
| 22 | OP[7:0] = tRAS_ACT_to_PRE | 8b | W/R | 33 | ACT to precharge delay |
| 23 | OP[7:4] = tRCDRD | 4b | W/R | 14 |  |
|  | OP[3:0] = tRCDWR | 4b | W/R | 10 |  |
| 24 | OP[7:4] = tRRDL | 4b | W/R | 6 |  |
|  | OP[3:0] = tRRDS | 4b | W/R | 4 |  |
| 25 | OP[7:6] = Reserved | 2b | R | 0 |  |
|  | OP[5:0] = tFAW | 6b | W/R | 16 |  |
| 26 | OP[7:6] = Reserved | 2b | R | 0 |  |
|  | OP[5:0] = tRP | 6b | W/R | 14 | Only for legacy mode |
| 27 | OP[7:4] = tXP | 4b | W/R | 8 |  |
|  | OP[3:0] = tCKE | 4b | W/R | 6 | Also tPD_min |
| 28 | OP[7:4] = tCKSRX | 4b | W/R | 10 | Not used in design currently |
|  | OP[3:0] = tCKSRE | 4b | W/R | 10 |  |
| 29 | OP[7:0] = tRFC[7:0] | 8b | W/R | 94 | LSB 8b of 9b tRFC |
| 30 | OP[7:1] = Reserved | 7b | R | 0 |  |
|  | OP[0] = tRFC[8] | 1b | W/R | 1 | MSB of 9b tRFC. Density 1Gb, 2Gb: 160; 4Gb: 260; 8Gb: 350 |
| 31 | OP[7:0] = tREFI[7:0] | 8b | W/R | 60 | LSB 8b of 12b tREFI |
| 32 | OP[7:4] = Reserved | 4b | R | 0 |  |
|  | OP[3:0] = tREFI[11:8] | 4b | W/R | 15 | MSB 4b of 12b tREFI. Density 1Gb, 2Gb, 4Gb, 8Gb: 3900 |
| 33 | OP[7:4] = tMRD | 4b | W/R | 15 |  |
|  | OP[3:0] = tMOD | 4b | W/R | 15 |  |
| 34 | OP[7:0] = tWR | 8b | W/R | 15 |  |
| 35 | OP[7:4] = tWTRL | 4b | W/R | 8 |  |
|  | OP[3:0] = tWTRS | 4b | W/R | 3 |  |
| 36 | OP[7:0] = tRTW | 8b | W/R | 9 |  |
| 37 | OP[7:4] = tRTPL | 4b | W/R | 5 |  |
|  | OP[3:0] = tRTPS | 4b | W/R | 4 |  |
| 38 | OP[7:4] = max_tRRDL | 4b | W/R | 4 | Only for PS mode |
|  | OP[3:0] = max_tRRDS | 4b | W/R | 4 | Only for PS mode |
| 39 | OP[7:0] = tRDPDE (or tRDSRE) | 8b | R | read latency + parity latency + burst length/2 + 1 |  |
| 40 | OP[7:0] = tWRPDE (or tWRSRE) | 8b | R | write latency + parity latency + burst length/2 + 1 + tWR |  |

Table 10: Timing Parameter Register Configurations
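A few lines of Python can check the split fields and derived values in Table 10. The helper names are hypothetical. The formulas for registers 39 and 40 are taken directly from the table.

```python
# Helpers for the multi-register timing fields in Table 10 and the derived
# read-only values of registers 39 and 40. Function names are hypothetical.
from typing import Tuple

def split_trfc(trfc: int) -> Tuple[int, int]:
    """9-bit tRFC -> (register 29 = tRFC[7:0], register 30 OP[0] = tRFC[8])."""
    if not 0 <= trfc < 1 << 9:
        raise ValueError("tRFC must fit in 9 bits")
    return trfc & 0xFF, (trfc >> 8) & 0x1

def split_trefi(trefi: int) -> Tuple[int, int]:
    """12-bit tREFI -> (register 31 = tREFI[7:0], register 32 OP[3:0] = tREFI[11:8])."""
    if not 0 <= trefi < 1 << 12:
        raise ValueError("tREFI must fit in 12 bits")
    return trefi & 0xFF, (trefi >> 8) & 0xF

def t_rdpde(rl: int, pl: int, bl: int) -> int:
    """Register 39: read latency + parity latency + burst length/2 + 1."""
    return rl + pl + bl // 2 + 1

def t_wrpde(wl: int, pl: int, bl: int, twr: int) -> int:
    """Register 40: write latency + parity latency + burst length/2 + 1 + tWR."""
    return wl + pl + bl // 2 + 1 + twr
```

The Table 10 defaults decode as follows: 94 and 1 give tRFC = 350, and 60 and 15 give tREFI = 3900. These are the 8Gb values listed in the table.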

HBM PROTOCOL VERIFICATION IP

Verifying a design or product that uses High Bandwidth Memory as its memory component requires a bus functional model that follows the HBM protocol. The Atria Logic HBM Verification IP (AL-HBM) includes such a bus functional model, so the user can start the verification process immediately.

The Atria Logic HBM Verification IP (AL-HBM) is a pre-verified, configurable and reusable SystemVerilog (SV) package. It is imported inside a SystemVerilog module and leverages the native strengths of SystemVerilog. This speeds up test bench development and lets users focus on testing their modules and designs.

Integrating the Atria Logic HBM VIP into an existing test bench is simple: just instantiate the VIP (module) in the test bench. The built-in coverage helps the user write test cases that cover all possible input scenarios.

HBM VIP ARCHITECTURE

Figure 5: HBM Memory Channel Architecture

The Atria Logic High Bandwidth Memory (AL-HBM) verification IP is a reusable, configurable verification component developed in SystemVerilog. It offers an easy-to-use verification solution for SoCs that use the HBM Memory Controller.

The HBM VIP can be configured with up to 8 memory channels, each with its own independent interface. Figure 5 shows the architecture of a single memory channel.

The VIP's memory channel monitors the HBM interface and responds to read/write requests from the memory controller. The channel is modelled to comply with JEDEC's JESD235A specification and supports all non-IEEE 1500 port operations detailed in the specification.

FEATURES

The HBM (High Bandwidth Memory) model is developed in SystemVerilog, is compatible with the host (controller) and is ready to be plugged in. Once it is plugged in, only the initial configuration is needed before the model is ready to use.

Compliant with the JEDEC JESD235A specification:

The VIP fully supports all non-IEEE 1500 port operations defined in the specification. These include:

- pseudo-channel and legacy modes
- various memory size configurations
- low power modes
- valid mode register configurations
- on-the-fly clock frequency change during low power modes

Protocol and mode register configuration checks:

The VIP implements timing checks for all the command-to-command timing parameters. It also implements checks for valid mode register configurations and for other protocols (initialization sequence, low power modes, etc.) to be followed during the course of operation.

Functional coverage:

Functional coverage has been provided for all of the command-to-command scenarios that can occur during the course of operation.

Logs:

For each channel of the HBM, the model provides a set of log files that record:

- the commands issued
- the transaction details of reads and writes, including strobes and the mode register configuration at the time of each command
- all details of the initialization sequence(s)
- the complete activity during low power modes

The HBM VIP therefore enables effective verification of the design under test for the functionality defined in the JEDEC JESD235A specification. It integrates seamlessly with SystemVerilog or OVM/UVM verification environments. The model strictly conforms to the protocol in the specification, keeps a log of all transactions, and reports any protocol violations.

CONCLUSION

The HBM Memory Controller IP is an efficient, highly configurable single-channel memory controller. Its ‘2-command compare and issue’ algorithm reduces the number of dead cycles and increases data transfer with the HBM memory to achieve high bandwidth. The logic flows for address, write data, read data and register interface are kept separate, which makes the controller area-efficient for ASIC implementation.
