Soft Tiling RISC-V Processor Clusters Speed Design and Reduce Risk

By John Min, Arteris

The RISC-V Instruction Set Architecture (ISA), known for its power, flexibility, low adoption cost and open, royalty-free specification, is experiencing rapid growth across many market segments. This versatile ISA supports applications in sectors such as automotive, aerospace, defense, networking, telecommunications, datacenters, cloud computing, industrial automation, artificial intelligence (AI), machine learning (ML), embedded systems, IoT devices and consumer electronics.

In system-on-chip (SoC) designs, RISC-V’s scalability becomes evident. Low-end applications might require only a single core, mid-range applications may employ a cluster of RISC-V processor cores, and high-end applications may demand an array of RISC-V clusters. However, manually configuring processor clusters is labor-intensive and error-prone, and it limits scalability, creating bottlenecks in design flexibility and speed. A new technique called network-on-chip (NoC) soft tiling addresses these issues.

The Evolution of RISC-V Configurations

RISC-V’s adaptability has enabled its adoption across a wide range of performance levels, from single-core implementations to multi-cluster configurations supporting thousands of cores. The NoC plays a critical role in facilitating communication across these configurations, as explained below:

- Single-Core Implementations: For many applications, a single RISC-V processor core is sufficient. These foundational systems are commonly found in embedded or resource-constrained environments, where minimal area and power requirements are priorities. Despite their simplicity, single-core RISC-V SoCs benefit from NoC technology, which connects the core to memory and peripheral blocks and manages data transfers efficiently.

- Multi-Core Clusters: As requirements increase, RISC-V designs often scale to clusters of 2 to 8 cores, delivering greater computational power and enabling parallelism. In these applications, efficient communication between cores is crucial to maintain performance. NoC interconnects offer a unified fabric that links cores within a cluster and connects them to memory and peripherals, ensuring low-latency, high-bandwidth data transfer. These configurations require flexible routing and efficient resource management, which NoCs deliver through optimized communication pathways that minimize power consumption and latency.

- High-Performance Multi-Cluster SoCs: In demanding applications like AI and high-performance computing (HPC), RISC-V SoCs may include numerous clusters, totaling tens, hundreds or even thousands of cores. Managing data traffic across such large configurations demands advanced NoC architectures that support coherence and minimize data-movement bottlenecks. Sophisticated NoCs integrate scalable coherency protocols that maintain cache coherence across clusters, synchronizing data access across hundreds or thousands of cores.
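To make cross-cluster coherence more concrete, here is a toy Python model of a textbook directory-based scheme: the interconnect records which clusters hold each cache line, so a write only needs to invalidate the clusters that actually share it. This is a simplified, generic illustration (no transient states, no data transfer), not the protocol implemented by any particular coherent NoC; `LineState`, `Directory` and their methods are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LineState:
    """Directory entry for one cache line (simplified: no transient states)."""
    sharers: set[int] = field(default_factory=set)  # clusters with clean copies
    owner: Optional[int] = None                      # cluster with a modified copy


class Directory:
    """Toy directory: records which clusters cache each line so the interconnect
    messages only the clusters involved instead of broadcasting to all of them."""

    def __init__(self) -> None:
        self.lines: dict[int, LineState] = {}

    def read(self, cluster: int, addr: int) -> list[int]:
        """Cluster reads addr; returns clusters that must supply/write back dirty data."""
        line = self.lines.setdefault(addr, LineState())
        if line.owner == cluster:
            return []  # requester already holds the only, modified copy
        writebacks = []
        if line.owner is not None:
            writebacks.append(line.owner)
            line.sharers.add(line.owner)  # previous owner keeps a clean copy
            line.owner = None
        line.sharers.add(cluster)
        return writebacks

    def write(self, cluster: int, addr: int) -> list[int]:
        """Cluster writes addr; returns clusters whose copies must be invalidated."""
        line = self.lines.setdefault(addr, LineState())
        targets = set(line.sharers)
        if line.owner is not None:
            targets.add(line.owner)
        targets.discard(cluster)
        line.sharers = set()
        line.owner = cluster
        return sorted(targets)


d = Directory()
d.read(0, 0x40)
d.read(1, 0x40)
print(d.write(2, 0x40))  # clusters 0 and 1 hold copies and are invalidated
print(d.read(0, 0x40))   # cluster 2 holds the modified copy and must supply it
```

Tracking sharers this way is what lets coherent traffic scale: an invalidation goes only to the listed clusters rather than being broadcast to every cluster in the array.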

High-End SoC Architecture with RISC-V

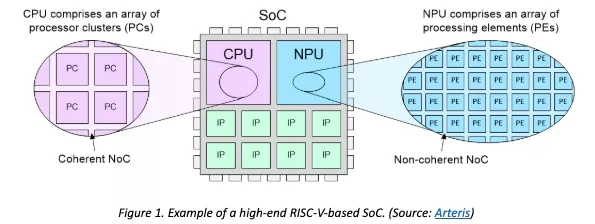

A modern high-end RISC-V-based SoC might feature an architecture where the Central Processing Unit (CPU) comprises an array of RISC-V processor clusters (PCs), each hosting multiple cores (see Figure 1). Likewise, the Neural Processing Unit (NPU), serving as a hardware accelerator tailored for AI and ML tasks, may contain an array of processing elements (PEs), providing specialized processing for complex workloads.

Today’s SoCs can contain billions of transistors, incorporating hundreds of functional units known as intellectual property (IP) blocks. While some IPs are sourced from trusted third-party vendors, design teams often develop custom IP blocks internally to create a competitive edge for their SoCs.

NoC technology has emerged as the standard interconnect solution for these IPs within SoCs. Although SoC teams can design a NoC in-house, doing so is resource-intensive and requires extensive debugging and testing with other SoC components, which diverts time from the teams’ core competencies. Consequently, many teams choose to acquire NoC IP from vendors specializing in this technology.

Depending on the design approach, SoC teams may develop custom RISC-V processor cores and clusters or acquire them as IP. Inside each cluster, RISC-V cores commonly communicate over a proprietary bus defined by the cluster’s developer; the RISC-V ISA itself does not specify an interconnect protocol. However, some clusters instead use an internal NoC, whether developed in-house or externally sourced.

To connect a RISC-V cluster to the rest of the SoC or to the array of clusters, each cluster exposes an external interface. Traditionally, this interface used Arm’s AXI Coherency Extensions (ACE) protocol. More recently, Arm’s Coherent Hub Interface (CHI) specification has gained popularity because it provides a coherent interface for connecting processor clusters to a coherent NoC that implements cache coherence protocols.

By contrast, the PEs forming the NPU array are typically connected by a non-coherent NoC, which they attach to through an interface such as Arm’s Advanced eXtensible Interface (AXI). Each PE may itself contain multiple IPs connected by an internal NoC.

Generating Arrays by Hand

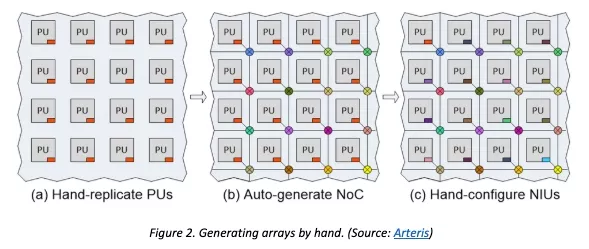

The process of creating arrays of PCs and PEs is essentially the same. The only real difference is whether to use a coherent NoC for PCs or a non-coherent NoC for PEs. The term processing units (PUs) encompasses both PCs and PEs.

Arrays have traditionally been generated by hand. The first step is to create or acquire a single PU, which is then replicated into an array of the required size (Figure 2a). The second step is to use the NoC tools to auto-generate the NoC (Figure 2b).

In Figure 2, the small red rectangles in the corner of each PU represent the Network Interface Units (NIUs), which allow IP blocks to communicate with the NoC. Each NIU requires a unique address so that the NoC can route packets from source IPs to their destinations. Traditionally, these addresses have been assigned to the NIUs by hand.
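A minimal Python sketch of how unique NIU addresses let a NoC steer packets. It assumes, for illustration only, a 2D mesh in which each NIU’s address encodes its PU’s row and column, plus dimension-order (XY) routing, a common mesh routing scheme. The article does not state the topology, address format or routing algorithm used by Arteris’ NoCs; `niu_address`, `niu_position`, `xy_route` and the 0x100 base are hypothetical.

```python
def niu_address(row: int, col: int, cols: int, base: int = 0x100) -> int:
    """Hypothetical scheme: the address is the PU's row-major index plus a base."""
    return base + row * cols + col


def niu_position(addr: int, cols: int, base: int = 0x100) -> tuple[int, int]:
    """Invert niu_address: recover (row, col) from an NIU address."""
    return divmod(addr - base, cols)


def xy_route(src: tuple[int, int], dst: tuple[int, int]) -> list[tuple[int, int]]:
    """Dimension-order (XY) routing: travel along the row to the destination
    column first, then along the column to the destination row.
    Returns every (row, col) router visited, including both endpoints."""
    row, col = src
    path = [(row, col)]
    while col != dst[1]:
        col += 1 if dst[1] > col else -1
        path.append((row, col))
    while row != dst[0]:
        row += 1 if dst[0] > row else -1
        path.append((row, col))
    return path


# Route a packet from the NIU at (0, 0) to the NIU at (2, 3) in a 4-column array.
cols = 4
src_addr = niu_address(0, 0, cols)
dst_addr = niu_address(2, 3, cols)
print(hex(dst_addr), xy_route(niu_position(src_addr, cols), niu_position(dst_addr, cols)))
# prints: 0x10b [(0, 0), (0, 1), (0, 2), (0, 3), (1, 3), (2, 3)]
```

If two NIUs shared an address, `niu_position` could not map a destination back to a single tile, which is why every NIU in the array needs its own address.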

The tasks of replicating the PUs and configuring the NIUs manually are time-consuming, frustrating and susceptible to mistakes. Additionally, a PU may undergo many changes early in the development cycle, with each change necessitating the re-replication and re-configuration of the PUs and NIUs.
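The kind of mistake manual configuration invites is easy to illustrate. This Python sketch checks a hand-maintained NIU address table for missing entries, stray entries and duplicated addresses; the `pu_r{row}_c{col}` naming convention, the table format and `check_niu_table` itself are assumptions made for illustration.

```python
from collections import Counter


def check_niu_table(table: dict[str, int], rows: int, cols: int) -> list[str]:
    """Report problems in a hand-maintained NIU address table.

    Keys are PU instance names following a hypothetical 'pu_r{row}_c{col}'
    convention; values are the NIU addresses typed in by the designer."""
    problems = []
    expected = {f"pu_r{r}_c{c}" for r in range(rows) for c in range(cols)}
    present = set(table)
    for name in sorted(expected - present):
        problems.append(f"missing NIU: {name}")
    for name in sorted(present - expected):
        problems.append(f"unexpected NIU: {name}")
    # Two NIUs sharing an address would make packet delivery ambiguous.
    for addr, count in sorted(Counter(table.values()).items()):
        if count > 1:
            owners = sorted(name for name, a in table.items() if a == addr)
            problems.append(f"address {addr:#x} used {count} times: {', '.join(owners)}")
    return problems


# A 2x2 array where one entry was copied without being updated and another was forgotten.
hand_table = {"pu_r0_c0": 0x100, "pu_r0_c1": 0x101, "pu_r1_c0": 0x101}
for problem in check_niu_table(hand_table, rows=2, cols=2):
    print(problem)
```

A check like this catches errors after the fact, but it does not remove the need to re-enter the table every time the PU or the array size changes.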

Generating Arrays Using NoC-Based Soft Tiling Technology

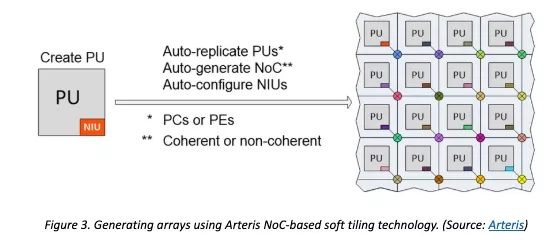

Arteris has developed a new soft tiling technology that has been seamlessly integrated into our Ncore and FlexNoC network-on-chip IP technologies (Figure 3). Once the SoC development team has created its initial PU, all that is required is to specify the number of rows and columns of the array and initiate the process. The tool then automatically replicates the PUs, generates the NoC and configures the NIUs with their unique addresses.
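The flow described above can be approximated in a few lines of Python: given a template PU and the number of rows and columns, replicate the PU, connect neighboring tiles and assign every NIU a unique address. This is a hypothetical sketch assuming a 2D mesh and sequential addressing; it is not the Ncore or FlexNoC tooling, whose inputs and outputs the article does not describe, and `ProcessingUnit`, `TiledArray` and `soft_tile` are illustrative names. Following the article, a PU of kind "cluster" is paired with a coherent NoC and a "pe" with a non-coherent one.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class ProcessingUnit:
    """Template PU (hypothetical fields, for illustration only)."""
    name: str
    kind: str  # "cluster" -> coherent NoC, "pe" -> non-coherent NoC
    nius: list[str] = field(default_factory=lambda: ["niu0"])


@dataclass
class TiledArray:
    noc: str
    tiles: dict[tuple[int, int], ProcessingUnit]
    niu_addresses: dict[str, int]
    links: list[tuple[tuple[int, int], tuple[int, int]]]


def soft_tile(template: ProcessingUnit, rows: int, cols: int, base: int = 0x100) -> TiledArray:
    """Replicate the template into a rows x cols array, link each tile to its
    right and lower neighbors (2D mesh) and give every NIU a unique address."""
    noc = "coherent" if template.kind == "cluster" else "non-coherent"
    tiles, addresses, links = {}, {}, []
    next_addr = base
    for r in range(rows):
        for c in range(cols):
            tile = copy.deepcopy(template)  # each tile is an independent copy
            tile.name = f"{template.name}_r{r}_c{c}"
            tiles[(r, c)] = tile
            for niu in tile.nius:
                addresses[f"{tile.name}.{niu}"] = next_addr
                next_addr += 1
            if c + 1 < cols:
                links.append(((r, c), (r, c + 1)))
            if r + 1 < rows:
                links.append(((r, c), (r + 1, c)))
    return TiledArray(noc, tiles, addresses, links)


cpu = soft_tile(ProcessingUnit("cpu_cluster", "cluster"), rows=2, cols=3)
print(cpu.noc, len(cpu.tiles), len(cpu.links), hex(cpu.niu_addresses["cpu_cluster_r1_c2.niu0"]))
# prints: coherent 6 7 0x105
```

Changing the template, for example giving each PE two NIUs, and calling `soft_tile` again regenerates every tile and address in one step; that single regeneration is what makes early PU changes cheap.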

This technology is available in both FlexNoC interconnect IP for use in non-coherent arrays like NPUs and Ncore coherent NoC IP for use in coherent arrays like RISC-V processor clusters.

This auto-replication and auto-configuration takes anywhere from a few seconds to a few minutes, depending on the size of the array and the complexity of the PUs. Therefore, any design changes to the original PU can be quickly and easily accommodated. Similarly, the array can be easily scaled as required, and it is easy to generate derivative designs with larger or smaller arrays.
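Because regeneration is parameter-driven, a derivative design is largely a matter of choosing new dimensions. The arithmetic below, assuming a 2D mesh with XY routing (the article does not specify the topology), shows how PU count, NIU count, link count and worst-case hop count grow with array size; `mesh_summary` is a hypothetical helper.

```python
def mesh_summary(rows: int, cols: int, nius_per_pu: int = 1) -> dict[str, int]:
    """Size figures for a rows x cols array connected as a 2D mesh (assumed)."""
    return {
        "pus": rows * cols,
        "nius": rows * cols * nius_per_pu,
        # Horizontal links: rows * (cols - 1); vertical links: cols * (rows - 1).
        "links": rows * (cols - 1) + cols * (rows - 1),
        # Worst-case XY-routed hop count, between opposite corners.
        "max_hops": (rows - 1) + (cols - 1),
    }


# Compare a base design with smaller and larger derivatives.
for rows, cols in [(2, 2), (4, 4), (8, 8)]:
    print(f"{rows}x{cols}", mesh_summary(rows, cols))
```

Going from a 4x4 to an 8x8 array quadruples the PU count but raises the link count from 24 to 112, which is the kind of bookkeeping that is tedious by hand and trivial to regenerate.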

Conclusion

Developing high-end SoCs featuring arrays of RISC-V processor clusters is a complex and costly undertaking that requires an immense amount of time and effort. Low-level tasks such as manual PU replication and NIU configuration are prone to error and consume valuable time that could be better spent on higher-level design challenges.

Arteris’ NoC soft tiling technology resolves these issues by automating the configuration and scaling of complex RISC-V architectures. This automation allows design teams to focus their time and attention on other areas. By streamlining the integration of RISC-V cores and clusters, Arteris’ solution accelerates the development process. Learn more about NoC tiling at arteris.com.
