Boosting RISC-V SoC performance for AI and ML applications

By Andy Nightingale, Arteris | May 20, 2025

Today’s system-on-chip (SoC) designs integrate unprecedented numbers of diverse IP cores, from general-purpose CPUs to specialized hardware accelerators, including neural processing units (NPUs), tensor processors, and data processing units (DPUs). This heterogeneous approach enables designers to optimize performance, power efficiency, and cost. However, it also increases the complexity of on-chip communication, synchronization, and interoperability.

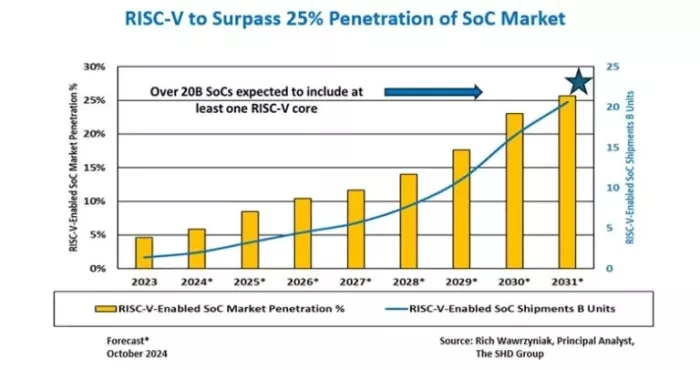

At around the same time, the open and configurable RISC-V instruction set architecture (ISA) is experiencing rapid adoption across diverse markets. This growth aligns with rising SoC complexity and the widespread integration of artificial intelligence (AI), as illustrated in figure below. Nearly half of global silicon projects now incorporate AI or machine learning (ML), spanning automotive, mobile, data center, and Internet of Things (IoT) applications. This rapid RISC-V evolution is placing increasing demands on the underlying hardware infrastructure.

The above graph shows projected growth of RISC-V-enabled SoC market share and unit shipments.

NoCs for heterogeneous SoCs

A key challenge in AI-centric SoCs is ensuring efficient communication among IP blocks from different vendors. These designs often combine RISC-V CPUs, Arm processors, DPUs, and AI accelerators, which complicates on-chip interaction. Compatibility with a range of communication protocols is therefore critical, from Arm’s AMBA ACE and CHI to the CHI-B interfaces now emerging on RISC-V processor IP.

Designers must also carefully manage the distinction between coherent networks-on-chip (NoCs), used primarily for CPUs whose data caches must stay synchronized, and non-coherent NoCs, typically used for AI accelerators. Handling both types effectively enables flexible, high-performance systems.
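To make the coherent versus non-coherent distinction concrete, here is a toy Python model. It is a sketch only: the `ToySystem` class and its methods are hypothetical, caches are write-through, and invalidation is instantaneous, which real protocols such as CHI do not assume. It shows that a coherent CPU write invalidates other CPUs' cached copies, while a non-coherent accelerator write updates memory without notifying the caches, leaving software responsible for any stale data.

```python
# Toy model contrasting coherent CPU accesses with non-coherent accelerator
# writes. All names are hypothetical; caches are write-through and
# invalidation is instantaneous, unlike real coherence protocols.


class ToySystem:
    def __init__(self, cpus: list[str]) -> None:
        self.memory: dict[int, int] = {}
        self.caches: dict[str, dict[int, int]] = {c: {} for c in cpus}

    def cpu_read(self, cpu: str, line: int) -> int:
        """Coherent read: fill the CPU's cache from memory on a miss."""
        cache = self.caches[cpu]
        if line not in cache:
            cache[line] = self.memory.get(line, 0)
        return cache[line]

    def cpu_write(self, cpu: str, line: int, value: int) -> None:
        """Coherent write: invalidate every other cached copy first."""
        for other, cache in self.caches.items():
            if other != cpu:
                cache.pop(line, None)
        self.caches[cpu][line] = value
        self.memory[line] = value  # write-through for simplicity

    def accel_write(self, line: int, value: int) -> None:
        """Non-coherent write: update memory only, with no invalidations."""
        self.memory[line] = value


s = ToySystem(["cpu0", "cpu1"])
s.cpu_read("cpu1", 0x40)          # cpu1 caches the line (value 0)
s.cpu_write("cpu0", 0x40, 7)      # cpu1's copy is invalidated
print(s.cpu_read("cpu1", 0x40))   # 7: refetched, up to date
s.accel_write(0x40, 9)            # accelerator bypasses coherence
print(s.cpu_read("cpu1", 0x40))   # 7: stale copy, memory now holds 9
```

In this sketch the trade-off is visible: the non-coherent path generates no invalidation traffic, but the CPU keeps reading an outdated value until software explicitly manages its cache.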

NoC architectures address both interoperability and scalability, providing flexible interconnect that can absorb a growing number and variety of IP cores. Interconnect logic typically occupies approximately 10% to 13% of a chip’s silicon area, and the NoC serves as the backbone of a modern SoC, enabling efficient data flow, low latency, and flexible routing between diverse processing elements.

Advanced techniques for AI performance

The rapid rise of generative AI and large language models (LLMs), some of which now exceed a trillion parameters, has further intensified interconnect demands by significantly increasing on-chip data bandwidth requirements. Conventional bus architectures can no longer manage these massive data flows efficiently.
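A quick back-of-envelope calculation shows the scale involved. The Python sketch below assumes a one-trillion-parameter model stored as 16-bit weights and a 1 TB/s sustained bandwidth; these figures are illustrative assumptions rather than numbers from this article, and the helper names are hypothetical.

```python
# Back-of-envelope estimate of the data needed to read a large model's weights
# once. Parameter count, bytes per parameter, and bandwidth are assumptions.


def weight_bytes(num_params: float, bytes_per_param: float) -> float:
    """Total storage for the model weights, in bytes."""
    return num_params * bytes_per_param


def seconds_to_stream(total_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time to read every weight once at a sustained bandwidth."""
    return total_bytes / bandwidth_bytes_per_s


params = 1e12      # assumed: one-trillion-parameter model
fp16_bytes = 2     # assumed: 16-bit weights
bw = 1e12          # assumed: 1 TB/s sustained bandwidth

total = weight_bytes(params, fp16_bytes)
print(f"weights: {total / 1e12:.1f} TB")                        # weights: 2.0 TB
print(f"one full pass: {seconds_to_stream(total, bw):.1f} s")  # one full pass: 2.0 s
```

Real deployments split such models across many chips and reuse weights across many tokens, so this is only an order-of-magnitude illustration of how much data the interconnect may be asked to move.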

Designers are now implementing advanced techniques such as data interleaving, multicast communication, and multiline reorder buffers. These methods make it possible to exploit data buses thousands of bits wide, sustaining high-throughput, low-latency communication.
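Data interleaving can be illustrated with a simple address-to-channel mapping. This minimal Python sketch assumes a hypothetical system with four memory channels and a 256-byte interleave granule, using a plain modulo mapping (real NoCs typically make the mapping configurable and may hash address bits). It also computes the peak bandwidth of one wide link as bus width in bytes times clock frequency, assuming a 2,048-bit bus at 1 GHz.

```python
# Minimal sketch of address interleaving across memory channels.
# NUM_CHANNELS and GRANULE_BYTES are assumed values for illustration.
from collections import Counter

NUM_CHANNELS = 4      # assumed number of memory targets
GRANULE_BYTES = 256   # assumed interleave granularity


def channel_for(addr: int) -> int:
    """Select a channel from the address bits just above the granule offset."""
    return (addr // GRANULE_BYTES) % NUM_CHANNELS


def peak_bandwidth_gb_per_s(bus_width_bits: int, clock_ghz: float) -> float:
    """Peak link bandwidth in GB/s: bytes per cycle times clock in GHz."""
    return bus_width_bits / 8 * clock_ghz


# A sequential 64 KiB stream of 64-byte requests (e.g., an accelerator
# reading a tensor) is spread evenly across all channels.
stream = range(0, 64 * 1024, 64)
load = Counter(channel_for(a) for a in stream)
print(dict(sorted(load.items())))          # {0: 256, 1: 256, 2: 256, 3: 256}
print(peak_bandwidth_gb_per_s(2048, 1.0))  # 256.0
```

Because the sequential stream lands evenly on all four channels, they can serve requests in parallel rather than queuing behind a single target.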

Beyond raw bandwidth, new architectural approaches also improve system performance. One is AI tiling, in which many smaller compute units, or tiles, are interconnected to form scalable compute clusters.

These architectures allow designers to scale CPU or AI-specific processing clusters from dozens to thousands of cores. The NoC infrastructure manages data movement and communication among these tiles, ensuring maximum performance and efficiency.
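The article does not name a topology for tiled designs. As an assumption, the Python sketch below models tiles on a 2D mesh with dimension-ordered (XY) routing, a common deadlock-free baseline in which a packet travels along X until its column matches, then along Y. The function names are hypothetical.

```python
# Minimal sketch of XY (dimension-ordered) routing on a 2D mesh of tiles.
# The mesh topology is an assumption; the article does not specify one.


def xy_route(src: tuple[int, int], dst: tuple[int, int]) -> list[tuple[int, int]]:
    """Return the tile coordinates a packet visits, moving in X first, then Y."""
    x, y = src
    path = [(x, y)]
    while x != dst[0]:                 # travel along X until columns match
        x += 1 if dst[0] > x else -1
        path.append((x, y))
    while y != dst[1]:                 # then travel along Y
        y += 1 if dst[1] > y else -1
        path.append((x, y))
    return path


def hop_count(src: tuple[int, int], dst: tuple[int, int]) -> int:
    """Router-to-router hops between two tiles (Manhattan distance)."""
    return abs(src[0] - dst[0]) + abs(src[1] - dst[1])


def avg_hops(n: int) -> float:
    """Average hop count over all distinct tile pairs in an n x n mesh."""
    tiles = [(x, y) for x in range(n) for y in range(n)]
    pairs = [(a, b) for a in tiles for b in tiles if a != b]
    return sum(hop_count(a, b) for a, b in pairs) / len(pairs)


print(xy_route((0, 0), (2, 1)))   # [(0, 0), (1, 0), (2, 0), (2, 1)]
print(hop_count((0, 0), (2, 1)))  # 3
print(round(avg_hops(4), 2))      # 2.67
```

The average hop count for an n × n mesh works out to 2n/3, so growing from a 4 × 4 array (16 tiles) to 32 × 32 (1,024 tiles) raises it from about 2.7 to about 21.3 hops, which illustrates why the placement of communicating tiles matters as arrays grow.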

Beyond tiling, physical and back-end design challenges intensify at advanced nodes. Below 10 nanometers, routing and layout constraints significantly affect chip performance, power consumption, and reliability. Physically aware NoCs optimize placement and timing to help designs reach working silicon. Considering these physical factors early minimizes the risk of a silicon respin and helps AI designs at 5 nm and 3 nm meet their efficiency goals.

Reliability and flexibility

Hardware-software integration, including consistent register management and memory mapping between RISC-V hardware and its software, streamlines validation, reduces software overhead, and improves system reliability. This approach helps contain the complexity of coherent designs while meeting performance and safety requirements.
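One concrete form of this integration is keeping a single machine-readable memory map from which both the interconnect's address decoding and the software's base-address definitions are derived, so the two cannot drift apart. The Python sketch below is a hypothetical illustration: the `Region` type, region names, and addresses are invented, and real flows often use standard formats such as IP-XACT instead.

```python
# Hypothetical single-source memory map shared by hardware and software.
# Region names and addresses are invented for illustration.
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str
    base: int
    size: int

    @property
    def end(self) -> int:
        """Exclusive upper bound of the region."""
        return self.base + self.size


MEMORY_MAP = [
    Region("boot_rom", 0x0000_0000, 0x0001_0000),
    Region("npu_regs", 0x1000_0000, 0x0000_1000),
    Region("dram", 0x8000_0000, 0x4000_0000),
]


def check_no_overlap(regions: list[Region]) -> None:
    """Raise ValueError if any two regions overlap."""
    ordered = sorted(regions, key=lambda r: r.base)
    for a, b in zip(ordered, ordered[1:]):
        if a.end > b.base:
            raise ValueError(f"{a.name} overlaps {b.name}")


def decode(addr: int, regions: list[Region]) -> str:
    """Return the region an address targets, as an interconnect decoder would."""
    for r in regions:
        if r.base <= addr < r.end:
            return r.name
    raise LookupError(f"unmapped address {addr:#x}")


def c_defines(regions: list[Region]) -> list[str]:
    """Emit C base-address macros so software uses the same map as hardware."""
    return [f"#define {r.name.upper()}_BASE 0x{r.base:08X}" for r in regions]


check_no_overlap(MEMORY_MAP)
print(decode(0x1000_0010, MEMORY_MAP))  # npu_regs
for line in c_defines(MEMORY_MAP):
    print(line)
```

Running the overlap check as part of every build is a cheap way to catch address-map mistakes before they reach either RTL or firmware.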

Next, safety certification has become paramount as RISC-V-based designs enter safety-critical domains such as autonomous vehicles. Interconnect solutions must deliver high-bandwidth, low-latency communication while meeting rigorous safety standards such as ISO 26262 up to ASIL D. Certified NoC architectures incorporate fault-tolerant features that help ensure reliability in AI platforms.
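The article does not specify which fault-tolerant mechanisms a certified NoC uses. As one illustrative assumption, the Python sketch below shows parity protection on link data, among the simplest ways to detect a transient bit flip; production safety designs may also use ECC, duplicated logic, and timeouts. The function names are hypothetical.

```python
# Minimal sketch of parity protection on a data word crossing a link.
# Parity is an illustrative assumption, not a documented NoC feature.


def parity(word: int) -> int:
    """Even-parity bit: 1 if the word has an odd number of set bits."""
    return bin(word).count("1") & 1


def send(word: int) -> tuple[int, int]:
    """Transmit the word together with its parity bit."""
    return word, parity(word)


def check(word: int, par: int) -> bool:
    """True if the received word still matches its parity bit."""
    return parity(word) == par


data, p = send(0b1011_0010)
print(check(data, p))           # True
corrupted = data ^ (1 << 3)     # a single-bit upset on the link
print(check(corrupted, p))      # False
```

Parity detects any single-bit error but misses an even number of flipped bits, which is why stronger codes such as SECDED ECC (single-error correction, double-error detection) are common on wider datapaths.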

Modularity and interoperability across vendors and interfaces have also become essential to keep pace with the dynamic demands of AI-driven RISC-V systems. Many real-world designs no longer follow a monolithic approach.

Instead, they evolve over multiple iterations and often replace processing subsystems mid-development to improve efficiency or time to market. Such flexibility is achievable when the interconnect fabric supports diverse protocols, topologies, and evolving standards.

About the Author

Andy Nightingale, VP of product management and marketing at Arteris, has over 37 years of experience in the high-tech industry, including 23 years in various engineering and product management positions at Arm.
