An Efficient Device for Forward Collision Warning Using Low Cost Stereo Camera & Embedded SoC

Manoj Rajan, Sravya Vunnam, Tata Consultancy Services

ABSTRACT

Forward Collision Warning (FCW) systems in automobiles provide alerts to assist drivers in avoiding rear-end crashes. These systems currently use radar as the main sensor. The increasing resolution of imaging sensors, the processing capability of hardware chipsets and advances in machine vision algorithms have pushed camera-based features ahead. This paper discusses a stereo camera-based FCW algorithm that uses a pair of cameras to sense forward collision situations. Stereo camera-based devices overcome the disadvantages of using a single camera for distance measurement, at a lower cost than radar sensors. This paper provides an overview of the system and the sensors used. It also details the novel, state-of-the-art algorithms that detect vehicles and calculate the distance to them, and explains how these algorithms are designed to run on a low-cost multi-core embedded hardware platform while meeting stringent real-time performance requirements. A novel Enhanced Histogram of Oriented Gradients (EHOG) algorithm detects the presence of vehicles at different scales and postures. A highly efficient stereo matching algorithm, operating at dynamic sub-pixel granularity, provides accurate depth, which helps predict the time to collision accurately. Extensive testing indicates that the system would meet the New Car Assessment Program (NCAP) test requirements.

Keywords: ADAS, FCW, NCAP, HOG, radar, camera sensor, vision, stereo matching, sub-pixel interpolation

1 INTRODUCTION

Forward Collision Warning (FCW) is a feature that provides alerts intended to assist drivers in avoiding, or mitigating the harm caused by, rear-end crashes. The FCW system may alert the driver to an approaching (or closing) conflict a few seconds before the driver would have detected it (e.g., if the driver's eyes were off the road), so that the driver can take any necessary corrective action (e.g., apply hard braking). The most important aspect of the FCW feature is the timing of the alert to the driver. The goal is to issue true warnings sufficiently early while avoiding false warnings. The intellectual property rights (IPR) in the subject matter disclosed in this paper belong to TCS, and TCS has a patent application pending on this subject matter.

2 OPPORTUNITY FOR CRASH AVOIDANCE SYSTEMS

According to studies by the World Health Organization (WHO), road crashes kill nearly 1.3 million people every year and injure between 20 and 50 million more. Unless action is taken, road traffic injuries are predicted to double by 2020. These studies identify the driver as the major cause of accidents, with the other two causes being environmental conditions and increased distraction within the vehicle (e.g., in-vehicle entertainment). A timely alert to the driver can help prevent accidents.

3 FORWARD COLLISION WARNING SENSORS

The primary FCW ranging devices used on current production vehicles are radar- or LIDAR-based sensors. Irrespective of the sensor used, an FCW system should be able to detect, classify and track the vehicles moving in front while discarding objects that are not of interest. To qualify for use in a production vehicle, an FCW system must have a very high rate of true positive detections and very few false warnings. The more common camera-based systems use a monocular camera. Systems that use a mono camera cannot measure distance as accurately as radar and hence suffer in the computation of Time to Collision (TTC). The proposed solution makes use of a stereo camera, which provides much better accuracy in computing the distance to the object of interest.
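Why distance accuracy matters for TTC can be seen from the usual constant-velocity definition, TTC = range / closing speed, where the closing speed is estimated from successive range measurements. The sketch below is a minimal illustration under those assumptions (constant relative speed, a 30 fps frame interval, and the hypothetical helper names `closing_speed` and `time_to_collision`). It is not the paper's TTC method, which the text does not specify.

```python
# Hypothetical helpers illustrating constant-velocity TTC; not the paper's method.

def closing_speed(d_prev_m, d_curr_m, dt_s):
    """Closing speed in m/s from two successive range samples (positive = approaching)."""
    return (d_prev_m - d_curr_m) / dt_s


def time_to_collision(d_curr_m, v_close_mps):
    """Constant-velocity TTC in seconds; infinite if the gap is not closing."""
    if v_close_mps <= 0.0:
        return float("inf")
    return d_curr_m / v_close_mps


DT = 1.0 / 30.0  # frame interval at 30 fps (assumed)

# Lead vehicle 30 m ahead, closing at 10 m/s (about 0.33 m per frame).
d_prev, d_curr = 30.0 + 10.0 * DT, 30.0
v = closing_speed(d_prev, d_curr, DT)
print(f"exact ranges:       TTC = {time_to_collision(d_curr, v):.2f} s")

# Same motion, but the two ranges are off by 0.1% in opposite directions.
d_prev_noisy, d_curr_noisy = d_prev * 0.999, d_curr * 1.001
v_noisy = closing_speed(d_prev_noisy, d_curr_noisy, DT)
print(f"ranges off by 0.1%: TTC = {time_to_collision(d_curr_noisy, v_noisy):.2f} s")
```

With exact ranges the TTC is 3.00 s. A 0.1% range error of opposite sign on two consecutive frames already moves it to about 3.67 s. Differencing frame-to-frame amplifies range errors, which is why accurate ranging (and, in practice, smoothing the range rate over several frames) matters for alert timing.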

Figure 1: Comparison of different FCW sensors

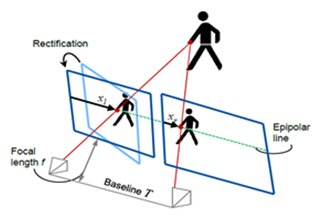

3.1 Stereo Camera Sensor

The stereo camera consists of two sensors with parallel optical axes, separated horizontally by a small predefined distance called the baseline. The distance between the cameras and an object can be measured from the difference between the object's positions in the two images, the focal length of the cameras and the baseline. Image processing techniques are used to find the correspondence between objects in the left image and the right image. The shift in the coordinates of the object of interest in the right image with respect to the left image is the disparity. Using triangulation, the distance of the object from the camera can be calculated, provided the baseline and focal length are known. The distance to an object of interest is inversely proportional to its disparity. The range and accuracy of distance calculations depend significantly on the resolution of the camera and on the distance between the optical axes of the two cameras (the baseline).

Figure 2: Stereo Camera Geometry
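For a rectified, parallel-axis pair like the one in Figure 2, triangulation gives Z = f * B / d, with the focal length f and disparity d in pixels and the baseline B in metres. Differentiating gives the first-order depth change for a disparity change dd: |dZ| ~= Z^2 / (f * B) * dd. The sketch below evaluates both. The focal length (800 px) and baseline (0.20 m) are hypothetical values chosen only for illustration, not the paper's camera parameters.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Triangulated depth Z = f * B / d for a rectified, parallel-axis stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def disparity_from_depth(depth_m, focal_px, baseline_m):
    """Inverse relation: d = f * B / Z."""
    return focal_px * baseline_m / depth_m


def depth_step(depth_m, focal_px, baseline_m, disparity_step_px):
    """First-order depth change for a disparity change: |dZ| ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px


F_PX, B_M = 800.0, 0.20  # hypothetical focal length (px) and baseline (m)

for z in (5.0, 40.0, 80.0):
    d = disparity_from_depth(z, F_PX, B_M)
    print(f"Z = {z:4.0f} m  disparity = {d:6.2f} px  "
          f"dZ per 1 px = {depth_step(z, F_PX, B_M, 1.0):6.2f} m  "
          f"per 0.05 px = {depth_step(z, F_PX, B_M, 0.05):5.2f} m")
```

With these hypothetical values, a 1 px disparity error corresponds to roughly 0.16 m at 5 m but about 40 m at 80 m, where the disparity itself is only 2 px. At that range the first-order estimate is only indicative, since the step is no longer small relative to d. A 0.05 px step brings the figure at 80 m down to about 2 m, which illustrates why sub-pixel matching matters at long range.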

4 SENSING ALGORITHMS

The system detects different types of vehicles. Distances were measured to the vehicles in the path of the subject vehicle over a range of 5 m to 80 m with a VGA-resolution camera, and the average error in these measurements was found to be less than ±5%. The subsequent sections discuss the algorithms developed for detecting vehicles and calculating the distance to the detected vehicles.

4.1 Vehicle Detection

Detection of vehicles using camera images is a well-researched topic, and multiple algorithms are capable of it. The important property of a robust algorithm is its ability to detect multiple kinds of vehicles under different environmental and lighting conditions with high accuracy. A novel algorithm was developed that can detect vehicles from as close as 5 m out to 80 m with a VGA-resolution camera. The method detects vehicles in images captured by a mono or stereo camera (a pair of imagers) and is characterized by its ability to detect vehicles and calculate distances over very long ranges. Its processor-implemented steps compute the Histogram of Oriented Gradients (HOG) to determine the presence of vehicles in the image. Enhancements over traditional HOG allow objects at multiple scales and orientations to be detected, using the following approach. First, the input image is split into multiple slices, formed based on the candidate object size and the targeted detection distance. The slices corresponding to long-distance regions are interpolated, for example by a factor of 2. On each slice, gradients are computed locally. Based on the precomputed scales of objects at different distances, multiple window sizes are chosen for HOG computation, and feature descriptors are trained with these specific window sizes. Each feature descriptor is applied to the slice in which an object of the corresponding size is expected to be detected. This approach ensures that objects of varying scales (sizes) and orientations are detected. At the same time, since the descriptors for different window sizes are computed simultaneously, the approach does not consume additional computational bandwidth.
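The slicing step can be illustrated with a flat-road pinhole model. A vehicle of width W at range Z appears f * W / Z pixels wide, and a point h metres above the road appears f * (h_c - h) / Z rows below the horizon, where h_c is the camera height. The sketch below uses that model to plan one slice per distance band. It chooses a HOG window width per slice and upscales a slice by 2 when the expected vehicle is too small. Every constant is hypothetical: the focal length, horizon row, camera height, vehicle size, 8-pixel HOG cell, 32-pixel minimum window and distance bands. The names `row_of`, `plan_slices` and `Slice` are also illustrative. The paper does not state how its slices or window sizes are derived.

```python
from dataclasses import dataclass

# All constants are hypothetical, for a 640x480 (VGA) image.
F_PX = 800.0      # focal length in pixels
CY = 240.0        # horizon row (level camera, flat road)
IMG_H = 480       # image height in rows
CAM_H_M = 1.2     # camera mounting height above the road
VEH_W_M = 1.8     # nominal vehicle width
VEH_H_M = 1.5     # nominal vehicle height
CELL = 8          # HOG cell size; window widths are multiples of it
MIN_WIN = 32      # narrower expected widths trigger 2x upscaling of the slice


@dataclass
class Slice:
    z_near: float
    z_far: float
    row_top: int
    row_bottom: int
    upscale: int
    window: int   # HOG window width in (upscaled) slice pixels


def row_of(z_m, height_m):
    """Image row of a point height_m above a flat road at range z_m (pinhole model)."""
    return round(CY + F_PX * (CAM_H_M - height_m) / z_m)


def plan_slices(bands):
    slices = []
    for z_near, z_far in bands:
        # Rows spanned by a nominal vehicle anywhere in [z_near, z_far].
        top = min(row_of(z_near, VEH_H_M), row_of(z_far, VEH_H_M))
        bottom = min(IMG_H - 1, max(row_of(z_near, 0.0), row_of(z_far, 0.0)))
        # Smallest expected vehicle width in the band (at its far edge).
        width_px = F_PX * VEH_W_M / z_far
        upscale = 2 if width_px < MIN_WIN else 1
        window = max(CELL, int(width_px * upscale) // CELL * CELL)
        slices.append(Slice(z_near, z_far, top, bottom, upscale, window))
    return slices


BANDS = [(5.0, 10.0), (10.0, 20.0), (20.0, 40.0), (40.0, 80.0)]
for s in plan_slices(BANDS):
    print(f"{s.z_near:4.0f}-{s.z_far:<4.0f} m  rows {s.row_top}-{s.row_bottom}  "
          f"upscale x{s.upscale}  window {s.window} px")
```

With these made-up values the four bands get windows of 144, 72, 32 and 32 px, and only the 40-80 m slice is upscaled. In the scheme the text describes, one HOG descriptor would then be trained per window size and applied only to its own slice.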

Detection is followed by a tracking algorithm that tracks already-detected targets using a fused Kalman filter and optical flow approach. Figure 3 shows the actual detection output of the algorithm (marked by bounding boxes) at various distances on multiple test videos.

Figure 3: Vehicle Detection output – Long, Medium & Short Range
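The tracker fuses a Kalman filter with optical flow, but the fusion itself is not described. As a hedged illustration of the Kalman half only, the sketch below tracks a bounding-box centre with a textbook constant-velocity Kalman filter and simply coasts on the prediction when a frame has no detection. The class name `CVKalman2D`, the 30 fps frame interval, the noise parameters `q` and `r` and the simulated target are all assumptions. Optical-flow measurements are not modelled. Feeding them to `update` as a second measurement source is one possible design, not necessarily the authors'.

```python
import numpy as np


class CVKalman2D:
    """Constant-velocity Kalman filter for a bounding-box centre (x, y) in pixels."""

    def __init__(self, x, y, dt=1.0 / 30.0, q=50.0, r=4.0):
        self.s = np.array([x, y, 0.0, 0.0])          # state: x, y, vx, vy
        self.P = np.diag([r, r, 1e3, 1e3])           # large initial velocity uncertainty
        self.F = np.array([[1, 0, dt, 0],
                           [0, 1, 0, dt],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)
        # Piecewise-constant acceleration noise with variance q (px/s^2)^2.
        G = np.array([[dt ** 2 / 2, 0], [0, dt ** 2 / 2], [dt, 0], [0, dt]])
        self.Q = q * G @ G.T
        self.R = r * np.eye(2)                       # measurement noise (px^2)

    def predict(self):
        self.s = self.F @ self.s
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.s[:2]

    def update(self, zx, zy):
        innovation = np.array([zx, zy]) - self.H @ self.s
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)     # Kalman gain
        self.s = self.s + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.s[:2]


rng = np.random.default_rng(0)
DT = 1.0 / 30.0
TRUE_VX = 60.0                        # px/s, simulated target motion
kf = CVKalman2D(100.0, 200.0, dt=DT)
for k in range(1, 91):                # 3 s of simulated video
    kf.predict()
    if k % 10:                        # every 10th frame: simulated missed detection
        x_true = 100.0 + TRUE_VX * k * DT
        kf.update(x_true + rng.normal(0.0, 2.0), 200.0 + rng.normal(0.0, 2.0))
print("estimated velocity (px/s):", kf.s[2:])
```

Coasting on `predict` through missed detections is what lets a tracker bridge short detector dropouts. In the text, that is the role the tracking stage plays after detection.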

Table 3 shows the performance of the algorithm under different environmental conditions, for different types of vehicles, over many hours of real-time road data.

Table 3: Vehicle Detection – Algorithm Performance Statistics

| Algorithm | True Detection (frame-by-frame %) | Missed Detection (frame-by-frame %) | False Detection (% of total frames tested) |
|---|---|---|---|
| Vehicle Detection (up to 80 m) | 92% | 8% | 2% |

4.2 Distance Estimation

A major strength of a stereo camera is its ability to measure depth, or distance, accurately. Stereo matching (stereo correspondence) algorithms are used to generate disparity maps or depth maps. In this paper we introduce a highly efficient local algorithm that generates higher-accuracy disparity values. The vehicle detection algorithm detects vehicles in front and generates a Region of Interest (ROI) around each detected vehicle in the left image. This ROI is then searched for in the right image using a stereo correspondence algorithm. In this approach, matching needs to be done only for the required features, which increases accuracy and saves computation time. The matching is done using an area-based correlation technique. A major challenge in stereo matching is false matches, which occur more often at larger distances as the object becomes smaller in the image. To address this, the algorithm generates interpolated, sub-pixel level images based on the approximate range of the object. The selectable sub-pixel levels are 0.5, 0.1, 0.05 and 0.01, chosen dynamically based on the Enhanced HOG (EHOG) detection window size. Dynamic selection significantly reduces the computational complexity. Because matching is performed at such fine levels of interpolation, matching and disparity generation remain highly accurate even at 80 m with a VGA-resolution camera. Prior art indicates that, with a VGA camera, stereo matching achieves high accuracy only up to ranges of 40 m.
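The ROI-restricted, sub-pixel matching described above can be sketched as follows. The sketch rests on several assumptions:

- a rectified stereo pair;
- sum of absolute differences (SAD) as the area-based correlation cost (the paper does not name its cost function);
- linear interpolation to create the sub-pixel samples;
- hypothetical window-width thresholds for choosing among the 0.5/0.1/0.05/0.01 steps.

The function names `subpixel_step`, `sample_band` and `match_roi` and the synthetic test scene are illustrative only.

```python
import numpy as np


def subpixel_step(window_width_px):
    """Pick a sub-pixel search step from the detection window width.
    Thresholds are hypothetical: smaller (farther) targets get finer steps."""
    if window_width_px >= 128:
        return 0.5
    if window_width_px >= 64:
        return 0.1
    if window_width_px >= 32:
        return 0.05
    return 0.01


def sample_band(img, y0, h, cols):
    """Linearly interpolate rows y0..y0+h-1 of img at fractional column positions."""
    xs = np.arange(img.shape[1], dtype=np.float64)
    rows = img[y0:y0 + h].astype(np.float64)
    return np.stack([np.interp(cols, xs, row) for row in rows])


def match_roi(left, right, roi, max_disp, step):
    """SAD search for the left-image ROI along the same rows of the right image,
    at disparities 0, step, 2*step, ..., max_disp."""
    x0, y0, w, h = roi
    patch = left[y0:y0 + h, x0:x0 + w].astype(np.float64)
    base_cols = np.arange(x0, x0 + w, dtype=np.float64)
    best_d, best_cost = 0.0, np.inf
    for k in range(int(round(max_disp / step)) + 1):
        d = k * step
        cols = base_cols - d          # rectified pair: the match lies d px to the left
        if cols[0] < 0:
            break
        cost = np.abs(patch - sample_band(right, y0, h, cols)).mean()
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d, best_cost


def scene(x, y):
    """Smooth synthetic texture standing in for a vehicle's appearance."""
    return np.sin(0.11 * x) + 0.5 * np.sin(0.07 * x + 0.3 * y)


H, W = 40, 160
yy, xx = np.mgrid[0:H, 0:W].astype(np.float64)
TRUE_D = 7.3
left = scene(xx, yy)
right = scene(xx + TRUE_D, yy)       # same scene, shifted TRUE_D px to the left
roi = (60, 10, 40, 20)               # x, y, width, height of a hypothetical detection
step = subpixel_step(roi[2])
d, cost = match_roi(left, right, roi, max_disp=20.0, step=step)
print(f"step = {step}, estimated disparity = {d:.2f} px")
```

Searching only the detected ROI keeps the work to (max_disp / step + 1) patch comparisons per vehicle, which is what makes very fine steps affordable. Tying the step to the window width spends that effort mainly on small, distant targets.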

Figure 4: Original Image & Interpolated Image (@ 0.05 sub-pixel)

Figure 4 shows the original image and the interpolated image, generated at 0.05 sub-pixel resolution, that is used for matching. Once the matching information is obtained, it is used to calculate the distance using the triangulation principle.

Table 4: Distance Computation – Algorithm Performance Statistics

| Actual Distance to Vehicle (m) | Distance Computed by Algorithm (m) | Error (%) |
|---|---|---|
| 6.00 | 6.09 | 1.50 |
| 8.00 | 8.00 | 0.00 |
| 16.50 | 16.39 | 0.67 |
| 42.00 | 43.25 | 2.98 |
| 64.00 | 66.13 | 3.33 |
| 80.00 | 79.72 | 0.35 |
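The error column of Table 4 is the absolute relative error, |computed - actual| / actual * 100. The short check below recomputes it from the table's own six rows. It adds no new measurements; the mean and maximum it prints are only summaries of those rows.

```python
actual = [6.00, 8.00, 16.50, 42.00, 64.00, 80.00]      # m, Table 4
computed = [6.09, 8.00, 16.39, 43.25, 66.13, 79.72]    # m, Table 4

# Absolute relative error in percent, as in the Table 4 error column.
errors = [abs(c - a) / a * 100.0 for a, c in zip(actual, computed)]
for a, c, e in zip(actual, computed, errors):
    print(f"{a:6.2f} m -> {c:6.2f} m : {e:5.2f} %")
print(f"mean = {sum(errors) / len(errors):.2f} %, max = {max(errors):.2f} %")
```

All six rows fall within the ±5% bound stated in Section 4, with the largest error (3.33%) at 64 m.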

5 IMPLEMENTATION AND OPTIMIZATION ON THE EMBEDDED PROCESSOR

Since the system must work in the vehicle, the algorithms and application need to be implemented on hardware that meets real-time performance requirements. The stringent real-time requirement calls for processing at 30 frames per second, i.e., a detection latency of about 33 ms. Several popular hardware platforms were evaluated, and the Texas Instruments TDA2x was identified as a suitable platform for the system. TI's TDA2x enables low-power, high-performance vision-processing systems. It features two C66x Digital Signal Processor (DSP) cores and four Embedded Vision Engines (EVEs), which form TI's Vision AccelerationPac. The step-by-step iterative design and implementation methodology shown in Figure 5 was adopted to optimize the performance of the algorithms.

Figure 5: Performance Optimization Methodology

A detailed analysis was done of the strengths of the different types of cores, i.e., the DSP, the vision engine (EVE) and the ARM Cortex. Table 5 gives the comparative performance of each core.

Table 5: Comparative performance figures of different cores

| Type of Operation | Cortex-A9 | C66x DSP | EVE |
|---|---|---|---|
| 16-bit integer | 1x | 2.5x | 8-12x |
| Single-precision float | 1x | 5x | |

As Table 5 shows, the EVE has a definite advantage for algorithms that involve fixed-point arithmetic, whereas the DSP offers flexibility for algorithms that require floating-point arithmetic to maintain the precision needed for higher accuracy.
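The reasoning above amounts to a simple rule: place each processing stage on the core with the best Table 5 speed-up for its dominant arithmetic type. The toy sketch below encodes only that rule. The stage list and the arithmetic type assigned to each stage are hypothetical. A real partitioning, such as the one in Figure 6, would also weigh inter-core data transfer and per-core load, which this sketch ignores.

```python
# Relative speed-ups over the Cortex-A9 baseline, copied from Table 5.
# EVE's "8-12x" is taken at its conservative end; Table 5 gives no EVE
# float figure, so EVE is not offered as a candidate for float work here.
SPEEDUP = {
    "int16": {"Cortex-A9": 1.0, "C66x DSP": 2.5, "EVE": 8.0},
    "float32": {"Cortex-A9": 1.0, "C66x DSP": 5.0},
}


def assign_core(op_type):
    """Return the core with the largest Table 5 speed-up for this arithmetic type."""
    candidates = SPEEDUP[op_type]
    return max(candidates, key=candidates.get)


def partition(stages):
    """Map (stage name, dominant arithmetic type) pairs to cores."""
    return {name: assign_core(op_type) for name, op_type in stages}


# Hypothetical stage list, for illustration only.
STAGES = [
    ("gradient computation", "int16"),
    ("orientation histogram binning", "int16"),
    ("classifier scoring with float weights", "float32"),
]

for stage, core in partition(STAGES).items():
    print(f"{stage:40s} -> {core}")
```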

Based on this analysis of the algorithm, the HOG vehicle detection was partitioned across the cores as shown in Figure 6.

Figure 6: Partitioning of HOG algorithm on TDA2x

6 CONCLUSION

This paper discussed the development of, and initial testing results from, an alternative sensing approach that uses a forward-looking stereo camera instead of a radar/lidar device as the sole Forward Collision Warning (FCW) sensing mechanism.

This paper also discussed the state-of-the-art algorithms that detect and validate lead-vehicle candidates and compute the distances to these candidates in order to identify potential rear-end crash situations. An efficient implementation of the algorithms on low-cost embedded hardware was also discussed. Results from initial testing indicate that this system would be capable of meeting the New Car Assessment Program (NCAP) Forward Collision Warning confirmation test requirements.

