FPGA-Accelerated RISC-V ISA Extensions for Efficient Neural Network Inference on Edge Devices

By Arya Parameshwara, Santosh Hanamappa Mokashi

Department of Electronics and Communication, PES University, Bangalore, India

Abstract

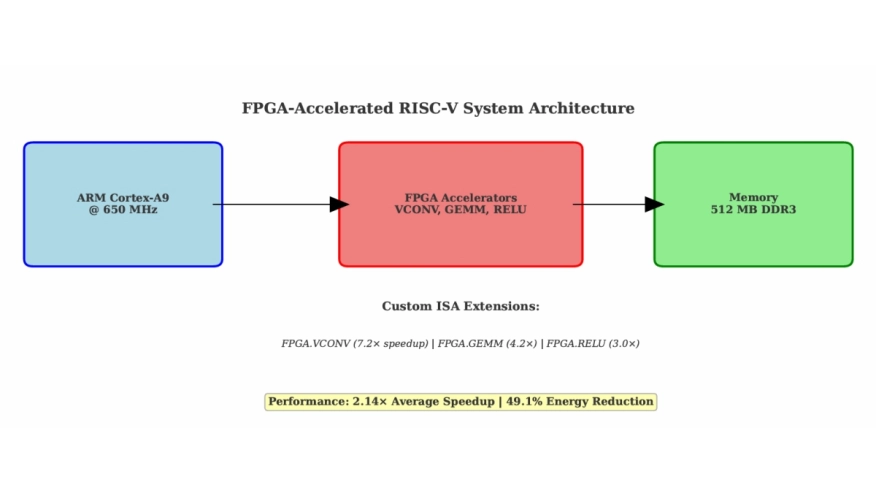

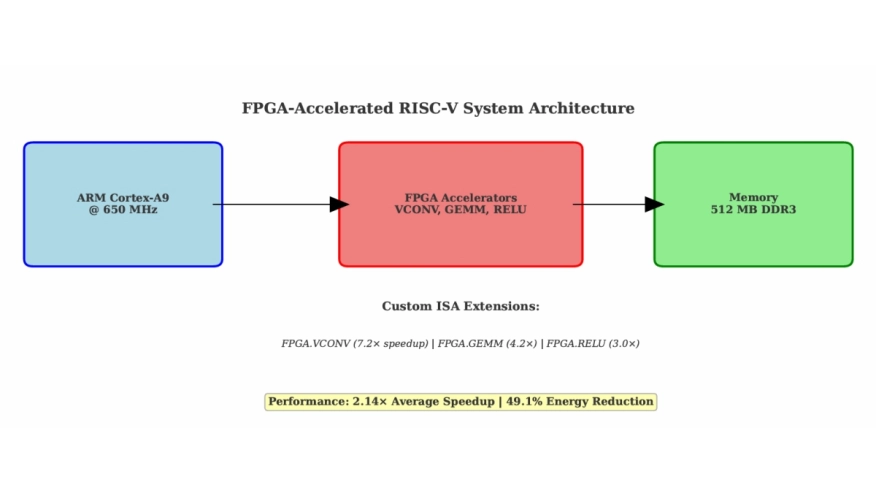

Edge AI deployment faces the critical challenge of balancing computational performance, energy efficiency, and resource constraints. This paper presents FPGA-accelerated RISC-V instruction set architecture (ISA) extensions for efficient neural network inference on resource-constrained edge devices. We introduce a custom RISC-V core with four novel ISA extensions (FPGA.VCONV, FPGA.GEMM, FPGA.RELU, FPGA.CUSTOM) and integrated neural network accelerators, implemented and validated on the Xilinx PYNQ-Z2 platform.
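The abstract names the four extensions but does not give their binary encodings. As a minimal sketch, assuming they are R-type instructions placed in the RISC-V custom-0 major opcode (0x0B) and distinguished only by a hypothetical funct3 value, packing and unpacking such an instruction word could look like this:

```python
# Hypothetical encoding sketch. The abstract does not specify opcodes or
# function codes for FPGA.VCONV, FPGA.GEMM, FPGA.RELU or FPGA.CUSTOM; here they
# are ASSUMED to be R-type instructions in the custom-0 major opcode.

CUSTOM_0 = 0b0001011  # custom-0 major opcode reserved for extensions (0x0B)

# ASSUMED funct3 assignments, for illustration only.
FUNCT3 = {
    "FPGA.VCONV": 0b000,
    "FPGA.GEMM": 0b001,
    "FPGA.RELU": 0b010,
    "FPGA.CUSTOM": 0b011,
}


def encode_r_type(mnemonic: str, rd: int, rs1: int, rs2: int, funct7: int = 0) -> int:
    """Pack fields into a 32-bit R-type word: funct7|rs2|rs1|funct3|rd|opcode."""
    for name, reg in (("rd", rd), ("rs1", rs1), ("rs2", rs2)):
        if not 0 <= reg < 32:
            raise ValueError(f"{name}={reg} is not a valid register index")
    if not 0 <= funct7 < 128:
        raise ValueError("funct7 must fit in 7 bits")
    return (
        (funct7 << 25)                # bits 31..25
        | (rs2 << 20)                 # bits 24..20
        | (rs1 << 15)                 # bits 19..15
        | (FUNCT3[mnemonic] << 12)    # bits 14..12
        | (rd << 7)                   # bits 11..7
        | CUSTOM_0                    # bits 6..0
    )


def decode_r_type(word: int) -> dict:
    """Split a 32-bit word back into its R-type fields."""
    return {
        "opcode": word & 0x7F,
        "rd": (word >> 7) & 0x1F,
        "funct3": (word >> 12) & 0x7,
        "rs1": (word >> 15) & 0x1F,
        "rs2": (word >> 20) & 0x1F,
        "funct7": (word >> 25) & 0x7F,
    }


if __name__ == "__main__":
    # FPGA.GEMM a0, a1, a2 (x10, x11, x12) under the assumed encoding.
    word = encode_r_type("FPGA.GEMM", rd=10, rs1=11, rs2=12)
    print(f"0x{word:08x}")
    print(decode_r_type(word))
```

The use of custom-0 and the funct3 values are illustrative assumptions, not the authors' encoding. With GNU binutils, a word of this shape can typically be emitted without modifying the assembler through the `.insn r` directive, for example `.insn r 0x0B, 1, 0, a0, a1, a2`.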

The complete system achieves a 2.14× average latency speedup and a 49.1% energy reduction versus an ARM Cortex-A9 software baseline across four benchmark models (MobileNet V2, ResNet 18, EfficientNet Lite, YOLO Tiny). The hardware implementation closes timing at 50 MHz with a worst negative slack of +12.793 ns, using 0.43% of the LUTs and 11.4% of the BRAM for the base core and 38.8% of the DSPs when the accelerators are active. Hardware verification confirms successful FPGA deployment, including a working 64 KB BRAM memory interface and AXI interconnect.
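Several of these figures can be cross-checked with simple arithmetic. The sketch below assumes the PYNQ-Z2's Zynq XC7Z020 provides 140 BRAM36 tiles holding 32 Kb of data each, treats the clock period minus the positive slack as an approximate critical-path delay, and, treating the averaged speedup and energy figures as if they described a single workload, derives the implied change in average power. These are rough, indicative estimates, not results reported by the authors.

```python
import math

# Figures quoted in the abstract.
CLOCK_MHZ = 50.0            # implementation clock
WNS_NS = 12.793             # worst negative slack at 50 MHz (positive = timing met)
MEMORY_BYTES = 64 * 1024    # 64 KB BRAM memory interface
SPEEDUP = 2.14              # average latency speedup vs. the Cortex-A9 baseline
ENERGY_REDUCTION = 0.491    # average energy reduction vs. the same baseline

# Device facts ASSUMED for the PYNQ-Z2 (Zynq XC7Z020).
BRAM36_TILES = 140
BRAM36_DATA_BITS = 32 * 1024  # 36 Kb per tile, of which 32 Kb is data


def derived_metrics() -> dict:
    # Period minus positive slack approximates the critical-path delay; its
    # reciprocal is only a rough frequency ceiling (a real retarget would need
    # re-implementation at the new clock).
    period_ns = 1000.0 / CLOCK_MHZ
    critical_path_ns = period_ns - WNS_NS
    fmax_estimate_mhz = 1000.0 / critical_path_ns

    # BRAM36 tiles needed to hold the 64 KB memory, and the device fraction.
    tiles = math.ceil(MEMORY_BYTES * 8 / BRAM36_DATA_BITS)
    bram_fraction = tiles / BRAM36_TILES

    # E = P * t, so P_acc / P_base = (E_acc / E_base) / (t_acc / t_base).
    energy_ratio = 1.0 - ENERGY_REDUCTION
    time_ratio = 1.0 / SPEEDUP
    power_ratio = energy_ratio / time_ratio

    return {
        "critical_path_ns": critical_path_ns,
        "fmax_estimate_mhz": fmax_estimate_mhz,
        "bram36_tiles": tiles,
        "bram_fraction": bram_fraction,
        "power_ratio": power_ratio,
    }


if __name__ == "__main__":
    for name, value in derived_metrics().items():
        print(f"{name}: {value:.3f}")
```

Under these assumptions a 64 KB memory occupies 16 BRAM36 tiles, about 11.4% of the device, which lines up with the reported BRAM figure. The slack suggests a critical path of roughly 7.2 ns, so the core may have headroom well beyond 50 MHz, and a 2.14× speedup combined with 49.1% less energy implies average power roughly 9% higher while accelerated.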

All performance metrics are obtained from physical hardware measurements. This work establishes a reproducible framework for ISA-guided FPGA acceleration that complements fixed-function ASICs by trading peak performance for programmability.

Index Terms — RISC-V, ISA extensions, FPGA acceleration, neural networks, edge computing, energy efficiency, hardware-software co-design

Related Semiconductor IP

- Compact Embedded RISC-V Processor

- Highly configurable HW PQC acceleration with RISC-V processor for full CPU offload

- Vector-Capable Embedded RISC-V Processor

- Tiny, Ultra-Low-Power Embedded RISC-V Processor

- Enhanced-Processing Embedded RISC-V Processor

Related Articles

- MultiVic: A Time-Predictable RISC-V Multi-Core Processor Optimized for Neural Network Inference

- Bare-Metal RISC-V + NVDLA SoC for Efficient Deep Learning Inference

- Pyramid Vector Quantization and Bit Level Sparsity in Weights for Efficient Neural Networks Inference

- ISA optimizations for hardware and software harmony: Custom instructions and RISC-V extensions