FeNN-DMA: A RISC-V SoC for SNN acceleration

By Zainab Aizaz, James C. Knight, and Thomas Nowotny

School of Engineering and Informatics, University of Sussex, Brighton, UK

Abstract

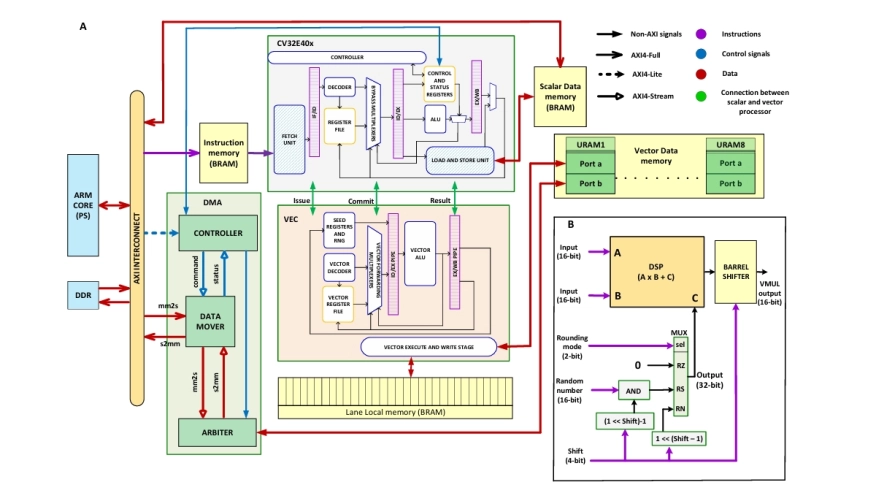

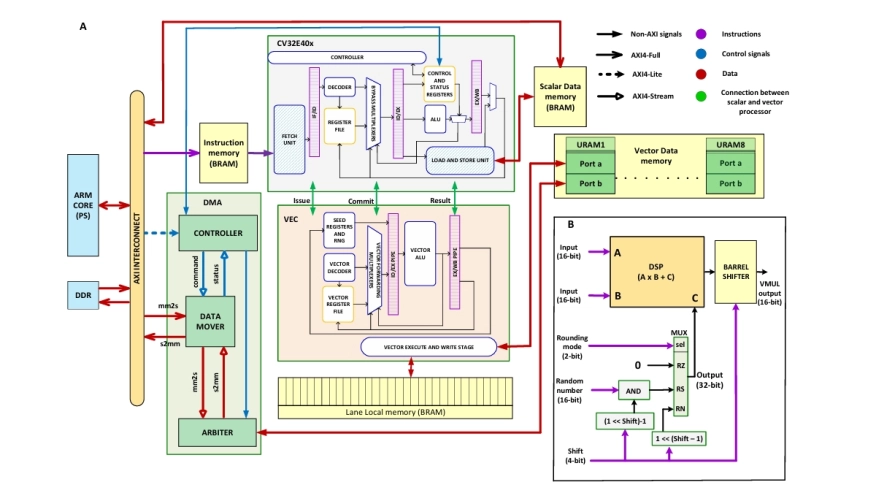

Spiking Neural Networks (SNNs) are a promising, energy-efficient alternative to standard Artificial Neural Networks (ANNs) and are particularly well suited to spatio-temporal tasks such as keyword spotting and video classification. However, SNNs have a much lower arithmetic intensity than ANNs and are therefore not well matched to standard accelerators like GPUs and TPUs. Field Programmable Gate Arrays (FPGAs) are designed for such memory-bound workloads. Here we develop FeNN-DMA, a novel, fully-programmable RISC-V-based system-on-chip tailored to simulating SNNs on modern UltraScale+ FPGAs. We show that FeNN-DMA has comparable resource usage and energy requirements to state-of-the-art fixed-function SNN accelerators, yet it is capable of simulating much larger and more complex models. Using this functionality, we demonstrate state-of-the-art classification accuracy on the Spiking Heidelberg Digits and Neuromorphic MNIST tasks.

Index Terms—Spiking Neural Networks (SNN), Field Programmable Gate Array (FPGA), RISC-V, Vector processor.
