QMC: Efficient SLM Edge Inference via Outlier-Aware Quantization and Emergent Memories Co-Design

By Nilesh Prasad Pandey 1, Jangseon Park 1, Onat Gungor 1, Flavio Ponzina 2, Tajana Rosing 1

1 University of California San Diego

2 San Diego State University

Abstract

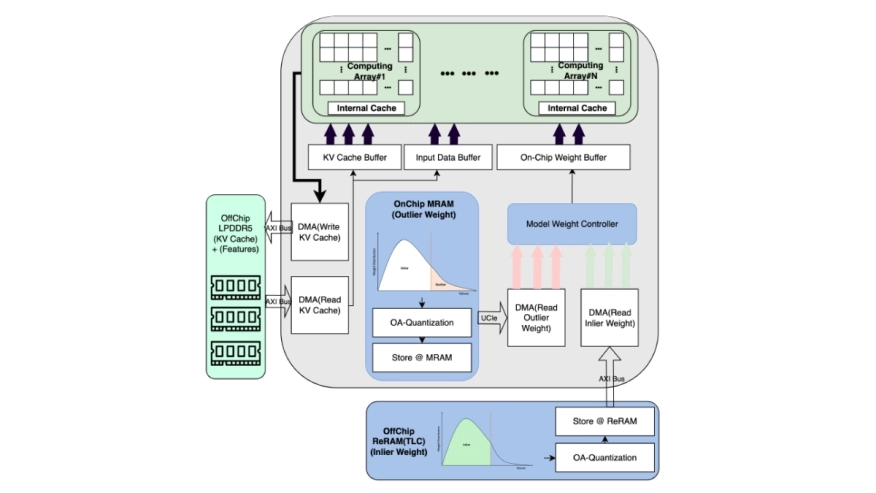

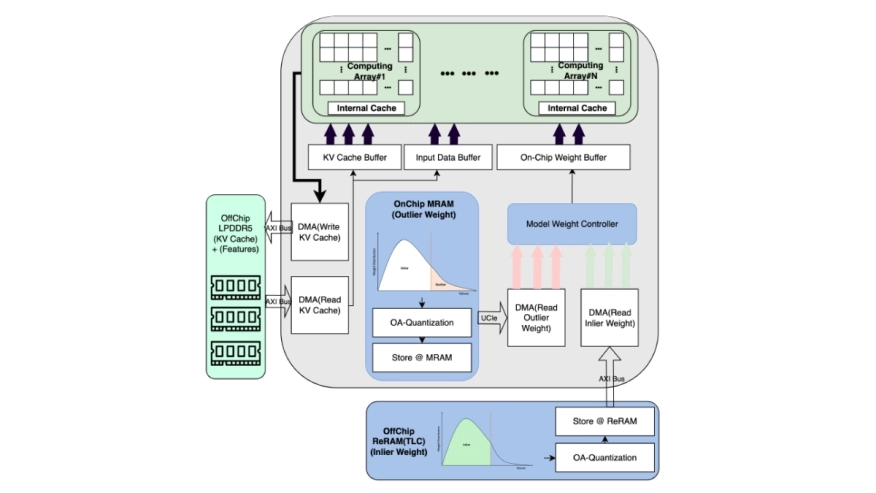

Deploying Small Language Models (SLMs) on edge platforms is critical for real-time, privacy-sensitive generative AI, yet it is constrained by memory, latency, and energy budgets. Quantization reduces model size and cost but suffers from device noise in emerging non-volatile memories, while conventional memory hierarchies further limit efficiency: SRAM provides fast access but has low density; DRAM must simultaneously accommodate static weights and dynamic KV caches, which creates bandwidth contention; and Flash, although dense, is primarily used for initialization and remains inactive during inference. These limitations highlight the need for hybrid memory organizations tailored to LLM inference. We propose Outlier-aware Quantization with Memory Co-design (QMC), a retraining-free quantization method paired with a novel heterogeneous memory architecture. QMC identifies inlier and outlier weights in SLMs, storing inlier weights in compact multi-level Resistive-RAM (ReRAM) while preserving critical outliers in high-precision on-chip Magnetoresistive-RAM (MRAM), which mitigates noise-induced degradation. On language modeling and reasoning benchmarks, QMC matches or outperforms state-of-the-art (SoTA) quantization methods using advanced algorithms and hybrid data formats, while achieving greater compression under both algorithm-only evaluation and realistic deployment settings. Specifically, in a comparison against SoTA quantization methods on the latest edge AI platform, QMC reduces memory usage by 6.3x-7.3x, external data transfers by 7.6x, energy by 11.7x, and latency by 12.5x relative to FP16, establishing QMC as a scalable, deployment-ready co-design for efficient on-device inference.
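The inlier/outlier split described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not state how outliers are selected, so the magnitude-based top-fraction rule, the 3-bit inlier width, the 1% outlier fraction, and every function name below are assumptions made only for illustration. Device noise in ReRAM is not modeled. The sketch compares quantizing all weights together against quantizing only the inliers while keeping the outliers at full precision.

```python
import random


def split_outliers(weights, outlier_fraction=0.01):
    """Split indices into inliers and outliers by weight magnitude.

    Assumption: the largest-magnitude fraction is treated as outliers.
    The source does not specify QMC's actual selection criterion.
    """
    n_out = max(1, int(round(len(weights) * outlier_fraction)))
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    return sorted(order[n_out:]), sorted(order[:n_out])


def quantize_uniform(values, bits):
    """Symmetric uniform quantization to signed integer codes plus a scale."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def split_roundtrip(weights, bits=3, outlier_fraction=0.01):
    """Quantize inliers to low precision; keep outliers unchanged."""
    inlier_idx, outlier_idx = split_outliers(weights, outlier_fraction)
    codes, scale = quantize_uniform([weights[i] for i in inlier_idx], bits)
    restored = [0.0] * len(weights)
    for i, code in zip(inlier_idx, codes):
        restored[i] = code * scale      # low-bit path (ReRAM in the paper)
    for i in outlier_idx:
        restored[i] = weights[i]        # high-precision path (MRAM in the paper)
    return restored


def mse(a, b):
    """Mean squared error between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Synthetic weights: a narrow Gaussian bulk plus three planted outliers.
random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(1000)]
weights[10], weights[500], weights[900] = 1.5, -1.2, 0.9

# Baseline: one scale for every weight, so outliers stretch the range.
naive_codes, naive_scale = quantize_uniform(weights, bits=3)
naive_restored = [c * naive_scale for c in naive_codes]

inlier_idx, outlier_idx = split_outliers(weights, 0.01)
split_restored = split_roundtrip(weights, bits=3, outlier_fraction=0.01)

mse_naive = mse(weights, naive_restored)
mse_split = mse(weights, split_restored)
print(f"naive MSE: {mse_naive:.2e}, outlier-aware MSE: {mse_split:.2e}")
```

In this toy setting the few large weights force a coarse quantization step on the whole tensor when everything shares one scale, which is why separating them reduces reconstruction error for the bulk of the weights.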
