In-Pipeline Integration of Digital In-Memory-Computing into RISC-V Vector Architecture to Accelerate Deep Learning

By Tommaso Spagnolo¹, Cristina Silvano¹, Riccardo Massa², Filippo Grillotti², Thomas Boesch³, Giuseppe Desoli²

¹ Politecnico di Milano, Milan, Italy

² STMicroelectronics, Milan, Italy

³ STMicroelectronics, Geneva, Switzerland

Abstract

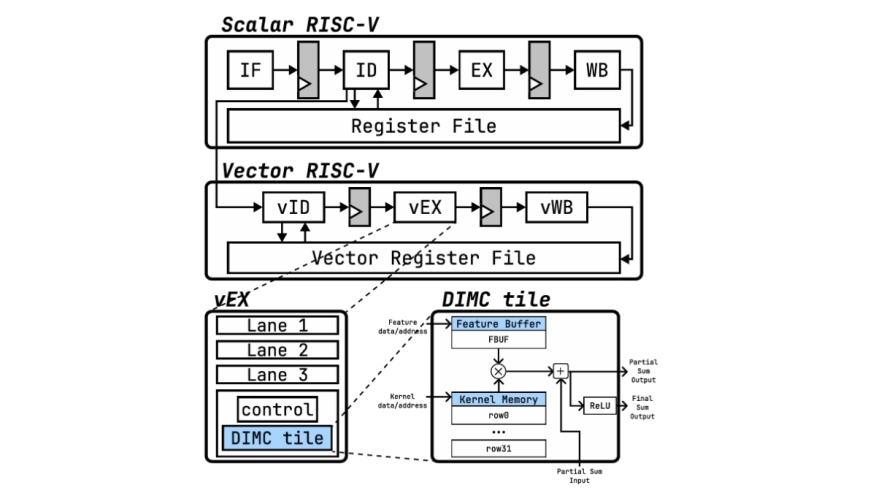

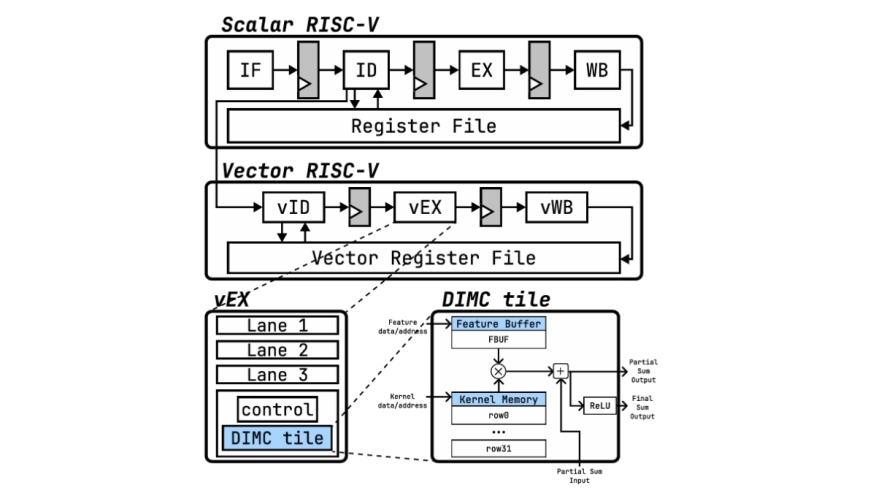

Expanding Deep Learning applications toward edge computing demands architectures capable of delivering high computational performance and efficiency while adhering to tight power and memory constraints. Digital In-Memory Computing (DIMC) addresses this need by moving part of the computation directly within memory arrays, significantly reducing data movement and improving energy efficiency. This paper introduces a novel architecture that extends the Vector RISC-V Instruction Set Architecture (ISA) to integrate a tightly coupled DIMC unit directly into the execution stage of the pipeline, to accelerate Deep Learning inference at the edge. Specifically, the proposed approach adds four custom instructions dedicated to data loading, computation, and write-back, enabling flexible and optimal control of the inference execution on the target architecture. Experimental results demonstrate high utilization of the DIMC tile in Vector RISC-V and sustained throughput across the ResNet-50 model, achieving a peak performance of 137 GOP/s. The proposed architecture achieves a speedup of 217× over the baseline core and 50× area-normalized speedup even when operating near the hardware resource limits. The experimental results confirm the high potential of the proposed architecture as a scalable and efficient solution to accelerate Deep Learning inference on the edge.


Index Terms—AI Accelerator, RISC-V Architecture, Instruction Set Extension, Digital In-Memory Computing, Vector Processors

