FengHuang: Next-Generation Memory Orchestration for AI Inferencing

By Jiamin Li, Lei Qu, Tao Zhang, Grigory Chirkov, Shuotao Xu, Peng Cheng, Lidong Zhou

Microsoft Research

Abstract

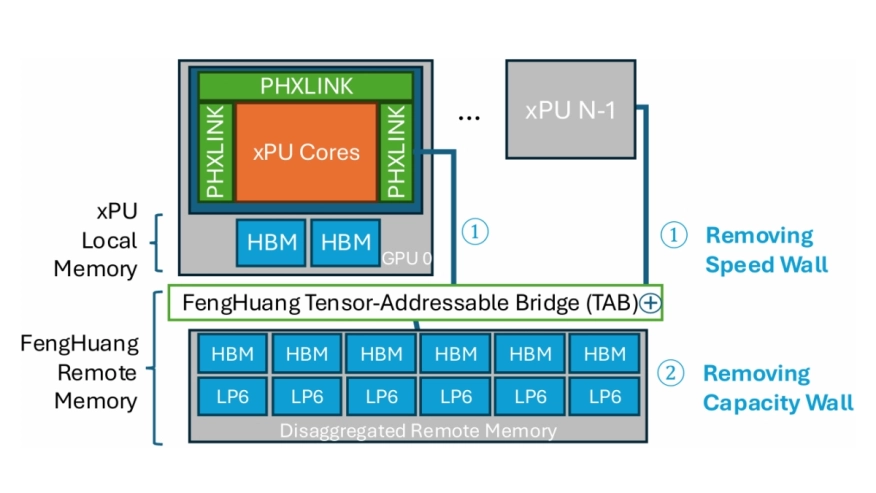

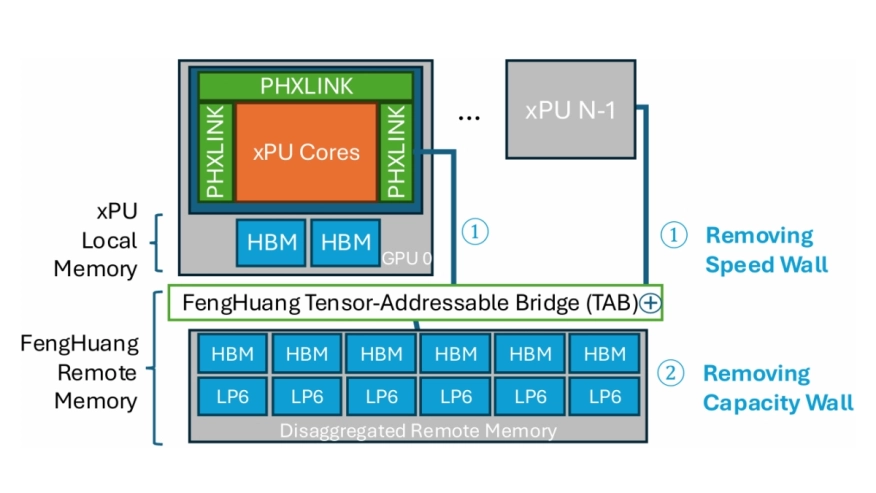

This document presents a vision for a novel AI infrastructure design that has been initially validated through inference simulations on state-of-the-art large language models. Advances in deep learning and specialized hardware have driven the rapid growth of large language models (LLMs) and generative AI systems. However, traditional GPU-centric architectures scale poorly for inference workloads because of limits on memory capacity, memory bandwidth, and interconnect scaling. To address these limits, we propose the FengHuang Platform, a disaggregated AI infrastructure platform for AI inference. FengHuang features a multi-tier shared-memory architecture that combines high-speed local memory with centralized, disaggregated remote memory, enhanced by active tensor paging and near-memory compute for tensor operations. In simulation, FengHuang achieves up to a 93% reduction in local memory capacity, 50% GPU compute savings, and 16x to 70x faster inter-GPU communication compared to conventional GPU scaling. Across workloads such as GPT-3, Grok-1, and QWEN3-235B, FengHuang reduces the number of GPUs required by up to 50% while maintaining end-user performance, offering a scalable, flexible, and cost-effective solution for AI inference infrastructure. FengHuang is positioned as a rack-level scale-up solution for AI infrastructure. Its open, heterogeneous design avoids vendor lock-in and improves supply chain flexibility, enabling significant reductions in infrastructure and power costs.
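To make the idea of a small local tier backed by a large remote tier concrete, the following is a minimal toy sketch, not the FengHuang mechanism itself. The class name `TwoTierTensorStore`, its methods, and the least-recently-used eviction policy are all assumptions introduced for illustration; the abstract does not specify how active tensor paging chooses what to move.

```python
from collections import OrderedDict


class TwoTierTensorStore:
    """Toy model (hypothetical): a small local tier backed by a large remote tier."""

    def __init__(self, local_capacity_bytes):
        self.local_capacity = local_capacity_bytes
        self.local = OrderedDict()  # name -> size, ordered from least to most recently used
        self.remote = {}            # name -> size
        self.page_ins = 0           # number of transfers from remote to local

    def register(self, name, size_bytes):
        # Assumption: every tensor initially lives in remote memory.
        self.remote[name] = size_bytes

    def access(self, name):
        """Ensure the tensor is in local memory; return 'hit' or 'page-in'."""
        if name in self.local:
            self.local.move_to_end(name)  # mark as most recently used
            return "hit"
        size = self.remote[name]
        if size > self.local_capacity:
            raise ValueError(f"{name} does not fit in local memory")
        # Evict least recently used tensors back to remote until there is room.
        while sum(self.local.values()) + size > self.local_capacity:
            victim, victim_size = self.local.popitem(last=False)
            self.remote[victim] = victim_size
        del self.remote[name]
        self.local[name] = size
        self.page_ins += 1
        return "page-in"


# Four equally sized "layers", but local memory holds only two at a time.
store = TwoTierTensorStore(local_capacity_bytes=2)
for i in range(4):
    store.register(f"layer{i}", 1)

results = [store.access(f"layer{i}") for i in range(4)]
results.append(store.access("layer3"))  # already resident, so no transfer
print(results, store.page_ins, list(store.local))
```

In this toy setup the local tier is half the size of the full working set, and the cost of that saving shows up as page-in traffic. How much traffic a real system incurs depends on its paging policy and interconnect, which this sketch does not model.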

