Heterogeneous Multicore using Cadence IP

Many of today’s embedded applications need more compute than a single core can deliver, so they require multiple cores (compute units). These applications also run a mix of workload types. A heterogeneous multicore system lets designers reduce energy and area costs while meeting performance requirements across those workloads. Processing data on multiple cores also places heavy demands on interconnect and memory bandwidth.

This blog presents a heterogeneous multicore system built with a RISC-V host CPU and Cadence IP: Xtensa DSPs and the Janus Network-on-Chip (NoC). Although this example uses a RISC-V CPU, any other ISA with similar capabilities can also serve as the host CPU.

Heterogeneous Architecture

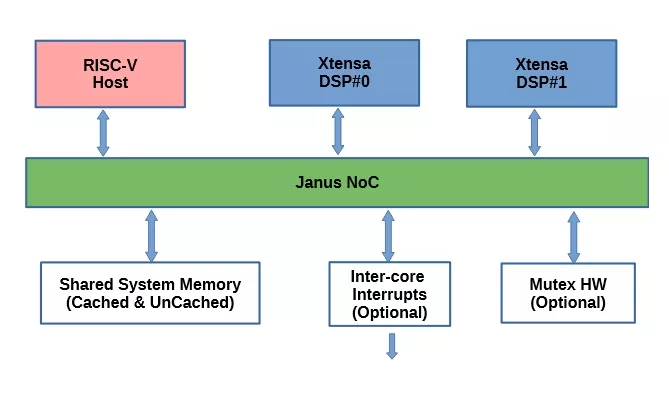

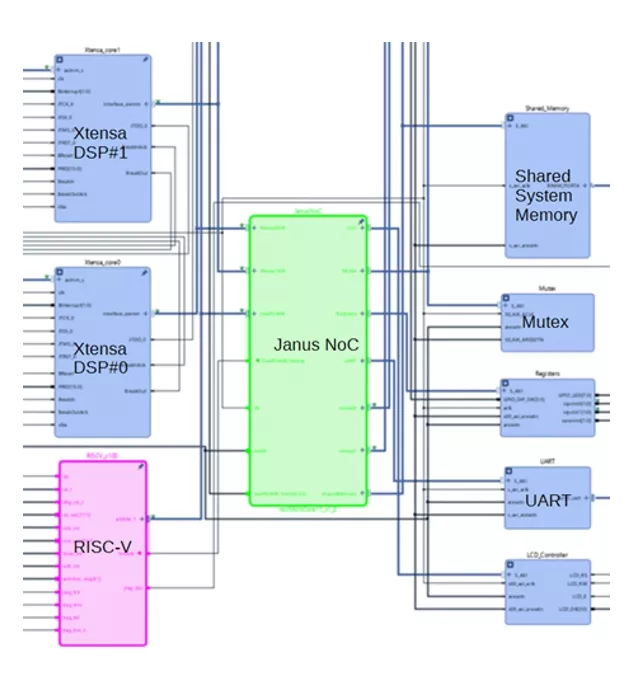

Figure 1 depicts a sample architecture with one RISC-V core and two Xtensa DSPs. A configurable Janus NoC connects all the system components.

Figure 1 - System Block Diagram

The RISC-V specification defines a range of ISA options and extensions, so RISC-V processors can range from simple microcontrollers to cache-coherent Symmetric Multiprocessing (SMP) clusters.

The designer can select either an Xtensa CPU or an Xtensa-based DSP with an ISA optimized for Baseband/Radar, Vision/AI, or Audio applications. Xtensa DSPs are VLIW and SIMD machines. Depending on the application workload, a system architect can pick a SIMD width that maximizes hardware utilization while meeting the area budget.

To optimize the system for power, performance, and area (PPA), Xtensa offers power-saving features such as Wait-for-Interrupt (WAITI) and Power Shut Off (PSO). Custom instructions and hardware accelerators can also be added to Xtensa DSPs, allowing the system architect to significantly improve performance with minimal impact on area and power. Together, these power-saving features and acceleration options help system architects meet or exceed their design goals.

The designer can create an interconnect based on the Janus NoC, which supports multiple system interface types (AXI4, ACE-Lite, and AHB), multiple clock domains, and multiple physical partitions. A configurable NoC enables designers to optimize critical paths for bandwidth and latency in their heterogeneous multicore environment. NoCs are especially valuable when facing wire congestion and timing closure issues, which are common in large SoCs.

Data Sharing

In a multicore environment, cores must share data to divide the application workload. In a heterogeneous system, cores with different ISAs and system interfaces must also agree on a common locking mechanism. Xtensa DSPs offer an AXI initiator exclusive-access configuration option. RISC-V cores that implement the RISC-V “A” (atomics) extension can generate exclusive bus accesses. Janus NoC supports exclusive access on both its AXI and AHB interfaces. Locking can therefore be built on exclusive memory accesses. If the host does not support atomic instructions, a hardware mutex is needed instead.
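At the C level, a lock built on exclusive accesses can be written as a compare-and-swap spinlock using C11 `<stdatomic.h>`. The sketch below makes three assumptions:

- Both the RISC-V and Xtensa toolchains provide C11 atomics.
- Each toolchain lowers compare-and-swap to the exclusive or atomic instructions its core is configured with.
- The lock word lives in the uncached memory-lock region of the shared map.

All of these should be confirmed against each toolchain's documentation. The names `shared_lock_t` and `shared_lock_*` are illustrative only. A system without atomics would replace them with accesses to a hardware mutex block.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Lock word shared by all cores. Assumption: on the real system it is placed
   in the uncached memory-lock region of the shared memory map. */
typedef struct {
    atomic_uint owner; /* 0 = free, otherwise the ID of the holding core */
} shared_lock_t;

static void shared_lock_init(shared_lock_t *l) {
    atomic_init(&l->owner, 0u);
}

/* Single attempt: succeeds only if the lock is currently free. */
static bool shared_lock_try(shared_lock_t *l, unsigned core_id) {
    unsigned expected = 0u;
    assert(core_id != 0u); /* 0 is reserved to mean "free" */
    return atomic_compare_exchange_strong_explicit(
        &l->owner, &expected, core_id,
        memory_order_acquire, memory_order_relaxed);
}

/* Spin until the lock is obtained. */
static void shared_lock_acquire(shared_lock_t *l, unsigned core_id) {
    while (!shared_lock_try(l, core_id)) {
        /* Busy-wait; a real system might back off or wait for an event. */
    }
}

/* Release; only the current owner may do this. */
static void shared_lock_release(shared_lock_t *l, unsigned core_id) {
    assert(atomic_load_explicit(&l->owner, memory_order_relaxed) == core_id);
    atomic_store_explicit(&l->owner, 0u, memory_order_release);
}
```

Storing the owner's core ID rather than a plain 1 costs nothing and makes a mismatched release easy to catch during bring-up.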

Shared System Memory

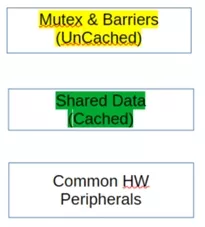

Figure 2 illustrates a shared memory map. Data shared between the RISC-V and Xtensa cores, highlighted in green, can be cached by both cores. A coherent fabric is possible, but it requires significant additional area. Alternatively, a non-coherent fabric such as Janus NoC can be used, with software maintaining coherency through explicit cache maintenance. The Xtensa SDK includes cache flush APIs, described in the system software reference manual, and most RISC-V SDKs provide similar APIs. Both the RISC-V and Xtensa cores need to access a shared memory space, but they do not require identical views of it. Memory locks, highlighted in yellow in Figure 2, are uncached by all cores.

Figure 2 - Shared System Memory Map
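With a non-coherent fabric, the usual pattern is:

1. The producer writes back its cached copy of a shared buffer after writing it.
2. The consumer invalidates its cached copy before reading.

The following is a minimal sketch, not SDK code. `cache_writeback` and `cache_invalidate` are hypothetical hooks, stubbed out here so the example runs on a host. On Xtensa they would map to the HAL's data-cache region writeback and invalidate routines (for example `xthal_dcache_region_writeback` and `xthal_dcache_region_invalidate`; check the system software reference manual). On RISC-V they would map to whatever the SDK provides. `CACHE_LINE` is an assumed line size.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define CACHE_LINE 64u /* assumption: data-cache line size on both cores */

/* Hypothetical portability hooks. On the target they would call each SDK's
   cache-maintenance routines; here they are no-ops so the sketch runs on a host. */
static void cache_writeback(const void *addr, size_t len) { (void)addr; (void)len; }
static void cache_invalidate(const void *addr, size_t len) { (void)addr; (void)len; }

/* Shared buffer aligned and sized to whole cache lines, so maintenance on it
   never writes back or discards unrelated neighbouring data. */
typedef struct {
    _Alignas(CACHE_LINE) uint8_t data[4 * CACHE_LINE];
} shared_buf_t;

/* Producer: write into the cached copy, then push it out to shared memory. */
static void publish(shared_buf_t *buf, const void *src, size_t len) {
    assert(len <= sizeof buf->data);
    memcpy(buf->data, src, len);
    cache_writeback(buf->data, sizeof buf->data);
}

/* Consumer: drop any stale cached lines, then read the fresh data. */
static void consume(shared_buf_t *buf, void *dst, size_t len) {
    assert(len <= sizeof buf->data);
    cache_invalidate(buf->data, sizeof buf->data);
    memcpy(dst, buf->data, len);
}
```

The consumer should call `consume` only after it learns, through a lock or a flag in uncached memory, that the producer has finished `publish`.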

System memory bandwidth and capacity are another challenge when building a heterogeneous system with multiple compute units. For Double Data Rate (DDR) and High Bandwidth Memory (HBM) system memory options, please visit the Cadence Memory Interface IP pages.

Runtime Environment

Several runtime options are available. Xtensa DSPs support multiple RTOSs, such as FreeRTOS, Zephyr, and XOS, and SMP RTOS versions are also available for select Xtensa DSP SMP clusters. A RISC-V microcontroller may run bare metal, while several RTOS and Linux options are available on higher-end RISC-V CPUs. Be aware that a non-coherent fabric can pose challenges for any RTOS that spans multiple cores, because such RTOSs assume cache coherency.

Boot up

Typically, a system controller (RISC-V in this example) acts as the primary boot-up core, providing implicit reset sequencing. RISC-V stalls the Xtensa DSPs at reset, authenticates and loads the image, initializes software synchronization data structures, and de-asserts the stall. The Xtensa DSP can be configured to place the reset vector in either local instruction memory or system memory. When both the RISC-V and Xtensa cores boot independently from a ROM, hardware synchronization is required before all cores can start using software synchronization data structures. Note that the Xtensa SDK includes a Linker Support Package (LSP) that unpacks the ROM image into the execution region.
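The host-driven boot sequence described above can be sketched as a single function on the RISC-V side. Everything here is hypothetical and chosen for illustration:

- `sysctl_regs_t` models a system-controller register that drives each DSP's stall input.
- `sync_block_t` stands in for the software synchronization structures.
- `image_authentic` is a placeholder for real signature verification.

On a real SoC the register would be memory-mapped at an address defined by the integration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define NUM_DSPS   2u
#define BOOT_MAGIC 0x424F4F54u /* arbitrary marker: "BOOT" in ASCII */

/* Hypothetical system-controller register: bit n = 1 holds DSP n stalled. */
typedef struct {
    volatile uint32_t runstall;
} sysctl_regs_t;

/* Hypothetical shared synchronization data initialized by the host. */
typedef struct {
    volatile uint32_t boot_magic;
    volatile uint32_t dsp_ready[NUM_DSPS];
} sync_block_t;

/* Placeholder only: a real system would verify a cryptographic signature. */
static bool image_authentic(const uint8_t *img, size_t len) {
    return img != NULL && len > 0;
}

static bool boot_dsp(sysctl_regs_t *sys, unsigned dsp,
                     uint8_t *dsp_mem, size_t mem_len,
                     const uint8_t *img, size_t img_len, sync_block_t *sync) {
    assert(dsp < NUM_DSPS);
    sys->runstall |= 1u << dsp;            /* 1. keep the DSP stalled */
    if (!image_authentic(img, img_len) || img_len > mem_len)
        return false;                      /* 2a. reject a bad image */
    memcpy(dsp_mem, img, img_len);         /* 2b. load the image */
    sync->dsp_ready[dsp] = 0;              /* 3. initialize shared sync data */
    sync->boot_magic = BOOT_MAGIC;
    /* On target: write back caches and fence here so the DSP sees the image. */
    sys->runstall &= ~(1u << dsp);         /* 4. release the stall */
    return true;
}
```

If authentication fails, the DSP simply stays stalled, which is a safe default.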

Off-Load Engine

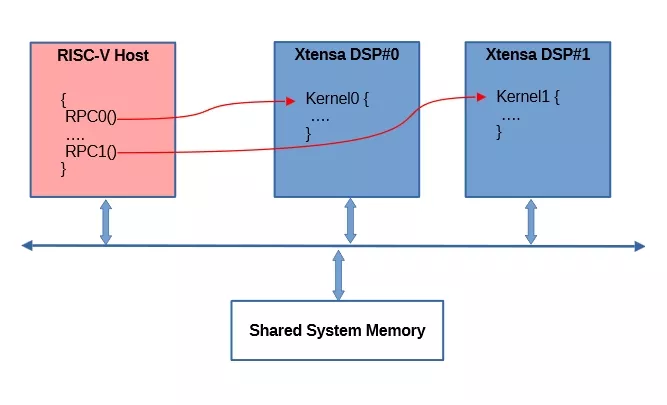

Figure 3 depicts an offload engine in which the RISC-V application dispatches work to each DSP via a Remote Procedure Call (RPC). An image processing application might exploit data-level parallelism across the DSPs, while a baseband signal processing application might instead implement task pipelining. The Xtensa SDK includes an RPC library, XRPC, which has a DSP side and a host side. The XRPC DSP build runs on the Xtensa cores, and the XRPC host build runs on the RISC-V cores with minimal porting effort. The host and DSP exchange input data and results through shared memory. XRPC supports both interrupt-based and flag-based synchronization between the host and the DSP.

Figure 3 - Offload Engine
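To illustrate what flag-based synchronization between host and DSP involves, here is a minimal single-slot mailbox sketch. It is **not** the XRPC API; every name in it (`mailbox_t`, `host_post`, `host_poll`, `dsp_service`, the `MBOX_*` states) is hypothetical. The assumption is that the mailbox sits in uncached shared memory. The C11 atomics here only express the required ordering of the data writes relative to the state flag; both host and DSP code are shown in one file so the sketch can run on a host.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

enum { MBOX_IDLE = 0, MBOX_REQUEST = 1, MBOX_DONE = 2 };

/* Hypothetical single-slot mailbox in uncached shared memory. */
typedef struct {
    atomic_uint state; /* MBOX_IDLE -> MBOX_REQUEST -> MBOX_DONE -> MBOX_IDLE */
    uint32_t cmd;      /* which kernel to run */
    uint32_t arg;      /* input */
    uint32_t result;   /* output */
} mailbox_t;

static void mailbox_init(mailbox_t *mb) {
    atomic_init(&mb->state, MBOX_IDLE);
    mb->cmd = mb->arg = mb->result = 0;
}

/* Host: fill in the request, then publish it by flipping the flag. */
static void host_post(mailbox_t *mb, uint32_t cmd, uint32_t arg) {
    assert(atomic_load_explicit(&mb->state, memory_order_acquire) == MBOX_IDLE);
    mb->cmd = cmd;
    mb->arg = arg;
    atomic_store_explicit(&mb->state, MBOX_REQUEST, memory_order_release);
}

/* Host: returns 1 and the result once the DSP has finished, else 0. */
static int host_poll(mailbox_t *mb, uint32_t *result) {
    if (atomic_load_explicit(&mb->state, memory_order_acquire) != MBOX_DONE)
        return 0;
    *result = mb->result;
    atomic_store_explicit(&mb->state, MBOX_IDLE, memory_order_release);
    return 1;
}

/* DSP: one iteration of its service loop. */
static void dsp_service(mailbox_t *mb) {
    if (atomic_load_explicit(&mb->state, memory_order_acquire) != MBOX_REQUEST)
        return;
    /* Stand-in kernels: cmd 0 doubles the argument, anything else increments it. */
    mb->result = (mb->cmd == 0) ? mb->arg * 2u : mb->arg + 1u;
    atomic_store_explicit(&mb->state, MBOX_DONE, memory_order_release);
}
```

With interrupt-based synchronization, the DSP would additionally raise an interrupt to the host instead of the host polling `state`.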

Dynamic Kernel Loading

The Xtensa SDK also includes a library loader. Combining RPC with the library loader allows kernels to be loaded onto the DSPs dynamically; for example, the host application can load Kernel0 and Kernel1 in Figure 3 before execution. Each DSP kernel is precompiled as a position-independent library.
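On the host side, combining the loader with RPC mostly means tracking which kernel is resident on each DSP and loading one only when needed. The sketch below assumes a hypothetical `load_fn` callback standing in for the SDK library loader, whose actual interface is not shown here. `stub_load` is a host-only placeholder that lets the example run.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define NUM_DSPS 2u

/* Hypothetical loader hook: places a position-independent kernel image in the
   DSP's memory and returns true on success. Stands in for the SDK loader. */
typedef bool (*load_fn)(unsigned dsp, int kernel_id);

/* Host-only stub so the sketch runs without hardware. */
static bool stub_load(unsigned dsp, int kernel_id) {
    (void)dsp;
    return kernel_id >= 0;
}

typedef struct {
    int resident[NUM_DSPS]; /* kernel currently loaded on each DSP, -1 = none */
    load_fn load;
    unsigned loads;         /* number of loads performed */
} kernel_mgr_t;

static void kmgr_init(kernel_mgr_t *m, load_fn load) {
    for (size_t i = 0; i < NUM_DSPS; i++)
        m->resident[i] = -1;
    m->load = load;
    m->loads = 0;
}

/* Ensure `kernel_id` is resident on `dsp` before dispatching an RPC to it. */
static bool kmgr_ensure(kernel_mgr_t *m, unsigned dsp, int kernel_id) {
    assert(dsp < NUM_DSPS);
    if (m->resident[dsp] == kernel_id)
        return true;                  /* already loaded: nothing to do */
    if (!m->load(dsp, kernel_id))
        return false;                 /* keep the previous residency on failure */
    m->resident[dsp] = kernel_id;
    m->loads++;
    return true;
}
```

Because the kernels are position-independent, the same image can be loaded onto either DSP. Only the residency table decides where it goes.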

Optimized, Language-Agnostic Compilation

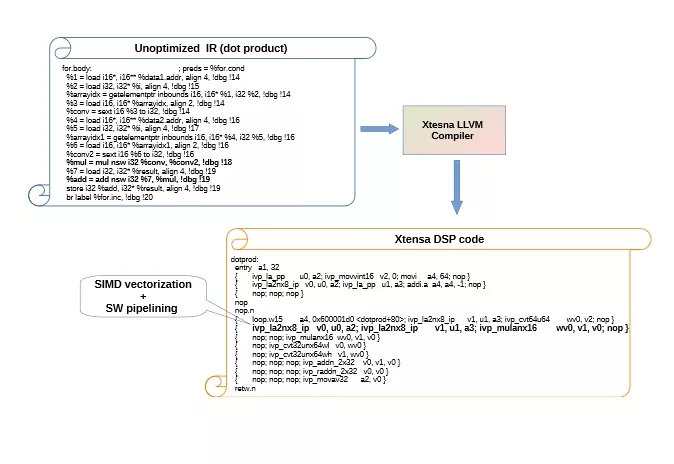

Application developers can specify DSP code in their preferred programming language. Xtensa LLVM-based tools consume unoptimized intermediate representation (IR) to generate optimized machine code, as shown in Figure 4.

Figure 4 - IR Translation

Development Platform

At the pre-silicon stage, development teams use simulation and emulation platforms for architecture exploration, early software development, and verification/integration. For hybrid platforms, please visit Helium Virtual and Hybrid Studio.

SystemC Simulation

While RTL simulation is an option, functionally accurate SystemC simulation is faster. The Xtensa SDK includes the Xtensa SystemC (XTSC) library, with models of the DSP core and other system components such as memory. The Janus NoC package includes a functional NoC model built from XTSC components, and an open-source RISC-V model can represent the host CPU. Customers can readily build a SystemC model of their heterogeneous multicore system from these components.

FPGA Emulation

For cycle-accurate profiling, customers can map their heterogeneous design onto an FPGA; a video tutorial on Xtensa DSP FPGA emulation is available. Figure 5 shows a design mapped onto a Xilinx KC705 board.

Figure 5 - FPGA Emulation

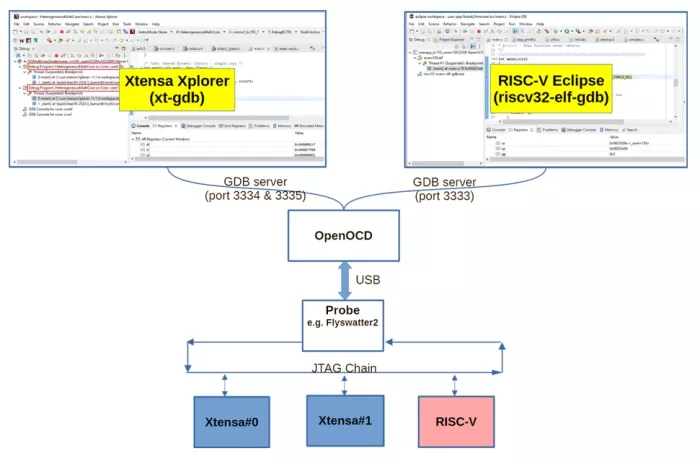

Figure 6 illustrates a debug scheme. OpenOCD connects to the target cores via the JTAG probe. RISC-V and Xtensa GDB instances connect to their respective target cores via OpenOCD GDB server ports. Both the RISC-V and Xtensa IDEs provide standard source-level debugging. Each core can be reset independently. Synchronous debugging is supported. Note that two Xtensa DSP debug contexts are annotated in red in Figure 6.

Figure 6 - Debug Setup

Summary

As shown, customers can easily design, validate, and debug a heterogeneous multicore system using Cadence IP and tools, even when combined with other IP. Please visit the Cadence support site for more information on building your next optimized heterogeneous multicore application.
