Processor IP

Welcome to the ultimate Processor IP hub!

Our vast directory of Processor IP cores includes AI Processor IP, GPU IP, NPU IP, DSP IP, Arm Processor, RISC-V Processor, and much more.

All offers in Processor IP

667 Processor IP from 113 vendors (1 - 10)

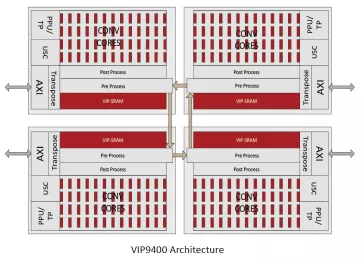

Specialized Video Processing NPU IP for SR, NR, Demosaic, AI ISP, Object Detection, Semantic Segmentation

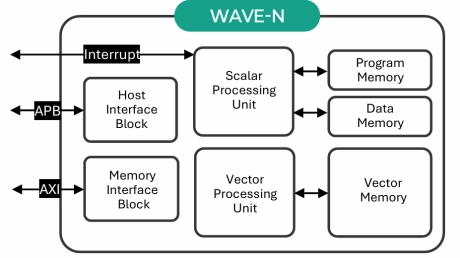

- WAVE-N is a high-performance, video-specialized NPU IP designed to deliver real-time, deep learning-based image enhancement for edge devices.

- By utilizing a proprietary 'Line-by-Line' processing architecture, it significantly reduces DRAM bandwidth and achieves 4x to 10x faster processing speeds compared to conventional NPUs.
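The listing does not describe how the proprietary 'Line-by-Line' architecture works internally. As a rough illustration of the general line-buffer idea only (an assumption, not WAVE-N's actual design), the sketch below applies a 3x3 box filter while holding just three image rows at a time instead of a full frame, which is the kind of approach that can cut external memory traffic. The names `stream_box3` and `frame_rows` are hypothetical.

```python
from collections import deque


def stream_box3(rows, width):
    """Yield 3x3 box-filtered interior rows while holding only three input rows."""
    window = deque(maxlen=3)  # rolling line buffer: the only pixel storage kept
    for row in rows:          # `rows` may be a generator; no full frame is stored
        window.append(row)
        if len(window) == 3:
            yield [
                sum(window[dy][x + dx] for dy in range(3) for dx in (-1, 0, 1)) // 9
                for x in range(1, width - 1)
            ]


def frame_rows(height, width):
    """Hypothetical pixel source that produces one row at a time."""
    for y in range(height):
        yield [y * width + x for x in range(width)]


# A 5x6 test frame yields 3 filtered rows of 4 pixels each.
out = list(stream_box3(frame_rows(5, 6), width=6))
```

Because each output row depends only on the three most recent input rows, the working set stays proportional to the line width rather than to the frame size.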

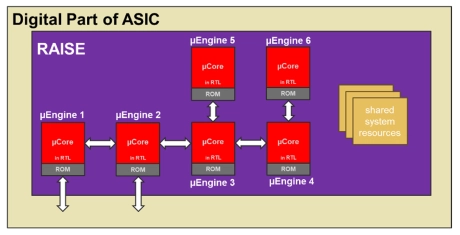

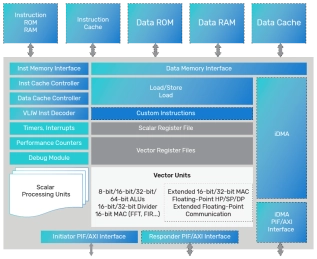

Configurable CPU tailored precisely to your needs

- Increased efficiency by converting digital design into software development

- Hardware-independent, parallel software development

- Rapid system development and evaluation:

- Software debug and tests with RAISE Simulator

HiFi iQ DSP

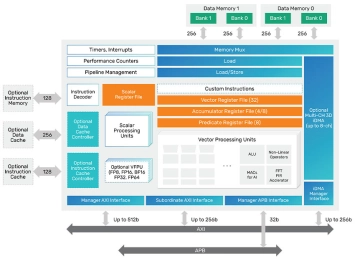

- 8X Increased AI Performance: Run entire voice AI networks efficiently with the configurable AI-MAC

- 2X Increased Raw Compute Performance: Wider SIMD allows more computations

- Expanded Data Type Support: Efficiently run cutting-edge voice AI models in FP8, BF16, and more

Tensilica ConnX 120 DSP

- Certified ISO 26262:2018 ASIL-compliant

- VLIW parallelism issuing multiple concurrent operations per cycle

- 256-bit SIMD

- Up to 64 MAC

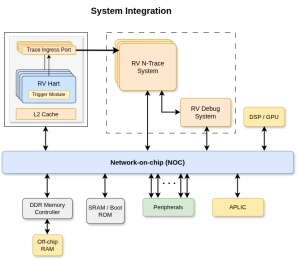

RISC-V Debug & Trace IP

- 10xEngineers Debug & N-Trace IP delivers a unified Debug + Trace solution that provides full-system visibility with low overhead and multi-hart awareness.

- Standards-compliant debug, real-time trace, and flexible triggering significantly reduce bring-up time and simplify system integration.

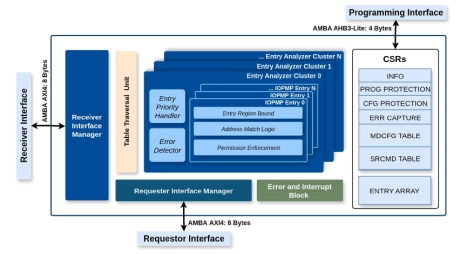

RISC-V IOPMP IP

- The I/O Physical Memory Protection (IOPMP) unit is a hardware-based access control mechanism designed to safeguard memory regions in RISC-V SoCs.

- It ensures only authorized devices and masters can access sensitive memory areas, enabling secure and reliable system operation.
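The access-control behaviour described above can be modelled in a few lines. This is a simplified sketch under stated assumptions: the `Rule` fields, the permission encoding, the first-match policy, and the source IDs `DMA0` and `NIC` are illustrative, and do not reflect the register layout of the RISC-V IOPMP specification or of this vendor's IP.

```python
from dataclasses import dataclass

READ, WRITE = 0x1, 0x2  # permission bits (illustrative encoding, not from a spec)


@dataclass(frozen=True)
class Rule:
    base: int           # first byte address covered by the rule
    size: int           # region length in bytes
    sources: frozenset  # IDs of the bus masters this rule applies to
    perms: int          # bitwise OR of READ / WRITE


def check_access(rules, source_id, addr, length, perm):
    """Return True if the first matching rule grants `perm`; deny by default."""
    for rule in rules:
        in_region = rule.base <= addr and addr + length <= rule.base + rule.size
        if in_region and source_id in rule.sources:
            return (rule.perms & perm) == perm
    return False  # no rule covers this access, so it is rejected


DMA0, NIC = 1, 2  # hypothetical source IDs
rules = [
    Rule(base=0x8000_0000, size=0x1000, sources=frozenset({DMA0}), perms=READ | WRITE),
    Rule(base=0x8000_1000, size=0x1000, sources=frozenset({DMA0, NIC}), perms=READ),
]
```

With these rules, `DMA0` may write to the first region, `NIC` may only read the second, and any access that is not fully contained in a matching region is denied.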

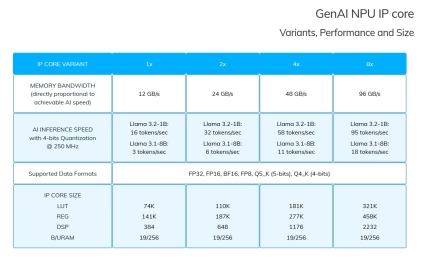

Embedded AI accelerator IP

- The GenAI IP is the smallest version of our NPU, tailored to small devices such as FPGAs and Adaptive SoCs, where the maximum frequency is limited (<=250 MHz) and memory bandwidth is lower (<=100 GB/s).

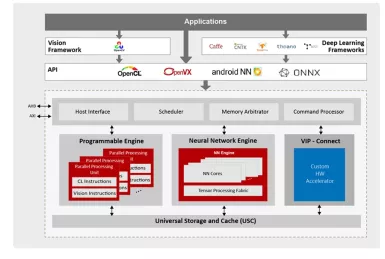

NPU IP for AI Vision and AI Voice

- 128-bit vector processing unit (shader + ext)

- OpenCL 3.0 shader instruction set

- Enhanced vision instruction set (EVIS)

- INT 8/16/32b, Float 16/32b

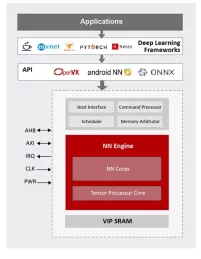

NPU IP for Wearable and IoT Market

- ML inference engine for deeply embedded systems

- NN Engine

- Supports popular ML frameworks

- Supports a wide range of NN algorithms and is flexible in layer ordering

NPU IP for Data Center and Automotive

- 128-bit vector processing unit (shader + ext)

- OpenCL 1.2 shader instruction set

- Enhanced vision instruction set (EVIS)

- INT 8/16/32b, Float 16/32b in PPU

- Convolution layers