AI Processor IP Cores

AI Processor IP cores provide high-performance processing power for AI algorithms, enabling real-time data analysis, pattern recognition, and decision-making. Supporting popular AI frameworks, AI Processor IP cores are ideal for applications in edge computing, autonomous vehicles, robotics, and smart devices.

76 AI Processor IP Cores from 38 vendors (1 - 10)

-

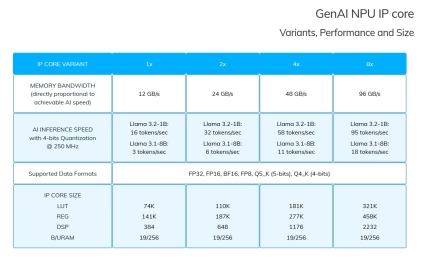

Embedded AI accelerator IP

- The GenAI IP is the smallest version of our NPU, tailored to small devices such as FPGAs and Adaptive SoCs, where the maximum frequency is limited (<=250 MHz) and memory bandwidth is lower (<=100 GB/s).

-

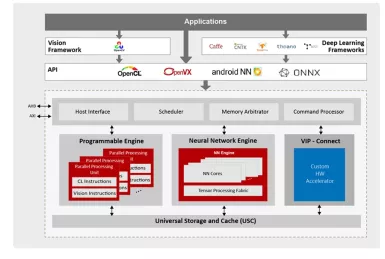

NPU IP for AI Vision and AI Voice

- 128-bit vector processing unit (shader + ext)

- OpenCL 3.0 shader instruction set

- Enhanced vision instruction set (EVIS)

- INT 8/16/32b, Float 16/32b

-

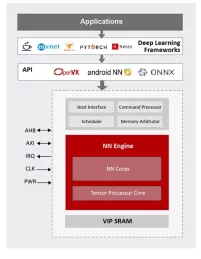

NPU IP for Wearable and IoT Market

- ML inference engine for deeply embedded systems

- NN Engine

- Supports popular ML frameworks

- Supports a wide range of NN algorithms, with flexible layer ordering

-

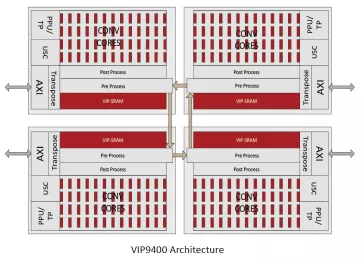

NPU IP for Data Center and Automotive

- 128-bit vector processing unit (shader + ext)

- OpenCL 1.2 shader instruction set

- Enhanced vision instruction set (EVIS)

- INT 8/16/32b, Float 16/32b in PPU

- Convolution layers

-

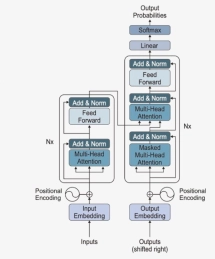

LLM AI IP Core

- For sophisticated workloads, DeepTransformCore is optimized for language and vision applications.

- Supporting both encoder and decoder transformer architectures with flexible DRAM configurations and FPGA compatibility, DeepTransformCore eliminates complex software integration burdens, empowering customers to rapidly develop custom AI SoC (System-on-chip) designs with unprecedented efficiency.

-

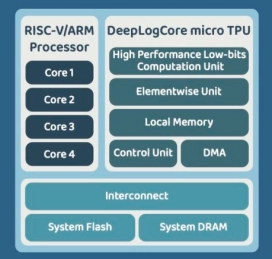

CNN AI IP Core

- DeepMentor has developed an AI IP that combines low-power and high-performance features with a RISC-V SoC.

- This integration allows customers to quickly create a unique AI SoC without worrying about software integration or system development issues.

- DeepLogCore supports both RISC-V and ARM systems, enabling faster and more flexible development.

-

Multi-core capable 64-bit RISC-V CPU with vector extensions

- The SiFive® Intelligence™ X180 core IP products are designed to meet the increasing requirements of embedded IoT and AI at the far edge.

- With this 64-bit version, the X100 series IP delivers higher performance and better integration with larger memory systems.

-

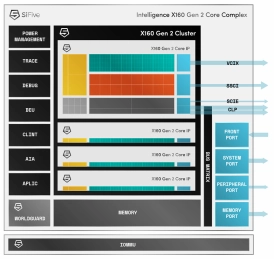

Multi-core capable 32-bit RISC-V CPU with vector extensions

- The SiFive® Intelligence™ X160 core IP products are designed to meet the increasing requirements of embedded IoT and AI at the far edge.

- With this 32-bit version, the X100 series IP can be optimized for power efficiency and severely area-constrained applications.

-

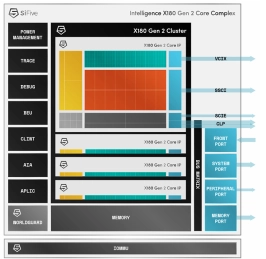

Multi-core capable RISC-V processor with vector extensions

- The SiFive® Intelligence™ X280 Gen 2 is an 8-stage, dual-issue, in-order, superscalar design with wide vector processing (512-bit VLEN / 256-bit DLEN).

- It supports RISC-V Vectors v1.0 (RVV 1.0) and SiFive Intelligence Extensions to accelerate critical AI/ML operations.

-

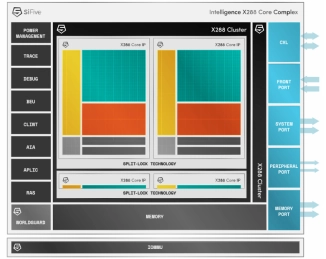

Multi-core capable processor

- The SiFive® Intelligence™ X288 is a multi-core capable processor extensively optimized to meet the harsh environmental and stringent reliability requirements of aerospace and defense applications.

- The X288 features the powerful combination of a RISC-V 64-bit 8-stage dual-issue in-order scalar pipeline and a 512-bit vector length pipeline.