David vs. Goliath: Can Small Models Win Big with Agentic AI in Hardware Design?

By Shashwat Shankar 1, Subhranshu Pandey 1, Innocent Dengkhw Mochahari 1, Bhabesh Mali 1, Animesh Basak Chowdhury 2, Sukanta Bhattacharjee 1, Chandan Karfa 1

1 Indian Institute of Technology, Guwahati, India

2 NXP USA, Inc.

Abstract

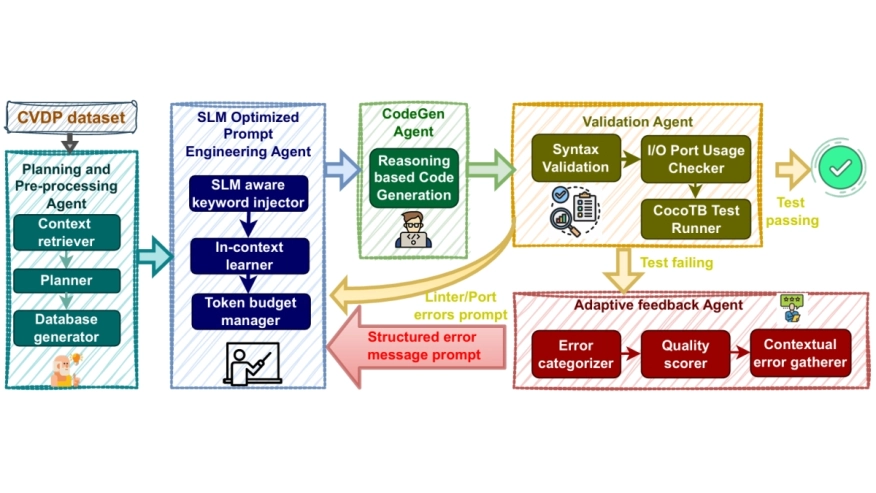

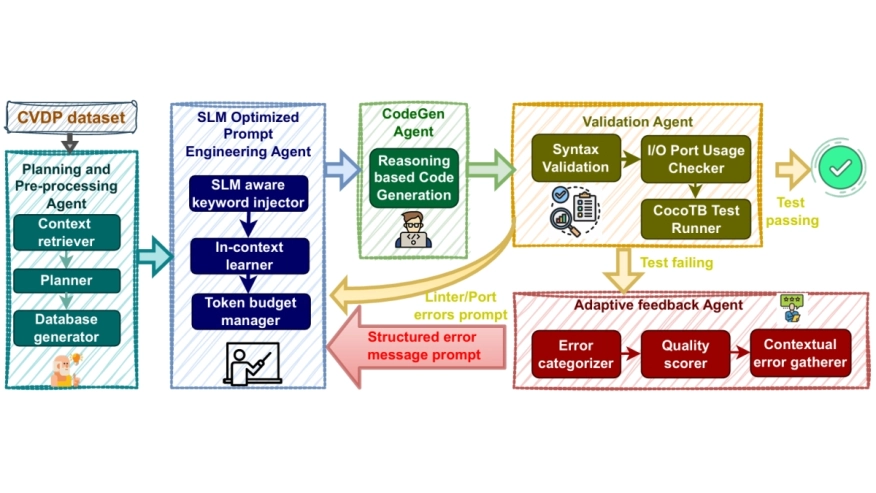

Large Language Model (LLM) inference demands massive compute and energy, making domain-specific tasks expensive and unsustainable. As foundation models keep scaling, we ask: is bigger always better for hardware design? Our work tests this by evaluating Small Language Models coupled with a curated agentic AI framework on NVIDIA's Comprehensive Verilog Design Problems (CVDP) benchmark. Results show that agentic workflows, through task decomposition, iterative feedback, and correction, not only unlock near-LLM performance at a fraction of the cost but also create learning opportunities for agents, paving the way for efficient, adaptive solutions in complex design tasks.

Keywords: AI-assisted Hardware Design, Agentic AI, Large Language Model, Small Language Model, Benchmarking
