Analog Foundation Models

By Julian Büchel 1,2, Iason Chalas 1,2, Giovanni Acampa 1,2, An Chen 3, Omobayode Fagbohungbe 4, Sidney Tsai 3, Kaoutar El Maghraoui 4, Manuel Le Gallo 1, Abbas Rahimi 1, Abu Sebastian 1

1 IBM Research – Zurich

2 ETH Zürich

3 IBM Research – Almaden

4 IBM Thomas J. Watson Research Center

Abstract

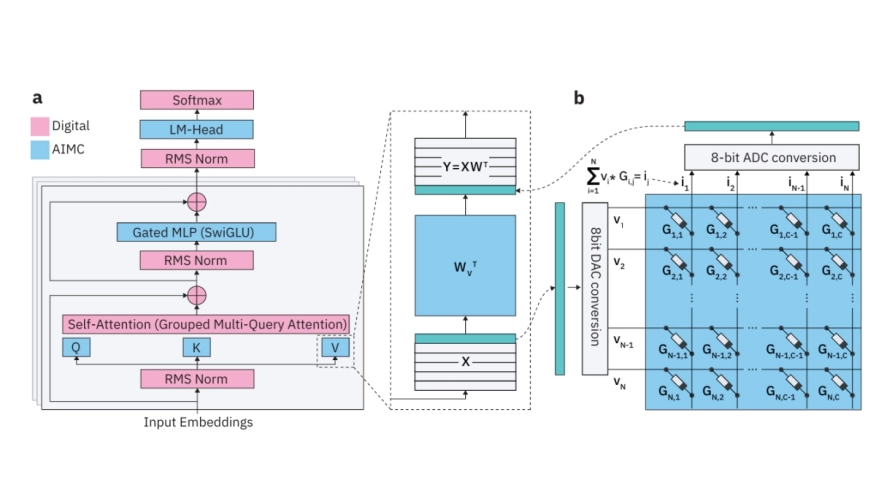

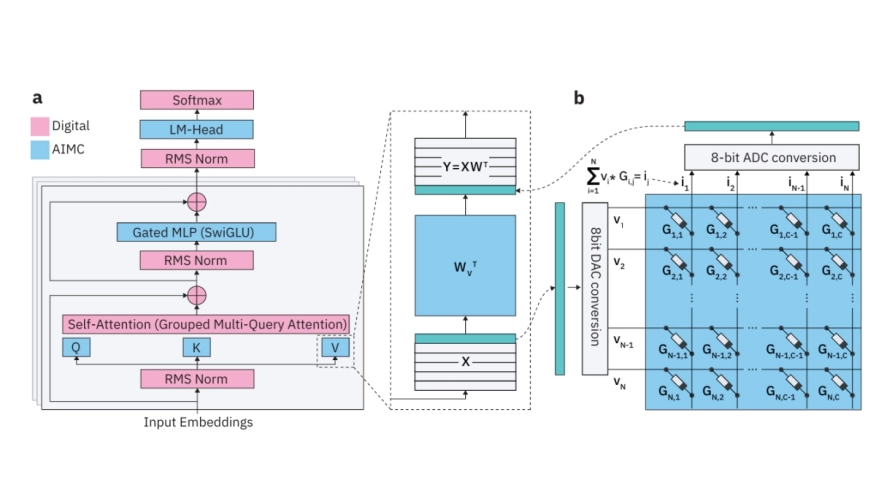

Analog in-memory computing (AIMC) is a promising compute paradigm for improving the speed and power efficiency of neural network inference beyond the limits of conventional von Neumann-based architectures. However, AIMC introduces fundamental challenges such as noisy computations and strict constraints on input and output quantization. Because of these constraints and imprecisions, off-the-shelf LLMs cannot achieve 4-bit-level performance when deployed on AIMC-based hardware. While researchers have previously investigated closing this accuracy gap on small, mostly vision-based models, a generic method applicable to LLMs pre-trained on trillions of tokens does not yet exist. In this work, we introduce a general and scalable method to robustly adapt LLMs for execution on noisy, low-precision analog hardware. Our approach enables state-of-the-art models, including Phi-3-mini-4k-instruct and Llama-3.2-1B-Instruct, to retain performance comparable to 4-bit weight, 8-bit activation baselines, despite the presence of analog noise and quantization constraints. Additionally, we show that, as a byproduct of our training methodology, analog foundation models can be quantized for inference on low-precision digital hardware. Finally, we show that our models also benefit from test-time compute scaling and scale better than models trained with 4-bit weight and 8-bit static input quantization. Our work bridges the gap between high-capacity LLMs and efficient analog hardware, offering a path toward energy-efficient foundation models. Code is available at https://github.com/IBM/analog-foundation-models
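To make the constraints named in the abstract concrete, the following is a minimal NumPy sketch of a matrix-vector product with quantized inputs, perturbed weights, and quantized outputs. It is an illustration only, not the authors' method or the code in the linked repository: the function names `quantize_symmetric` and `noisy_analog_matvec`, the additive Gaussian weight-noise model, the `noise_std` value, and the per-call choice of quantization ranges are all assumptions made for this example.

```python
import numpy as np


def quantize_symmetric(x, bits, max_abs):
    """Uniform symmetric quantization of x to `bits` bits over [-max_abs, max_abs]."""
    if max_abs == 0:
        return np.zeros_like(x, dtype=float)
    levels = 2 ** (bits - 1) - 1          # e.g. 127 for 8 bits, 7 for 4 bits
    scale = max_abs / levels              # step size between adjacent levels
    return np.clip(np.round(x / scale), -levels, levels) * scale


def noisy_analog_matvec(W, x, in_bits=8, out_bits=8, noise_std=0.02, rng=None):
    """Hypothetical model of one analog tile: quantize in, add weight noise, quantize out.

    Assumption: noise is additive Gaussian, scaled by the largest weight magnitude.
    Real hardware noise models are more detailed than this.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Input quantization (stands in for the input converter).
    x_q = quantize_symmetric(x, in_bits, np.max(np.abs(x)))
    # Weight perturbation (stands in for noisy analog weights).
    W_noisy = W + noise_std * np.max(np.abs(W)) * rng.standard_normal(W.shape)
    y = W_noisy @ x_q
    # Output quantization (stands in for the output converter).
    return quantize_symmetric(y, out_bits, np.max(np.abs(y)))
```

Calling `noisy_analog_matvec(W, x)` and comparing the result with `W @ x` gives a rough feel for how quantization and noise perturb a single linear layer; setting `noise_std=0` isolates the effect of quantization alone.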

