BSC executes, for the first time, big encrypted neural networks using Intel Optane Persistent Memory and Intel Xeon Scalable Processors

Until now, the use of homomorphic encryption was limited to encrypted neural networks for mobile devices.

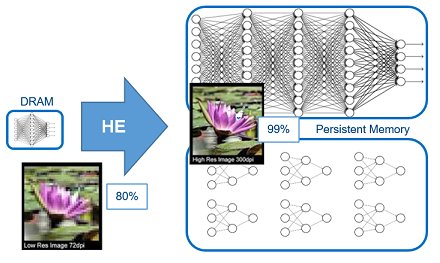

August 31, 2021 -- The Barcelona Supercomputing Center (BSC), together with Intel, has succeeded, for the first time, in efficiently executing big neural networks under encryption, thanks to Intel Optane persistent memory (PMem) and Intel Xeon Scalable processors with built-in AI acceleration. Until now, the size of the main memory supported by current technology had limited the use of homomorphic encryption to small neural network models (up to 1.7 million parameters) designed for mobile devices. The encrypted execution of big neural networks is therefore a major technological advancement.

This type of encryption (homomorphic encryption), which cannot be broken even by quantum computers, allows operations to be performed directly on encrypted data, so the entity operating on the data has no access to its content. Because the data does not need to be decrypted to be operated on, privacy is guaranteed in non-secure environments such as the cloud.
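To make the idea of operating directly on encrypted data concrete, the following is a minimal sketch that uses a toy Paillier cryptosystem. This is an assumption chosen for brevity: it is not the scheme used in the research, it supports only additions and multiplications by plaintext constants, it is not resistant to quantum computers, and the key size is far too small to be secure. The function names are hypothetical. It computes a neuron-style weighted sum over inputs that the computing party never sees in the clear.

```python
import math
import secrets


def keygen(p=1789, q=1861):
    """Build a toy Paillier key pair from two small primes (insecure sizes)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse; valid because the generator is n + 1
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt an integer 0 <= m < n under the public modulus n."""
    n2 = n * n
    r = secrets.randbelow(n - 1) + 1  # random value in [1, n - 1]
    while math.gcd(r, n) != 1:
        r = secrets.randbelow(n - 1) + 1
    return pow(n + 1, m, n2) * pow(r, n, n2) % n2


def decrypt(n, priv, c):
    """Recover the plaintext integer from ciphertext c with the private key."""
    lam, mu = priv
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n


def encrypted_dot(n, enc_inputs, weights):
    """Weighted sum over ciphertexts with plaintext non-negative integer weights.

    Multiplying ciphertexts adds the hidden plaintexts; raising a ciphertext
    to the power w multiplies the hidden plaintext by w.
    """
    n2 = n * n
    acc = encrypt(n, 0)
    for c, w in zip(enc_inputs, weights):
        acc = acc * pow(c, w, n2) % n2
    return acc


if __name__ == "__main__":
    n, priv = keygen()                                  # data owner
    enc_inputs = [encrypt(n, x) for x in [3, 5, 2]]     # data owner encrypts
    enc_result = encrypted_dot(n, enc_inputs, [4, 1, 6])  # untrusted party
    print(decrypt(n, priv, enc_result))  # 3*4 + 5*1 + 2*6 = 29
```

Only the holder of the private key can read the result; the party running `encrypted_dot` handles ciphertexts throughout. Evaluating a full neural network requires a scheme that also supports multiplications between encrypted values, which is where the memory overhead discussed in this article arises.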

The main challenge of homomorphic encryption is the overhead it introduces, in particular the growth in data size, which can be multiplied by a factor of up to 10,000. Intel Optane persistent memory offers much higher capacities than DRAM and much faster access times than other non-volatile memories. While it is not as fast as DRAM, combining the two with an efficient access pattern delivers compelling price/performance benefits.

This new technology can be applied to the private execution of neural networks in untrusted remote environments, such as the cloud. It protects both the intellectual property embodied in the neural network model and the data being processed, which enables compliance with the growing number of data protection laws and regulations. Such data could include personal or medical information, or trade or state secrets.

The research has been carried out by a team of researchers from the BSC, together with an international team from Intel, with members in both Europe and the United States, led by BSC researcher Antonio J. Peña. According to Peña, “this new technology will allow the general use of neural networks in cloud environments, including, for the first time, where indisputable confidentiality is required for the data or the neural network model.”

Peña leads the Accelerators and Communications for High Performance Computing Team in the Computer Sciences Department of the BSC. His research centers on the heterogeneity of hardware and communication resources in high-performance computing.

Fabian Boemer, a technical lead at Intel supporting this research, said: “The computation is both compute-intensive and memory-intensive. To speed up the bottleneck of memory access, we are investigating different memory architectures that allow better near-memory computing. This work is an important first step to solving this often-overlooked challenge. Among other technologies, we are investigating the use of Intel Optane Persistent Memory to keep constantly accessed data close to the processor during the evaluation.”

The scientific article describing this research has been accepted for publication in the journal IEEE Transactions on Computers. It analyzes the execution of the popular ResNet-50 model, which has 25 million parameters and consumes almost 1 TB of memory, more than double what is available in a computing node of the MareNostrum4 supercomputer.
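As a rough consistency check on these figures, the sketch below compares the encrypted footprint with the size of the same model stored in the clear. It assumes 4 bytes per parameter (32-bit floats) and rounds "almost 1 TB" to 10^12 bytes; both are assumptions, not values from the article. The encrypted footprint also covers intermediate encrypted values, so the ratio is an overall expansion factor rather than a per-parameter ciphertext size.

```python
PARAMS = 25_000_000              # ResNet-50 parameter count quoted above
BYTES_PER_PARAM = 4              # assumption: 32-bit floating-point weights
ENCRYPTED_BYTES = 10**12         # assumption: "almost 1 TB" rounded to 10^12 bytes


def plaintext_bytes(params, bytes_per_param):
    """Size of the unencrypted model weights in bytes."""
    return params * bytes_per_param


def expansion_factor(encrypted_bytes, plain_bytes):
    """How many times larger the encrypted footprint is than the plaintext one."""
    return encrypted_bytes / plain_bytes


if __name__ == "__main__":
    plain = plaintext_bytes(PARAMS, BYTES_PER_PARAM)
    print(f"plaintext weights: {plain / 10**6:.0f} MB")
    print(f"expansion factor: {expansion_factor(ENCRYPTED_BYTES, plain):,.0f}x")
```

Under these assumptions the plaintext weights occupy about 100 MB and the expansion is on the order of 10,000, in line with the overhead factor quoted earlier in the article.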

The article also describes an efficient computer architecture for this task that uses only one third of the regular RAM. RAM typically consumes around 10 times more power per byte than Intel Optane persistent memory, so such configurations offer improved energy efficiency and sustainability.

The authors’ version is publicly available on arXiv: https://arxiv.org/abs/2103.16139
