Securing AI at Its Core: Why Protection Must Start at the Silicon Level

As AI systems become more pervasive and powerful, they also become more vulnerable.

Software-based security measures such as application firewalls, intrusion detection, and patch management are important for protecting AI systems, but they are not enough. These solutions cannot fully address the risks introduced by modern AI architectures and the distributed workloads they support.

That’s why a hardware-based approach to security is also required. This approach complements software features: it begins at the silicon level and embeds security from the ground up.

AI’s expanding attack surface

AI workloads are growing at an unprecedented rate, from cloud to edge, with model complexity doubling every few months. This surge is driving demand for specialized, high-performance silicon architectures, including multi-die systems and advanced interconnects. While these innovations enable breakthroughs in performance and scale, they also increase the number of potential vulnerabilities.

AI systems process vast amounts of sensitive data, such as biometric identifiers, medical diagnostics, and financial records. This makes them attractive targets for hackers, insider threats, cybercriminal organizations, and even nation-state actors. What’s more, new methods of attack are emerging, including:

- Data poisoning — Manipulating training datasets to skew model behavior.

- Model theft — Extracting proprietary models through side-channel or inference attacks.

- Decision tampering — Altering inference results or sensor inputs to compromise system integrity.

Regulatory pressure and security-by-design

In addition to technical threats, organizations face mounting regulatory pressures. Global and industry-specific standards now emphasize lifecycle security, data privacy, ethical AI deployment, and security-by-design principles. These pressures amplify the need for comprehensive AI security measures that cover both hardware and software layers.

| Standard/Regulation | Scope | Security Focus | Status/Year |
|---|---|---|---|
| ISO/IEC 42001 | AI Management System | Risk assessment and management for lifecycle security and data security | Published: 2023 |
| ISO/PAS 8800 | Automotive AI Safety | Security as an AI system requirement (not detailed) | Published: 2024 |
| NIST AI RMF 1.0 | U.S. AI Risk Management Framework | Secure function (resilience, defense) | Published: 2023 |
| ISO/IEC 23894 | AI-specific Risk Governance | AI security risk sources, data privacy, cyber-vulnerability assessment and treatment | Published: 2023 |
| EU AI Act | AI System Regulation | Security-by-design for high-risk AI | Published: 2024; fully enforced: 2026 |
| OECD AI Principles | Responsible and Trustworthy Development and Use of AI | AI systems should be reliable, secure, and safe throughout their lifecycle | Published: 2019; updated: 2024 |
| ISO/IEC 27090 | Guidance for Addressing Security Threats to AI Systems | Adversarial threats, model integrity | Enquiry (review): 2025; expected publication: 2026 |
| ISO/TS 5083 | Safety for Automated Driving Systems (ADS) | Safety principle: ADS employs solutions to protect against cybersecurity threats and safeguard information | Published: 2025 |

Examples of AI governance standards and regulations

End-to-end protection for AI ecosystems

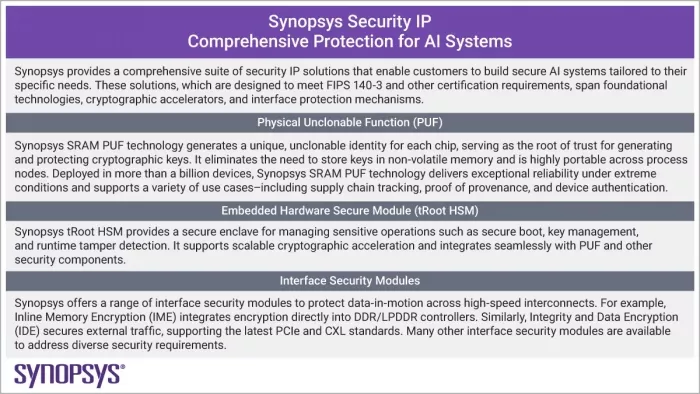

Securing AI systems is not limited to software or cloud layers. It requires a holistic approach spanning edge devices, accelerators, data pipelines, and the underlying silicon supporting them. Key priorities include:

- Integrity and confidentiality of models and datasets

- Protection of user data during collection, transmission, and processing

- Secure communication between sensors, accelerators, and inference engines

- Hardware-based secure boot and runtime anti-tampering

- Cryptographic isolation of workloads
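To make the secure boot priority more concrete, here is a minimal sketch of a verified boot chain in Python: each stage's image is checked against a tag issued by a trusted signer before it is allowed to run, and the chain stops at the first mismatch. It assumes a hypothetical hardware-held key (`DEVICE_KEY`) and uses HMAC-SHA256 only as a stand-in for the asymmetric signature check that production secure boot typically performs against a public-key hash stored in ROM or one-time-programmable fuses. The names `tag` and `secure_boot` are illustrative, not part of any product API.

```python
import hashlib
import hmac

# HYPOTHETICAL: a device-unique key that, on real silicon, would live in
# OTP fuses or a hardware root of trust. All-zero bytes are a placeholder only.
DEVICE_KEY = bytes(32)


def tag(image: bytes, key: bytes = DEVICE_KEY) -> bytes:
    """Tag a trusted signer would attach to a boot image.

    HMAC-SHA256 stands in for an asymmetric signature (e.g., ECDSA or ML-DSA),
    which Python's standard library does not provide.
    """
    return hmac.new(key, image, hashlib.sha256).digest()


def secure_boot(stages: list[tuple[str, bytes, bytes]], key: bytes = DEVICE_KEY) -> list[str]:
    """Verify boot stages in order and return the names of those allowed to run.

    Each stage is (name, image, expected_tag). Verification stops at the first
    mismatch, so a tampered stage and everything after it never execute.
    """
    booted: list[str] = []
    for name, image, expected in stages:
        # Constant-time comparison avoids leaking how many tag bytes matched.
        if not hmac.compare_digest(tag(image, key), expected):
            break
        booted.append(name)
    return booted


if __name__ == "__main__":
    bootloader = b"stage-1 bootloader"
    firmware = b"accelerator firmware v1"
    good = [("bootloader", bootloader, tag(bootloader)),
            ("firmware", firmware, tag(firmware))]
    bad = [("bootloader", bootloader, tag(bootloader)),
           ("firmware", firmware + b" (patched)", tag(firmware))]
    print(secure_boot(good))  # ['bootloader', 'firmware']
    print(secure_boot(bad))   # ['bootloader']
```

Halting at the first failed stage is the essential design choice: a tampered firmware image never executes, so it can never vouch for anything loaded after it.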

AI systems also require mechanisms to authenticate devices, manage keys securely, and enforce access control across distributed environments. These capabilities must be designed into the hardware, starting with systems-on-chip (SoCs) and chiplets, to ensure trustworthiness and resilience against evolving threats. In this way, hardware security becomes the foundation of a secure system, providing the fundamental trust and physical protection that AI systems depend on.
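Secure key management often means that a single root key never leaves the hardware while each workload receives its own derived key. The sketch below illustrates that pattern with HKDF-SHA256 (RFC 5869), built only from Python's standard `hmac` and `hashlib` modules. The root key, the use of a device identifier as the salt, and the `workload:` label format are hypothetical choices for illustration; in a real SoC the derivation would run inside a hardware security module or root of trust rather than in application code.

```python
import hashlib
import hmac

HASH = hashlib.sha256
HASH_LEN = HASH().digest_size  # 32 bytes for SHA-256


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract (RFC 5869): condense input keying material into a pseudorandom key."""
    if not salt:
        salt = bytes(HASH_LEN)  # RFC 5869 default: HashLen zero bytes
    return hmac.new(salt, ikm, HASH).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int) -> bytes:
    """HKDF-Expand (RFC 5869): derive `length` bytes bound to the context `info`."""
    if length > 255 * HASH_LEN:
        raise ValueError("HKDF-SHA256 output is limited to 255 * 32 bytes")
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        # T(i) = HMAC(PRK, T(i-1) || info || i), with T(0) empty
        block = hmac.new(prk, block + info + bytes([counter]), HASH).digest()
        okm += block
        counter += 1
    return okm[:length]


def derive_workload_key(root_key: bytes, device_id: bytes, workload: str) -> bytes:
    """HYPOTHETICAL helper: a 256-bit key unique to one workload on one device."""
    prk = hkdf_extract(salt=device_id, ikm=root_key)
    return hkdf_expand(prk, info=b"workload:" + workload.encode(), length=32)


if __name__ == "__main__":
    # Placeholder root key; on real hardware it would never leave the root of trust.
    root_key = bytes(range(32))
    vision = derive_workload_key(root_key, b"device-0001", "vision-model")
    speech = derive_workload_key(root_key, b"device-0001", "speech-model")
    print(len(vision), vision != speech)  # 32 True
```

Because the workload name is bound into the `info` input, a key leaked from one workload does not help an attacker recover the root key or the keys of other workloads, which is one way to realize the cryptographic isolation of workloads listed among the priorities.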

Preparing for the quantum computing era

Quantum computing introduces a new and disruptive dynamic to the security landscape. Widely used public-key algorithms such as RSA and ECC will eventually be broken by large-scale, cryptographically relevant quantum computers running Shor's algorithm. While the full impact of quantum threats may still be years away, the risks are already present.

“Harvest now, decrypt later” attacks are already occurring: adversaries collect encrypted data today with the intent of decrypting it once quantum capabilities become available. Another type of attack, with potentially even greater impact, is “trust now, forge later.” In these attacks, signatures or certificates that are considered secure today could be forged in the future using quantum computers, undermining current authentication methods.
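A common way to judge whether this risk already applies to a given system is Mosca's inequality: if the time data must stay confidential (or a signature must stay trusted), x, plus the time needed to migrate to quantum-safe cryptography, y, exceeds the time until a cryptographically relevant quantum computer exists, z, then the data or signature is already exposed. The sketch below encodes that check; the function name is illustrative, and every figure in the example is a hypothetical assumption, not a forecast.

```python
def quantum_exposure_years(shelf_life: float, migration_time: float,
                           years_to_crqc: float) -> float:
    """Mosca's inequality: trouble if x + y > z.

    shelf_life (x): years the data must stay confidential, or years a
        signature/certificate must remain trustworthy.
    migration_time (y): years needed to move the system to quantum-safe crypto.
    years_to_crqc (z): estimated years until a cryptographically relevant
        quantum computer (CRQC) exists.
    Returns the number of years of exposure (0.0 if x + y <= z).
    """
    return max(0.0, float(shelf_life + migration_time - years_to_crqc))


if __name__ == "__main__":
    # HYPOTHETICAL figures: firmware signatures on a vehicle trusted for
    # 15 years, 5 years to migrate, and a CRQC assumed to arrive in 12 years.
    print(quantum_exposure_years(15, 5, 12))  # 8.0
    # Short-lived data with a fast migration stays outside the window.
    print(quantum_exposure_years(2, 3, 12))   # 0.0
```

The same inequality covers both attack types: for “harvest now, decrypt later,” x is how long the data must stay secret; for “trust now, forge later,” x is how long a signature or certificate must remain trustworthy, which for long-lived devices can easily exceed a decade.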

Fortunately, post-quantum cryptography (PQC) solutions are now available to help safeguard today’s systems against tomorrow’s quantum threats. These solutions use NIST-standardized algorithms such as ML-KEM (FIPS 203) for key encapsulation and ML-DSA (FIPS 204) and SLH-DSA (FIPS 205) for digital signatures. They should be deployed alongside quantum-safe symmetric ciphers and hash functions, as well as entropy sources such as true random number generators (TRNGs) and physically unclonable functions (PUFs).
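Symmetric ciphers and hash functions can stay in a quantum-safe design, while RSA and ECC cannot, because of the difference between Shor's and Grover's algorithms. Shor's algorithm breaks RSA and ECC outright, whereas Grover's search gives only a quadratic speedup against symmetric keys and hash preimages, roughly halving their security level in bits. The short calculation below applies that common rule of thumb; it deliberately ignores the substantial practical overhead of running Grover's algorithm at scale, so treat the numbers as approximate. The function name is illustrative.

```python
def grover_security_bits(classical_bits: int) -> int:
    """Rule-of-thumb security level against Grover's search, in bits.

    Exhaustive search over 2**n keys (or hash preimages) takes about 2**(n/2)
    quantum queries with Grover's algorithm, so the security level is halved.
    """
    return classical_bits // 2


if __name__ == "__main__":
    for name, bits in [("AES-128 key", 128), ("AES-256 key", 256), ("SHA-256 preimage", 256)]:
        print(f"{name}: {bits}-bit classical -> ~{grover_security_bits(bits)}-bit quantum")
```

This is why some quantum-safe guidance, such as the NSA's CNSA 2.0 suite, calls for AES-256 rather than AES-128: the larger key keeps roughly 128-bit security even under this rule of thumb.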

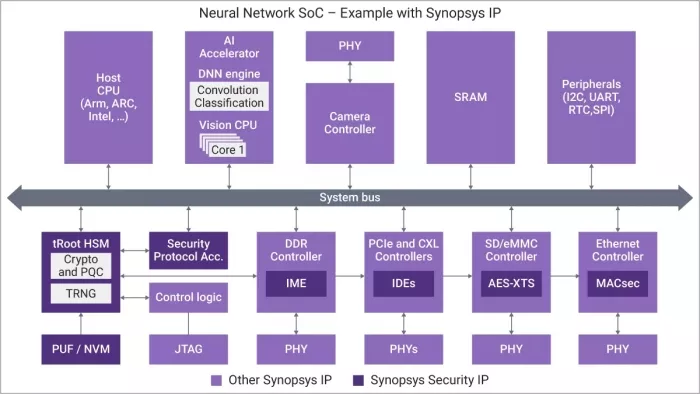

Example of a neural network SoC with Synopsys Security IP

Security as a design imperative

AI is transforming how we compute, communicate, and make decisions. But trust in these systems depends on robust security measures embedded at the hardware level. Silicon-based security provides the foundation for protecting sensitive data, ensuring model integrity, meeting global compliance requirements, and safeguarding today’s systems from tomorrow’s quantum computing threats.

Security is not an optional feature. It is a prerequisite for reliable, ethical, and future-ready AI systems.
