Vision Transformers Have Already Overtaken CNNs: Here’s Why and What’s Needed for Best Performance

Vision AI Has Moved Beyond CNNs—Now What?

Convolutional Neural Networks (CNNs) have long dominated AI vision, powering applications from automotive ADAS to face recognition and surveillance. But the industry has moved on—Vision Transformers (ViTs) are now recognized as the superior approach for many computer vision tasks. Their ability to understand global context, resist adversarial attacks, and analyze complex scenes has made them the new standard in vision AI.

The conversation is no longer about whether ViTs will overtake CNNs. They already have. Now, the real challenge is ensuring ViTs run efficiently on hardware designed for their needs.

This article will explore why ViTs have become the preferred choice, what makes them different, and what hardware capabilities are essential for maximizing their performance.

Why Have Vision Transformers Taken Over?

CNNs process images bottom-up, detecting edges and local features layer by layer until a full object is classified. This works well for clean, ideal images, but it struggles with occlusions, image corruption, and adversarial noise. Vision Transformers, on the other hand, analyze an image more holistically: the image is split into patches, and an attention mechanism relates each patch to every other patch, so relationships between distant regions are captured directly.

Quanta Magazine offers a helpful analogy: “If a CNN’s approach is like starting at a single pixel and zooming out, a transformer slowly brings the whole fuzzy image into focus.”
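
To make the attention mechanism concrete, here is a minimal single-head self-attention sketch in plain Python. It is an illustration under stated assumptions, not the implementation of any particular ViT: the 16 "patch embeddings" and the projection matrices are random toy values, the sizes are arbitrary, and helper names such as `self_attention` and `random_matrix` are hypothetical.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product attention over a list of token vectors."""
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    scale = math.sqrt(len(q[0]))
    k_t = [list(col) for col in zip(*k)]           # transpose K
    scores = matmul(q, k_t)                        # one score per pair of patches
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v), weights

def random_matrix(rows, cols, rng):
    """Toy stand-in for learned weights or patch embeddings (assumption)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
num_patches, dim = 16, 8                           # e.g. a 4x4 grid of patches
tokens = random_matrix(num_patches, dim, rng)
w_q, w_k, w_v = (random_matrix(dim, dim, rng) for _ in range(3))

outputs, weights = self_attention(tokens, w_q, w_k, w_v)
print(len(weights), "x", len(weights[0]), "attention weights")
```

Each row of `weights` holds one patch's attention over all 16 patches, including distant ones, which is the global view that the analogy describes. A convolution, by contrast, only combines pixels inside its kernel window at each layer.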

This approach gives ViTs significant advantages:

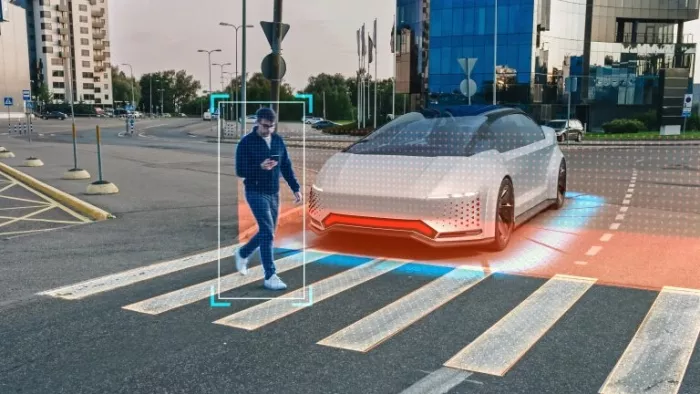

- Superior Object Recognition in Complex Scenes – Unlike CNNs, which focus on local features, ViTs consider global context, making them more robust in cluttered environments (e.g., a pedestrian partially occluded by a parked car).

- Resistance to Adversarial Attacks – CNNs can be fooled by small pixel changes (e.g., subtly altering a stop sign so that it is misclassified as a yield sign). ViTs are generally less susceptible to such tricks due to their holistic approach.

- Better Adaptability to Scene Parsing – Tasks like autonomous driving rely not just on object recognition but also scene segmentation and pathfinding. ViTs naturally excel in these areas.

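However, these benefits come at a cost. ViTs are computationally expensive and require significantly more processing power than CNNs, in part because self-attention compares every image patch with every other patch, so its cost grows quadratically with the number of patches. That’s why the focus has shifted to AI hardware optimization.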
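
As a rough illustration of where that compute goes, the sketch below estimates multiply-accumulate operations (MACs) per encoder layer. The configuration is an assumption chosen for illustration and is not taken from this article: it uses the published ViT-Base/16 shape (12 layers, hidden size 768, MLP expansion of 4, 16x16 patches plus one class token) and counts only the large matrix multiplications. The function names are hypothetical.

```python
def vit_layer_macs(num_tokens, dim, mlp_ratio=4):
    """Approximate MACs for one transformer encoder layer.

    Only the large matrix multiplications are counted; softmax,
    layer normalization, and biases are ignored.
    """
    projections = 4 * num_tokens * dim * dim        # Q, K, V, and output projections
    attention = 2 * num_tokens * num_tokens * dim   # Q @ K^T and weights @ V
    mlp = 2 * mlp_ratio * num_tokens * dim * dim    # two MLP linear layers
    return {"projections": projections, "attention": attention, "mlp": mlp}

def count_tokens(image_size, patch_size):
    """Number of patches in a square image, plus one class token."""
    return (image_size // patch_size) ** 2 + 1

LAYERS, DIM, PATCH = 12, 768, 16                    # assumed ViT-Base/16 shape
for size in (224, 448):
    tokens = count_tokens(size, PATCH)
    layer = vit_layer_macs(tokens, DIM)
    total = LAYERS * sum(layer.values())
    share = layer["attention"] / sum(layer.values())
    print(f"{size}x{size}: {tokens} tokens, {total / 1e9:.1f} GMACs, "
          f"attention share {share:.0%}")
```

Under these assumptions, doubling the image side from 224 to 448 pixels raises the token count from 197 to 785, the total from roughly 17 to roughly 78 GMACs, and the share spent in the attention matrices from about 4% to about 15%. The projection and MLP terms grow linearly with the token count, while the attention term grows with its square.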

What Hardware Is Needed to Unlock ViTs’ Full Potential?

For Vision Transformers to reach their full potential in edge AI applications, the underlying hardware must be optimized for their unique computational demands. Key hardware capabilities of an NPU supporting ViTs include:

- Efficient Attention Mechanisms – Unlike CNNs, which rely on convolutional operations, ViTs depend on fast matrix multiplications and attention layers. Hardware must be able to accelerate these processes efficiently.

- Mixed Datatype Support – ViTs often require floating-point precision for attention calculations but can transition to smaller integer types (e.g., INT8) for Multi-Layer Perceptron (MLP) stages. The hardware must handle this transition seamlessly.

- Structured and Unstructured Sparsity Acceleration – Many ViT models contain redundant calculations (e.g., multiplying by zero). Efficient hardware skips unnecessary operations, improving throughput and power efficiency.

- High-Throughput Custom Operators – Offloading computations to external accelerators often slows down inference. Instead, hardware must integrate custom operators into the same pipeline as the transformer core for maximum efficiency.

- Parallelism and Multi-Core Processing – Scene understanding and segmentation require extensive parallel computation. Multi-engine support is essential for handling these tasks efficiently.

- Model Compression and Pruning – The largest ViT models can contain billions of parameters. Hardware that supports weight pruning and compression minimizes loading times and memory overhead.

- On-Chip Processing to Reduce Memory Bottlenecks – Some advanced ViT implementations can run on-device without external memory, significantly improving efficiency in edge AI applications.
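
Two of the capabilities listed above, mixed datatypes and sparsity, can be illustrated in software. The sketch below is a simplified model under stated assumptions and does not describe how any specific NPU works: it uses symmetric per-tensor INT8 quantization and a 2-out-of-4 structured pruning pattern, both chosen here for illustration, and the helper names and sample values are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: returns (integer codes, scale)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale

def dequantize(codes, scale):
    """Map INT8 codes back to approximate floating-point values."""
    return [c * scale for c in codes]

def prune_2_of_4(values):
    """Keep the 2 largest-magnitude values in each group of 4 and zero the rest."""
    pruned = list(values)
    for start in range(0, len(values) - len(values) % 4, 4):
        group = range(start, start + 4)
        keep = sorted(group, key=lambda i: abs(values[i]), reverse=True)[:2]
        for i in group:
            if i not in keep:
                pruned[i] = 0.0
    return pruned

def sparse_dot(weights, activations):
    """Dot product that skips zero weights and counts the multiplies performed."""
    total, macs = 0.0, 0
    for w, a in zip(weights, activations):
        if w != 0:
            total += w * a
            macs += 1
    return total, macs

# Toy MLP weights and activations (illustrative values only).
weights = [0.42, -0.07, 0.91, 0.03, -0.55, 0.12, -0.01, 0.30]
activations = [1.0, 2.0, -1.0, 0.5, 0.25, -2.0, 4.0, 1.5]

pruned = prune_2_of_4(weights)                      # structured sparsity
codes, scale = quantize_int8(pruned)                # INT8 storage for MLP weights
result, macs = sparse_dot(dequantize(codes, scale), activations)
print(f"INT8 codes: {codes}")
print(f"multiplies performed: {macs} of {len(weights)}")
```

In this toy case half of the multiplications are skipped and each remaining weight fits in one byte. Dedicated hardware would perform the same skipping and datatype conversion inside its pipeline rather than in a software loop, and the accuracy impact of pruning and quantization has to be validated per model.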

The Future is Here—ViT-Optimized Hardware is the Next Step

Vision AI is evolving rapidly, and ViTs have already surpassed CNNs in critical applications like autonomous driving and security. The next challenge is ensuring that NPU hardware can efficiently support these models while maintaining power efficiency and real-time performance.

At Ceva, we have already integrated these hardware optimizations into our NeuPro-M NPU IP. If you want to learn more about how we enable high-performance Vision Transformer applications, visit our website or reach out for a discussion.

The future of AI vision is here—make sure your hardware is ready for it.
