Designing the AI Factories: Unlocking Innovation with Intelligent IP

The rapid evolution of artificial intelligence (AI) is reshaping the technological landscape and placing unprecedented demands on computing infrastructure. An AI factory is a dedicated computing infrastructure built to generate value from data by managing the complete AI life cycle: data ingestion, training, fine-tuning, and large-scale inference. Its core output is intelligence, measured in token throughput, which powers decision-making, automation, and the development of new AI solutions. At the heart of this transformation lie innovations in intellectual property (IP) that enable scalable, efficient, high-performance AI factories. These advancements address the technical challenges of modern AI workloads while leaving room to adapt to future ones. This post explores how these IP innovations are poised to power the AI-driven compute systems of tomorrow.

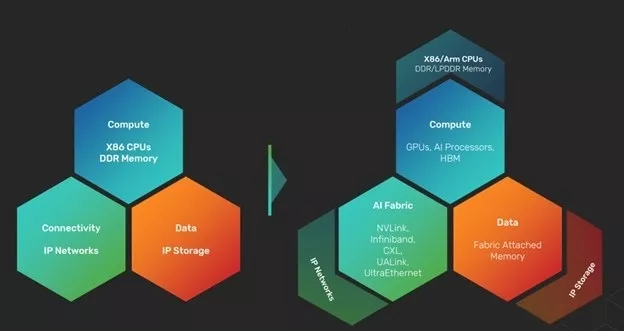

The Shift in Computing for the AI Era

Traditionally, computing systems relied heavily on x86-based CPUs, standard internet protocols for connectivity, and legacy DDR memory modules. As AI workloads ramp up, however, there is a fundamental shift toward heterogeneous architectures. Modern systems pair CPUs with accelerators like GPUs, AI processors, and data processing units (DPUs), and use advanced memory technologies such as high-bandwidth memory (HBM) or GDDR for optimal performance. This diversification in hardware demands evolving connectivity standards, including NVLink™, InfiniBand, CXL™, and Ultra Ethernet™, to meet the data-intensive needs of AI applications. Together, these advancements move beyond traditional computing paradigms by adding a new layer of performance-optimized connectivity and storage for AI-specific workloads.

Scaling Challenges in AI Factories

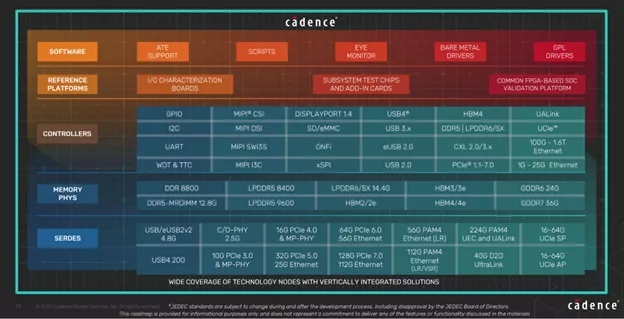

Scaling AI workloads introduces distinct computational hurdles. One is the "memory wall": as more cores are added, memory bandwidth per CPU core fails to scale proportionally. This calls for advanced memory solutions like HBM, which help AI factories meet high data throughput demands. Cadence has been a leader in delivering memory solutions such as HBM3 and HBM4, along with GDDR6 and GDDR7, enabling efficient data movement within power and performance constraints. Disaggregation is also key: die-to-die standards like Universal Chiplet Interconnect Express (UCIe) facilitate modular, chiplet-based designs and reduce the bottlenecks of massive monolithic system-on-chip (SoC) architectures.
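The memory wall described above comes down to simple arithmetic. The sketch below is a minimal illustration, not a model of any real product: the function name `bandwidth_per_core` and the two system configurations are hypothetical, and the numbers are chosen only to show what happens when core count grows faster than total memory bandwidth.

```python
def bandwidth_per_core(total_bandwidth_gbs: float, cores: int) -> float:
    """Return the average memory bandwidth available to each core, in GB/s."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return total_bandwidth_gbs / cores


# Hypothetical generations (illustrative numbers only):
# total memory bandwidth doubles while the core count quadruples.
generations = [
    ("gen A", 100.0, 8),
    ("gen B", 200.0, 32),
]

for name, total_gbs, cores in generations:
    per_core = bandwidth_per_core(total_gbs, cores)
    print(f"{name}: {total_gbs:.0f} GB/s across {cores} cores "
          f"= {per_core:.2f} GB/s per core")
```

In this made-up case the total bandwidth doubles, yet each core ends up with half the bandwidth it had before. Closing that gap is the role the text assigns to higher-bandwidth memory such as HBM.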

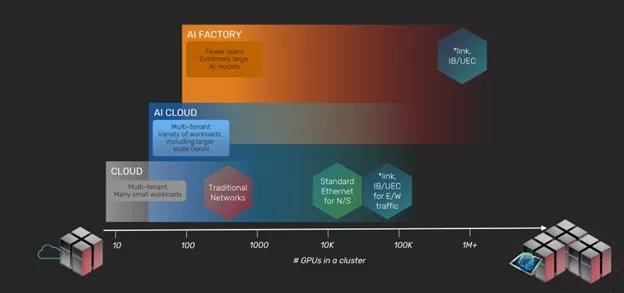

Networking Innovations for Large-Scale AI Systems

Networking scalability is equally critical in AI factories. High-speed SerDes technologies drive interconnects like Ultra Ethernet, UALink™, and PCI Express® (PCIe®) 7.0, which are essential for both scale-up and scale-out within AI systems. Because these SerDes solutions are versatile, multiple protocols can run efficiently over the same hardware backbone, offering flexibility for diverse deployment needs. Cadence has made strides in delivering SerDes and PHY IP that combines strong system performance with energy efficiency under stringent operating conditions, supporting error-free operation in even the most demanding environments. These breakthroughs are foundational for the seamless connectivity of accelerators, CPUs, and storage within next-gen AI factories.

Enabling Broad AI Applications with Robust IP Portfolios

From large AI model training to small-scale inference and ranking, each workload places distinct demands on compute, memory, and networking. To meet these diverse requirements, Cadence provides a comprehensive IP portfolio, including advanced PHYs, controllers, and memory solutions, designed to support the entire spectrum of AI applications. This ecosystem helps customers build scalable solutions for high-performance computing (HPC) and AI environments, pushing the boundaries of capability and efficiency.

Shaping the Future of AI Factories

The innovations in IP powering AI factories today are laying the groundwork for future advancements in AI and HPC. With continued focus on efficiency, scalability, and performance, these developments are enabling system designers and engineers to tackle the growing complexity of AI workloads. By leveraging advanced memory architectures, high-performance interconnects, and modular SoC designs, the next generation of AI factories promises to drive breakthroughs in everything from autonomous systems to generative AI applications.

Closing Thoughts

The evolution of AI computing is a testament to the importance of IP innovation in addressing the technical challenges of the modern era. Organizations like Cadence are at the forefront of this transformation, delivering expertise and solutions that allow AI factories to scale dynamically for the demands of tomorrow. As we continue to push technological boundaries, the collaboration between design, silicon, and software will remain pivotal in unlocking the full potential of AI. Stay tuned for more developments as these innovations shape the future of intelligent compute systems.

Discover how to power the AI factories of tomorrow! Watch Arif Khan, Sr. Product Marketing Group Director, as he shares insights on "Innovations in IP to Power the AI Factories of Tomorrow." Learn about unique architectural requirements and how choosing the right IP can lead to successful designs. Don't miss this opportunity: watch now!
