Morphlux: Programmable chip-to-chip photonic fabrics in multi-accelerator servers for ML

By Abhishek Vijaya Kumar¹, Eric Ding¹, Arjun Devraj¹, Darius Bunandar², Rachee Singh¹

¹ Cornell University

² Lightmatter

Abstract

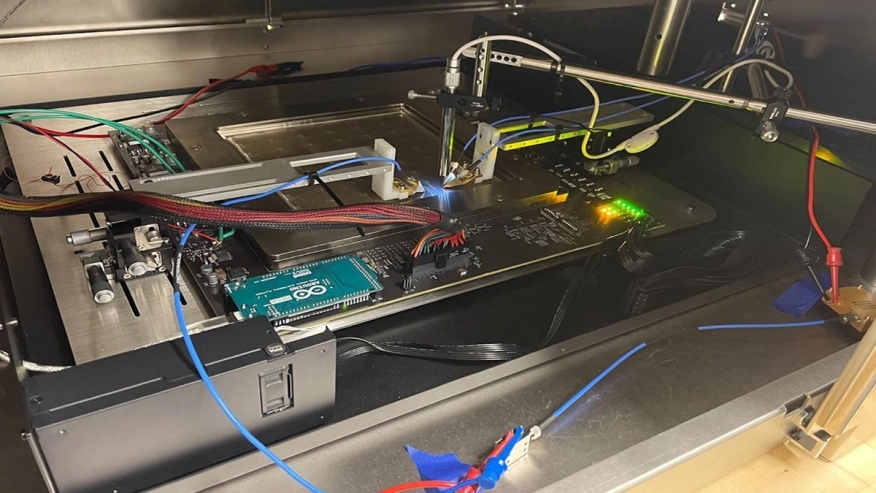

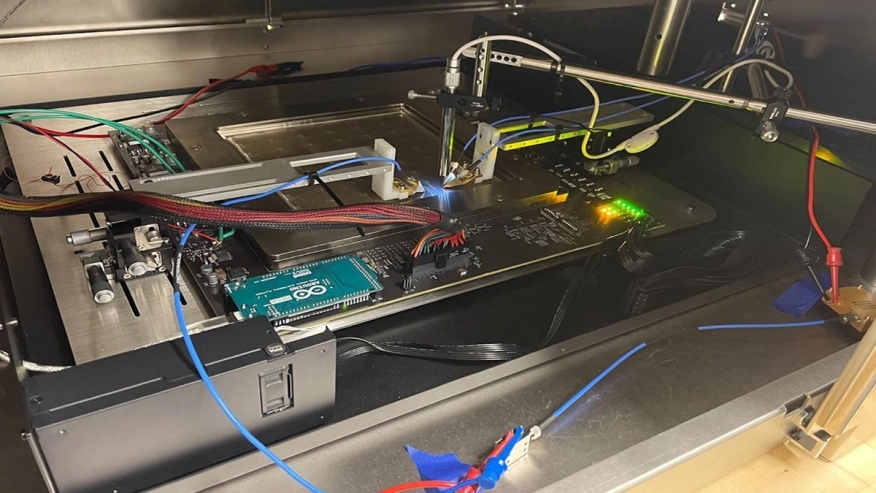

We optically interconnect accelerator chips (e.g., GPUs, TPUs) within compute servers using newly viable programmable chip-to-chip photonic fabrics. In contrast, today's commercial multi-accelerator compute servers, the workhorses of ML, use electrical interconnects to network the accelerator chips within a server. However, recent trends reveal an interconnect bandwidth wall: accelerator FLOPS are scaling faster than the bandwidth of the interconnect between accelerators in the same server. This has led to under-utilization and idling of GPU resources in cloud datacenters. We develop Morphlux, a server-scale programmable photonic fabric that interconnects accelerators within servers. We show that augmenting state-of-the-art photonic ML-centric datacenters with Morphlux can improve the bandwidth of tenant compute allocations by up to 66% and reduce compute fragmentation by up to 70%. We build a novel end-to-end hardware prototype of Morphlux to demonstrate these performance benefits, which translate to a 1.72x improvement in the training throughput of ML models. By rapidly programming the server-scale fabric in our hardware testbed, Morphlux can logically replace a failed accelerator chip in 1.2 seconds.
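The abstract does not describe how failover works internally. As a rough illustration only, the idea of "logically replacing" a failed chip by reprogramming a fabric can be sketched as a logical-to-physical remapping. This sketch assumes a ring of logical ranks, a pool of spare chips, and a fabric modeled as a set of point-to-point optical circuits. `PhotonicFabric`, `program_ring`, `replace_failed`, the ring topology, and the spare pool are all hypothetical and are not taken from Morphlux.

```python
from dataclasses import dataclass, field


@dataclass
class PhotonicFabric:
    """Hypothetical toy model, not Morphlux's design: logical ranks are bound to
    physical chips, and the fabric holds undirected circuits between chips."""
    logical_to_physical: dict[int, str]
    spares: list[str]
    circuits: set[frozenset[str]] = field(default_factory=set)

    def program_ring(self) -> None:
        # Assumed topology: connect logical rank i to rank (i + 1) mod n.
        n = len(self.logical_to_physical)
        self.circuits = {
            frozenset((self.logical_to_physical[i],
                       self.logical_to_physical[(i + 1) % n]))
            for i in range(n)
        }

    def replace_failed(self, failed: str) -> str:
        # Rebind the failed chip's logical rank to a spare, then reprogram circuits.
        rank = next(r for r, p in self.logical_to_physical.items() if p == failed)
        spare = self.spares.pop(0)
        self.logical_to_physical[rank] = spare
        self.program_ring()
        return spare


fabric = PhotonicFabric({0: "gpu0", 1: "gpu1", 2: "gpu2", 3: "gpu3"}, spares=["gpu4"])
fabric.program_ring()
print(fabric.replace_failed("gpu2"))                       # gpu4
print(sorted(sorted(c) for c in fabric.circuits))
```

In this sketch the job's view (logical ranks 0 to 3 in a ring) is unchanged after the failure; only the physical binding and the circuit set change. That decoupling is the intuition behind replacing a chip "logically" rather than physically.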