Breaking the HBM Bit Cost Barrier: Domain-Specific ECC for AI Inference Infrastructure

By Rui Xie †, Asad Ul Haq †, Yunhua Fang †, Linsen Ma †, Sanchari Sen ∗, Swagath Venkataramani ∗, Liu Liu †, Tong Zhang †

† Rensselaer Polytechnic Institute, NY, USA

∗ IBM, NY, USA

Abstract

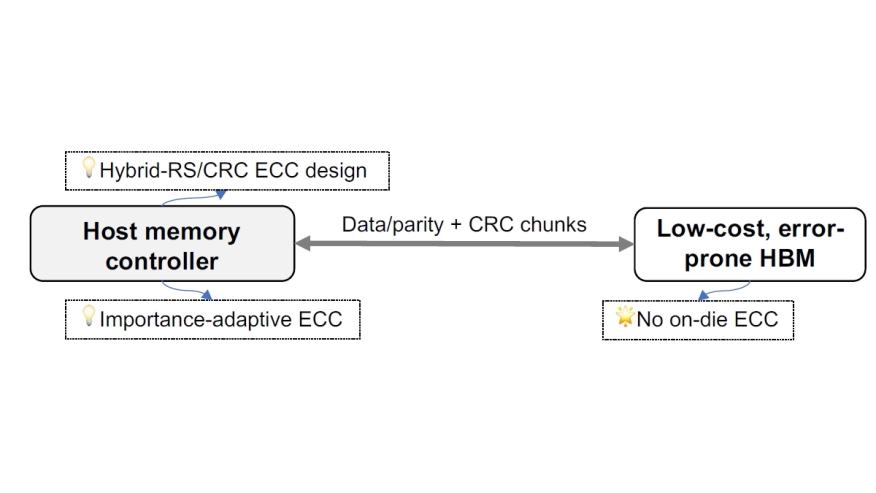

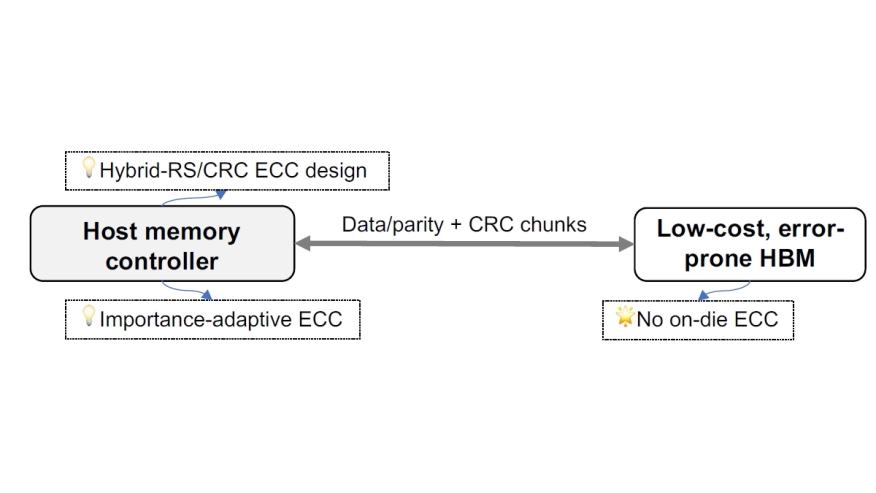

High-Bandwidth Memory (HBM) delivers exceptional bandwidth and energy efficiency for AI workloads, but its high cost per bit, driven in part by stringent on-die reliability requirements, poses a growing barrier to scalable deployment. This work explores a system-level approach to cost reduction by eliminating on-die ECC and shifting all fault management to the memory controller. We introduce a domain-specific ECC framework combining large-codeword Reed–Solomon (RS) correction with lightweight fine-grained CRC detection, differential parity updates to mitigate write amplification, and tunable protection based on data importance. Our evaluation using LLM inference workloads shows that, even under raw HBM bit error rates up to 10⁻³, the system retains over 78% of throughput and 97% of model accuracy compared with systems equipped with ideal error-free HBM. By treating reliability as a tunable system parameter rather than a fixed hardware constraint, our design opens a new path toward low-cost, high-performance HBM deployment in AI infrastructure.
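To make two of the mechanisms named in the abstract more concrete, the following is a minimal sketch, not the authors' implementation. It assumes a toy XOR parity as a stand-in for the Reed–Solomon code (both are linear, which is the property a differential parity update relies on), Python's `zlib.crc32` as a stand-in for the lightweight per-chunk CRC, and a hypothetical 32-byte chunk size. The idea that a failing CRC marks a chunk for repair is one plausible reading of the abstract, not a confirmed detail of the design.

```python
import zlib
from functools import reduce

CHUNK = 32  # bytes per fine-grained chunk (hypothetical value, not from the paper)

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))

def full_parity(chunks):
    """Parity over the whole codeword: XOR of all chunks (toy linear code, NOT RS)."""
    return reduce(xor_bytes, chunks)

def differential_update(parity, old_chunk, new_chunk):
    """Update parity from the changed chunk alone.

    Because the code is linear, parity(new) = parity(old) XOR (old_chunk XOR new_chunk),
    so a small write does not need to re-read the rest of the large codeword.
    """
    return xor_bytes(parity, xor_bytes(old_chunk, new_chunk))

def find_bad_chunks(chunks, crcs):
    """Return indices of chunks whose stored CRC no longer matches their contents."""
    return [i for i, (c, crc) in enumerate(zip(chunks, crcs)) if zlib.crc32(c) != crc]

def repair_single(chunks, parity, bad):
    """Rebuild one flagged chunk from the parity and the remaining chunks."""
    others = [c for i, c in enumerate(chunks) if i != bad]
    return reduce(xor_bytes, others, parity)

# Build a codeword of 8 chunks with per-chunk CRCs and one parity chunk.
chunks = [bytes([i]) * CHUNK for i in range(8)]
crcs = [zlib.crc32(c) for c in chunks]
parity = full_parity(chunks)

# Small write to chunk 3: update the parity differentially.
new_chunk = bytes([0xAB]) * CHUNK
parity = differential_update(parity, chunks[3], new_chunk)
chunks[3], crcs[3] = new_chunk, zlib.crc32(new_chunk)

# Simulate a raw bit error in chunk 5, detect it by CRC, then repair it.
stored = list(chunks)
stored[5] = bytes([stored[5][0] ^ 0x04]) + stored[5][1:]
bad = find_bad_chunks(stored, crcs)
recovered = repair_single(stored, parity, bad[0])
```

In this toy setting the differentially updated parity matches a full recomputation, and the CRC check isolates the single corrupted chunk so only that chunk has to be rebuilt. A real RS code would tolerate multiple symbol errors per codeword, which the XOR stand-in cannot.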

Index Terms — HBM, Error-Correcting Code, CRC, Reliability
