The Quest for Reliable AI Accelerators: Cross-Layer Evaluation and Design Optimization

By Meng Li 1,2,3, Tong Xie 2,1, Zuodong Zhang 4, and Runsheng Wang 2,3,4

1 Institute for Artificial Intelligence & 2 School of Integrated Circuits, Peking University, Beijing, China

3 Beijing Advanced Innovation Center for Integrated Circuits, Beijing, China

4 Institute of Electronic Design Automation, Peking University, Wuxi, China

Abstract

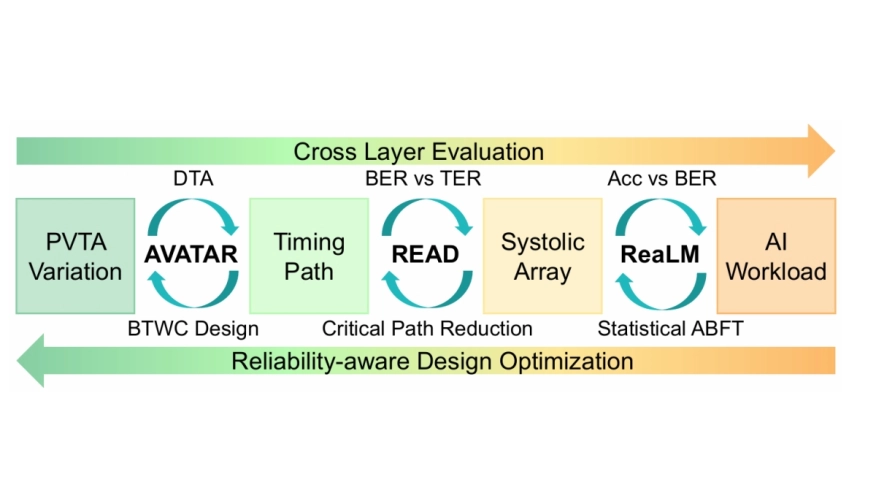

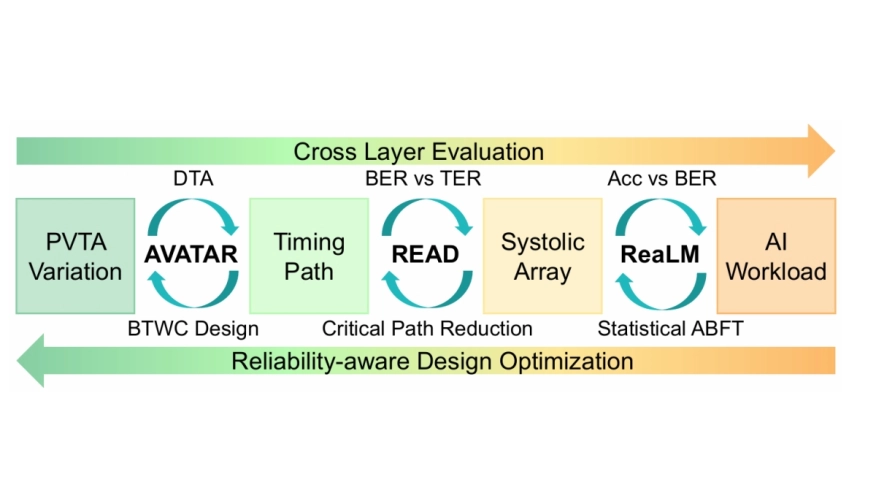

As CMOS technology scales into the nanometer regime, aging effects and process variations have become increasingly pronounced, posing significant reliability challenges for AI accelerators. Traditional guardband-based design approaches rely on pessimistic timing margins and therefore sacrifice substantial performance and computational efficiency, which makes them inadequate for high-performance AI computing. Current reliability-aware AI accelerator design faces two core challenges: (1) the lack of systematic cross-layer analysis tools that capture coupled reliability effects across the device, circuit, architecture, and application layers; and (2) the fundamental trade-off between conventional reliability optimization and computational efficiency. To address these challenges, this paper presents a series of reliability-aware accelerator designs, encompassing (1) an aging- and variation-aware dynamic timing analyzer, (2) accelerator dataflow optimization using critical input pattern reduction, and (3) resilience characterization and novel architecture design for large language models (LLMs). By tightly integrating cross-layer reliability modeling with AI workload characteristics, these co-optimization approaches achieve reliable and efficient AI acceleration.
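The idea that only some input patterns are timing-critical can be illustrated with a toy model. The sketch below is not the authors' analyzer: it assumes a hypothetical ripple-carry adder whose delay grows with the longest carry chain an input pair activates, and it estimates how rarely random inputs exercise the long chains that a static, worst-case guardband is sized for. The function names, the adder model, and the sampling setup are all assumptions made for illustration.

```python
import random


def longest_carry_chain(a: int, b: int, width: int) -> int:
    """Longest run of consecutive bit positions that a single carry travels
    through when adding a and b on a ripple-carry adder (toy delay proxy)."""
    longest = run = carry = 0
    for i in range(width):
        x, y = (a >> i) & 1, (b >> i) & 1
        generate, propagate = x & y, x ^ y
        if propagate and carry:
            run += 1      # incoming carry ripples through this stage
        elif generate:
            run = 1       # a new carry starts at this stage
        else:
            run = 0       # carry is killed, or there is nothing to propagate
        carry = generate | (propagate & carry)
        longest = max(longest, run)
    return longest


def critical_fraction(width: int, threshold: int, samples: int, seed: int = 0) -> float:
    """Fraction of uniformly random input pairs whose longest carry chain
    reaches `threshold` stages (hypothetical definition of 'critical')."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(samples):
        a = rng.getrandbits(width)
        b = rng.getrandbits(width)
        if longest_carry_chain(a, b, width) >= threshold:
            hits += 1
    return hits / samples


if __name__ == "__main__":
    width = 16
    for threshold in (4, 8, 12, 16):
        frac = critical_fraction(width, threshold, samples=20000)
        print(f"chain >= {threshold:2d} stages: {frac:.4%} of random inputs")
```

In this toy setting, a static guardband is sized for the full-width chain even though very few random inputs activate it, which is the intuition behind analyzing timing dynamically and reducing critical input patterns.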
