A Reconfigurable Framework for AI-FPGA Agent Integration and Acceleration

By Aybars Yunusoglu 1, Talha Coskun 2, Hiruna Vishwamith 3, Murat Isik 4, I. Can Dikmen 5

1 Purdue University, West Lafayette, USA

2 University of Illinois, Urbana-Champaign, Urbana, USA

3 University of Moratuwa, Moratuwa, Sri Lanka

4 Stanford University, Stanford, USA

5 Istinye University, Istanbul, Turkey

Abstract

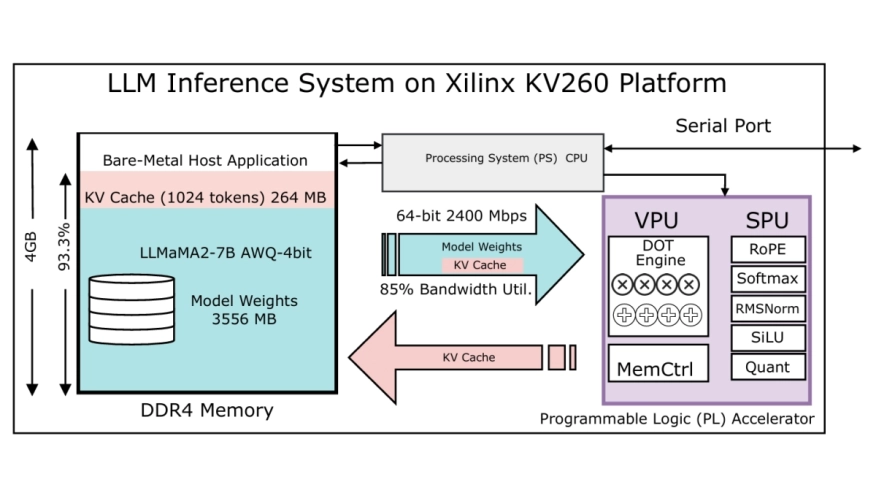

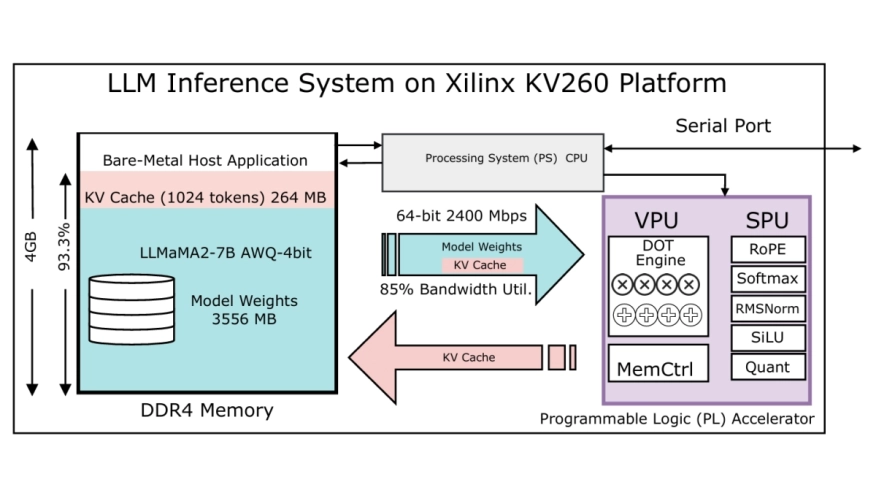

Artificial intelligence (AI) is increasingly deployed in real-time and energy-constrained environments, driving demand for hardware platforms that can deliver high performance and power efficiency. While central processing units (CPUs) and graphics processing units (GPUs) have traditionally served as the primary inference engines, their general-purpose nature often leads to inefficiencies under strict latency or power budgets. Field-Programmable Gate Arrays (FPGAs) offer a promising alternative by enabling custom-tailored parallelism and hardware-level optimizations. However, mapping AI workloads to FPGAs remains challenging due to the complexity of hardware-software co-design and data orchestration. This paper presents AI FPGA Agent, an agent-driven framework that simplifies the integration and acceleration of deep neural network inference on FPGAs. The proposed system employs a runtime software agent that dynamically partitions AI models, schedules compute-intensive layers for hardware offload, and manages data transfers with minimal developer intervention. The hardware component includes a parameterizable accelerator core optimized for high-throughput inference using quantized arithmetic. Experimental results demonstrate that the AI FPGA Agent achieves over 10x latency reduction compared to CPU baselines and 2-3x higher energy efficiency than GPU implementations, all while preserving classification accuracy within 0.2% of full-precision references. These findings underscore the potential of AI-FPGA co-design for scalable, energy-efficient AI deployment.
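The abstract does not give the agent's partitioning policy, so the following is only a minimal sketch of how a runtime agent might decide which layers to offload. It assumes a simple threshold rule on estimated multiply-accumulate (MAC) counts. The `Layer` type, the `OFFLOADABLE_KINDS` set, the `MAC_THRESHOLD` value, and the example layer sizes are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    kind: str  # e.g. "conv", "dense", "relu", "softmax"
    macs: int  # estimated multiply-accumulate operations


# Hypothetical policy parameters, not taken from the paper.
OFFLOADABLE_KINDS = {"conv", "dense"}
MAC_THRESHOLD = 1_000_000


def partition(layers, threshold=MAC_THRESHOLD):
    """Assign each layer to "fpga" or "cpu" using a simple threshold rule."""
    plan = []
    for layer in layers:
        heavy = layer.kind in OFFLOADABLE_KINDS and layer.macs >= threshold
        plan.append((layer.name, "fpga" if heavy else "cpu"))
    return plan


def count_transfers(plan):
    """Count host/device boundary crossings between consecutive layers."""
    return sum(1 for (_, a), (_, b) in zip(plan, plan[1:]) if a != b)


# Illustrative model description with made-up MAC estimates.
model = [
    Layer("conv1", "conv", 118_000_000),
    Layer("relu1", "relu", 0),
    Layer("conv2", "conv", 231_000_000),
    Layer("fc", "dense", 512_000),
    Layer("softmax", "softmax", 0),
]

plan = partition(model)
for name, target in plan:
    print(f"{name:8s} -> {target}")
print("boundary crossings:", count_transfers(plan))
```

In this toy policy every switch between `cpu` and `fpga` implies a data transfer, which is why the sketch also counts boundary crossings. A practical scheduler would likely weigh that transfer cost against the compute saved before offloading a layer.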
