Artificial Intelligence (AI) utilizing deep learning techniques to enhance ADAS

By Ambuj Nandanwar, Softnautics, a MosChip Company

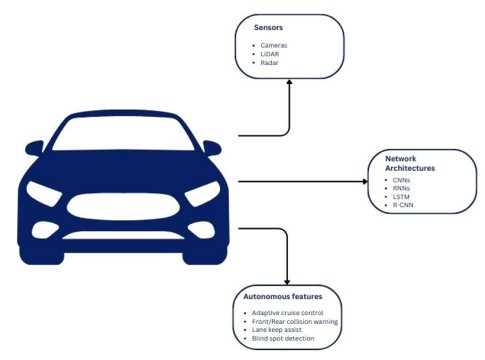

Artificial intelligence and machine learning have revolutionized Advanced Driver Assistance Systems (ADAS) by harnessing the strength of deep learning techniques. ADAS relies heavily on deep learning to analyze and interpret large amounts of data from a wide range of sensors, such as cameras, LiDAR (Light Detection and Ranging), radar, and ultrasonic sensors. The data collected in real time from the vehicle's surroundings includes images, video, and sensor readings.

By effectively incorporating machine learning development techniques into the training of deep learning models, ADAS can analyze sensor data in real time and make informed decisions that enhance driver safety and assist with driving tasks, laying the groundwork for autonomous driving. The trained models can also estimate the distances, velocities, and trajectories of surrounding objects, allowing ADAS to predict potential collisions and provide timely warnings or take preventive action. Let's dive into the key steps in applying deep learning to ADAS and the tools commonly used to develop and deploy these systems.

Key steps in the development and deployment of deep learning models for ADAS

Data preprocessing

Data preprocessing in ADAS focuses on preparing collected data for effective analysis and decision-making. It involves tasks such as cleaning data to remove errors and inconsistencies, handling missing values through interpolation or extrapolation, addressing outliers, and normalizing features. For image data, resizing ensures consistency, while normalization methods standardize pixel values. Sensor data, such as LiDAR or radar readings, may undergo filtering techniques like noise removal or outlier detection to enhance quality.

By performing these preprocessing steps, the ADAS system can work with reliable and standardized data, improving the accuracy of predictions and overall system performance.
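
The preprocessing steps above can be illustrated with a minimal, dependency-free Python sketch. The function names (`interpolate_missing`, `clip_outliers`, `normalize_pixels`, `resize_nearest`) are hypothetical, images are assumed to be row-major lists of 8-bit grayscale pixels, and the outlier rule (clamping to the median plus or minus k median absolute deviations) is just one common choice; production pipelines would typically use NumPy or OpenCV instead.

```python
import statistics


def interpolate_missing(readings):
    """Fill None gaps in a 1-D sensor series (e.g. radar range over time).

    Interior gaps are linearly interpolated; gaps at either end are filled by
    holding the nearest valid reading (the simplest form of extrapolation).
    """
    known = [i for i, v in enumerate(readings) if v is not None]
    if not known:
        raise ValueError("no valid readings")
    out = list(readings)
    for i, v in enumerate(readings):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            out[i] = readings[right]
        elif right is None:
            out[i] = readings[left]
        else:
            t = (i - left) / (right - left)
            out[i] = readings[left] + t * (readings[right] - readings[left])
    return out


def clip_outliers(values, k=3.0):
    """Clamp values lying more than k median absolute deviations from the median."""
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return list(values)
    lo, hi = med - k * mad, med + k * mad
    return [min(max(v, lo), hi) for v in values]


def normalize_pixels(image):
    """Scale 8-bit pixels to [0, 1], then standardize to zero mean, unit variance."""
    flat = [p / 255.0 for row in image for p in row]
    mean = statistics.fmean(flat)
    std = statistics.pstdev(flat) or 1.0  # guard against constant images
    return [[(p / 255.0 - mean) / std for p in row] for row in image]


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbour resize so every frame has the same input dimensions."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


print(interpolate_missing([None, 10.0, None, 14.0, None]))  # [10.0, 10.0, 12.0, 14.0, 14.0]
```

The median-based outlier rule is used here because, unlike a mean/standard-deviation rule, a single extreme reading barely shifts the thresholds it is judged against.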

Network architecture selection

Network architecture selection is another important step in ADAS development because the architecture determines accuracy, computational cost, the balance between model complexity and interpretability, how well the model generalizes to diverse scenarios, and whether it fits within hardware constraints. By choosing appropriate architectures, such as Convolutional Neural Networks (CNNs) for visual tasks and Recurrent Neural Networks (RNNs) or Long Short-Term Memory networks (LSTMs) for sequential data, ADAS can improve accuracy, achieve real-time processing, make model decisions easier to interpret, and handle varied driving conditions while operating within resource limits. CNNs use convolutional and pooling layers to extract spatial features from images, while RNNs and LSTMs capture temporal dependencies and retain memory across time steps, which suits tasks such as predicting driver behavior or detecting drowsiness.
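
To make the CNN and LSTM building blocks concrete, here is a minimal pure-Python sketch of a convolution, a max-pooling layer, and a single LSTM time step. All names are hypothetical, the LSTM is reduced to a scalar state for readability, and a real model would use a framework such as TensorFlow or PyTorch with learned weights.

```python
import math


def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation (no padding, stride 1), as computed by CNN conv layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that don't fill a window are dropped."""
    return [
        [
            max(fmap[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(fmap[0]) - size + 1, size)
        ]
        for r in range(0, len(fmap) - size + 1, size)
    ]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def lstm_step(x, h, c, weights):
    """One time step of a scalar LSTM cell.

    weights maps each gate name to (input weight, recurrent weight, bias).
    The cell state c carries memory across steps; the forget, input and
    output gates decide what to discard, what to store and what to emit.
    """
    def gate(name, activation):
        wx, wh, b = weights[name]
        return activation(wx * x + wh * h + b)

    f = gate("forget", sigmoid)
    i = gate("input", sigmoid)
    o = gate("output", sigmoid)
    g = gate("candidate", math.tanh)
    c = f * c + i * g
    h = o * math.tanh(c)
    return h, c


# A vertical-edge kernel responds where intensity changes from left to right,
# e.g. at a painted lane boundary.
frame = [[0, 0, 1, 1]] * 4
edges = conv2d(frame, [[-1, 1], [-1, 1]])
print(edges)            # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
print(max_pool(edges))  # [[2]]

# Feeding hypothetical per-frame eye-closure scores through the LSTM, one step at a time.
w = {g: (1.0, 0.5, 0.0) for g in ("forget", "input", "output", "candidate")}
h = c = 0.0
for eye_closure in [0.1, 0.2, 0.9, 0.95, 0.9]:
    h, c = lstm_step(eye_closure, h, c, w)
```

The pooling step halves the spatial resolution while keeping the strongest response, which is why stacked conv and pool layers grow more abstract and cheaper to process deeper in the network.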

Training data preparation

Training data preparation in ADAS covers data splitting, data augmentation, and the other steps needed for effective model learning and performance. Data splitting divides the collected dataset into training, validation, and test sets: the network is trained on the training set, its hyperparameters are tuned on the validation set, and the final model's performance is evaluated on the test set.
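
A minimal sketch of the splitting step follows. It assumes each sample carries an identifier for the drive (recording) it came from; the function name and the 70/15/15 default ratios are illustrative assumptions. Splitting by recording rather than by individual frame is a common precaution, because neighbouring frames from one drive are nearly identical and would otherwise leak between the training and test sets.

```python
import random


def split_by_recording(samples, recording_of, train=0.7, val=0.15, seed=0):
    """Split samples into train / validation / test lists at the recording level.

    recording_of(sample) returns the ID of the drive the sample came from.
    All samples from one recording land in the same split; the ratios apply to
    recordings, and whatever is left after train and val becomes the test set.
    """
    if not (0 < train and 0 <= val and train + val < 1):
        raise ValueError("expected 0 < train, 0 <= val and train + val < 1")
    recordings = sorted({recording_of(s) for s in samples})
    random.Random(seed).shuffle(recordings)  # fixed seed -> reproducible split
    n_train = int(len(recordings) * train)
    n_val = int(len(recordings) * val)
    train_ids = set(recordings[:n_train])
    val_ids = set(recordings[n_train:n_train + n_val])
    train_set, val_set, test_set = [], [], []
    for s in samples:
        rid = recording_of(s)
        if rid in train_ids:
            train_set.append(s)
        elif rid in val_ids:
            val_set.append(s)
        else:
            test_set.append(s)
    return train_set, val_set, test_set


# Hypothetical dataset: 20 drives with 5 frames each, stored as (drive_id, frame_index).
frames = [(f"drive{d:02d}", i) for d in range(20) for i in range(5)]
train_set, val_set, test_set = split_by_recording(frames, lambda s: s[0])
print(len(train_set), len(val_set), len(test_set))
```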

Data augmentation techniques, such as flipping, rotating, or adding noise to images, are employed to enhance the diversity and size of the training data, mitigating the risk of overfitting. These steps collectively enhance the quality, diversity, and reliability of the training data, enabling the ADAS system to make accurate and robust decisions.
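
The augmentation techniques mentioned above can be sketched in a few lines of Python. This is an illustration under stated assumptions: images are row-major lists of 8-bit pixels, bounding boxes are `(x1, y1, x2, y2)` in pixel-edge coordinates, and all function names are hypothetical. Geometric augmentations must transform the labels as well as the pixels, which is why a box-flipping helper is included.

```python
import random


def hflip(image):
    """Mirror an image left to right."""
    return [row[::-1] for row in image]


def hflip_box(box, width):
    """Mirror an (x1, y1, x2, y2) bounding box to match hflip on an image of this width."""
    x1, y1, x2, y2 = box
    return (width - x2, y1, width - x1, y2)


def rotate90(image):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise and clamp the result to the 8-bit range."""
    return [
        [min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in row]
        for row in image
    ]


def augment(image, boxes, rng, p_flip=0.5, noise_sigma=5.0):
    """Randomly flip (updating boxes to match), then add sensor-like noise."""
    width = len(image[0])
    if rng.random() < p_flip:
        image = hflip(image)
        boxes = [hflip_box(b, width) for b in boxes]
    return add_gaussian_noise(image, noise_sigma, rng), boxes


rng = random.Random(42)
img = [[10, 20, 30], [40, 50, 60]]
aug_img, aug_boxes = augment(img, [(0, 0, 1, 2)], rng)
```

For forward-facing road scenes, large rotations such as 90 degrees are rarely realistic; small-angle rotations with interpolation are more typical, and a horizontal flip may also require swapping left/right semantics such as lane identities.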

Network Architectures and Autonomous Features in ADAS

Training process

The training process in ADAS uses an optimization algorithm, such as stochastic gradient descent (SGD) or Adam, to minimize a loss function that measures prediction error, for example cross-entropy for classification tasks. By iteratively adjusting the model's parameters to reduce this loss, the model learns from data and improves its ability to make accurate decisions in real-world driving scenarios, enhancing the overall effectiveness of the ADAS.
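
As a minimal sketch of this optimization loop, the code below trains a logistic-regression classifier with mini-batch stochastic gradient descent on binary cross-entropy loss. It stands in for a deep network; the toy features (normalized distance to the lead vehicle and closing speed) and the "brake" label are hypothetical, and frameworks such as TensorFlow or PyTorch compute these gradients automatically.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def bce_loss(w, b, data):
    """Mean binary cross-entropy over (features, label) pairs."""
    eps = 1e-12  # avoids log(0)
    total = 0.0
    for x, y in data:
        p = predict(w, b, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def train_sgd(data, lr=0.5, epochs=200, batch_size=4, seed=0):
    """Mini-batch SGD: shuffle, then step against the averaged gradient of each batch."""
    rng = random.Random(seed)
    data = list(data)
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad_w, grad_b = [0.0] * len(w), 0.0
            for x, y in batch:
                err = predict(w, b, x) - y  # dLoss/dlogit for sigmoid + BCE
                grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
                grad_b += err
            w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
            b -= lr * grad_b / len(batch)
    return w, b


# Hypothetical samples: ((distance, closing_speed), should_brake)
data = [((0.9, 0.1), 0), ((0.8, 0.2), 0), ((0.7, 0.1), 0), ((0.6, 0.3), 0),
        ((0.2, 0.9), 1), ((0.1, 0.8), 1), ((0.3, 0.7), 1), ((0.2, 0.6), 1)]
w, b = train_sgd(data)
print(f"loss before: {bce_loss([0.0, 0.0], 0.0, data):.3f}, after: {bce_loss(w, b, data):.3f}")
```

The gradient of cross-entropy with respect to the logit simplifies to `p - y`, which is why the update needs no explicit derivative of the sigmoid.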

Object detection and tracking

Object detection and tracking is also a crucial step in ADAS, as it enables systems to detect driving lanes and pedestrians to improve road safety. There are several techniques for object detection in ADAS; popular deep learning-based approaches include Region-based Convolutional Neural Networks (R-CNN), the Single Shot MultiBox Detector (SSD), and You Only Look Once (YOLO).
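
Detectors such as SSD and YOLO typically emit many overlapping candidate boxes, which are commonly pruned with non-maximum suppression (NMS); tracking then links detections across frames. The sketch below shows greedy NMS and a simple greedy IoU-based frame-to-frame association. It is a simplification under stated assumptions: boxes are `(x1, y1, x2, y2)`, the function names are hypothetical, and production trackers such as SORT add motion prediction (a Kalman filter) and optimal (Hungarian) matching.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap it, repeat.

    Returns the indices of the kept boxes, highest score first.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


def associate(tracks, detections, iou_threshold=0.3):
    """Greedily match existing tracks to new detections by descending IoU.

    tracks maps track_id -> box from the previous frame. Returns a dict
    track_id -> detection index; unmatched detections could start new tracks.
    """
    candidates = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        reverse=True,
    )
    matches, used_dets = {}, set()
    for score, tid, j in candidates:
        if score < iou_threshold:
            break
        if tid in matches or j in used_dets:
            continue
        matches[tid] = j
        used_dets.add(j)
    return matches


# Two overlapping candidates for one pedestrian plus a separate vehicle.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]
kept = non_max_suppression(boxes, scores)
print(kept)  # [0, 2]

tracks = {7: (0, 0, 10, 10), 8: (50, 50, 60, 60)}
print(associate(tracks, [(51, 51, 61, 61), (1, 0, 11, 10)]))  # {7: 1, 8: 0}
```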

Deployment

Deploying deep learning models in ADAS requires ensuring that the trained models are compatible with the vehicle's hardware, such as an onboard computer or specialized processors, and adapting them so they run seamlessly within the existing hardware architecture. The models must also be integrated into the vehicle's software stack so they can communicate with other software modules and sensors. Once deployed, they process real-time data from sources such as cameras, LiDAR, radar, and ultrasonic sensors, detecting objects, identifying lane markings, and making driving-related decisions based on their interpretations. This real-time processing is crucial for providing timely warnings and assisting drivers in critical situations.
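
One common way to adapt a trained model to an onboard processor, not detailed in the article, is post-training int8 quantization, which many automotive accelerators and toolchains (for example TensorFlow Lite and TensorRT) support. The sketch below shows symmetric per-tensor quantization and an integer dot product; the function names are hypothetical, and real toolchains also calibrate activation ranges and often quantize per channel.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: values approximately equal q * scale, q in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    return [v * scale for v in q]


def int8_dot(qa, scale_a, qb, scale_b):
    """Dot product using integer multiply-accumulate, rescaled to float once at the end."""
    acc = sum(x * y for x, y in zip(qa, qb))  # pure integer arithmetic
    return acc * scale_a * scale_b


weights = [0.5, -1.0, 0.25]
activations = [0.2, 0.4, -0.8]
qw, sw = quantize_int8(weights)
qx, sx = quantize_int8(activations)
exact = sum(w * x for w, x in zip(weights, activations))
approx = int8_dot(qw, sw, qx, sx)
print(qw, f"{exact:.4f} vs {approx:.4f}")
```

Keeping the accumulation in integers is what lets fixed-point NPUs run the layer cheaply; the small numerical error is the price of that efficiency and is normally checked against the float model before deployment.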

Continuous learning and updating

- Online learning: The ADAS system can be designed to continually learn and update the deep learning models based on new data and experiences. This involves incorporating mechanisms to adapt the models to changing driving conditions, new scenarios, and evolving safety requirements.

- Data collection and annotation: Continuous learning requires the collection of new data and annotations to train updated models. This may involve data acquisition from various sensors, manual annotation or labeling of the collected data, and updating the training pipeline accordingly.

- Model re-training and fine-tuning: When new data is collected, the existing deep learning models can be re-trained or fine-tuned using the new data to adapt to emerging patterns or changes in the driving environment.
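
The re-training and fine-tuning step can be sketched as updating only a model's final layer (its "head") on newly collected, labelled samples while the pretrained feature extractor stays frozen, one sample at a time as in online learning. Everything here is a hypothetical stand-in: `frozen_backbone` replaces a real pretrained CNN, and the two-feature samples are illustrative. In practice an updated model would typically be re-validated before it replaces the deployed one.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def frozen_backbone(x):
    """Hypothetical stand-in for a pretrained feature extractor; never updated."""
    return [x[0] - x[1], x[0] * x[1], 1.0]  # last feature acts as a bias term


def head_predict(head, x):
    return sigmoid(sum(h * f for h, f in zip(head, frozen_backbone(x))))


def fine_tune_head(head, new_data, lr=0.1, epochs=50, seed=0):
    """Online SGD on the head weights only, one newly labelled sample at a time."""
    rng = random.Random(seed)
    head = list(head)  # work on a copy; the deployed head stays unchanged
    data = list(new_data)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            feats = frozen_backbone(x)
            err = head_predict(head, x) - y
            head = [h - lr * err * f for h, f in zip(head, feats)]
    return head


deployed_head = [0.0, 0.0, 0.0]
new_samples = [((0.9, 0.1), 1), ((0.8, 0.3), 1), ((0.1, 0.9), 0), ((0.2, 0.7), 0)]
updated_head = fine_tune_head(deployed_head, new_samples)
```

Freezing the backbone keeps the update cheap and limits how far the model can drift from its validated behavior, at the cost of not adapting the learned features themselves.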

Now let us look at the tools, frameworks, and libraries commonly used in ADAS development.

- TensorFlow: An open-source deep learning framework developed by Google. It provides a comprehensive ecosystem for building and training neural networks, including tools for data pre-processing, network construction, and model deployment.

- PyTorch: Another widely used open-source deep learning framework that offers dynamic computational graphs, making it well suited to research and prototyping. It provides a range of tools and utilities for building and training deep learning models.

- Keras: A high-level deep learning library that runs on top of TensorFlow (Keras 3 can also run on JAX or PyTorch). It offers a user-friendly interface for building and training neural networks, making it accessible to beginners and well suited to rapid prototyping.

- Caffe: A deep learning framework specifically designed for speed and efficiency, often used for real-time applications in ADAS. It provides a rich set of pre-trained models and tools for model deployment.

- OpenCV: A popular computer vision library that offers a wide range of image and video processing functions. It is frequently used for pre-processing sensor data, performing image transformations, and implementing computer vision algorithms in ADAS applications.

To summarize, the integration of deep learning techniques into ADAS systems empowers them to analyze and interpret real-time data from various sensors, enabling accurate object detection, collision prediction, and proactive decision-making. This ultimately contributes to safer and more advanced driving assistance capabilities.

About the Author

Ambuj Nandanwar is a marketing professional at Softnautics, a MosChip Company, where he creates impactful techno-commercial write-ups and conducts extensive market research to promote businesses on various platforms. He has been a passionate marketer for more than two years and is constantly looking for new endeavors to take on. When he’s not working, Ambuj can be found riding his bike or exploring new destinations.
