BittWare Selects EdgeCortix's SAKURA-I AI Processors as its Edge-Focused Artificial Intelligence Acceleration Solution

TOKYO, June 13, 2023 -- EdgeCortix® Inc., the innovative Edge Artificial Intelligence (AI) Platform company focused on delivering class-leading compute efficiency and ultra-low latency for AI inference, announced that BittWare, a Molex Company, has selected EdgeCortix’s SAKURA-I, a best-in-class, energy-efficient AI co-processor, as its edge inference solution.

EdgeCortix founder and CEO Dr. Sakyasingha Dasgupta poses for a picture with their latest SAKURA AI processor-enabled machine learning system for power-efficient inference at the edge, from vision to generative AI applications.

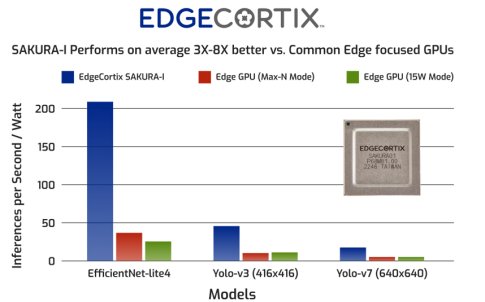

“We are pleased to have BittWare bringing our SAKURA-I AI chip to market as part of their new artificial intelligence and machine learning inference acceleration program,” said Sakyasingha Dasgupta, Founder and CEO of EdgeCortix. “Together with BittWare, we are delivering a complete edge AI acceleration solution, for systems ranging from small-form-factor computer boxes to edge inference servers, that can be used as a 'drop-in', highly efficient alternative to common power-hungry GPUs. From a performance perspective, BittWare customers can look forward to SAKURA-I delivering exceptionally low inference latency and high data throughput, while consuming under 10 Watts of power and delivering up to an 8X performance improvement versus leading GPUs. We are excited to be making our solution available at scale through BittWare.”

“For over thirty years, the BittWare brand has been trusted to bring the best acceleration technology to market. Extending our offerings into ASIC-based acceleration solutions optimized for AI inference is a natural step for both our business and our brand,” said Craig Petrie, Vice President, BittWare. “Customers have already identified a broad range of opportunities for this solution, from surveillance and security applications, to quality assurance on the manufacturing floor, to data closets inside office buildings. These extensive edge use cases are expected to benefit tremendously from SAKURA-I’s capabilities. We are delighted to be partnering with EdgeCortix to bring their edge-focused SAKURA-I acceleration solution to market.”

The SAKURA-I AI co-processor is an AI-focused accelerator device built on TSMC's 12nm FinFET process, designed for rapid computation and power-efficient inference in small to medium form-factor systems. SAKURA-I is especially suited to real-time applications with streaming data, such as high-resolution camera and lidar data, real-time controls for autonomous systems, radio frequency (RF) signal processing, generative AI applications and natural language processing, as well as forthcoming 5G-AI integrated systems.

The SAKURA-I co-processor delivers industry-leading AI inference capabilities across power- and mobility-sensitive edge applications, including aerial, underwater or ground-based platforms (autonomous or manned), smart city, smart manufacturing, security and surveillance, combined visual and text/speech (multi-modal) systems, generative AI systems, real-time defense applications and next-generation AI-5G integrated systems. The processor is specifically optimized for inference on real-time, streaming, high-resolution data. It supports mixed numerical precision for more accurate results and can run latest-generation AI models such as the Transformers used in large language models and generative AI applications.

SAKURA-I is powered by the Dynamic Neural Accelerator® (DNA), a computing engine that delivers up to 40 trillion operations per second (TOPS). DNA is EdgeCortix’s proprietary neural processing engine, with a built-in runtime-reconfigurable data path that connects all compute engines together to reduce power and increase compute utilization. DNA enables the SAKURA-I AI co-processor to run multiple and multi-modal deep neural network models at the same time with ultra-low latency, while preserving exceptional TOPS utilization and low power consumption, a key market differentiator.

EdgeCortix SAKURA-I was benchmarked against a leading edge-focused GPU platform under different power modes. The edge GPU is expected to be a TSMC 7nm device, while SAKURA-I is a TSMC 12nm device. End-to-end latency was measured at batch size 1 in all cases. All models were deployed at INT8 without any pruning. Models measured on the edge GPU were compiled and optimized with the vendor's latest tools. All models measured on SAKURA-I were compiled and deployed on an early-access SAKURA board using EdgeCortix MERA v1.4 software.

SAKURA-I Edge AI Processor (Production Version) Overview:

- Up to 40 TOPS (INT8 single chip) and 20 TFLOPS (BF16 single chip). Multiple chips can be combined on a single board.

- Real-time, low-latency inference with both generative and non-generative machine learning models including convolutional and transformer based neural networks.

- Powered by EdgeCortix's patented run-time reconfigurable data path, the Dynamic Neural Accelerator IP.

- On-chip dual 64-bit LPDDR4x – up to 16 GB on single board.

- PCIe Gen 3 up to 16 GB/s bandwidth.

- SAKURA board TDP of 10W-15W, depending on form factor.

- Dynamic power while running most modern neural network models on chip ranges from ~1W to 5W.
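
As a rough, back-of-envelope reading of the figures above, the sketch below derives a peak efficiency bound from the quoted 40 TOPS and the 10W-15W board TDP, and checks the quoted PCIe Gen 3 bandwidth against the standard per-lane rate. The function names are illustrative, and the x16 link width is an assumption: the overview states only "up to 16 GB/s", which matches the nominal figure for a 16-lane Gen 3 link. Peak TOPS divided by TDP is an upper bound, not a measured result; real workloads depend on utilization.

```python
# Figures quoted in the overview above.
PEAK_INT8_TOPS = 40.0            # up to 40 TOPS, INT8, single chip
BOARD_TDP_WATTS = (10.0, 15.0)   # board TDP range, depends on form factor


def peak_tops_per_watt(tops: float, watts: float) -> float:
    """Upper-bound efficiency: peak throughput divided by a power budget."""
    return tops / watts


def pcie_gen3_gbytes_per_s(lanes: int) -> float:
    """One-direction PCIe Gen 3 bandwidth in GB/s.

    Gen 3 signals at 8 GT/s per lane with 128b/130b encoding;
    dividing by 8 converts gigabits to gigabytes.
    """
    return 8.0 * lanes * (128.0 / 130.0) / 8.0


low_tdp, high_tdp = BOARD_TDP_WATTS
eff_at_high_tdp = peak_tops_per_watt(PEAK_INT8_TOPS, high_tdp)
eff_at_low_tdp = peak_tops_per_watt(PEAK_INT8_TOPS, low_tdp)

# Assumption: a x16 link (the lane count is not stated in the overview).
x16_bandwidth = pcie_gen3_gbytes_per_s(16)

print(f"Peak efficiency bound: {eff_at_high_tdp:.2f} to {eff_at_low_tdp:.2f} TOPS/W")
print(f"PCIe Gen 3 x16, one direction: {x16_bandwidth:.2f} GB/s")
```

Under these assumptions the effective x16 rate comes out slightly below the nominal 16 GB/s because of the 128b/130b encoding overhead.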

About EdgeCortix Inc.

EdgeCortix is an Edge Artificial Intelligence (AI) Platform-as-a-Service company, pioneering the future of the connected intelligent edge. It was founded in 2019 with the radical idea of taking a software-first approach while designing an AI-specific, runtime-reconfigurable processor from the ground up, using a technique called "hardware & software co-exploration". Targeting advanced computer vision applications first, and using proprietary hardware and software IP on existing processors such as FPGAs and custom-designed ASICs, the company is geared towards positively disrupting the rapidly growing AI hardware space across defense, aerospace, smart cities, industry 4.0, autonomous vehicles and robotics.

For more details or to schedule a demonstration, contact: info@edgecortix.com
