Rethinking AI Infrastructure: The Rise of PCIe Switches

Boring? Think Again. PCIe Switches Are the Hidden Power Behind AI

When you think of AI, images of futuristic robots or self-driving cars may come to mind. What probably does not come to mind is the unsung hardware that quietly enables such complex systems. Among these components, PCI Express (PCIe) switches might seem like a boring topic to write about, let alone read about. But here's the twist: they are central to running AI workloads. Far from being just a functional piece of hardware, PCIe switches are part of the invisible foundation that accelerates data movement, relieves bottlenecks, and helps modern AI systems run at high speed.

Understanding PCIe Switches: Structure, Capabilities, and Topology

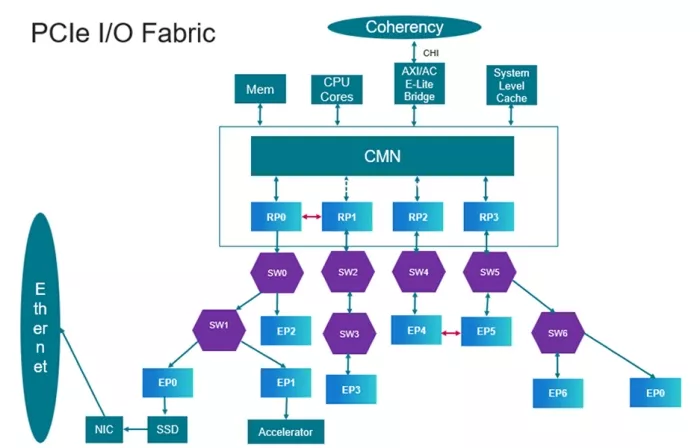

PCIe switches are intelligent, multi-port devices that serve as the backbone of scalable, high-performance computing systems. Architecturally, a PCIe switch has one upstream port, connected to the root port, and multiple downstream ports, connected to endpoints such as GPUs, SSDs, or FPGAs. Internally, it features a non-blocking crossbar switch fabric, routing logic, a non-transparent bridge (NTB) with a cross-link feature for inter-domain communication, and telemetry.

What makes PCIe switches indispensable in AI and data center environments is their ability to scale connectivity and bandwidth beyond what a CPU's root complex can offer. For example, modern PCIe switches enable direct peer-to-peer communication between endpoints—critical for multi-GPU AI training workloads where accelerators must exchange large datasets without CPU intervention.

PCIe Topologies: How Switches Fit In

PCIe topologies define how devices are interconnected in a system. The most common topologies include:

- Tree Topology: A single root port connects to multiple endpoints through one or more switches. This is the most common layout in servers and workstations.

- Multi-Root Topology: Multiple root complexes share access to a common set of endpoints via a switch that supports NTB. This is used in high-availability or multi-host systems.

PCIe switches enable these topologies by acting as the central hub that routes traffic efficiently between devices, allowing for flexible system design and expansion.
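As a rough illustration of the tree topology, the sketch below models a hierarchy as parent and child links and traces the devices a transaction passes through. The `Node` class, the `route` helper, and the device names are hypothetical and exist only for this example. The model assumes peer traffic turns around at the lowest switch shared by both endpoints, and it ignores any system configuration that would redirect peer traffic upstream.

```python
class Node:
    """One device in a simplified PCIe tree (illustrative model, not a real API)."""

    def __init__(self, name, kind):
        self.name = name
        self.kind = kind          # "root", "switch", or "endpoint"
        self.parent = None
        self.children = []

    def attach(self, child):
        """Connect `child` below this device and return it."""
        child.parent = self
        self.children.append(child)
        return child


def path_to_root(node):
    """List of devices from `node` up to the top of the hierarchy."""
    path = []
    while node is not None:
        path.append(node)
        node = node.parent
    return path


def route(src, dst):
    """Names of the devices visited going from `src` to `dst`.

    Traffic climbs from `src` to the lowest device that is also above
    `dst`, then descends to `dst`.
    """
    up = path_to_root(src)
    down = path_to_root(dst)
    down_ids = {id(n) for n in down}
    # Index of the first device on the way up that also sits above dst.
    i = next(k for k, n in enumerate(up) if id(n) in down_ids)
    turn = up[i]
    j = next(k for k, n in enumerate(down) if n is turn)
    return [n.name for n in up[: i + 1]] + [n.name for n in reversed(down[:j])]


# Hypothetical system: one root port, two cascaded switches, three endpoints.
root = Node("root_port", "root")
switch0 = root.attach(Node("switch0", "switch"))
gpu0 = switch0.attach(Node("gpu0", "endpoint"))
gpu1 = switch0.attach(Node("gpu1", "endpoint"))
switch1 = switch0.attach(Node("switch1", "switch"))
ssd0 = switch1.attach(Node("ssd0", "endpoint"))

print(route(gpu0, gpu1))  # ['gpu0', 'switch0', 'gpu1']
print(route(gpu0, ssd0))  # ['gpu0', 'switch0', 'switch1', 'ssd0']
print(route(gpu0, root))  # ['gpu0', 'switch0', 'root_port']
```

In this model, the two GPUs exchange data through `switch0` alone, and the root port appears on the path only when it is the destination.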

PCIe Switch vs. Root Port vs. Endpoint

- Root Port: The origin of the PCIe hierarchy, typically integrated into the CPU or chipset. It initiates transactions and manages the configuration and control of downstream devices. Without a PCIe switch, however, a root port can connect to only a single endpoint device.

- Endpoint: These are the devices that consume or produce data—such as GPUs, SSDs, or NICs. They are the leaf nodes in the PCIe topology and rely on the root port or switch to communicate with other devices.

- PCIe Switch: Positioned between the root port and endpoints, the switch enables multiple endpoints or other PCIe switches to communicate with the root port and with each other. Unlike a root port, it does not initiate transactions but facilitates them. Unlike an endpoint, it does not consume or produce data but routes it efficiently. A PCIe switch contains one upstream port, which connects to the root port or to a downstream port of another switch, and one or more downstream ports, which connect to endpoint devices or to other PCIe switches, enabling fabric expansion.
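To make the fabric expansion concrete, here is a minimal sketch of the arithmetic. It assumes identical switches cascaded in levels, with every downstream port on the last level connected to an endpoint. The function names and the port counts in the example are hypothetical.

```python
def max_endpoints(downstream_ports: int, switch_levels: int) -> int:
    """Endpoints reachable from one root port through cascaded identical switches.

    With zero switch levels the root port reaches exactly one endpoint.
    """
    if downstream_ports < 1 or switch_levels < 0:
        raise ValueError("need at least one downstream port and a non-negative level count")
    return downstream_ports ** switch_levels


def oversubscription(upstream_lanes: int, downstream_lanes: list[int]) -> float:
    """Ratio of total downstream lanes to upstream lanes on one switch."""
    if upstream_lanes < 1:
        raise ValueError("upstream link needs at least one lane")
    return sum(downstream_lanes) / upstream_lanes


print(max_endpoints(4, 0))  # 1  (root port alone)
print(max_endpoints(4, 1))  # 4  (one switch with four downstream ports)
print(max_endpoints(4, 2))  # 16 (two levels of such switches)

# One x16 upstream link feeding four x16 downstream links.
print(oversubscription(16, [16, 16, 16, 16]))  # 4.0
```

A ratio above 1 only constrains traffic that has to cross the upstream link. Transfers between endpoints under the same switch do not use that link.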

Key Capabilities of PCIe Switches

- Peer-to-Peer Transfers: Allow endpoints to communicate directly, bypassing the CPU.

- Lane Bifurcation: Dynamically splits an x16 link into narrower links (e.g., four x4 links) to optimize bandwidth allocation.

- Bandwidth Allocation: Adjusts how bandwidth is distributed based on traffic demand, congestion, and priority.

- CXL Compatibility: Supports memory pooling and coherent memory sharing in AI workloads.

- Integrated Retimers: Maintain signal integrity at high speeds (up to 64 GT/s in PCIe 6.0).

- Telemetry and Diagnostics: Monitor link health and performance in real time.

- Low Latency: PCIe switches add very little latency, which makes them better suited to internal system communication than Ethernet. Typical latency ranges from 1 to 5 microseconds, whereas VLAN-based Ethernet communication typically incurs between 10 and 100 microseconds. This difference makes the PCIe switch an attractive solution for fabric expansion in high-performance systems.
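The lane bifurcation item can be worked through with simple arithmetic. The sketch below treats the transfer rate as raw bits per second per lane, in one direction, and ignores encoding and protocol overhead, so real throughput is lower. The helper names are hypothetical. Only the 64 GT/s figure for PCIe 6.0 comes from the list above; the rates for the earlier generations are added here for comparison.

```python
# Raw per-lane transfer rate in GT/s for a few PCIe generations.
RAW_GT_PER_LANE = {"PCIe 4.0": 16, "PCIe 5.0": 32, "PCIe 6.0": 64}

VALID_WIDTHS = (1, 2, 4, 8, 16)


def raw_gbytes_per_s(generation: str, lanes: int) -> float:
    """Raw one-direction bandwidth in GB/s, ignoring all overhead."""
    return RAW_GT_PER_LANE[generation] * lanes / 8


def bifurcate(total_lanes: int, parts: int) -> list[int]:
    """Split a link into `parts` equal narrower links, e.g. x16 into four x4."""
    if parts < 1 or total_lanes % parts != 0:
        raise ValueError("lanes must divide evenly among the parts")
    width = total_lanes // parts
    if width not in VALID_WIDTHS:
        raise ValueError(f"x{width} is not a supported link width in this sketch")
    return [width] * parts


links = bifurcate(16, 4)
print(links)                                  # [4, 4, 4, 4]
print(raw_gbytes_per_s("PCIe 6.0", 16))       # 128.0
print(raw_gbytes_per_s("PCIe 6.0", links[0])) # 32.0
```

Bifurcation leaves the total raw bandwidth unchanged: four x4 links at 32 GB/s each add up to the 128 GB/s of the original x16 link. The benefit is that four devices can each get a dedicated link.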

In short, while root ports and endpoints are fixed-function roles in a PCIe topology, the switch is the dynamic enabler that brings scalability, flexibility, and performance optimization with minimal latency. This capability is especially critical in AI-driven systems, where efficient data movement is key to overcoming bottlenecks.
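To put the latency figures quoted in the capability list into perspective, the sketch below compares the two ranges and adds up the cost of many small, strictly sequential exchanges. The exchange count is an arbitrary, hypothetical workload. The calculation counts latency only, ignoring transfer time and any overlap between exchanges.

```python
# Latency ranges in microseconds, taken from the figures quoted in the article.
PCIE_SWITCH_US = (1.0, 5.0)
ETHERNET_US = (10.0, 100.0)


def total_time_ms(latency_us: float, exchanges: int) -> float:
    """Total latency in milliseconds for strictly sequential exchanges."""
    return latency_us * exchanges / 1000.0


def ratio_range(fast: tuple[float, float], slow: tuple[float, float]) -> tuple[float, float]:
    """Smallest and largest slow/fast latency ratio across the two ranges."""
    return (slow[0] / fast[1], slow[1] / fast[0])


print(ratio_range(PCIE_SWITCH_US, ETHERNET_US))   # (2.0, 100.0)

# Hypothetical workload: 10,000 dependent exchanges, worst case of each range.
print(total_time_ms(PCIE_SWITCH_US[1], 10_000))   # 50.0
print(total_time_ms(ETHERNET_US[1], 10_000))      # 1000.0
```

Under these assumptions the gap ranges from 2x to 100x, depending on where in each range a given system falls.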

Why Are PCIe Switches Critical to AI?

By nature, AI systems are built to process immense streams of data, whether for training machine learning models, running neural networks, or performing real-time analysis. To do this, processors such as CPUs, GPUs, and FPGAs must exchange data at very high speeds. PCIe makes that possible by providing a high-speed, low-latency communication protocol. Yet, as AI applications demand more hardware integration and higher data throughput, standard point-to-point PCIe links alone can't keep up. This is where PCIe switches shine: they deliver scalable, dynamic connectivity that quietly powers the performance of AI behind the scenes.

Unlike traditional switches, PCIe switches were designed to meet the unique demands of complex systems. These switches manage data flow between components, balance workloads, and ensure seamless communication, which allows AI systems to perform reliably under increasingly demanding workloads. What used to be a niche component is now something that industries can't build AI systems without.

Rewriting the Narrative on PCIe Switches

PCIe switches might not be as glamorous as shiny new AI algorithms or groundbreaking robots, but they do more heavy lifting than you might think. They've quietly become the hidden drivers behind AI transformations in areas like autonomous vehicles, healthcare diagnostics, and edge computing. The next time you hear "PCIe switches," don't think boring—think of them as quietly powering the greatest advances in AI, making them anything but ordinary.

Cadence delivers a robust PCIe switch with upstream and downstream ports, streamlining even the most complex serial link and protocol layer requirements. Our switch port feature abstracts the intricacies of the PHY, data link, and transaction layers, freeing designers to focus on innovating at the application layer of their SoC fabric. This includes integration of endpoints, additional switches, and peer-to-peer (P2P) interconnects. Whether you're architecting a dense interconnect topology or scaling PCIe connectivity beyond your SoC boundary, Cadence simplifies the heavy lifting, enabling agility, efficiency, and advanced design flexibility.

Interested in PCIe upstream and downstream switch capabilities? Connect with the Cadence Sales Team to explore detailed specifications and integration support tailored to your project.
