How to Solve the Size, Weight, Power and Cooling Challenge in Radar & Radio Frequency Modulation Classification

Modern radar and Radio Frequency (RF) signal processing systems, especially those deployed on platforms like drones, CubeSats, and portable systems, are increasingly limited by strict Size, Weight, Power and Cooling (SWaP-Cool) constraints. These environments demand real-time performance and efficient computation, yet many conventional algorithms are too resource-intensive to operate within such tight margins. As the need for intelligent signal interpretation at the edge grows, it becomes essential to identify processing methods that deliver high accuracy within these constraints.

One such essential task in radar and RF applications is Automatic Modulation Classification (AMC). AMC enables systems to autonomously recognize the modulation type of incoming signals without prior coordination, a function crucial for dynamic spectrum access, electronic warfare, and cognitive radar systems. However, many existing AI-based AMC models, such as deep CNNs or hybrid ensembles, are computationally heavy and ill-suited for low-SWaP-Cool deployment, creating a pressing gap between performance needs and implementation feasibility.

In this post, we’ll show how BrainChip’s Temporal Event-Based Neural Network (TENN), a state-space model, overcomes this challenge. You’ll learn why conventional models fall short in AMC tasks and how TENN enables efficient, accurate, low-latency classification, even in noisy RF environments.

Why Traditional AMC Models Fall Short at the Edge

AMC is essential for identifying unknown or hostile signals, enabling cognitive electronic warfare, and managing spectrum access. But systems like UAVs, edge sensors, and small satellites can’t afford large models that eat power and memory.

Unfortunately, traditional deep learning architectures used for AMC come with real drawbacks:

- Hundreds of millions of Multiply-Accumulate (MAC) operations, which drive high power consumption, and large parameter counts, which demand large memory.

- Heavy preprocessing requirements (e.g., Fast Fourier Transforms (FFTs), spectrograms).

- Poor accuracy below 0 dB Signal-to-Noise Ratio (SNR), the point at which signal and noise have equal power.

In mobile, airborne, and space-constrained deployments, these inefficiencies are showstoppers.
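To make the 0 dB condition concrete, the sketch below scales complex Gaussian noise so that a toy QPSK sequence reaches a chosen SNR, then measures the result. It uses only the Python standard library. The helper names (`power`, `snr_db`, `awgn_for_snr`) and the toy signal are illustrative assumptions, not part of the original work.

```python
import math
import random

def power(x):
    """Average power of a complex sequence."""
    return sum(abs(v) ** 2 for v in x) / len(x)

def snr_db(signal, noise):
    """SNR in decibels: 10 * log10(P_signal / P_noise)."""
    return 10.0 * math.log10(power(signal) / power(noise))

def awgn_for_snr(signal, target_snr_db, rng):
    """Complex white Gaussian noise rescaled to hit target_snr_db."""
    raw = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in signal]
    wanted_power = power(signal) / (10.0 ** (target_snr_db / 10.0))
    scale = math.sqrt(wanted_power / power(raw))
    return [scale * n for n in raw]

rng = random.Random(0)
# Toy unit-power QPSK symbols (a stand-in for a real RF capture).
qpsk = [complex(rng.choice((-1, 1)), rng.choice((-1, 1))) / math.sqrt(2)
        for _ in range(1024)]
noise = awgn_for_snr(qpsk, 0.0, rng)
received = [s + n for s, n in zip(qpsk, noise)]

# At 0 dB the noise carries as much power as the signal itself.
print(abs(power(noise) - power(qpsk)) < 1e-9)
```

At 0 dB every received sample is, on average, half signal power and half noise power, which is why classifiers that work well at +10 dB can degrade sharply here.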

BrainChip’s TENN Model: A Low-SWaP-Cool Breakthrough for Real-Time RF Signal Processing

BrainChip’s TENN model provides a game-changing alternative. It replaces traditional CNNs with structured state-space layers and is specifically optimized for low-SWaP-Cool, high-performance RF signal processing.

State-Space Models (SSMs) propagate a compact hidden state forward in time, so they need only constant-size memory at every step. Modern SSM layers often recast this recurrent update as a convolution of the input with a small set of basis kernels generated by the recurrence. Inference-time efficiency therefore matches that of classic RNNs, but SSMs enjoy a major edge during training: like Transformers, they can be trained in parallel across the sequence (here via the convolutional form), which removes the strict step-by-step back-propagation bottleneck that slows RNN training. The result is a sequence model that is memory-frugal in deployment yet markedly faster to train than traditional RNNs, while still capturing long-range dependencies without the quadratic cost of Transformer attention.
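The equivalence between the recurrent and convolutional views can be checked on a toy example. The sketch below assumes a generic linear SSM with a diagonal state matrix, h_t = a * h_(t-1) + b * u_t and y_t = c . h_t. It is not TENN's actual layer, and the function names and coefficient values are hypothetical.

```python
def ssm_recurrent(a, b, c, u):
    """Streaming form: keeps only the fixed-size state h between steps."""
    h = [0.0] * len(a)
    outputs = []
    for u_t in u:
        h = [a_i * h_i + b_i * u_t for a_i, b_i, h_i in zip(a, b, h)]
        outputs.append(sum(c_i * h_i for c_i, h_i in zip(c, h)))
    return outputs

def ssm_kernel(a, b, c, length):
    """Unrolled recurrence as a kernel: K[k] = sum_i c_i * a_i**k * b_i."""
    return [sum(c_i * a_i ** k * b_i for a_i, b_i, c_i in zip(a, b, c))
            for k in range(length)]

def causal_conv(kernel, u):
    """y[t] = sum_{k=0..t} kernel[k] * u[t-k]; every t is independent."""
    return [sum(kernel[k] * u[t - k] for k in range(t + 1))
            for t in range(len(u))]

# Hypothetical coefficients for a 3-dimensional state.
a = [0.9, 0.5, -0.3]
b = [1.0, 0.5, 2.0]
c = [0.2, -1.0, 0.7]
u = [1.0, 0.0, -2.0, 0.5, 3.0, 1.5]

y_rec = ssm_recurrent(a, b, c, u)
y_conv = causal_conv(ssm_kernel(a, b, c, len(u)), u)
max_gap = max(abs(p - q) for p, q in zip(y_rec, y_conv))
print(max_gap < 1e-12)
```

The recurrent form suits deployment, since memory stays constant regardless of sequence length. The convolutional form suits training, since each output position can be computed without waiting for the previous one.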

TENN introduces the following innovations:

- Compact state-space modeling that simplifies modulation classification by reducing memory usage and computation, offering a leaner alternative to transformer-based models.

- Tensor contraction optimization that applies efficient contraction strategies to minimize memory footprint and computation while maximizing throughput.

- A hybrid SSM architecture that replaces CNN layers and avoids attention mechanisms, maintaining feature richness at lower computational cost.

- Real-time, low-latency inference that eliminates the need for FFTs or buffering at inference time.
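As a generic illustration of why contraction order matters (not the specific optimization used in TENN), consider the product A · B · X with A of shape m×k, B of shape k×n, and X of shape n×batch. The result is the same either way, but the MAC count depends on which pair is contracted first. The function names and sizes below are hypothetical.

```python
def matmul_macs(rows, inner, cols):
    """MACs for a dense (rows x inner) @ (inner x cols) product."""
    return rows * inner * cols

def cost_left_first(m, k, n, batch):
    """(A @ B) @ X: forms the full m x n matrix before touching X."""
    return matmul_macs(m, k, n) + matmul_macs(m, n, batch)

def cost_right_first(m, k, n, batch):
    """A @ (B @ X): only ever forms small intermediate results."""
    return matmul_macs(k, n, batch) + matmul_macs(m, k, batch)

m = k = n = 64
batch = 1
left = cost_left_first(m, k, n, batch)
right = cost_right_first(m, k, n, batch)
print(left, right, left / right)  # 266240 8192 32.5
```

With these sizes, contracting against the input first needs 32.5 times fewer MACs and avoids storing the 64×64 intermediate. Contraction-order planning generalizes this choice to products of many tensors.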

Matching Accuracy with a Fraction of the Compute

The Convolutional, Long Short-Term Memory, Deep Neural Network (CLDNN), introduced by O’Shea et al. (2018), was selected as the benchmark model for comparison with BrainChip’s TENN. Although the original RadioML paper did not use the CLDNN acronym, it proposed a hybrid architecture combining convolutional layers with LSTM and fully connected layers, an architecture that has since become widely referred to as CLDNN in the AMC literature.

This model was chosen as a reference because it comes from the foundational paper that introduced the RadioML dataset, making it a widely accepted standard for evaluation. As a hybrid of convolutional and LSTM layers, CLDNN offers a meaningful performance baseline by capturing both spectral and temporal features of the input signals from their In-phase (I) and Quadrature (Q) components, the I/Q representation used for complex signals in communication systems.
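For readers less familiar with I/Q data, the sketch below shows how a complex baseband signal maps to the two real-valued channels a classifier consumes. The 1024-sample frame length matches RadioML 2018.01A. The tone, its frequency, and the helper names are illustrative assumptions.

```python
import cmath
import math

def to_iq_channels(samples):
    """Split complex baseband samples into [I, Q] real-valued channels."""
    return [[s.real for s in samples], [s.imag for s in samples]]

def from_iq_channels(iq):
    """Rebuild the complex samples from the I and Q channels."""
    return [complex(i, q) for i, q in zip(iq[0], iq[1])]

frame_len = 1024  # samples per frame in RadioML 2018.01A
# Hypothetical test signal: a complex tone at 0.05 cycles per sample.
tone = [cmath.exp(2j * math.pi * 0.05 * t) for t in range(frame_len)]

iq = to_iq_channels(tone)
print(len(iq), len(iq[0]))  # 2 1024
```

The split is lossless: `from_iq_channels(iq)` returns the original complex samples, so a model fed raw I/Q sees the full signal without any FFT or spectrogram step.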

While more recent models like the Mixture-of-Experts AMC (MoE-AMC) have achieved state-of-the-art accuracy on the RadioML 2018.01A dataset, they rely on complex ensemble strategies involving multiple specialized networks, making them unsuitable for low-SWaP-Cool deployments due to their high computational and memory demands. In contrast, TENN matches or exceeds the accuracy of CLDNN at a fraction of the resource cost, delivering real-time, low-latency AMC with under 4 million MACs and without multi-model ensembles or hand-crafted features such as spectral preprocessing.

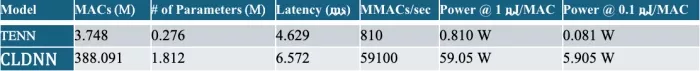

With just ~3.7 million MACs and 276K parameters, TENN is over 100x more efficient than CLDNN, while matching or exceeding its accuracy, even in low-SNR regimes. The latency reported in the table above is the simulated latency on an NVIDIA A30 GPU for both models.
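MAC and parameter totals like these are sums of simple per-layer formulas. The sketch below applies the usual counting convention to two hypothetical layers operating on a 2-channel, 1024-sample I/Q frame. The layer shapes are invented for illustration and do not describe TENN or CLDNN.

```python
def conv1d_cost(in_ch, out_ch, kernel, out_len, bias=True):
    """Return (MACs, parameters) for a dense 1D convolution."""
    params = out_ch * in_ch * kernel + (out_ch if bias else 0)
    macs = out_ch * in_ch * kernel * out_len  # one MAC per weight per output step
    return macs, params

def linear_cost(in_features, out_features, bias=True):
    """Return (MACs, parameters) for a fully connected layer."""
    params = in_features * out_features + (out_features if bias else 0)
    macs = in_features * out_features
    return macs, params

# Hypothetical toy network: one conv over the I/Q frame, then (after
# pooling over time) a 24-way classification head.
layers = [
    conv1d_cost(in_ch=2, out_ch=32, kernel=7, out_len=1024),
    linear_cost(in_features=32, out_features=24),
]
total_macs = sum(macs for macs, _ in layers)
total_params = sum(params for _, params in layers)
print(total_macs, total_params)  # 459520 1272
```

Convolution cost scales with sequence length while parameter count does not, so MACs and parameters have to be budgeted separately on a constrained device.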

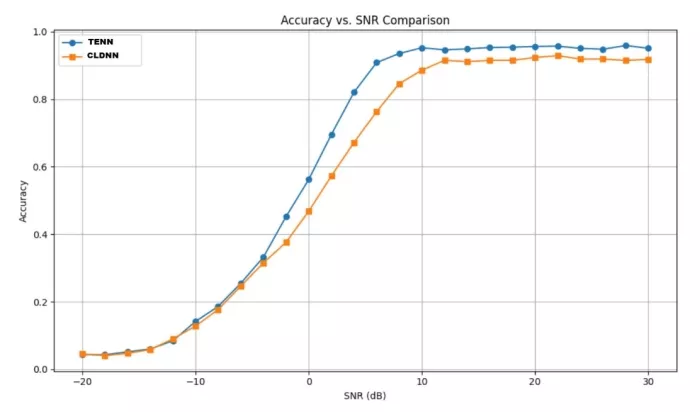

On the RadioML 2018.01A dataset (24 modulations, -20 to +30 dB SNR), TENN consistently outperforms CLDNN, especially in mid-to-high SNR scenarios. The figure above compares the accuracy of TENN and CLDNN over the full SNR range of -20 to +30 dB.
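Accuracy-versus-SNR comparisons of this kind are typically produced by grouping test predictions by their SNR label. The sketch below shows one way to do that. The function name and the three sample records are made-up placeholders, not results from either model.

```python
from collections import defaultdict

def accuracy_by_snr(records):
    """records: iterable of (snr_db, true_label, predicted_label)."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for snr, truth, pred in records:
        totals[snr] += 1
        hits[snr] += int(truth == pred)
    return {snr: hits[snr] / totals[snr] for snr in sorted(totals)}

# RadioML 2018.01A covers -20 dB to +30 dB in 2 dB steps.
snr_levels = list(range(-20, 31, 2))

# Placeholder predictions purely to exercise the function.
toy_records = [
    (-20, "QPSK", "BPSK"),
    (-20, "QPSK", "QPSK"),
    (10, "BPSK", "BPSK"),
]
print(len(snr_levels), accuracy_by_snr(toy_records))  # 26 {-20: 0.5, 10: 1.0}
```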

Ready to bring low SWaP-Cool AI to your RF platforms?

Today’s RF systems need fast, accurate signal classification that fits into small power and compute envelopes. CLDNN and similar models are simply too resource-intensive. With TENN, BrainChip offers a smarter, more scalable approach, one that’s purpose-built for edge intelligence.

By leveraging efficient state-space modeling, TENN delivers:

- Dramatically reduced latency, power consumption, and cooling requirements

- Robust accuracy across noisy environments

- Seamless deployment on real-time, mobile RF platforms

Whether you're deploying on a drone, CubeSat, or embedded system, TENN enables real-time AMC at the edge—without compromise.

Schedule a demo with our team to benchmark your modulation use cases on BrainChip’s event-driven AI platform and explore how TENN can be tailored to your RF edge deployment.

Tools and Resources Used

- Dataset: RadioML 2018.01A, a widely used AMC benchmark with roughly 2.5 million samples. DeepSig Inc., "Datasets," [Online]. Available: https://www.deepsig.io/datasets

- BrainChip Paper: Pei, Let SSMs be ConvNets: State-Space Modeling with Optimal Tensor Contractions. arXiv, 2025. Available: https://arxiv.org/pdf/2501.13230

- Reference Paper: O’Shea, T. J., Roy, T., & Clancy, T. C. (2018). Over-the-air deep learning based radio signal classification. IEEE Journal of Selected Topics in Signal Processing, 12(1), 168–179. Available: https://doi.org/10.1109/JSTSP.2018.2797022

- Framework: PyTorch was used to implement and train the TENN-based SSM classifier.

- Profiling: thop, a library for profiling PyTorch models, was used to count MACs and parameters.
