Akida in Space

As humanity pushes the boundaries of space exploration, the requirements for intelligent, autonomous systems capable of operating in harsh, resource-constrained environments have never been more challenging. Traditional AI accelerators, while powerful, often require significant energy and cooling—resources that are scarce aboard spacecraft and satellites.

This creates a critical demand for ultra-low-power solutions that can deliver real-time inference without compromising performance. Enter Akida from BrainChip—a neuromorphic processor designed to mimic the efficiency of the human brain, offering a new compute paradigm for AI applications in space.

In this post, we’ll explore how Akida’s unique architecture addresses the energy and latency challenges of space-based AI, and why it’s poised to become a game-changer for edge intelligence beyond Earth.

Unlocking Hidden Efficiency: Sparsity and the Power of the Akida Architecture

One of the standout features of the Akida architecture is its smart use of sparsity in neural network models and streaming data. Instead of following the traditional exhaustive compute approach that processes every single data point, whether it holds valuable information or not, Akida selectively computes only the data that is likely to affect the output. It skips over zero activations and triggers computation only where it’s needed.
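To make the idea of skipping zero activations concrete, here is a minimal Python sketch. It is not Akida code and says nothing about how the hardware is implemented; the function names and the toy values are assumptions chosen purely for illustration. It compares an exhaustive dot product with one that only does work for non-zero inputs.

```python
def dense_dot(activations, weights):
    """Exhaustive approach: one multiply-accumulate per input, zero or not."""
    total, macs = 0.0, 0
    for a, w in zip(activations, weights):
        total += a * w
        macs += 1
    return total, macs


def event_based_dot(activations, weights):
    """Event-style approach: only non-zero activations trigger any work."""
    total, macs = 0.0, 0
    for a, w in zip(activations, weights):
        if a == 0:
            continue  # no event, so no computation
        total += a * w
        macs += 1
    return total, macs


# Toy values (illustrative only): 8 of the 10 activations are zero.
activations = [0.0, 0.0, 1.5, 0.0, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0]
weights = [0.3, -0.1, 0.5, 0.2, 0.9, -0.4, 0.25, 0.7, -0.6, 0.1]

dense_result, dense_macs = dense_dot(activations, weights)
event_result, event_macs = event_based_dot(activations, weights)
print(dense_result == event_result, dense_macs, event_macs)
```

Both functions return the same result, but the event-style version performs 2 multiply-accumulates instead of 10. The saving scales directly with the fraction of zeros in the input.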

This event-based processing isn’t just clever—it’s efficient. But it naturally raises a key question: is there actually enough sparsity in neural networks to make this worthwhile?

Over the past several years, I’ve had the opportunity to collaborate closely with developers across a range of industries—including some working on cutting-edge space applications. One preconception I often hear is that Akida event-based processing might only perform well when paired with event-based inputs.

While it’s true that this can be a great pairing (more on this below), it’s equally important to emphasize that mainstream models using standard inputs—like images or audio—also contain significant sparsity. Indeed, when we analyze sparsity in models, we find that once you’re past the first few layers, sparsity is determined much more by architecture and task than by input type.

Real-World Validation: Testing Akida in a Satellite Workflow

A great example of this can be found in a recent study on frame-based data that we did alongside Gregor Lenz from Neurobus [1]. The study tested Akida in a typical Earth Observation satellite workflow to show the throughput and energy requirements that could be expected for space-based detection, so that not every image has to be transmitted to the ground for subsequent processing.

One common element in these tasks is that objects of interest may only be present in a minority of images, and so an efficient system should perform low-cost filtering, if possible, prior to any more intensive analysis (or downlinking). So, we showed a very simple task, image classification (ship/no ship) on the Airbus Ship Detection dataset [2].

Figure 1: The Airbus Ship Detection dataset [2]. A minority of images (22%) contain one or more ships, and the task is to localize these, typically requiring processing of a relatively high-resolution image. However, many more images contain no ships: it is possible to perform ship/no-ship filtering very effectively at lower resolution. From Lenz & McLelland (2024).
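The filter-first workflow described above can be sketched in a few lines of Python. This is a toy illustration only: the function names are hypothetical, the "images" are stand-in records, and the filter is an idealized classifier that never makes mistakes, which no real model does. The 22% share of ship images is taken from the caption above.

```python
def run_pipeline(images, cheap_filter, expensive_stage):
    """Run expensive_stage only on images that pass cheap_filter."""
    results = []
    for image in images:
        if cheap_filter(image):
            results.append(expensive_stage(image))
    return results


# Toy stand-in data: 100 "images", 22 of which are marked as containing a ship.
images = [{"id": i, "has_ship": i < 22} for i in range(100)]


def idealized_filter(image):
    # Hypothetical perfect ship/no-ship classifier; a real model makes errors.
    return image["has_ship"]


def localize_ships(image):
    # Placeholder for high-resolution localization or downlinking.
    return image["id"]


kept = run_pipeline(images, idealized_filter, localize_ships)
fraction_kept = len(kept) / len(images)
print(f"{len(kept)} of {len(images)} images passed on ({fraction_kept:.0%})")
```

Under these idealized assumptions, the expensive stage runs on 22 images rather than 100. In practice the saving depends on the filter's error rates and on how conservatively its threshold is set.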

Especially noteworthy is that even with standard image inputs, the model delivered significant energy savings—all thanks to activation sparsity.

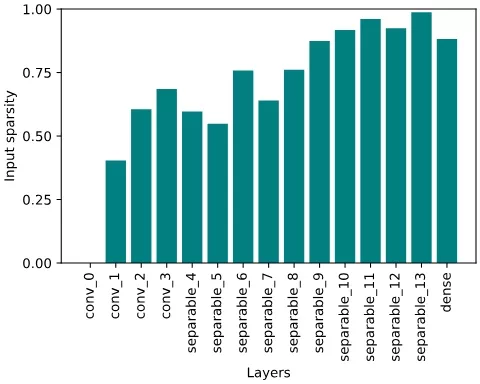

As shown in Figure 2, the input image itself is fully dense, meaning there’s zero sparsity at the first layer. But that changes quickly. Just a few layers into the network, sparsity climbs past 50%, and by the deeper layers, it often exceeds 90%. That’s a very typical picture for a standard CNN backbone. (A quick clarification on sparsity, because it’s a bit of a back-to-front measure: high sparsity is good, it means fewer non-zero values so less processing on Akida. 90% sparsity means that there are only 10% non-zeros to process).

Figure 2: Input sparsity per layer for a classification model (ship/no-ship) processing dense image inputs. Even in the early layers, sparsity is typically higher than 50%, and by the latter part of the model is often higher than 90%. Adapted from Lenz & McLelland (2024).
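For readers who want to check the sparsity definition on their own numbers, here is a minimal sketch. It assumes ReLU-style activations, which turn negative values into exact zeros; that is an assumption for illustration, not a statement about the model in the study, and the values are made up.

```python
def relu(values):
    """ReLU turns every negative pre-activation into an exact zero."""
    return [v if v > 0 else 0.0 for v in values]


def sparsity(values):
    """Fraction of entries that are exactly zero."""
    return sum(1 for v in values if v == 0) / len(values)


# Made-up pre-activation values for one layer (illustrative only).
pre_activations = [-1.2, 0.4, -0.3, -2.0, 0.0, 1.1, -0.7, -0.1, -0.9, -0.5]
layer_input = relu(pre_activations)

s = sparsity(layer_input)
print(f"sparsity = {s:.0%}, non-zeros to process = {1 - s:.0%}")
```

Here 8 of the 10 values are zero after ReLU, so sparsity is 80% and only 20% of the values need processing. Applying the same `sparsity` function to the input of each layer in turn gives a per-layer profile like the one in Figure 2.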

This level of sparsity allowed Akida to process large volumes of satellite imagery efficiently, at just over 5 mJ per image and a dynamic power of less than 0.5 W at 85 FPS. This level of performance enables systems to filter out irrelevant data before passing selected images on for further processing. A similar approach was outlined by Kadway et al. (2023) [3], where Akida was used to pre-filter satellite images based on cloud cover. The takeaway: high activation sparsity in models does not require event-based inputs.
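The energy and power figures quoted above can be cross-checked with simple arithmetic, since average power is energy per image multiplied by frame rate. The sketch below assumes the per-image energy is the dynamic energy covered by the power figure, and it rounds "just over 5 mJ" down to exactly 5 mJ, so the result is a lower bound on the power.

```python
energy_per_image_j = 5e-3   # "just over 5 mJ", rounded down to 5 mJ (assumption)
frames_per_second = 85

# Average power (W) = energy per image (J) * images per second (1/s)
power_w = energy_per_image_j * frames_per_second

# Largest per-image energy that still fits a 0.5 W budget at 85 FPS
max_energy_per_image_j = 0.5 / frames_per_second

print(f"power at 5 mJ/image: {power_w * 1000:.0f} mW")
print(f"energy budget at 0.5 W: {max_energy_per_image_j * 1000:.2f} mJ/image")
```

At exactly 5 mJ per image this gives 425 mW, and any per-image energy up to about 5.88 mJ stays under 0.5 W at 85 FPS. That agrees with the reported "just over 5 mJ" and "less than 0.5 W".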

The Natural Synergy Between Akida and Event-Based Sensors

While Akida delivers strong efficiency gains even with traditional inputs, it truly shines when paired with event-based sensors—a combination especially relevant in space applications. Dynamic Vision Sensors (DVS), for example, have garnered increasing interest for tasks ranging from spacecraft navigation and landing [4] to space situational awareness [5]. DVS sensors offer two standout advantages that make them particularly well-suited for space:

- Ultra-high temporal resolution: unlike traditional cameras that capture full frames at fixed intervals, DVS sensors operate asynchronously, recording changes in brightness at each pixel as they occur—often with microsecond precision. This allows them to capture fast-moving objects or sudden events, such as docking maneuvers or debris flybys, without motion blur or latency. In the vacuum of space, where objects can move at several kilometers per second, this responsiveness is critical for real-time perception and control.

- Exceptional dynamic range: with dynamic ranges exceeding 120 dB, DVS devices far surpass conventional image sensors. This means they can function effectively in extreme lighting conditions, such as when a spacecraft transitions from deep shadow into direct sunlight. In such scenarios, traditional cameras often saturate or lose detail, while a DVS continues to deliver usable data.
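The two properties above can be illustrated with a deliberately simplified Python sketch. It assumes the usual image-sensor convention that dynamic range in dB is 20·log10 of the intensity ratio, and it models event generation as a threshold on the change in log brightness between two snapshots. A real DVS works asynchronously per pixel rather than comparing frames, and the threshold value and function names here are hypothetical.

```python
import math


def db_to_ratio(db):
    """Convert a dynamic range in dB to an intensity ratio (20*log10 convention)."""
    return 10 ** (db / 20)


def events_between(prev_frame, next_frame, threshold=0.2):
    """Return (pixel_index, polarity) for pixels whose log brightness
    changed by more than `threshold` between the two snapshots."""
    events = []
    for i, (p, n) in enumerate(zip(prev_frame, next_frame)):
        delta = math.log(n) - math.log(p)
        if delta > threshold:
            events.append((i, +1))   # pixel got brighter
        elif delta < -threshold:
            events.append((i, -1))   # pixel got darker
    return events


# Toy brightness values (illustrative only): two of six pixels change.
prev_frame = [100.0, 100.0, 100.0, 100.0, 100.0, 100.0]
next_frame = [100.0, 150.0, 100.0, 60.0, 100.0, 100.0]

events = events_between(prev_frame, next_frame)
print(events)
print(f"120 dB corresponds to a ratio of about {db_to_ratio(120):.0e} : 1")
```

Only the two pixels that changed produce events; the four static pixels produce nothing. This is the spatial sparsity that event-based processing can exploit. Under the stated convention, 120 dB corresponds to a brightest-to-darkest intensity ratio of about a million to one.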

Making DVS Work for ML with Akida and TENNs

Despite these attractive features, DVS has faced barriers to adoption on the machine learning side.

On the one hand, the more strongly neuromorphic approaches (with fully asynchronous spiking neural networks, for example) can achieve theoretically impressive results, but they have less familiar training pipelines and often lack support on commercially available hardware that could exploit their advantages.

On the other hand, standard CNNs have proven perfectly capable of processing DVS inputs, but typical NPU hardware implementations are then unable to exploit the very high spatial sparsity of the data.

That’s where Akida offers a combination of benefits: the efficiency of event-based processing, built on familiar and mature ML training stacks for CNNs. Add support for our new TENNs models on Akida 2, which are intrinsically designed to handle spatiotemporal data and tasks and are therefore well suited to DVS, and you have a very strong match.

From Concept to Orbit: Akida Heads to Space with Frontgrade Gaisler

Akida’s capabilities are not just theoretical. Frontgrade Gaisler, a leader in radiation-hardened microprocessors for space, is integrating Akida IP alongside their NOEL-V RISC-V processor in the upcoming GR801 SoC, part of the new GRAIN product line. This space-grade chip is designed specifically for energy-efficient in-orbit AI, combining traditional and neuromorphic processing in a radiation-tolerant package.

Embedding Akida at the heart of this GRAIN architecture highlights its suitability for the harsh constraints of space missions and signals growing trust in neuromorphic computing as a cornerstone of next-gen space systems.

Looking Ahead

I’m incredibly excited about the work we are advancing in this domain. We look forward to the results as the event-based datasets continue to mature. Meanwhile, our recent state-of-the-art achievements in other areas [6] using these models leave me with no doubt about the enormous potential for Akida in future space applications.

If you’re exploring how to bring intelligent, low-power processing to your next project—whether in orbit or on Earth—we’d love to hear from you. Get in touch with the BrainChip team to learn more about how Akida can help you build smarter, more efficient AI at the edge.

Resources

[1] Gregor Lenz & Douglas McLelland (2024) “Low-power ship detection in satellite images using neuromorphic hardware.” SpAIce Conference. https://arxiv.org/html/2406.11319v1

[2] https://www.kaggle.com/c/airbus-ship-detection

[3] Chetan Kadway, Sounak Dey, Arijit Mukherjee, Arpan Pal & Gilles Bézard (2023) “Low Power & Low Latency Cloud Cover Detection in Small Satellites Using On-board Neuromorphic Processors.” International Joint Conference on Neural Networks. https://ieeexplore.ieee.org/abstract/document/10191569

[4] https://www.esa.int/gsp/ACT/projects/eventful_landings/

[5] Ralph et al. (2022) “Real-time event-based unsupervised feature consolidation and tracking for space situational awareness.” Frontiers in Neuroscience 16:821157. doi: 10.3389/fnins.2022.821157 https://www.frontiersin.org/journals/neuroscience/articles/10.3389/fnins.2022.821157/full

[6] Yan Ru Pei, Sasskia Bruers, Sebastien Crouzet, Douglas McLelland & Olivier Coenen (2024) “A lightweight spatiotemporal network for online eye tracking with event camera” CVPR. https://arxiv.org/abs/2404.08858
