Smart way to memory controller verification: Synopsys Memory VIP

By Tanuj Poddar, Nikhil Ahuja, Nusrat Ali (Synopsys)

Today, verification teams face contradictory pressures: on one hand they are asked to shorten the verification schedule, while on the other hand design complexity keeps increasing. To meet these conflicting requirements, it becomes essential to analyze the critical path of the verification activity and devise smart ways to minimize risk and reduce the verification schedule.

This paper focuses on the memory controller (DDR, LPDDR, etc.), one of the most critical elements in almost all the data paths of an SoC. It analyzes the challenges associated with memory controller verification and proposes a modern approach to reduce the debug and test creation effort, which together account for more than 70% of the total verification effort.

How does a Memory Controller work?

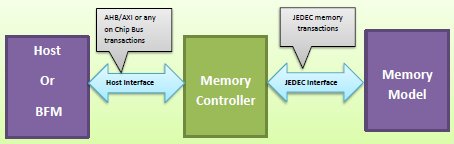

Let us look at a typical memory controller verification setup to understand the associated challenges. Figure-1 shows a typical memory controller verification environment.

Figure-1: Typical Memory controller verification environment

In a block-level verification environment the host could be a BFM, whereas in an SoC-level environment it could be an on-chip bus connected to the host processor. In either case, the user needs to configure the memory controller through the BFM (or host). The memory controller uses these configurations to set up the memory before it can be used for data storage.

The Host/BFM is not aware of the memory protocol; it simply initiates memory accesses (read/write) or memory controller register configuration commands. The memory controller in turn generates the JEDEC memory commands required to perform the read/write operation.
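The translation from host accesses to JEDEC commands can be illustrated with a minimal Python sketch. It assumes a hypothetical row:bank:column split of the host address (15/3/10 bits) and an open-page policy in which a row stays open until another row in the same bank is needed. `HostAccess`, `split_address` and `to_jedec_commands` are illustrative names; bank groups, ranks, refresh and all timing parameters are omitted.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical address split (widths are assumptions, not from any real controller).
COL_BITS, BANK_BITS, ROW_BITS = 10, 3, 15


@dataclass(frozen=True)
class HostAccess:
    op: str    # "RD" or "WR", as issued by the host/BFM
    addr: int  # host (logical) address


def split_address(addr: int) -> tuple[int, int, int]:
    """Return (row, bank, col) for an assumed row:bank:col layout."""
    col = addr & ((1 << COL_BITS) - 1)
    bank = (addr >> COL_BITS) & ((1 << BANK_BITS) - 1)
    row = (addr >> (COL_BITS + BANK_BITS)) & ((1 << ROW_BITS) - 1)
    return row, bank, col


def to_jedec_commands(accesses: list[HostAccess]) -> list[str]:
    """Translate host accesses into a simplified open-page ACT/PRE/RD/WR stream."""
    open_rows: dict[int, int] = {}  # bank -> currently open row
    cmds: list[str] = []
    for acc in accesses:
        row, bank, col = split_address(acc.addr)
        if open_rows.get(bank) != row:
            if bank in open_rows:  # row miss: close the old row first
                cmds.append(f"PRE bank={bank}")
            cmds.append(f"ACT bank={bank} row={row}")
            open_rows[bank] = row
        cmds.append(f"{acc.op} bank={bank} col={col}")
    return cmds


print(to_jedec_commands([HostAccess("WR", 0x0004), HostAccess("RD", 0x0008), HostAccess("WR", 0x2010)]))
```

Here the first two accesses hit the same row and share one ACT, while the third targets a different row of bank 0 and forces a PRE plus a new ACT. The host never sees any of these commands.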

Challenges with the Traditional Memory Controller Verification Method

- Complex and ever-growing protocol specifications

- Huge test case writing effort

- Painful debug

- Huge scoreboarding effort

- Inefficient use of verification resources

Protocol Knowledge

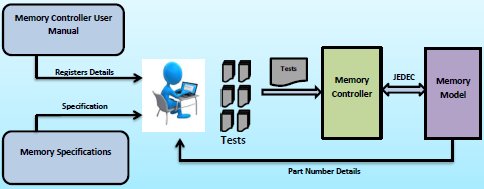

Let us walk through the process of creating tests for a memory controller to understand the associated challenges. The accompanying diagram shows the typical process for memory controller verification.

The verification engineer must study not only the memory controller user manual but also the memory specifications to be able to configure the controller. To make things more complex, there are different specifications for DDR, LPDDR and DIMMs, and writing quality tests requires a sound understanding of each of these protocols.

Test case writing effort

Because of the numerous memory configurations, the test case writing effort becomes huge. It becomes even more complex because the user must take the memory speed bin or part number into account when configuring the memory: every test needs to incorporate configuration settings based on the part number being used.

To understand the complexity, consider the CL/CWL table in the DDR4 specification, which lists the allowed CL/CWL values for the different speed grades of the memory. CL = 9 is not a valid setting for the 1866N speed bin, whereas it is allowed for the other variants of the 1866 speed bin (1866L and 1866M). This adds to the woes of the test case writer.
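The bookkeeping a hand-written test has to carry can be sketched as a simple legality check. The table is an illustrative subset, not the JEDEC data: the CL = 9 entries follow the statement above, and each set otherwise holds only the bin's nominal CL (12, 13 and 14 for 1866L, 1866M and 1866N). `ALLOWED_CL` and `check_cl` are hypothetical names.

```python
# Illustrative subset, NOT the full JEDEC DDR4 speed-bin table: CL 9 membership
# follows the text (invalid for 1866N, valid for 1866L/1866M); the other entry
# in each set is that bin's nominal CL.
ALLOWED_CL = {
    "DDR4-1866L": {9, 12},
    "DDR4-1866M": {9, 13},
    "DDR4-1866N": {14},
}


def check_cl(speed_bin: str, cl: int) -> None:
    """Reject a hand-picked CL that the chosen speed bin does not support."""
    if speed_bin not in ALLOWED_CL:
        raise KeyError(f"unknown speed bin {speed_bin!r}")
    if cl not in ALLOWED_CL[speed_bin]:
        raise ValueError(
            f"CL={cl} is not valid for {speed_bin}; allowed: {sorted(ALLOWED_CL[speed_bin])}"
        )


check_cl("DDR4-1866L", 9)  # passes silently
```

Every test that sets CL by hand needs a check like this, repeated for CWL and the other speed-dependent parameters, and the table has to be kept in step with specification updates.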

The same holds true for other memories such as DDR3, LPDDR2, LPDDR3, LPDDR4 and MRAM. Like CL/CWL, many other parameters must be configured based on the memory speed being deployed. This requires not only a close understanding of the specifications but also tracking specification changes and updating the tests accordingly.

Painful debug with trace files and GUI debuggers

Debugging with traditional trace files is involved and requires a good understanding of the protocol. This lowers productivity and ultimately impacts the project schedule and cost.

Let us analyze the debug challenges of the traditional memory trace file using the diagram below. The left-hand side (LHS) of the diagram shows three write transactions (WR#1, 2 and 3) to different addresses with the same data. The right-hand side (RHS) shows the corresponding traditional memory trace file. Assuming the memory controller does not reorder commands, consider the following case.

The LHS shows three commands, but the trace shows only two. How do we determine which command was dropped by the controller?

- The only way is to manually map the row, bank, bank group, column, etc. back to the host address. This can be quite painful if the controller's address mapping is not straightforward.
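The manual remapping can be sketched in Python, assuming a hypothetical controller mapping with the column in bits [9:0], the bank group in [11:10], the bank in [13:12] and the row from bit 14 upward. Because the three writes carry identical data, data alone cannot identify the missing command; rebuilding the host address from each trace entry and comparing it with the host-side writes can. `to_host_address` and `find_dropped_writes` are illustrative names, and a real mapping must come from the controller manual.

```python
from __future__ import annotations

# Hypothetical mapping, LSB first: col[9:0], bg[11:10], ba[13:12], row[14+].
COL_BITS, BG_BITS, BA_BITS = 10, 2, 2


def to_host_address(row: int, bank: int, bg: int, col: int) -> int:
    """Rebuild the host address from the memory-side fields shown in a trace."""
    return ((((row << BA_BITS) | bank) << BG_BITS | bg) << COL_BITS) | col


def find_dropped_writes(host_writes: list[int], trace: list[dict]) -> list[int]:
    """Return host write addresses that never appear as WR commands in the trace."""
    seen = {
        to_host_address(e["row"], e["bank"], e["bg"], e["col"])
        for e in trace
        if e["cmd"] == "WR"
    }
    return [addr for addr in host_writes if addr not in seen]


host = [to_host_address(1, 0, 0, 8), to_host_address(1, 2, 1, 8), to_host_address(3, 0, 0, 0)]
trace = [
    {"cmd": "WR", "row": 1, "bank": 0, "bg": 0, "col": 8},
    {"cmd": "WR", "row": 3, "bank": 0, "bg": 0, "col": 0},
]
print([hex(a) for a in find_dropped_writes(host, trace)])  # the second host write is missing
```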

Let us revisit the same scenario when the controller reorders commands. To improve throughput at the JEDEC interface, a memory controller can reorder commands to minimize unnecessary row activations and precharges, so the order in which commands are sent to memory can differ from the order in which the controller received them. Debug in this case becomes even more complex, and one again needs to map each command manually to correlate it with the originating host transaction.
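Why positional correlation fails can be shown with a simplified first-ready, first-come-first-served (FR-FCFS) style scheduler, one common way to favor row hits; whether a given controller uses it is an assumption. The Python sketch issues the oldest request that hits an open row, otherwise the oldest request overall. `Req` and `schedule_row_hits_first` are hypothetical, and timing constraints are ignored.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Req:
    tag: str  # host-side label such as "WR#1" (hypothetical; real traces lack it)
    bank: int
    row: int


def schedule_row_hits_first(queue: list[Req]) -> list[Req]:
    """Simplified FR-FCFS: prefer the oldest row hit, else the oldest request."""
    pending = list(queue)
    open_rows: dict[int, int] = {}  # bank -> open row
    issued: list[Req] = []
    while pending:
        hit = next((r for r in pending if open_rows.get(r.bank) == r.row), None)
        req = hit if hit is not None else pending[0]
        open_rows[req.bank] = req.row  # a miss implies PRE + ACT of this row
        pending.remove(req)
        issued.append(req)
    return issued


queue = [Req("WR#1", 0, 5), Req("WR#2", 0, 7), Req("WR#3", 0, 5)]
print([r.tag for r in schedule_row_hits_first(queue)])
```

With WR#1 and WR#3 targeting the same row, WR#3 overtakes WR#2. Comparing the trace against host order position by position would then flag WR#2 and WR#3 as mismatches even though the controller behaved correctly, so each command has to be correlated through the address mapping instead.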

The diagram below shows a traditional memory interface as it appears in a waveform viewer. Analyzing memory transactions requires not only protocol knowledge but also mapping the interface signals into commands according to the JEDEC command encoding. The waveform shows addresses in memory terms (row, bank and column address), whereas the transactions issued to the memory controller use host addresses. This makes debugging difficult.
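The signal-to-command decoding an engineer otherwise does by eye can be sketched for DDR3, whose commands are encoded on CS#, RAS#, CAS# and WE# per the JEDEC DDR3 command truth table. The function name `decode_ddr3` is illustrative; CKE-dependent states (power-down, self-refresh) and A10/A12 sub-variants (auto-precharge, burst chop, precharge-all, ZQCL vs ZQCS) are deliberately not decoded. DDR4 adds an ACT_n pin and would need its own table.

```python
# DDR3 command encoding keyed by (RAS#, CAS#, WE#) while CS# is low; 0 = low, 1 = high.
DDR3_COMMANDS = {
    (0, 0, 0): "MRS",
    (0, 0, 1): "REF",
    (0, 1, 0): "PRE",
    (0, 1, 1): "ACT",
    (1, 0, 0): "WR",
    (1, 0, 1): "RD",
    (1, 1, 0): "ZQC",
    (1, 1, 1): "NOP",
}


def decode_ddr3(cs_n: int, ras_n: int, cas_n: int, we_n: int) -> str:
    """Map one clock's sampled DDR3 control pins to a command mnemonic."""
    if cs_n:
        return "DES"  # deselect: the device ignores the other pins
    return DDR3_COMMANDS[(ras_n, cas_n, we_n)]


# One (CS#, RAS#, CAS#, WE#) sample per clock edge, as read off a waveform.
samples = [(0, 0, 1, 1), (1, 1, 1, 1), (0, 1, 0, 0), (0, 1, 0, 1)]
print([decode_ddr3(*s) for s in samples])
```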

Huge scoreboarding effort

The best way to reduce debug effort is to create an efficient scoreboard, as it minimizes the manual effort involved in reporting and analyzing unexpected behavior. Traditional memory models provide no provision for quickly setting up a scoreboard at the memory interface level; it is left to the user to build one by attaching a monitor to the memory interface. This diverts verification engineers' effort from quality testing to environment development and stabilization, reducing the time spent on real testing, and stabilizing the environment often becomes a major risk to the verification activity.
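A basic scoreboard can be small once the monitor delivers transactions in host address space. The Python sketch keeps a sparse reference memory: observed writes update it and observed reads are compared against it, with mismatches collected for reporting. `MemScoreboard`, `on_write` and `on_read` are hypothetical names, and the assumption that the monitor has already mapped memory-side fields back to host addresses is what keeps the comparison this simple.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MemScoreboard:
    """Sparse reference memory keyed by host address; mismatches are collected, not raised."""

    model: dict[int, int] = field(default_factory=dict)
    errors: list[str] = field(default_factory=list)

    def on_write(self, addr: int, data: int) -> None:
        self.model[addr] = data

    def on_read(self, addr: int, data: int) -> None:
        expected = self.model.get(addr)
        if expected is None:
            return  # never written: nothing to compare against in this sketch
        if expected != data:
            self.errors.append(f"addr {addr:#x}: expected {expected:#x}, got {data:#x}")


sb = MemScoreboard()
sb.on_write(0x100, 0xAB)
sb.on_read(0x100, 0xAB)  # matches
sb.on_read(0x100, 0xCD)  # mismatch is recorded
print(sb.errors)
```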

Inefficient use of verification resources

In a typical SoC setup there are dedicated tests for verifying the different peripherals. Although memory is part of most data flows, there are still dedicated tests for the memory controller. Most tests of other peripherals whose data paths include memory use a fixed configuration of the memory controller. This means the memory controller is exercised but not regressed across its configurations. Because thorough coverage therefore requires dedicated memory tests, the result is inefficient use of compute resources, lost time and wasted simulation cycles.

The same holds true for block-level verification: multiple tests are needed to reach the targeted coverage. More tests require more maintenance effort, compute power and simulation resources, which in turn lengthens the verification schedule.

Smart solution to the above problems

Randomized Memory configuration

The diagram below describes the proposed approach for creating memory controller tests. It focuses on reducing the number of tests and the dependency on protocol expertise when creating memory controller tests, which drastically reduces the memory controller verification effort.

It recommends creating a wrapper (a one-time effort) that takes the randomized memory register configuration provided by the memory VIP as input and implements the mapping required to configure the controller accordingly. The wrapper-generated controller configuration can be used in every test case. This makes it possible to verify different memory configurations without writing memory-specific tests, since every test runs with a randomized DDR memory configuration. In effect, every SoC test that has memory in its data path regresses the memory controller and contributes to the overall coverage target.
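The one-time wrapper can be pictured as a table-driven packer that turns the memory model's randomized settings into controller register values. Everything here is assumed for illustration: the `MemConfig` fields, the `TIMING0` and `MODE` register names and their bit positions stand in for whatever the controller user manual actually specifies.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class MemConfig:
    """Randomized memory settings as a memory model might supply them (assumed fields)."""

    cl: int
    cwl: int
    burst_length: int


# Hypothetical controller layout: register -> [(config field, bit offset, width)].
CTRL_FIELDS = {
    "TIMING0": [("cl", 0, 6), ("cwl", 8, 6)],
    "MODE": [("burst_length", 0, 4)],
}


def build_ctrl_registers(cfg: MemConfig) -> dict[str, int]:
    """Pack memory settings into controller register values."""
    regs: dict[str, int] = {}
    for reg, fields in CTRL_FIELDS.items():
        value = 0
        for name, offset, width in fields:
            v = getattr(cfg, name)
            if v >= (1 << width):
                raise ValueError(f"{name}={v} does not fit in {width} bits")
            value |= v << offset
        regs[reg] = value
    return regs


print(build_ctrl_registers(MemConfig(cl=13, cwl=10, burst_length=8)))
```

Because the register layout lives in a table, moving to a different controller means editing `CTRL_FIELDS` rather than every test.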

Now let us revisit the case of configuring CL/CWL based on the speed bin with the proposed approach. The user need not worry about the allowed values, because the memory model itself takes care of them and provides only allowed values to the wrapper. The wrapper in turn configures the controller, following the controller user manual, so that the memory is configured accordingly. The beauty of this approach is that the user need not bother about memory specifications or part numbers, nor about configuring the memory with all possible values, because the randomized memory configurations take care of that. The built-in functional coverage allows tracking verification holes.
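The idea of drawing only legal values and tracking what has been hit can be sketched as a tiny constrained-random generator with a cross-coverage report. The allowed sets are an illustrative subset, not JEDEC data (CL 9 entries follow the text, the rest are nominal CLs), and `randomize_config` and `coverage_holes` are hypothetical names; in the proposed flow the memory model, not the test writer, owns this table.

```python
from __future__ import annotations

import random
from collections import Counter

# Illustrative subset of legal CL values per speed bin (not the full JEDEC tables).
ALLOWED_CL = {
    "DDR4-1866L": [9, 12],
    "DDR4-1866M": [9, 13],
    "DDR4-1866N": [14],
}


def randomize_config(rng: random.Random) -> tuple[str, int]:
    """Pick a speed bin, then a CL that is legal for that bin."""
    speed_bin = rng.choice(sorted(ALLOWED_CL))
    return speed_bin, rng.choice(ALLOWED_CL[speed_bin])


def coverage_holes(hits: Counter) -> list[tuple[str, int]]:
    """List (speed bin, CL) cross bins that no test has exercised yet."""
    return [(b, cl) for b, cls in ALLOWED_CL.items() for cl in cls if hits[(b, cl)] == 0]


rng = random.Random(7)
hits = Counter(randomize_config(rng) for _ in range(100))  # one config per test run
print(coverage_holes(hits))
```

Each test run contributes one sample, so ordinary SoC tests accumulate configuration coverage without any of them being written as a memory test.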

User-friendly trace files

Synopsys memory VIP can display the host/logical address along with memory-specific details. It not only implements the most common industry-prevalent address mappings but also allows the VIP to be configured for any custom mapping. As a result, the displayed address matches the original host transaction, which makes debugging much easier.
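A configurable mapping of this kind can be sketched as an ordered list of (field, width) pairs, so that a custom controller mapping is just a different list. This is an assumed representation for illustration, not the VIP's configuration API; `AddressMap` and `annotate` are hypothetical names.

```python
from __future__ import annotations


class AddressMap:
    """Host address <-> memory fields, given as (name, width) pairs from LSB to MSB."""

    def __init__(self, fields: list[tuple[str, int]]):
        self.fields = fields

    def decode(self, host_addr: int) -> dict[str, int]:
        parts, shift = {}, 0
        for name, width in self.fields:
            parts[name] = (host_addr >> shift) & ((1 << width) - 1)
            shift += width
        return parts

    def encode(self, parts: dict[str, int]) -> int:
        addr, shift = 0, 0
        for name, width in self.fields:
            addr |= (parts[name] & ((1 << width) - 1)) << shift
            shift += width
        return addr


def annotate(cmd: str, parts: dict[str, int], amap: AddressMap) -> str:
    """Format a trace line with memory fields (MSB first) plus the host address."""
    fields = " ".join(f"{name}={parts[name]}" for name, _ in reversed(amap.fields))
    return f"{cmd} {fields} host={amap.encode(parts):#x}"


row_bank_col = AddressMap([("col", 10), ("bank", 3), ("row", 15)])
bank_row_col = AddressMap([("col", 10), ("row", 15), ("bank", 3)])  # a "custom" mapping
print(annotate("WR", row_bank_col.decode(0x12345), row_bank_col))
```

The same host address decodes to a different row under the two mappings, which is why a trace viewer has to use the controller's actual mapping to show the right host address.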

Let us revisit the problem described earlier, this time with the Synopsys memory trace file.

As can be seen, every transaction appears with its logical/host address. This not only eases debug but also saves much of the time that would otherwise be spent on the mapping effort that traditional trace files require.

GUI-based transaction-level debug

The Synopsys VIP provides user-friendly waveform viewing through a debug interface alongside the regular memory interface. It displays memory state and commands as ASCII strings, so the user does not have to decode the signals to determine the commands. It also captures the host address (the logical address to which the read/write is made), which helps in tracking each host transaction to its corresponding memory transactions.

Intelligent Protocol Analyzer GUI for memory debug

To further facilitate debug, the VIP provides a Protocol Analyzer, a GUI tool designed to make memory debug convenient. It provides features such as searching transactions by address (one can query an address and get a list of all commands made to it), searching transactions within a given time period, and searching transactions sent to a specific rank. The GUI is also linked to the transcript log and waveform, so one can search for a given transaction in the Protocol Analyzer and then invoke the waveform viewer to jump to the corresponding memory transactions.
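The three kinds of query can be sketched over a transaction log that, as described above, already carries the host address of each command. The Python sketch indexes the log by address and uses binary search on timestamps for the time-window query. `Txn` and `TxnIndex` are hypothetical and say nothing about how the Protocol Analyzer is implemented.

```python
from __future__ import annotations

from bisect import bisect_left, bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Txn:
    time_ns: int
    cmd: str
    rank: int
    host_addr: int


class TxnIndex:
    """Query helper over a transaction log (hypothetical structure)."""

    def __init__(self, txns: list[Txn]):
        self.txns = sorted(txns, key=lambda t: t.time_ns)
        self.times = [t.time_ns for t in self.txns]
        self.by_addr: dict[int, list[Txn]] = {}
        for t in self.txns:
            self.by_addr.setdefault(t.host_addr, []).append(t)

    def at_address(self, addr: int) -> list[Txn]:
        return self.by_addr.get(addr, [])

    def in_window(self, start_ns: int, end_ns: int) -> list[Txn]:
        """Transactions with start_ns <= time_ns <= end_ns."""
        return self.txns[bisect_left(self.times, start_ns):bisect_right(self.times, end_ns)]

    def to_rank(self, rank: int) -> list[Txn]:
        return [t for t in self.txns if t.rank == rank]


log = [Txn(10, "WR", 0, 0x100), Txn(25, "RD", 1, 0x200), Txn(40, "RD", 0, 0x100)]
idx = TxnIndex(log)
print([t.time_ns for t in idx.at_address(0x100)])
```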

Provision to quickly set up a scoreboard

The VIP comes with a built-in analysis port that carries all memory transactions issued to the memory, which can be used to quickly set up a scoreboard. The VIP also includes many useful built-in checks specific to memory usage, such as a read from an uninitialized memory location, a write to a reserved region of the memory map, and back-to-back writes to a location without an intervening read (WR -> WR where WR -> RD -> WR is expected). These are just a few of the checks; the VIP provides many more, customized for typical memory usage and memory map verification. It also provides handy APIs for dumping the memory contents and comparing the current memory snapshot.
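The checks listed above can be sketched as a small Python checker with a snapshot-diff helper. `MemoryChecker`, `diff_snapshots`, the reserved-range representation and the warning texts are hypothetical, and the back-to-back rule is interpreted as two writes to the same address with no read in between.

```python
from __future__ import annotations


class MemoryChecker:
    """Flags reads of uninitialized locations, writes to reserved ranges, and WR -> WR without RD."""

    def __init__(self, reserved: list[range]):
        self.reserved = reserved
        self.mem: dict[int, int] = {}
        self.unread: set[int] = set()  # addresses written since their last read
        self.warnings: list[str] = []

    def write(self, addr: int, data: int) -> None:
        if any(addr in r for r in self.reserved):
            self.warnings.append(f"write to reserved addr {addr:#x}")
        if addr in self.unread:
            self.warnings.append(f"WR -> WR without RD at {addr:#x}")
        self.mem[addr] = data
        self.unread.add(addr)

    def read(self, addr: int) -> int | None:
        if addr not in self.mem:
            self.warnings.append(f"read from uninitialized addr {addr:#x}")
        self.unread.discard(addr)
        return self.mem.get(addr)

    def snapshot(self) -> dict[int, int]:
        return dict(self.mem)


def diff_snapshots(before: dict[int, int], after: dict[int, int]) -> dict[int, tuple]:
    """Return {addr: (old, new)} for every location whose contents differ."""
    keys = before.keys() | after.keys()
    return {k: (before.get(k), after.get(k)) for k in sorted(keys) if before.get(k) != after.get(k)}


chk = MemoryChecker(reserved=[range(0xF000, 0x10000)])
chk.write(0x10, 1)
chk.write(0x10, 2)     # WR -> WR without an intervening read
chk.read(0x10)
chk.read(0x20)         # never written
chk.write(0xF004, 5)   # inside the reserved range
print(chk.warnings)
```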

Summary

This paper has provided an overview of the memory controller verification and debug challenges and the Synopsys memory VIP features that address them. The VIP offers many other useful features, customized to reduce debug effort and improve verification productivity and, in turn, quality.
