Optimizing Communication and Data Sharing in Multi-Core SoC Designs

By Andy Nightingale, VP Product Management and Marketing at Arteris

As semiconductor manufacturing technology advances, systems-on-chip (SoCs) can pack more transistors into a smaller area, enabling greater computational power and functionality. The way components in an SoC are connected has had to evolve to support the growing data communication demands.

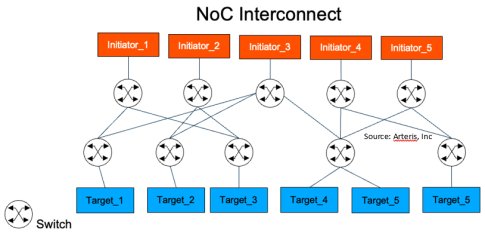

Network-on-chip (NoC) interconnects manage data flow between components efficiently, providing scalable, low-latency and power-efficient communication and enabling seamless integration of CPUs, GPUs, NPUs and other accelerators. NoCs reduce data bottlenecks, enhance system performance and accommodate increasing complexity in SoC designs.

Decoding Cache Coherence

Cache coherence lies at the core of efficient data sharing among the various components within an SoC. It's the mechanism that guarantees data consistency, preventing bottlenecks and data incongruities and ultimately sustaining the overall system performance. It ensures the system operates seamlessly in the background.

Engineers who delve into the world of cache coherence find themselves at the intersection of hardware and software. In this domain, the technical intricacies of ensuring that data remains consistent across a multi-core system take precedence. Cache coherence serves as the underlying thread weaving through the intricate fabric of modern technology, ensuring a consistent view of memory for all processors.
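To make the idea of a "consistent view of memory" concrete, here is a minimal sketch of a write-invalidate scheme for a single memory word. It assumes a simplified MSI-style protocol (Modified, Shared, Invalid); the article does not name a protocol, and the `Cache` and `Bus` classes are hypothetical illustrations rather than a model of any real interconnect.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"   # this cache holds the only, dirty copy
    SHARED = "S"     # clean copy, other caches may hold it too
    INVALID = "I"    # no usable copy


class Cache:
    def __init__(self, name):
        self.name = name
        self.state = State.INVALID
        self.value = None


class Bus:
    """Toy system: one memory word shared by several caches."""

    def __init__(self, caches, memory=0):
        self.caches = caches
        self.memory = memory

    def read(self, cache):
        if cache.state is State.INVALID:
            # A dirty copy elsewhere must be written back before we read.
            for other in self.caches:
                if other is not cache and other.state is State.MODIFIED:
                    self.memory = other.value
                    other.state = State.SHARED
            cache.value = self.memory
            cache.state = State.SHARED
        return cache.value

    def write(self, cache, value):
        # Invalidate every other copy before taking ownership of the line.
        for other in self.caches:
            if other is not cache and other.state is not State.INVALID:
                if other.state is State.MODIFIED:
                    self.memory = other.value
                other.state = State.INVALID
        cache.value = value
        cache.state = State.MODIFIED


cpu0, cpu1 = Cache("cpu0"), Cache("cpu1")
bus = Bus([cpu0, cpu1], memory=7)
bus.read(cpu0)            # cpu0: Shared
bus.read(cpu1)            # cpu1: Shared
bus.write(cpu0, 42)       # cpu0: Modified, cpu1: Invalid
seen = bus.read(cpu1)     # forces a write-back, so cpu1 observes the new value
```

Without the invalidation step in `write`, `cpu1` would keep returning its old copy, which is exactly the inconsistency that coherence is meant to prevent.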

The Evolution of Interconnects

As semiconductor technology has advanced, the methods of component communication have evolved. Here's a technical exploration of the different interconnect structures:

(Source: Arteris, Inc.)

In the 1990s, the prevalent bus structure was the shared bus. When one component needed to communicate with another, it would place its data onto the bus, allowing all connected components to read the data and respond if necessary. While the concept of a shared bus was straightforward, it introduced complexities such as bus contention and limited bandwidth, particularly as more components were integrated into the system.

In the early 2000s, hierarchical buses emerged as a solution to manage and streamline data flow between components in a tiered fashion. They offered efficient data flow, scalability, modular design and reduced complexity. However, they came with potential challenges, including latency, limited bandwidth, complex routing and increased power consumption.

Around 2005, a range of crossbar buses were developed. They offered high-speed simultaneous connections, reducing contention and maximizing bandwidth. The complexity, cost and scalability of crossbar implementations varied based on design and technology. Some crossbar implementations were complex and costly, while others were more scalable for large systems.
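The difference in contention between a shared bus and a crossbar can be illustrated with a rough cycle count. This is a sketch under strong assumptions: every transfer takes one cycle, the crossbar has an ideal scheduler, and the function names and the example traffic are hypothetical.

```python
from collections import Counter


def shared_bus_cycles(transfers):
    """A shared bus carries one transfer per cycle, so transfers serialize."""
    return len(transfers)


def crossbar_cycles(transfers):
    """Best case for a crossbar: each port handles one transfer per cycle,
    so the busiest initiator or target port sets the cycle count."""
    if not transfers:
        return 0
    per_initiator = Counter(src for src, _ in transfers)
    per_target = Counter(dst for _, dst in transfers)
    return max(max(per_initiator.values()), max(per_target.values()))


# Hypothetical traffic: (initiator, target) pairs issued at the same time.
transfers = [("cpu0", "ddr"), ("cpu1", "sram"), ("gpu", "ddr"), ("dma", "uart")]
bus_cycles = shared_bus_cycles(transfers)
xbar_cycles = crossbar_cycles(transfers)
print(f"shared bus: {bus_cycles} cycles, crossbar: {xbar_cycles} cycles")
```

In this example the only contention left on the crossbar is at the `ddr` target, which two initiators want in the same cycle.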

The concept of a network-on-chip (NoC) began to gain popularity in research and development. Like many new technological concepts, it took a few more years before NoC-based interconnects found widespread adoption in commercial semiconductor designs.

Crossbar Bus vs a NoC

Crossbar buses offer several advantages and disadvantages. They are highly efficient when connecting a limited number of components with high-speed simultaneous connections, making them ideal for applications like high-performance CPUs and memory access. However, they can be challenging to implement and may not scale well when dealing with large and heterogeneous SoC designs.

(Source: Arteris, Inc.)

On the other hand, NoC architectures have become increasingly popular in modern SoC designs due to their scalability and adaptability. They are well-suited for complex SoCs with numerous heterogeneous components, including multi-core processors, GPUs, accelerators and peripherals. NoCs efficiently manage communication in these systems and allow for flexible resource allocation. They are particularly valuable in addressing the scalability challenges of diverse applications within SoC designs.

(Source: Arteris, Inc.)

The choice of interconnect technology ultimately depends on the SoC’s specific design goals and its power, performance and scalability requirements.
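One way to see why crossbars scale less gracefully than NoCs is to count switching resources as the design grows. The sketch below assumes a full crossbar between equal numbers of initiators and targets, and a 2D mesh NoC with one router per endpoint. The mesh topology is an assumption made for illustration (NoCs can use many topologies), and crosspoint and link counts are only crude proxies for wiring cost.

```python
def crossbar_crosspoints(initiators, targets):
    """A full crossbar needs one crosspoint per initiator/target pair."""
    return initiators * targets


def mesh_links(rows, cols):
    """Bidirectional router-to-router links in a rows x cols 2D mesh."""
    return rows * (cols - 1) + cols * (rows - 1)


def compare(side):
    """Compare both structures for side * side endpoints."""
    endpoints = side * side
    half = endpoints // 2  # half initiators, half targets
    return endpoints, crossbar_crosspoints(half, half), mesh_links(side, side)


rows = [compare(side) for side in (2, 4, 8)]
for endpoints, xbar, mesh in rows:
    print(f"{endpoints:3d} endpoints: crossbar crosspoints={xbar:5d}, mesh links={mesh:4d}")
```

Under these assumptions the crossbar count grows quadratically with the number of endpoints, while the mesh link count grows roughly linearly.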

Cache Coherent vs Non-Coherent Interconnects

Coherent interconnects ensure that all processors within a system have a uniform and consistent view of memory. In contrast, non-coherent interconnects do not guarantee this consistency, so software may have to manage shared data explicitly, for example by flushing or invalidating caches. The choice between coherent and non-coherent interconnects depends on a given system’s needs and design considerations.

To illustrate this distinction, consider a system with a machine learning subsystem. In such a setup, the machine learning processors independently manage data streams, and imposing cache coherency might introduce unnecessary overhead.

(Source: Arteris, Inc.)

Coherent NoCs, on the other hand, are typical of multi-core SoC designs such as the Apple A17 Pro featured in iPhones and iPads or the Qualcomm Snapdragon 888 found in Android smartphones. In these devices, maintaining cache coherency ensures proper program execution and data consistency.
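The explicit data management that a non-coherent interconnect can require is sketched below. The model assumes a CPU with a private write-back cache and an accelerator that reads memory directly; the `WriteBackCache` class and the address used are hypothetical.

```python
class WriteBackCache:
    """Toy write-back cache: writes stay local until flushed."""

    def __init__(self, memory):
        self.memory = memory   # shared dict: address -> value
        self.dirty = {}        # lines written but not yet visible in memory

    def write(self, addr, value):
        self.dirty[addr] = value

    def read(self, addr):
        return self.dirty.get(addr, self.memory.get(addr))

    def flush(self):
        # Software-managed step: push dirty lines out to shared memory.
        self.memory.update(self.dirty)
        self.dirty.clear()


memory = {0x100: 0}
cpu = WriteBackCache(memory)

cpu.write(0x100, 99)
stale = memory[0x100]   # accelerator reads memory before the flush
cpu.flush()
fresh = memory[0x100]   # accelerator reads memory after the flush
print(stale, fresh)
```

With a coherent interconnect the hardware takes care of this step. Without one, forgetting the flush leaves the accelerator working on stale data.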

Data Access and Efficiency

Caches serve as high-speed storage layers that temporarily store frequently accessed data and instructions, significantly boosting data access speed compared to slower storage such as main memory (RAM) or hard drives. These integral components can be found in a wide array of devices, from smartphones and laptops to servers and supercomputers. Caches are typically organized into multiple levels, including L1, L2, and L3 caches, each offering a larger capacity but slower access times than the previous one. The primary goal is to minimize the CPU's wait time for data by keeping frequently used data close to the CPU. This results in improved overall performance, reduced latency and enhanced power efficiency. Caches also help manage memory bandwidth effectively and are cost-efficient.
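The benefit of a multi-level hierarchy is often summarized with the average memory access time (AMAT), where each level contributes its hit time plus its miss rate multiplied by the cost of going one level further out. The following sketch computes it; the latencies and miss rates are made-up illustrative numbers, not measurements of any device.

```python
def amat(levels, memory_latency):
    """Average memory access time in cycles.

    levels: list of (hit_time, miss_rate) tuples ordered from L1 outward.
    memory_latency: cycles to reach main memory after the last level misses.
    """
    penalty = memory_latency
    # Work inward from the last cache level to L1.
    for hit_time, miss_rate in reversed(levels):
        penalty = hit_time + miss_rate * penalty
    return penalty


# Hypothetical hierarchy: L1, L2, L3 as (hit time in cycles, local miss rate).
hierarchy = [(1, 0.10), (10, 0.40), (40, 0.50)]
with_caches = amat(hierarchy, memory_latency=200)
without_caches = amat([], memory_latency=200)
print(f"AMAT with caches: {with_caches:.1f} cycles, without: {without_caches} cycles")
```

With these assumed numbers, the average access costs a small fraction of a trip to main memory, even though half of the L3 accesses still miss.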

Managing caches adds complexity and requires coherency management. Although cache coherency prevents data inconsistencies and ensures correctness, it brings challenges of its own. Synchronizing cache updates, for instance, increases latency and resource consumption, and updates that are not properly synchronized can introduce data inconsistencies. Scaling up the number of caches makes coherency more difficult, requiring efficient protocols and synchronized software to ensure data consistency and avoid problems like data loss and discrepancies.

The technical requirements of managing these challenges present an opportunity for engineers. They must dive deep into the design of cache coherency protocols. Each protocol presents its own set of technical challenges and trade-offs. Engineers must assess the specific needs of their systems and choose the protocol that best aligns with their performance and efficiency goals. This technical decision-making process can make or break the success of a project.

Arteris' Ncore Cache Coherent Interconnect

In the quest for technical excellence, Arteris introduces Ncore, a flexible and scalable interconnect solution engineered to navigate the complexity of cache coherency and data flow. It offers heterogeneous coherency, providing flexibility, performance optimization, power efficiency and ease of development in complex SoC designs. It ensures data consistency across various processing elements, making systems more efficient and capable. Additionally, it leverages configurable proxy caches, enabling dynamic adaptation to diverse system configurations. These caches can be tailored to specific coherency requirements, allowing for efficient data sharing and minimized coherency traffic. Ncore's multiple configurable snoop filters allow for more targeted and streamlined coherency management, reducing the overall complexity of the design. This approach ensures efficient and tailored coherency solutions, contributing to overall effectiveness.

(Source: Arteris, Inc.)
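The general idea behind a snoop filter, limiting coherency traffic to the caches that actually hold a line, can be sketched as follows. This is a generic textbook-style illustration and not a description of how Ncore implements its snoop filters; the `SnoopFilter` class, its methods and the cache IDs are hypothetical.

```python
class SnoopFilter:
    """Tracks which caches hold each line so snoops can be targeted."""

    def __init__(self):
        self.sharers = {}   # line address -> set of cache ids holding it

    def record_fill(self, cache_id, addr):
        self.sharers.setdefault(addr, set()).add(cache_id)

    def record_evict(self, cache_id, addr):
        holders = self.sharers.get(addr)
        if holders is not None:
            holders.discard(cache_id)
            if not holders:
                del self.sharers[addr]

    def targets_for_write(self, writer_id, addr):
        """Caches that must be snooped before writer_id may write addr."""
        return sorted(self.sharers.get(addr, set()) - {writer_id})


def broadcast_targets(num_caches, writer_id):
    """Without a filter, every other cache is snooped on each write."""
    return [c for c in range(num_caches) if c != writer_id]


snoop_filter = SnoopFilter()
snoop_filter.record_fill(0, 0x40)
snoop_filter.record_fill(3, 0x40)

filtered = snoop_filter.targets_for_write(0, 0x40)
unfiltered = broadcast_targets(8, 0)
print(f"filtered snoops: {filtered}, broadcast snoops: {unfiltered}")
```

In this eight-cache example a write from cache 0 triggers one targeted snoop instead of seven broadcast snoops.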

Conclusion

The rapid advancement of semiconductor technology has necessitated the optimization of communication and data sharing in multi-core SoC designs. The progression from shared buses to hierarchical and crossbar buses has given rise to the prominence of NoC architectures, particularly in addressing the complexities posed by intricate and diverse SoC configurations. Cache coherence, serving as a fundamental element, ensures data consistency and system performance, while the choice between coherent and non-coherent interconnects depends on specific design considerations.

The role of caches in enhancing data access speed is pivotal, but it introduces complexities in coherency management. Engineers face critical decisions in selecting cache coherency protocols, navigating technical challenges and trade-offs to align with performance and efficiency goals. As SoC technology evolves, optimizing communication, effectively managing cache coherence, and making informed decisions on interconnect technologies become increasingly crucial. Solutions offering flexible and scalable interconnect options signify significant progress in addressing these evolving challenges.

Learn more about Arteris cache coherent and non-coherent IP.
