RaiderChip showcases the evolution of its local Generative AI processor at ISE 2026

Its ability to run multiple AI models simultaneously opens the door to a new generation of intelligent systems built entirely on local hardware

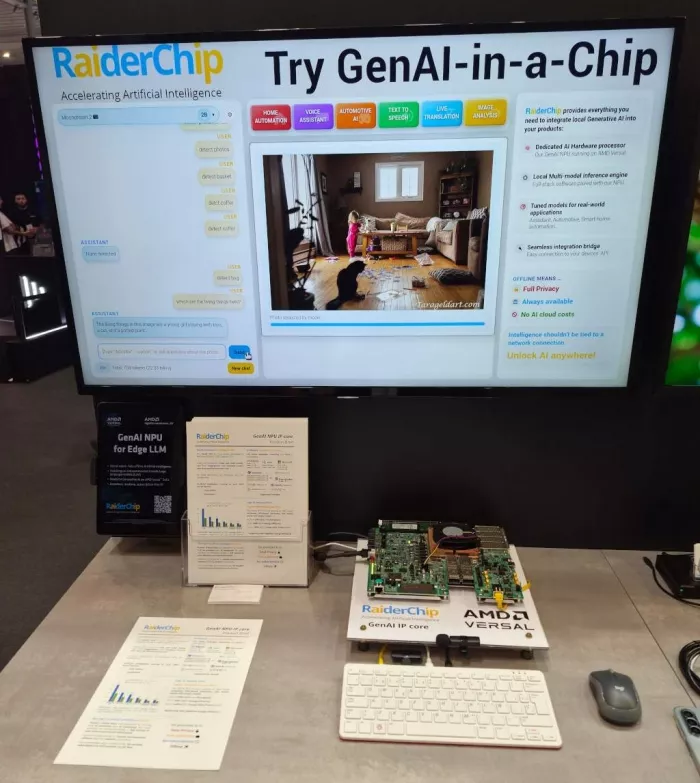

Spain, February 16th, 2026 -- Voice, text, reasoning, and vision combined and running offline and in real time on a single device. At ISE 2026, RaiderChip showcased the evolution of its local Generative AI processor (NPU), ready for integration into SoCs and ASICs, enabling visitors to interact with up to 18 different models, including both LLMs and multimodal models, all running locally on device.

RaiderChip GenAI NPU Demos

Voice interaction played a central role in the demonstration, serving as the platform’s primary user interface. Attendees could speak directly to the device in any of more than 70 supported languages, interacting with the models naturally and without the limitations of keyboards or traditional user interfaces.

“We believe voice will become the primary way people interact with all kinds of devices. Buttons and rigid, predefined commands will give way to natural conversations, where systems respond in real time in the user’s own language,” said Victor Lopez, RaiderChip’s CTO.

To demonstrate the real-world potential of local Generative AI, RaiderChip presented two concrete use cases — Home Automation and Automotive — enabling users to query and control home devices and vehicle systems through natural conversations, powered by the simultaneous execution of multiple Generative AI models on RaiderChip’s NPU.

“We selected these two environments because they clearly illustrate where local processing is especially critical. In home automation, privacy within the home is paramount; in automotive, safety and reliability are essential — particularly in situations such as tunnels or remote areas where connectivity cannot always be guaranteed. In both cases, the ability to operate offline and keep data on the device is fundamental,” added RaiderChip’s CTO.

RaiderChip's Demo at AMD booth (ISE 2026)

Unlike previous generations of assistants based on commands and rigid workflows — such as those popularized by solutions like Alexa — the self-discovering approach enables a shift from executing predefined instructions to achieving a genuine understanding of context. The system does not require an exact command; instead, it interprets the conversation, reasons about the user’s intent, and decides how best to assist in each situation. As a result, interaction moves beyond a sequence of commands and becomes a natural, flexible, and adaptive conversation.

“Our demo does not rely on separate systems for the home and the vehicle. RaiderChip’s hardware runs the same models in both cases (listening, transcribing, reasoning, and acting), with only the context and available actions changing. The system understands the conversation, evaluates what it can do in each environment, and acts accordingly, just as a person would do,” added RaiderChip’s CTO.

With its participation at ISE 2026, RaiderChip has demonstrated that local, multimodal, real-time Generative AI is already a tangible reality, enabled by the flexibility of its Generative AI processor. The demo serves as a technical showcase of the NPU, which locally executes models that understand context, reason, and assist users naturally. These models run entirely on RaiderChip’s hardware, which is ready to be integrated directly into end products, without reliance on the cloud and without compromising performance, privacy, or user experience.
