DNN IP


Compare 15 IP from 9 vendors (1 - 10)


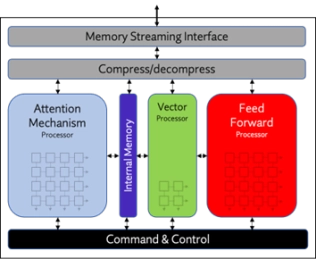

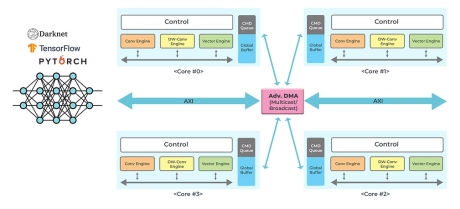

NPU IP Core for Edge

- Origin Evolution™ for Edge offers out-of-the-box compatibility with today's most popular LLMs and CNNs. Attention-based processing optimization and advanced memory management ensure optimal AI performance across a variety of networks and representations.

- Featuring a hardware and software co-designed architecture, Origin Evolution for Edge scales to 32 TFLOPS in a single core to address the most advanced edge inference needs.


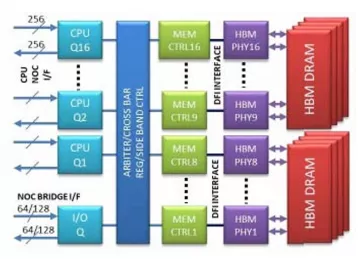

HBM Memory Controller

- Low latency, high bandwidth

- Supports HBM or DDRx memory types

- 16 parallel access channels

- Multiple independent internal queues


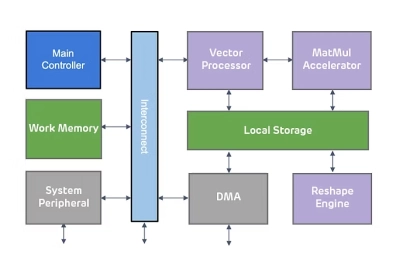

Highly scalable inference NPU IP for next-gen AI applications

- ENLIGHT Pro is engineered for flexibility, scalability, and configurability, improving overall efficiency in a compact footprint.

- ENLIGHT Pro supports the transformer model, a key requirement in modern AI applications, particularly Large Language Models (LLMs). LLMs, which are trained with deep learning techniques on extensive datasets, are instrumental in tasks such as text recognition and generation.


Perceptual Video Quality Optimization IP

- Fully hardwired IP

- High performance

- Codec-agnostic

- Frame-based pre-processor


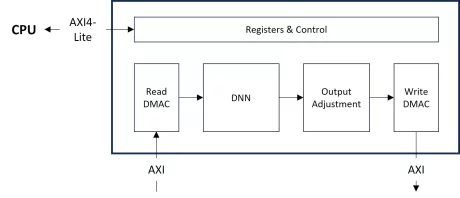

High-Performance NPU

- The ZIA™ A3000 AI processor IP is a low-power processor specifically designed for edge-side neural network inference processing.

- This versatile AI processor offers general-purpose DNN acceleration, empowering customers with the flexibility and configurability to optimize performance for their specific PPA targets.

- A3000 also supports high-precision inference, reducing CPU workload and memory bandwidth.


4-/8-bit mixed-precision NPU IP

- Features a highly optimized network model compiler that reduces DRAM traffic from intermediate activation data by grouped layer partitioning and scheduling.

- ENLIGHT is easy to customize to different core sizes and performance levels for customers' target market applications. It achieves significant efficiencies in size, power, performance, and DRAM bandwidth, based on the industry's first adoption of 4-/8-bit mixed quantization.
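As a rough illustration of what 4-/8-bit mixed quantization means in general, the sketch below quantizes each layer's weights to 4 bits when the worst-case rounding error stays within a tolerance and falls back to 8 bits otherwise. It is not ENLIGHT's compiler or algorithm. The function names, the per-layer selection rule, and the tolerance value are assumptions made for this example only.

```python
def quantize(values, bits):
    """Symmetric uniform quantization to signed `bits`-bit integer codes."""
    qmax = 2 ** (bits - 1) - 1            # 7 for 4-bit, 127 for 8-bit
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak else 1.0  # avoid dividing by zero for all-zero layers
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate real values."""
    return [c * scale for c in codes]


def max_error(values, bits):
    """Worst-case absolute error introduced by quantizing at `bits` bits."""
    codes, scale = quantize(values, bits)
    return max(abs(v - r) for v, r in zip(values, dequantize(codes, scale)))


def choose_bits(values, tolerance):
    """Hypothetical rule: use 4 bits if the error is acceptable, else 8."""
    return 4 if max_error(values, 4) <= tolerance else 8


def model_bytes(layers, tolerance):
    """Total weight storage in bytes under the mixed 4-/8-bit assignment."""
    total_bits = sum(choose_bits(w, tolerance) * len(w) for w in layers.values())
    return total_bits / 8


# Toy weights (made up): the first layer fits a 4-bit grid, the second does not.
layers = {
    "conv1": [0.7, -0.7, 0.3, 0.0],
    "fc": [0.9, -0.013, 0.4, 0.21],
}
for name, weights in layers.items():
    print(name, choose_bits(weights, tolerance=0.01), "bits")
print("mixed-precision bytes:", model_bytes(layers, tolerance=0.01))
```

Layers that tolerate the coarser 4-bit grid take half the storage and memory traffic of an 8-bit layer, which is the general source of the size and bandwidth savings that mixed quantization targets.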


ARC EV Processors are fully programmable and configurable IP cores that are optimized for embedded vision applications

- ARC processor cores are optimized to deliver the best performance/power/area (PPA) efficiency in the industry for embedded SoCs. Designed from the start for power-sensitive embedded applications, ARC processors implement a Harvard architecture for higher performance through simultaneous instruction and data memory access, and a high-speed scalar pipeline for maximum power efficiency. The 32-bit RISC engine offers a mixed 16-bit/32-bit instruction set for greater code density in embedded systems.

- ARC's high degree of configurability and instruction set architecture (ISA) extensibility contribute to its best-in-class PPA efficiency. Designers can add or omit hardware features to optimize the core's PPA for their target application, with no wasted gates. ARC users can also add their own custom instructions and hardware accelerators to the core, and tightly couple memory and peripherals, enabling dramatic improvements in performance and power efficiency at both the processor and system levels.

- Complete and proven commercial and open source tool chains, optimized for ARC processors, give SoC designers the development environment they need to efficiently develop ARC-based systems that meet all of their PPA targets.


PowerVR Neural Network Accelerator - The ultimate solution for high-end neural network acceleration

- Security

- Lossless weight compression


PowerVR Neural Network Accelerator - The perfect choice for cost-sensitive devices

- API support

- Framework support

- Bus interface

- Memory system