NVMe host IP for computing accelerator

By Jerome Gaysse, IP-Maker

NVM Express (NVMe) SSDs are well adopted by the storage industry. It delivers high performances in term of IOPS, throughput and latency. It comes with a various range of capacity and form factors including PCIe Add-In-Card, 2.5" U.2, M.2 and recently as a single BGA chip. NVMe offers an excellent interface for the next generation of SSDs, based on the PCIe Gen4 physical layers (16Gbps per lane) combined to new fast non-volatile memories. It appears that the trend is to use NVMe SSDs as the standard storage drive, from consumer products (laptop, ultrabook, tablet...) to hyperscale data centers. In the data center world, there has been a huge work done by the world wide industry to develop efficient implementations of the NVMe protocol in the SSDs/ What about on this host side? Delivering high performance NVMe SSDS requires efficient SSD and host. The host is most of the time the main CPU of the server. IP-Maker, a leader storage IP company, already introduced a NVMe host technology IP for embedded applications and edge computing, where this technology solved the problem of using a NVMe SSDs where those applications can’t offer a high end CPU because of limited resources (power, cost, space). This paper now presents this technology for data center use cases.

The need of computing accelerator and local storage

The data amount to process in the data center applications requires a lot of computing and storage resources, and lead to massive data transfers between compute nodes and storage arrays. New kind of architectures has emerged where the common goal is to bring computing features close to the storage, based on FPGAs as the computing accelerators. An optimized integration is to put FPGA and storage on the same board. For a simple management, capacity choice and easy integration, the use of NVMe SSDs appears to be the ideal choice, which is easily feasible with M.2 and BGA SSDs from a mechanical point of view. But on a typical server, the main CPU manages the NVMe host part of the protocol through the native driver available in the operating system. This driver uses a lot of computing resources to process the NVMe commands and IOs.

The computing capabilities to sustain 1 million IOPS are more than 3 full time cores running at 3GHz. For a Gen3x4 SSD, which can deliver about 750k IOPS, about 2 cores are necessary. As the server architectures are evolving with the introduction of computing accelerators, the need to attach NVMe SSDs directly to FPGA-based computing accelerators will become more and more important in a near future. Based on this context, and assuming a 1 GHz clock frequency for cores embedded in a FPGA, 6 cores will be needed in such products to saturate a PCIe Gen3x4 NVMe SSD. That represents a high gate count budget and power.

NVMe Host technology description

NVMe Host IP architecture

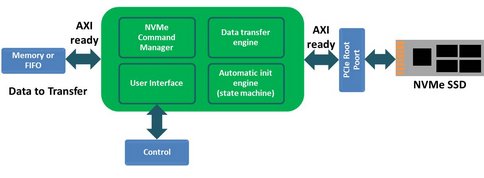

The NVMe host IP from IP-Maker comes as a RTL IP. There is no need of any software for the NVMe command & data transfer management, therefore delivering low latency. The IP features AXI and Avalon interfaces, and four main blocks: a control unit with its configuration registers, a NVMe command manager for the NVMe transaction setup, a data transfer manager, and an auto-init engine. The data buffer can be a memory or a FIFO for streaming uses cases. On the other side it is connected to the PCIe root complex controller (PCIe Gen3x8 capable). Few instances of this IP can be done for a higher total bandwidth. The only limitation will be the available PCIe lanes on the FPGA. For expert users, in addition to the standard NVMe commands, the IP also provides the possibility to send/receive vendor specific commands, and it supports other protocols like Open Channel SSD.

This IP is easy to use and integrate. The automatic initialization engine takes in charge all the PCIe and NVMe detection, configuration and initialization. The user doesn’t have to manage this part. Regarding the data transfer it is also very simple. When the user application needs to initiate a data transfer, it configures the IP through the control unit with the transfer details such as address and size. For example, in case of a write access, the NVMe command manager translates the transfer details into NVMe commands and sends the doorbell to the SSD. At this time, the NVMe SSD takes the control of the data transfer, based on its internal DMAs, and ready the data from the memory buffer of the host IP. When the data transfer is completed, the NVMe Host IP receives the completion information, and makes the memory buffer available.

In case of a read access, the host IP makes sure that a buffer is free, then send the doorbell to the device. The data transfer takes place, and the received data are stored into the memory buffer. The completion status is get through the control unit, and the user can process these data.

Reference design

A reference design is available, based on a Ultrascale FPGA from Xilinx (or Ultrascale +). It is connected to a M.2 SSD through a board adaptor. On the FPGA side, an easy to use testbench design has been implemented, including the NVMe Host IP. The FPGA board is connected to a computer via an UART to control test execution.

Performance measurement

Measurement process: the embedded testbench counts the clock cycles from the beginning to the end of the data transfer. The testbench allow multiple configurations: sequential/random, IO size, read/write. The performances benchmark is done with a Gen3x4 Samsung NVMe SSD and reaches the maximum of the SSD specification: 3GB/s for sequential read access, and 2.3GB/s for a sequential write access. In addition, such performances is reached by a NVMe Host IP using only few percent of the FPGA gates, which is very efficient compared to a CPU.

This NVMe Host IP from IP-Maker is able to provide high performance storage to FPGA-based computing accelerator thanks its tiny footprint and fast bandwidth capabilities.

It is a real added value for such new products, by delivering fast local storage, therefore simplifying system architecture and optimizing data transfers between compute and storage. This local storage can be storage tier or a local cache depending on the application needs. It van applied to the following use cases: HBA, computational storage, storage controllers and smart NIC.

Related Semiconductor IP

- High Performance Embedded Host NVMe IP Core

- NVMe Host Controller

- Xilinx Kintex 7 NVME HOST IP

- Xilinx ZYNQ NVME HOST IP

- Xilinx Ultra Scale NVME Host IP

Related White Papers

- NVMe IP for Enterprise SSD

- Menta eFPGA IP for Edge AI

- SignatureIP's iNoCulator Tool - a Simple-to-use tool for Complex SoCs

- Select the Right Microcontroller IP for Your High-Integrity SoCs

Latest White Papers

- FastPath: A Hybrid Approach for Efficient Hardware Security Verification

- Automotive IP-Cores: Evolution and Future Perspectives

- TROJAN-GUARD: Hardware Trojans Detection Using GNN in RTL Designs

- How a Standardized Approach Can Accelerate Development of Safety and Security in Automotive Imaging Systems

- SV-LLM: An Agentic Approach for SoC Security Verification using Large Language Models