Casting a wide safety net through post processing Checking

By Purushottham R, Nigitha A, Jayashree R, Sharan Basappa (HCL Technologies)

Introduction

Post processing stage is a critical part of any verification project. The post processing step for catching errors is done for multiple reasons some of which are highlighted below,

- Look for standard error signatures (e.g. UVM_ERROR) in the log files

- Look for error signatures that cannot be logged through UVM reporting (e.g. third party components that may not strictly follow UVM reporting mechanism etc.)

- Ignore error signatures globally (e.g. known errors that need temporary or permanent bypass)

- Ignore error signatures specific to a file (or a specific test case that is expected to create error scenarios)

If you notice the above cases, the post processing is done mainly to parse for error patterns. However, there is another aspect to the post processing, which is generally not understood well, but it can play a critical role in the verification cycle. That is, making sure that, signatures that are expected to be present in the log files, are actually present. While the former case results in error when a signature is found, in the latter case, it is treated as an error if an expected signature is not found.

A few examples of this type of post processing checks that are needed are listed below,

- The tests have gone through the expected stages/events so that we don’t end up with false positive

- Scoreboard is disabled when they are not expected to

- Assertions are disabled when they are not expected to

- Certain testbench checks are disabled when they are not expected to

- Events like interrupts have occurred in the expected tests

A typical DV project involves a lot of engineers making simultaneous code updates. Such a collaborative work can result in a lot of inadvertent errors. To handle such common errors, the project team will do review checks at the end of every simulation or a regression run to ensure that any oversight errors have not been introduced. However, this process of review checks itself is manual and hence, is not only effort intensive but is prone to errors.

In this paper, we explain how we adopted the post processing method to make sure we check for signatures that are expected are present in the log files. This acted as a safety net when engineers made inadvertent mistakes and introduced issues in the code base.

Related work

While we are aware of post processing approach to catch error signatures in the log files, we haven’t come across using the post processing methods that look for expected signatures are present in the log files. This is normally done manually by reviewing log files and if needed, additionally reviewing the waveforms.

One of the shortcomings of the prevailing manual method to check that the expected signatures are indeed present. While this approach does work, it has few shortcomings,

- High effort

- The more the number of regression sessions, the more the effort

- Effort increases with increased log files count and hence scaling is an issue

Implementation Approach

The post processing was deployed using Python script. The script accepted JSON file as an input. The JSON file contains a list of rules.

The rules are nothing but signature patterns that need to be parsed in the log files. Some of the key features of the checker parser are captured below,

|

Feature |

Remarks |

|

Recursive processing |

Process log file recursive starting from the root directory |

|

Patterns in regex |

Patterns can be defined in Python native regex form |

|

Skip patterns |

patterns can be skipped globally |

|

File specific skip patterns |

Patterns can be skipped per file |

|

File extension |

File extension can be user defined |

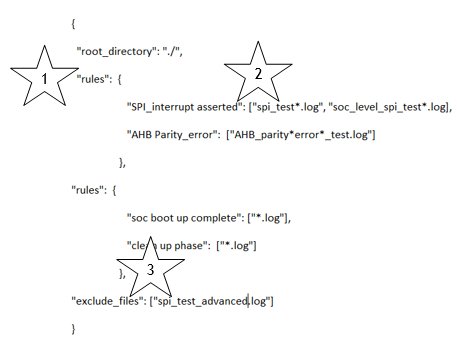

Sample rule file is shown in the snapshot below.

|

Call out |

Description |

|

1 |

Rule group. There can be multiple groups, one for each category of tests |

|

2 |

One example rule. This specific rule indicates to checker parser that it should expect a string “SPI_interrupt asserted” in all the logs that match spi_test*.log (e.g., spi_test1.log) |

|

3 |

This rule indicates to the checker parser that it should skip file named spi_test_advanced.log. |

The key python modules used are,

- Re – regular expression module

- Os – operating system module

- Json – json related operations

- Logging – logger module

Results

For the project where we deployed this method, we had a total of 100+ rules for signatures. The 100 odd rules themselves can be broadly classified into the following categories:

|

Rule type |

Remarks |

|

Look for interrupt signatures in specific test cases |

Applicable to the class of tests that expect an interrupt to be generated. There are cases where the checker for these interrupt events is not robust enough or such checks have been disabled. |

|

Look for interrupt assertions signatures |

Our testbench had option to mask the assertion checks. In some cases, the engineers would disable them and leave them as it is. Most of such errors were found much later accidentally or through review process |

|

Error signature seen in expected error tests |

This is needed to make sure that error tests indeed create the error scenarios. This is to evaluate the quality of the test |

|

Expected sequence of signatures are seen in tests |

We noticed that early in the verification cycle, some of the tests did not even run and yet these were not flagged as test failures due to various issues. This check to ensure that the proper sequence of events happen from the start to the end. E.g., boot up completed |

|

Expected assertion signatures seen in relevant tests |

Expected assertions got triggered which indicates that expected scenario was created by the test |

There were several issues that were found. A sample of issues are given below,

- Many tests did not clear the interrupts as a part of ISR servicing

- Tests did not generate the interrupt as they were masked at the IP

- Due to DUT change and testbench being out of sync, the DUT initialization was not happening properly

Final thoughts

Traditionally, we use post processing to check for error signatures in the log files. We don’t use post processing to check for signatures that we expect to be present. We also use, in certain cases, manual method to review the log files.

Our proposed approach brought a lot of value due to the following,

- Check certain signatures that could not be checked directly in the testbench environment

- Make sure that there are no oversight issues and hence get better confidence in terms of quality of regression runs

- Act as an additional checker to ensure that the expected test intention was met

- Reduce a significant effort by reducing manual review

Related Semiconductor IP

- Ultra-Low-Power LPDDR3/LPDDR2/DDR3L Combo Subsystem

- 1G BASE-T Ethernet Verification IP

- Network-on-Chip (NoC)

- Microsecond Channel (MSC/MSC-Plus) Controller

- 12-bit, 400 MSPS SAR ADC - TSMC 12nm FFC

Related Articles

- Writing a modular Audio Post Processing DSP algorithm

- Stepping through the processing requirements for MPEG-4

- Enhancing Verification through a Highly Automated Data Processing Platform

- Formal Property Checking for IP - A Case Study

Latest Articles

- Extending and Accelerating Inner Product Masking with Fault Detection via Instruction Set Extension

- ioPUF+: A PUF Based on I/O Pull-Up/Down Resistors for Secret Key Generation in IoT Nodes

- In-Situ Encryption of Single-Transistor Nonvolatile Memories without Density Loss

- David vs. Goliath: Can Small Models Win Big with Agentic AI in Hardware Design?

- RoMe: Row Granularity Access Memory System for Large Language Models