Bulletproofing PCIe-based SoCs with Advanced Reliability, Availability, Serviceability (RAS) Mechanisms

1. Introduction

As silicon manufacturing process nodes keep shrinking and transistors get smaller, System-on-Chip (SoC) are increasingly subject to failures due to changing external conditions such as temperature, EMI, power surges, Hot Plug events, etc.

The transition to PCIe 4.0 and 5.0 with increasing PCIe signaling speeds (16GT/s and 32GT/s) also augments the risk of errors due to tightening timing budgets inside the SoC and electrical issues outside the SoC (e.g. crosstalk, line attenuation, jitter, etc.).

Furthermore, the ever growing number of PCIe components and systems designed to different revisions of the Specification increases the risk for interoperability issues.

As a result, chip designers who use PCIe as the main communication interface in their SoCs are looking for ways to bulletproof their designs by implementing advanced Reliability, Availability, and Serviceability (i.e. RAS) mechanisms that go above and beyond those included in the PCI Express Base Specification.

We start this article by defining “RAS” in the context of PCIe interfacing and looking at the provisions for RAS mechanisms in the PCIe Specification. We then explore some potential PCIe hazards SoC designers can face and the RAS mechanisms that can be implemented to detect, recover, or prevent these hazards. We conclude with recommendations for choosing a PCIe silicon IP solution that helps mitigate these risks.

2. RAS in the context of PCIe

In the context of a SoC’s PCI Express interface, the 3 components of RAS can be defined as follows:

- Reliability: the PCIe interface should never cause the SoC or system to fail. As such, any mechanism that allows the PCIe interface to be more tolerant to changing external or internal conditions is considered a Reliability feature.

- Availability: the PCIe interface should remain operational in case of failure of the SoC or system. As such, any mechanism that allows the PCIe interface to continue to operate in case of component failure is considered an Availability feature.

- Serviceability: the PCIe interface should enable quick fixing of PCIe related issues. As such, any mechanism that allows quick and easy identification of PCIe runtime issues or design bugs is considered a Serviceability feature.

3. RAS features in PCIe protocol

The PCIe Base Specification defines a set of mechanisms primarily intended for link-level reliability. These include:

- LCRC, ACK/NAK, Replay: ensure that packets sent are received correctly across a link; if not received correctly (LCRC error), a packet is NAKed and Replayed (i.e. resent).

- ACK/NAK timeouts: ensures that the link partner is alive and forces a link retraining if a ACK or NAK is not received within the specified time.

- ECRC: end-to-end CRC generation/check that ensures packets are not corrupted in their journey between a requester and a completer (possibly when going through other components such as switches).

- Timeout counters and LTSSM failsafe mechanisms: ensure that Link Training and Status State Machine always reinitializes in case of timeout, with link partners returning to known state.

- Advanced Error Reporting (AER): an optional PCIe capability (ECN) that provides advanced error signaling and logging.

These mechanisms provide basic protection against PCIe link level hazards however prove insufficient for mission-critical deployments in markets such as automotive, HPC/Cloud computing, and enterprise storage/networking.

Here are a few examples of potentially hazardous conditions that require more advanced RAS mechanisms to be detected, reported and/or corrected:

- The device issues intermittent retries, due to link instability or credit starvation: the system may still be functional however performance is degraded.

- Link partner’s Flow Control updates (ACK, NAK, UpdateFC, …) are received slightly after timeout due to high system latency. Here again the system may still be functional but with intermittent retries or transitions to the Recovery state.

- Link does not reach the L0 state due to non-compliant TSx sequence, Receiver Detect error, etc.: system does not link up.

4. Best practices for enhanced reliability

To improve the reliability of PCIe communications, PCIe designs have integrated a set of best practice mechanisms that are not part of the PCIe Base Specification. These include:

- Parity: adding one or more parity bits along the PCIe data path. 1 bit of parity per byte of data is considered good coverage; other options such as 1 bit of parity for 16-bit or 32-bit of data offer lesser coverage but have a lower cost. Parity however does not protect against multi-bit errors.

- ECC (Error Correcting Code): implementing ECC logic for PCIe data at rest, in particular for receive and transmit buffers. ECC is typically designed for 2-bit error detection and 1-bit correction which provides a good tradeoff between coverage and cost; 8-bit ECC code per 64-bit of data is the common usage.

The combination of Parity and ECC do a good job at protecting PCIe payload in flight and at rest, however mission-critical applications demand for more advanced RAS mechanisms, which we discuss in the following section.

5. Proposed advanced RAS mechanisms

In this section we describe some PCIe related issues observed in production environments that could quickly be detected, reported and/or corrected using advanced RAS mechanisms. We propose a specific solution to each problem and a generalization of the solution in term of a Reliability, Availability, and/or Serviceability mechanism to be implemented inside and/or around the PCIe interface logic.

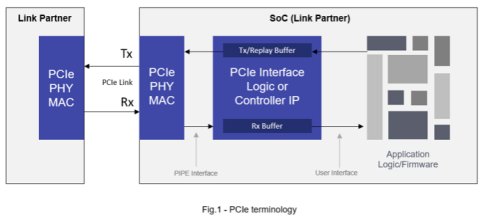

The terminology used throughout this chapter is illustrated in Figure 1.

5.1. Non-compliant component

The following examples illustrate issues observed due to non compliance of either link partner.

5.1.1. Equalization timeout due to PHY specific FOM timing

In this scenario, link should initialize at 16GT/s (Gen4) speed as both link partners support this line rate but instead initializes at 2.5GT/s (Gen1) speed, without triggering any errors.

This is due to the time required by the SoC’s PHY Rx circuitry to compute a Figure Of Merit (FOM) for a given preset in Equalization Phase 2, which exceeds the Preset timer for EQ Phase 2 of the SoC’s PCIe controller, in turn preventing the next preset to be tested (possibly to obtain a better Bit Error Rate - BER) and forcing the link in some cases to fall back to Gen1 speed.

The problem can be detected by monitoring the appropriate timeout condition, and can be resolved by increasing the EQ Phase 2 timeout counter (up to 50% as allowed by the PCIe Specification) to allow for multiple presets to be tested and achieve optimal BER. The EQ timeout counters can be further increased beyond PCIe Specification recommendations for even greater margin.

The solution can be generalized with a Reliability mechanism that includes:

- exposing every LTSSM ‘timeout expired’ condition to the SoC’s application logic for detecting the issue (i.e. observability),

- extending the range of every LTSSM timeout counter by 50% minimum and allowing these counters to be dynamically programmed by the SoC’s application logic.

5.1.2. Excessive replays due to link partner’s ACK latency

In this scenario, the SoC is able to transmit write packets and read requests however the throughput observed is lower than what is expected based on the link speed and active lanes.

This is due to the link partner’s ACK latency that exceeds the recommended maximum latency defined in the PCIe Specification, resulting in transmission replays and affecting performance.

The problem can be detected by monitoring the number of Replays initiated, and can be resolved by increasing the ACK timeout counter to accommodate the extra latency.

The solution can be generalized with the Reliability mechanism proposed in the previous section. It should be noted however that the size of the Replay Buffer may limit the amount by which the ACK timeout can be increased, in order to avoid buffer overflow.

5.2. Tolerance to errors and error injection

In this scenario, a deadlock is observed after a few days of normal operation with packets no longer being transmitted on the link due to insufficient Tx credit available to the SoC’s application logic.

This is due to malformed TLPs transmitted on the link by the PCIe controller, possibly the result of an uncorrectable ECC or parity error at the Transmit Buffer level. The malformed TLP is discarded by the link partner’s receiver and associated credits are lost. Deadlock occurs as a consequence of this credit leakage when Tx credits are no longer available, which can occur over the course of several days depending on the frequency of the errors.

The credit leakage can be identified with an indication from the PCIe controller of the number of malformed TLP transmitted, and can be corrected by ensuring that the PCIe controller does not update the associated credits.

A generalization of the solution involves the implementation of a Reliability mechanism in which the PCIe interface logic is able to transmit errored TLPs on the PCIe link without incurring side effects such as credit leakage. This allows testing of the system hardware and system software response to errors. Similarly for the receive path, the ability for the PCIe interface to generate errored packets on the user interface without side effects allows testing the application logic and SoC’s response to errors.

The mechanism typically requires a dedicated set of registers and interface to the PCIe interface logic allowing for:

- Controlling the number, type, and frequency of errors

- Defining hardware triggers for the error injection

- Logging and reporting errors

- Allowing register access by SoC firmware and/or host software

The number of injectable errors is such that it is ultimately a tradeoff between gate count, implementation complexity, and likeliness of occurence. Common errors that may be supported include LCRC Error, Sequence # Errors, Nullified TLP, Malformed Packet Errors, Block DLLPs (e.g. ACK/NAK), Force DLLPs (e.g. NAKs), Symbol/Framing errors, Flow control errors (e.g. nonsensical values, blocked updates).

5.3. Layer-based monitoring and troubleshooting

In one instance, the PCIe link does not link up and LTSSM does not progress past the Detect state possibly due to a problem during the Receiver Detect sequence.

In another instance, the link is unstable with LTSSM frequently transitioning to the Recovery state. This issue could have multiple causes, originating from either the SoC’s PCIe interface or the link partner’s PCIe interface, including a problem at PHY/MAC level during the training (TSx) sequence, or a problem at Transaction level such as the reception of an Unsupported TLP.

These issues can be detected by probing relevant signals and events at the various layers of the PCIe interface. Bringing out the PIPE interface out on the application layer interface can help with PHY/MAC layer issues. It should be noted that PIPE receive data (RxData) should go through 128B/130B unscrambling (for PCIe Gen3 and Gen4) to be readable.

This mechanism can further be extended to the PCIe interface’s Physical Coding Sublayer (PCS), Data Link Layer (DLL) and Transaction Layer (TL) whereas relevant signals and events are brought out on the application layer’s interface for easy monitoring and troubleshooting. These signals/events may include:

- PHY/PCS Layer: Elasticity Buffer SKP Add/Delete, Speed change/Link width change, Entry to Recovery state, Lane state changes

- Data Link Layer: Tx/Rx Ack DLLP, Tx/Rx Update FC DLLP, Tx/Rx Nullified TLP, Rx Duplicate TLP

- Transaction layer: Tx/Rx packet types, FC credit exhaustion

6. Closing statement

As PCI Express is being further deployed into mission critical applications in the Automotive, AI, and Enterprise markets, the need for higher level of reliability, availability, and serviceability is increasing. Whether they are using a homegrown PCIe interface solution or licensing a PCIe IP solution, SoC designers are looking for mechanisms that provide better visibility into the PCIe interface behavior and better control over its operation. Implementing programmable timers and timeouts inside the PCIe interface logic as well as mechanisms for generating errors without side effects improve the reliability of the system; having dedicated monitoring, status, and control interfaces for each of the PCIe interface’s functional layer (PHY, PCS, MAC, DLL, TL) allow SoC designers to flag specific events and errors, and improve the overall serviceability and availability of the system.

PLDA is working closely with customers to offer a comprehensive set of RAS mechanisms in its line of PCIe controller IP, such as those described in this article, allowing customers to confidently deploy their SoCs and systems in mission-critical applications in AI, HPC, Automotive, and Enterprise applications. For more information, please visit us online at www.plda.com.

Related Semiconductor IP

- Multi-Channel Flex DMA IP Core for PCI Express

- PCIe - PCI Express Controller

- PCI Express PIPE PHY Transceiver

- Scalable Switch Intel® FPGA IP for PCI Express

- Multichannel DMA Intel FPGA IP for PCI Express*

Related White Papers

- Semiconductor Reliability and Quality Assurance--Failure Mode, Mechanism and Analysis (FMMEA)

- Design Patterns for High Availability

- Integrating design and test increases reliability

- A Generalized Waveform Synthesis Mechanism for Software Radio

Latest White Papers

- Breaking the Memory Bandwidth Boundary. GDDR7 IP Design Challenges & Solutions

- Automating NoC Design to Tackle Rising SoC Complexity

- Memory Prefetching Evaluation of Scientific Applications on a Modern HPC Arm-Based Processor

- Nine Compelling Reasons Why Menta eFPGA Is Essential for Achieving True Crypto Agility in Your ASIC or SoC

- CSR Management: Life Beyond Spreadsheets