An overview of the Machine Learning pipeline and its importance

By V Srinivas Durga Prasad, Softnautics

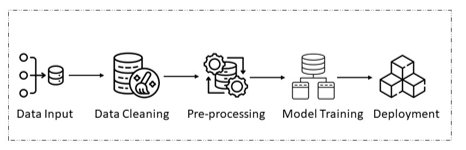

A Machine Learning (ML) pipeline helps automate machine learning processes. It works by transforming and correlating a sequence of data in a model that can then be tested and evaluated to determine whether the outcome is positive or negative. In a mainstream design, every stage, from data extraction and pre-processing to model training and tuning, model analysis, and deployment, runs as a single entity. This means that the data is extracted, cleaned, prepared, modeled, and deployed using the same script. Because machine learning models typically contain far less code than other software applications, keeping all resources in one place makes sense.

Advancements in deep learning and neural network algorithms are expected to help the global ML market gain traction. Furthermore, many companies are strengthening their deep learning capabilities to drive innovation, which is expected to fuel ML market growth across industries such as automotive, consumer electronics, and media & entertainment. According to Precedence Research, the global ML-as-a-service market was valued at USD 15.47 billion in 2021 and is predicted to reach USD 305.62 billion by 2030, a CAGR of 39.3 percent from 2022 to 2030.
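As a quick arithmetic check, the quoted market figures are consistent with nine annual compounding periods, from the 2021 base value to the 2030 forecast. The sketch below computes a compound annual growth rate from a start value, an end value and a number of years; the helper name `cagr` is ours and is not taken from the cited report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Market size in USD billions: 2021 value and 2030 forecast (9 growth years).
rate = cagr(15.47, 305.62, years=9)
print(f"CAGR 2022-2030: {rate:.1%}")  # prints "CAGR 2022-2030: 39.3%"
```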

Overview of machine learning pipeline

A machine learning pipeline is a way to fully automate the workflow of a machine learning task. It does this by converting a series of data and associating it in a model whose output can then be examined. A general ML pipeline consists of data input, data models, parameters, and predicted outcomes. With a pipeline, the process of creating a machine learning model can be codified and automated. Without one, difficulties such as deploying several versions of the same model, expanding a model, and setting up the workflow arise while executing the ML process and must be handled manually; a machine learning pipeline addresses all of these issues. Because each step of the workflow functions independently within the pipeline, any module can be selected and updated as needed at any stage.
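A minimal sketch of this idea in Python, assuming each stage can be written as a function that takes the previous stage's output. The `Pipeline` class, its `replace_step` method and the toy steps are illustrative names, not an existing library API (scikit-learn, for example, ships its own `Pipeline` object built from named steps). The point is that one stage can be swapped without touching the others.

```python
from typing import Any, Callable, List, Tuple

Step = Tuple[str, Callable[[Any], Any]]


class Pipeline:
    """Runs named steps in order; each step's output feeds the next step."""

    def __init__(self, steps: List[Step]):
        self.steps = list(steps)

    def replace_step(self, name: str, func: Callable[[Any], Any]) -> None:
        """Swap out one step by name without touching the others."""
        for i, (step_name, _) in enumerate(self.steps):
            if step_name == name:
                self.steps[i] = (name, func)
                return
        raise KeyError(f"no step named {name!r}")

    def run(self, data: Any) -> Any:
        for _, func in self.steps:
            data = func(data)
        return data


# Hypothetical toy steps operating on a list of numeric strings.
pipeline = Pipeline([
    ("ingest", lambda raw: [float(x) for x in raw]),
    ("clean", lambda xs: [x for x in xs if x >= 0]),
    ("model", lambda xs: sum(xs) / len(xs)),  # stand-in "model": the mean
])
print(pipeline.run(["1", "2", "-5", "3"]))  # 2.0

# Update only the "model" stage, e.g. to return the maximum instead.
pipeline.replace_step("model", max)
print(pipeline.run(["1", "2", "-5", "3"]))  # 3.0
```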

Overview of ML Pipeline

- Data input

The data input step is the first step in every ML pipeline. In this stage, the data is organized and processed so that it can be used in subsequent steps.
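A minimal sketch of a data input step, assuming the raw data arrives as CSV text; the field names and sample rows are hypothetical. It uses Python's standard `csv.DictReader` to organize each row into a record, converts numeric fields, and maps empty cells to `None` so that later validation and cleaning steps can detect them.

```python
import csv
import io
from typing import Dict, List

# Hypothetical raw input; in practice this could come from a file, database or API.
RAW_CSV = """age,income,label
34,52000,yes
29,,no
45,61000,yes
"""


def load_records(text: str) -> List[Dict[str, object]]:
    """Parse CSV text into records, converting numeric fields and
    mapping empty cells to None so later steps can detect them."""
    records = []
    for row in csv.DictReader(io.StringIO(text)):
        records.append({
            "age": int(row["age"]) if row["age"] else None,
            "income": float(row["income"]) if row["income"] else None,
            "label": row["label"],
        })
    return records


records = load_records(RAW_CSV)
print(records[1])  # {'age': 29, 'income': None, 'label': 'no'}
```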

- Validation of data

Data validation is the next step and must be completed before a new model is trained. It focuses on the statistics of the new data, such as its range, the number of classes, and the distribution of subgroups. Comparing datasets against each other reveals anomalies, and a variety of tools can do this, for example scripts written in Python or R, or the Python pandas library.
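A minimal sketch of such a comparison using only the Python standard library (pandas would be a common choice for larger tables). It checks a new batch against reference statistics assumed to come from the previous training data: the value range, the set of known classes, and the class distribution. The reference values and the `max_shift` threshold of 0.2 are assumptions chosen for illustration, not a standard.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def class_distribution(labels: Sequence[str]) -> Dict[str, float]:
    """Share of each class label in the batch."""
    counts = Counter(labels)
    total = len(labels)
    return {cls: n / total for cls, n in counts.items()}


def validate_batch(values: Sequence[float], labels: Sequence[str],
                   ref_range: Tuple[float, float], ref_dist: Dict[str, float],
                   max_shift: float = 0.2) -> List[str]:
    """Return a list of anomaly messages (empty if the batch looks fine).

    Illustrative checks:
    - every value lies inside the reference range,
    - no class appears that was absent from the reference data,
    - each class share differs from its reference share by at most max_shift.
    """
    issues = []
    lo, hi = ref_range
    out_of_range = [v for v in values if not lo <= v <= hi]
    if out_of_range:
        issues.append(f"{len(out_of_range)} value(s) outside [{lo}, {hi}]")
    new_dist = class_distribution(labels)
    unseen = set(new_dist) - set(ref_dist)
    if unseen:
        issues.append(f"unseen classes: {sorted(unseen)}")
    for cls, ref_share in ref_dist.items():
        shift = abs(new_dist.get(cls, 0.0) - ref_share)
        if shift > max_shift:
            issues.append(f"class {cls!r} share shifted by {shift:.2f}")
    return issues


# Reference statistics assumed to come from earlier training data.
ref_range = (18, 90)
ref_dist = {"yes": 0.5, "no": 0.5}

print(validate_batch([25, 40, 33], ["yes", "no", "yes"], ref_range, ref_dist))  # []
print(validate_batch([25, 140, 33], ["yes", "yes", "maybe"], ref_range, ref_dist))
```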

- Pre-processing of data

Data pre-processing is one of the most important phases of every ML lifecycle and pipeline. Collected data cannot be fed directly into model training without first being processed, because raw data can produce sudden and unexpected results. The pre-processing stage gets the raw data ready for the ML model and is divided into several parts, such as attribute scaling, data cleansing, data quality assessment, and data reduction. Its result is the final dataset used for model training and testing. Machine learning offers a variety of pre-processing methods, such as normalization, aggregation, and numerosity reduction.
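Two of the pre-processing parts named above, data cleansing and attribute scaling (here min-max normalization), can be sketched in a few lines of plain Python. The field names and sample rows are hypothetical, and dropping incomplete rows is only one possible cleansing policy; imputing missing values is a common alternative.

```python
from typing import Dict, List, Optional


def clean(records: List[Dict[str, Optional[float]]]) -> List[Dict[str, float]]:
    """Data cleansing: drop rows with missing values and exact duplicates."""
    seen = set()
    cleaned = []
    for rec in records:
        if any(v is None for v in rec.values()):
            continue
        key = tuple(sorted(rec.items()))
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(rec)
    return cleaned


def min_max_scale(records: List[Dict[str, float]], field: str) -> List[Dict[str, float]]:
    """Attribute scaling: map `field` linearly onto [0, 1]."""
    if not records:
        return []
    values = [r[field] for r in records]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    return [{**r, field: (r[field] - lo) / span} for r in records]


raw = [
    {"age": 20.0, "income": 30000.0},
    {"age": 40.0, "income": None},     # missing value: dropped
    {"age": 60.0, "income": 90000.0},
    {"age": 20.0, "income": 30000.0},  # exact duplicate: dropped
    {"age": 40.0, "income": 60000.0},
]
dataset = min_max_scale(min_max_scale(clean(raw), "age"), "income")
print(dataset)
# [{'age': 0.0, 'income': 0.0}, {'age': 1.0, 'income': 1.0}, {'age': 0.5, 'income': 0.5}]
```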

- Data model training

Model training is the central step of every ML pipeline. In this step, the model is trained to predict the output as accurately as possible from the input (the pre-processed dataset). Larger models or training datasets, however, can present challenges, so model training and tuning need to be efficient and, where necessary, distributed. Because pipelines are scalable and can process many models at once, they can address these problems in the training stage. Different types of ML algorithms, such as supervised, unsupervised, and reinforcement learning, can be used to build data models.
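To illustrate training and tuning together, the sketch below trains several candidate models at once, one per value of a regularization hyperparameter, and keeps the one with the lowest validation error. It assumes a toy supervised task (one-feature ridge regression solved in closed form); the data and the `lambdas` grid are made up, and the standard-library thread pool only demonstrates candidates being processed concurrently.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def fit_ridge_1d(xs: List[float], ys: List[float], lam: float) -> Tuple[float, float]:
    """Fit y = w * x + b with an L2 penalty `lam` on w (closed form, one feature)."""
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    w = sxy / (sxx + lam)
    b = y_mean - w * x_mean  # intercept is not penalized
    return w, b


def mse(model: Tuple[float, float], xs: List[float], ys: List[float]) -> float:
    """Mean squared error of a (w, b) model on the given data."""
    w, b = model
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy supervised data following y = 2x + 1, split into training and validation sets.
train_x, train_y = [0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]
val_x, val_y = [4.0, 5.0], [9.0, 11.0]

# Tune the hyperparameter by training one candidate per value concurrently.
lambdas = [0.0, 1.0, 10.0]
with ThreadPoolExecutor() as pool:
    models = list(pool.map(lambda lam: fit_ridge_1d(train_x, train_y, lam), lambdas))

scores = {lam: mse(m, val_x, val_y) for lam, m in zip(lambdas, models)}
best_lam = min(scores, key=scores.get)
print(best_lam, models[lambdas.index(best_lam)])  # 0.0 (2.0, 1.0)
```

Threads keep the example short, but for CPU-bound Python code they do not give true parallelism; a process pool or a distributed training framework is the usual route for large models and datasets.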

- Deployment of model

Once the model has been trained and analyzed, it is time to deploy it. ML models are commonly deployed in three ways: through a model server, in a browser, or on an edge device, with a model server being the most typical choice. On edge devices, where the data is generated, the ML pipeline ensures that ML inference runs smoothly and offers benefits such as lower cost, real-time processing, and increased privacy. For cloud services, the ML pipeline matches resource usage to demand, reducing the processing power and data storage space required. Because model servers can host different versions of a model concurrently, they make it possible to run A/B tests on models, which can yield insightful feedback for model improvement.
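A/B testing needs a way to split traffic between model versions. A minimal sketch, assuming two versions are loaded side by side in the same process and each user is identified by a string id: hashing the id gives every user a stable assignment, so the same user always reaches the same version. The `choose_version` and `predict` helpers and the toy models are hypothetical; production model servers usually offer their own traffic-splitting configuration.

```python
import hashlib
from typing import Tuple


def choose_version(user_id: str, share_b: float) -> str:
    """Deterministically route a user to model version "A" or "B".

    Hashing the user id gives a stable bucket in [0, 1), so the same user
    always sees the same version while roughly `share_b` of users get "B".
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "B" if bucket < share_b else "A"


# Hypothetical stand-ins for two model versions hosted concurrently.
MODELS = {
    "A": lambda x: 2 * x + 1,    # current version
    "B": lambda x: 2 * x + 1.5,  # candidate version under test
}


def predict(user_id: str, x: float, share_b: float = 0.1) -> Tuple[str, float]:
    """Serve a prediction and report which version produced it."""
    version = choose_version(user_id, share_b)
    return version, MODELS[version](x)


routed = [choose_version(f"user-{i}", share_b=0.1) for i in range(10_000)]
print(routed.count("B") / len(routed))  # close to 0.1
```

Logging the returned version alongside each prediction is what later allows the two versions' results to be compared.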

Benefits of a machine learning pipeline include:

- Provides a comprehensive view of the entire series of phases by mapping a complex process that incorporates input from various specialties.

- Allows individual phases to be automated by focusing on one step of the sequence at a time; these phases can then be integrated into machine learning pipelines, increasing productivity and automating processes.

- Makes it easy to debug the entire code and trace an issue to the particular step where it occurs.

- Keeps pipeline components modular, so they are easy to deploy and can be scaled up as necessary.

- Allows multiple pipelines to be reliably coordinated across heterogeneous system resources and different storage locations.
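The debugging benefit comes from each phase being a separate unit. A minimal sketch, assuming steps are plain Python functions: a runner wraps each step so that any failure is reported together with the name of the step that raised it. `run_pipeline`, `StepError` and the toy steps are illustrative names, not part of any particular framework.

```python
from typing import Any, Callable, List, Tuple


class StepError(RuntimeError):
    """Raised when a pipeline step fails; records which step it was."""

    def __init__(self, step: str, cause: Exception):
        super().__init__(f"step {step!r} failed: {cause!r}")
        self.step = step


def run_pipeline(steps: List[Tuple[str, Callable[[Any], Any]]], data: Any) -> Any:
    """Run steps in order and attribute any exception to the step that raised it."""
    for name, func in steps:
        try:
            data = func(data)
        except Exception as exc:
            raise StepError(name, exc) from exc
    return data


# Hypothetical toy steps: parse, scale by the maximum, then average.
steps = [
    ("ingest", lambda raw: [float(x) for x in raw.split(",")]),
    ("scale", lambda xs: [x / max(xs) for x in xs]),
    ("model", lambda xs: sum(xs) / len(xs)),
]

try:
    run_pipeline(steps, "0,0,0")  # max(xs) == 0, so "scale" divides by zero
except StepError as err:
    print(err.step)  # scale
```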

Each machine learning pipeline will be slightly different depending on the model's use case and the organization using it. However, since pipelines generally follow a typical machine learning lifecycle, the same factors must be taken into account when developing any machine learning pipeline. The first step is to identify the various phases of machine learning involved and divide each phase into distinct modules. A modular approach makes it easier to concentrate on the individual parts of the pipeline and to enhance each component gradually.

Softnautics, with its AI engineering and machine learning services, helps businesses build intelligent solutions in the areas of computer vision, cognitive computing, artificial intelligence, and FPGA acceleration. We can handle a complete Machine Learning (ML) pipeline involving dataset, model development, optimization, testing, and deployment. We collaborate with organizations to develop high-performance cloud-to-edge machine learning solutions such as face/gesture recognition, people counting, object/lane detection, weapon detection, food classification, and more across a variety of platforms.
