LeapMind Unveils "Efficiera", the New Ultra Low Power AI Inference Accelerator IP

The company drives creation of embedded AI products by providing accelerators featuring superior power and area efficiency

May 18, 2020 – Tokyo, Japan - LeapMind Incorporated (Shibuya-ku, Tokyo, Japan; CEO: Soichi Matsuda), the industry-leading provider of business solutions built around deep learning technology, today announced that it has developed "Efficiera", an ultra low power AI inference accelerator IP for companies that design ASIC and FPGA circuits and other related products. LeapMind plans to ship "Efficiera" in the fall of 2020. "Efficiera" will enable customers to develop cost-effective, low power edge devices and accelerate the time to market of custom devices featuring AI capabilities.

■ Overview of Efficiera and related products

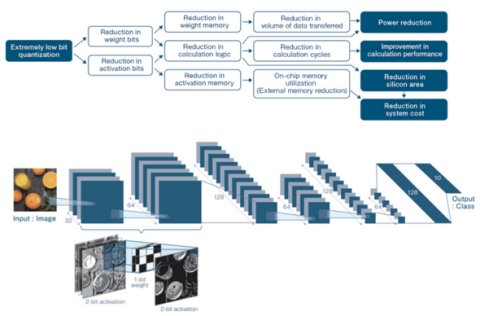

"Efficiera" is an Ultra Low Power AI Inference Accelerator IP specialized for Convolutional Neural Network (CNN)(1) inference calculation processing; it functions as a circuit in an FPGA or ASIC device. Its "Extremely low bit Quantization" technology, which minimizes the number of quantized bits to 1–2 bits, does not require cutting-edge semiconductor manufacturing processes or the use of specialized cell libraries to maximize the power and space efficiency associated with convolution operations, which account for a majority of inference processing.

This product enables the inclusion of deep learning capabilities in various edge devices that are technologically limited by power consumption and cost, such as consumer appliances (household electrical goods), industrial machinery (construction equipment), surveillance cameras, and broadcasting equipment as well as miniature machinery and robots with limited heat dissipation capabilities.

LeapMind is simultaneously launching several related products and services: "Efficiera SDK," a software development tool providing a dedicated learning and development environment for Efficiera, the "Efficiera Deep Learning Model" for efficient training of deep learning models, and "Efficiera Professional Services," an application-specific semi-custom model building service based on LeapMind's expertise that enables customers to build extremely low bit quantized deep learning models applicable to their own unique requirements.

Official product website URL: https://leapmind.io/business/ip/

Target Applications

(1) "Hazard Proximity Detection" using object detection

Helps ensure safety when using industrial vehicles such as construction machinery, by detecting surrounding people and obstacles.

(2) "High quality video streaming" using noise reduction

Improves image quality by eliminating image noise when shooting under low-light conditions and by blocking noise caused by image codecs.

(3) "Higher resolution for video footage" using super-resolution

Converts low-resolution video data into resolutions suitable for display devices.

Photo caption: Target applications

■ Advantages of Efficiera

"Efficiera" offers excellent power and space efficiency, contributing to power savings and cost reductions in AI-equipped products. In addition, because the circuit information is licensed rather than provided as a module or device, customers can integrate Efficiera into a device alongside other circuits, thereby reducing BoM (Bill of Materials) costs for mass-produced products equipped with AI capabilities. The circuit configuration offers the following advantages:

(1) Power savings: The power required for convolutional processing is reduced by minimizing the amount of data transfer and the number of bits.

(2) Performance: The number of calculation cycles can be reduced by reducing the calculation logic, thereby improving calculation performance relative to area and on a per cycle basis.

(3) Space savings: The silicon area is reduced while maintaining performance by reducing the calculation logic using 1–2 bit quantization; thus, the area per computing unit is minimized.
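To illustrate why 1–2 bit values can shrink the calculation logic, the following is a minimal sketch of a dot product between 1-bit weights and 2-bit activations computed with bitwise AND and bit counting instead of multipliers. It is a generic textbook-style illustration and not a description of the Efficiera circuit; the encoding (weights as -1/+1, activations as unsigned codes 0 to 3) and all function names are assumptions made for this example.

```python
def pack_bits(bits):
    """Pack a list of 0/1 values into one integer (element i goes to bit i)."""
    word = 0
    for i, b in enumerate(bits):
        word |= (b & 1) << i
    return word


def popcount(x):
    """Count the bits that are set in a non-negative integer."""
    return bin(x).count("1")


def dot_1bit_w_2bit_a(weights, activations):
    """Dot product of -1/+1 weights and 0..3 activations without multiplies.

    Assumed encoding (illustrative only): weight +1 -> bit 1, weight -1 -> bit 0.
    Each activation is split into two bit planes, a = a0 + 2 * a1.
    For one plane, sum(w_i * a_i) = 2 * popcount(W & A) - popcount(A).
    """
    w_bits = pack_bits([1 if w > 0 else 0 for w in weights])
    total = 0
    for k in range(2):  # two bit planes for 2-bit activations
        plane = pack_bits([(a >> k) & 1 for a in activations])
        total += (1 << k) * (2 * popcount(w_bits & plane) - popcount(plane))
    return total


def dot_reference(weights, activations):
    """Ordinary multiply-accumulate, used only to check the bitwise version."""
    return sum(w * a for w, a in zip(weights, activations))


if __name__ == "__main__":
    w = [1, -1, 1, 1, -1, -1, 1, -1]
    a = [3, 0, 2, 1, 3, 2, 0, 1]
    print(dot_1bit_w_2bit_a(w, a), dot_reference(w, a))
```

In this sketch, each group of multiply-accumulate steps collapses into one AND and two bit counts per bit plane, which is the kind of logic reduction the points above refer to.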

* The performance estimate (PPA(2)) results during the development stage were measured in cooperation with Alchip Technologies, Limited, and announced at the "COOL Chips 23" international computer conference on April 17th, 2020. These results are featured in the following press release.

▼ LeapMind announces performance results for its in-house developed AI inference accelerator at COOL Chips 23

URL: https://leapmind.io/news/content/4991/

■ Development background

Artificial Intelligence technology, particularly deep learning, has received significant attention in recent years. Deep learning has dramatically improved classification accuracy in several fields, including image and audio processing. However, vast computing resources (significant quantities of memory and high processing power) are required to perform the necessary calculations. Hence, it is difficult to perform these calculations on edge devices (terminals) with limitations in power draw, cost, and thermal management.(3) To solve this problem, LeapMind has been engaged in research and development aimed at reducing the load of deep learning models by focusing on a method called "extremely low bit quantization technology," based on which Efficiera was developed. Hereafter, we plan to continue this development with the goal of enabling the incorporation of image recognition capabilities in all types of edge devices.

■ The technology behind Efficiera: LeapMind's proprietary "extremely low bit quantization technology"

LeapMind is developing a technology called "extremely low bit quantization technology" to reduce the load of deep learning models. In general, numerical representations with wide bit widths of 16 or 32 bits (the FP16 and FP32 data types) improve the accuracy of inference results, but they also increase the size (area) of the calculation circuits, the processing time, and the power consumption. Conversely, reducing the bit width to 1–2 bits shrinks the circuit scale and cuts both processing time and power consumption, but it normally lowers accuracy, which is the main obstacle to reducing power and area. With its extremely low bit quantization technology, LeapMind achieves extreme quantization, such as 1-bit weights (weight coefficients) and 2-bit activations (intermediate data), while maintaining accuracy and significantly reducing the model area, thereby maximizing speed, power efficiency, and space efficiency.
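As a rough illustration of what 1-bit weights and 2-bit activations mean in practice, the sketch below quantizes a few floating-point values and compares storage sizes. It uses a common generic scheme (sign plus a shared scale for weights, clipping plus uniform rounding for activations); this is an assumption for illustration and does not represent LeapMind's proprietary method, which is not described in this release. All function names and parameters are hypothetical.

```python
def quantize_weights_1bit(weights):
    """Map each weight to -1 or +1 and keep one shared scale (mean |w|)."""
    scale = sum(abs(w) for w in weights) / len(weights)
    signs = [1 if w >= 0 else -1 for w in weights]
    return signs, scale


def quantize_activations_2bit(activations, max_value=1.0):
    """Clip to [0, max_value] and round to one of four codes (0, 1, 2, 3)."""
    levels = 3  # highest code representable in 2 bits
    codes = []
    for a in activations:
        clipped = min(max(a, 0.0), max_value)
        codes.append(int(clipped / max_value * levels + 0.5))  # round half up
    step = max_value / levels  # real value represented by one code step
    return codes, step


def storage_bits(count, bits_per_value):
    """Total bits needed to store `count` values at the given bit width."""
    return count * bits_per_value


if __name__ == "__main__":
    signs, scale = quantize_weights_1bit([0.4, -0.2, 0.6, -0.8])
    codes, step = quantize_activations_2bit([-0.2, 0.1, 0.5, 0.9, 1.5])
    print(signs, scale)
    print(codes, step)
    # One million weights: FP32 versus 1-bit storage.
    print(storage_bits(1_000_000, 32) // storage_bits(1_000_000, 1))
```

Under these assumptions, weights stored at 1 bit occupy 1/32 of their FP32 size, which is where the reductions in data transfer and circuit area come from; preserving accuracy at such bit widths is the hard part that the text above attributes to LeapMind's technology.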

Note to editors

(1) An abbreviation for “Convolutional Neural Network.” This is a type of deep learning architecture that is widely used for image and video recognition.

(2) This is an acronym for “Power, Performance, and Area.”

(3) In particular, power consumption is a particularly important challenge in edge devices with a limited power supply.

Moreover, there are several advantages to employing minimal quantization on edge devices, such as reducing delays and ensuring security.

About LeapMind Incorporated

Founded in 2012 with the company philosophy of “promoting new devices using machine learning technologies far and wide,” LeapMind Inc. has raised 4.99 billion yen in funding and specializes in extremely low bit quantization technology that makes deep learning compact. The company’s products are used by more than 150 companies, primarily in manufacturing industries such as automotive. LeapMind Inc. also develops semiconductor IP based on the company’s expertise in software and hardware.

Head Office: 150-0044 3F, Shibuya Dogenzaka Sky Bldg, 28-1 Maruyama-cho, Shibuya-ku, Tokyo, Japan

Representative: Soichi Matsuda, CEO

Established: December 2012

URL: https://leapmind.io
