Role of Time-of-Flight Sensors in Automotive

In recent posts, we explored the foundations of Time-of-Flight (ToF) technology and the role of Tensilica Vision DSPs in efficiently processing and decoding ToF sensor data. We then looked at how Artificial Intelligence (AI) enhances ToF applications, from improving depth quality through denoising to enabling scene understanding for object, gesture, and facial recognition. Now, let's connect these advances to the automotive industry, where ToF sensors paired with Vision DSPs are helping make vehicles both smarter and safer.

The automotive sector is undergoing a profound transformation, moving beyond basic detection to a comprehensive, real-time 3D understanding of both the external environment and the vehicle's interior. ToF is emerging as a pivotal technology, offering precise 3D depth and distance information essential for navigating complex environments and understanding in-cabin dynamics. This capability not only enhances existing features but also enables entirely new ones, from precise external environment mapping using LiDAR to granular in-cabin monitoring for child presence detection and gesture control.

ToF Sensors in Automotive Applications

Advanced Driver-Assistance Systems (ADAS)

ToF sensors can add a new dimension of perception to ADAS. Unlike a conventional 2D camera, which can only estimate depth indirectly, a ToF sensor measures how long emitted light takes to travel to an object and back, either directly or via the phase shift of modulated light, producing per-pixel depth that can be converted into 3D point clouds. Combined with other sensor modalities, particularly LiDAR (Light Detection and Ranging), ToF sensors contribute to a more accurate and comprehensive understanding of the vehicle's surroundings. This 3D data supports a range of ADAS features:

| System | Function | Benefits |
|---|---|---|
| Adaptive Cruise Control (ACC) | Maintain a safe following distance | Accurate distance measurement; smoother acceleration and deceleration |
| Lane-Keeping Assistance (LKA) and Lane Centering (LC) | Map lane boundaries and vehicle position | Greater accuracy and reliability; precise steering adjustments |
| Automatic Emergency Braking (AEB) | Object detection and ranging | Superior detection, earlier threat assessment, and reliable braking |
| Blind Spot Monitoring (BSM) and Rear Cross-Traffic Alert (RCTA) | Detect approaching vehicles or objects | High-resolution 3D mapping; enhanced safety |
| Parking Assistance Systems (PAS) and automated parking | 3D environmental mapping | Accurate obstacle measurement; precise parking |

In essence, ToF sensors offer a significant advance in ADAS perception. Their direct depth measurement, dense spatial coverage, and robustness to changing lighting conditions give a more complete and reliable picture of the driving environment, contributing to safer, more efficient, and ultimately more autonomous vehicles. They serve both as an alternative and as a powerful complement to existing sensor technologies, extending what ADAS can achieve.
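The ADAS discussion above rests on two basic computations: converting a measured round-trip time into distance (the light travels out and back, so d = c·t/2) and back-projecting a per-pixel depth map into a 3D point cloud. A minimal Python sketch of both follows, assuming an ideal pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, depth stored as Z-depth in metres, and zero depth marking pixels with no return. The function names are illustrative and not part of any sensor SDK.

```python
# Minimal sketch: round-trip time to distance, and depth map to point cloud.
# Assumptions (not from the original post): ideal pinhole camera model,
# depth values are Z-depth in metres, zero or negative depth marks invalid pixels.

SPEED_OF_LIGHT_M_S = 299_792_458.0


def distance_from_round_trip(t_round_trip_s: float) -> float:
    """Direct ToF: the light covers the sensor-to-object path twice."""
    return SPEED_OF_LIGHT_M_S * t_round_trip_s / 2.0


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map (list of rows) into camera-frame (x, y, z) points.

    fx, fy are focal lengths in pixels; (cx, cy) is the principal point.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:  # skip invalid / no-return pixels
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # A 100 ns round trip corresponds to roughly 15 m.
    print(distance_from_round_trip(100e-9))
    # Tiny 2x2 depth map with two valid pixels.
    print(depth_to_points([[0.0, 2.0], [1.0, 0.0]], 1.0, 1.0, 0.5, 0.5))
```

Many ToF cameras report radial distance along each pixel's ray rather than Z-depth; such output would need converting before this back-projection applies.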

Autonomous Driving (AD)

Fully autonomous vehicles need a sensing suite that is both robust and highly redundant, so that operation can continue safely even if one sensor degrades. ToF sensors contribute to this suite by delivering real-time, high-resolution depth maps that complement data from radar, LiDAR, and cameras. Integrating ToF data improves the vehicle's obstacle avoidance, path planning, and overall situational awareness. This matters most in difficult lighting, where conventional cameras may struggle, and in scenarios that require fine-grained proximity detection that radar or LiDAR alone may not provide. Because ToF sensors supply their own illumination, their depth measurements depend less on ambient light than passive cameras do, which makes them valuable for reliable perception across diverse and dynamic driving environments.

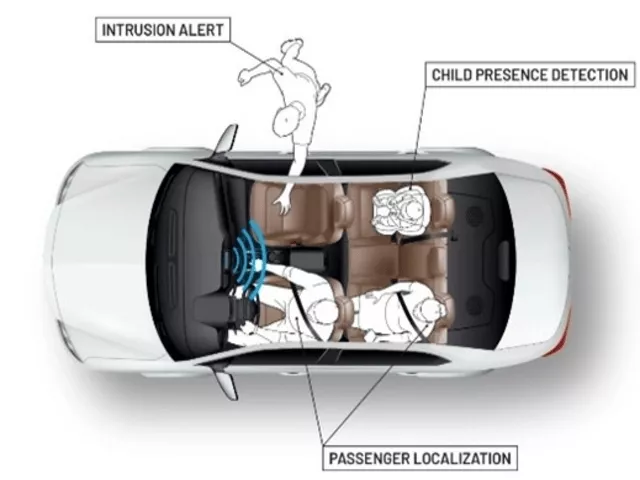

In-Cabin Sensing (ICS)

Inside the vehicle, ToF technology is poised to redefine the cabin experience, going beyond external sensing to create a more intuitive, safe, and personalized environment for occupants.

| Application | Description | Benefits | Future Iterations |
|---|---|---|---|
| Child Presence Detection | Reliable detection of infants and children using ToF cameras | Accurate identification even when partially obscured; strengthens safety systems | Integration with climate control systems to adjust cabin temperature |
| Occupant Monitoring | Monitoring of all vehicle occupants using ToF sensors | Tracks posture, verifies correct seatbelt use, and enables non-invasive vital-sign monitoring | Personalized airbag deployment based on occupant size, position, and proximity |
| Gesture Control | ToF-based gesture recognition for human-machine interaction | Control of infotainment or climate systems without touching buttons or screens | Cleaner, more ergonomic cabin design |
| Driver Monitoring Systems (DMS) | Enhances DMS by tracking head pose, gaze direction, and facial and body movements | Detects fatigue, drowsiness, and distraction; issues timely alerts to help prevent accidents | Personalized driver profiles that adapt to individual driving styles |

Tensilica Vision DSPs: The Engine for Automotive ToF Intelligence

The increasing sophistication of these ToF-driven automotive systems necessitates powerful, energy-efficient processing solutions capable of handling vast amounts of real-time sensor data. This is where Cadence Tensilica Vision DSPs become indispensable. As we've seen, these DSPs are specifically engineered for intensive vision and AI processing at the edge, offering a compelling solution for automotive applications.

- Efficient ToF decoding: Vision DSPs excel at the complex signal processing required for ToF decoding, including phase extraction, unwrapping, and filtering. Their parallel processing capabilities (SIMD and VLIW) and specialized instructions accelerate these computationally demanding tasks.

- Integrated AI acceleration: The automotive industry is rapidly adopting AI for enhanced perception. Tensilica Vision DSPs, particularly the Vision 331/341 series, are designed as efficient AI inference engines. They can run all neural network layers, including those required by transformer models, directly on the DSP core. This unified architecture minimizes power consumption and data movement, making them well suited to deploying AI algorithms for denoising, multipath interference (MPI) reduction, object detection, and gesture recognition at the edge within the vehicle.

- Meeting automotive safety standards: The integration of ToF and AI in critical automotive systems demands adherence to rigorous safety standards like ISO 26262. Tensilica Vision DSPs are designed with features and development flows that support achieving high Automotive Safety Integrity Levels (ASIL), crucial for ensuring the reliability and safety of these advanced systems.

- Power efficiency and performance: In automotive designs, power consumption and thermal management are critical. Vision DSPs provide a high-performance, low-power solution, enabling sophisticated 3D sensing capabilities without compromising overall system efficiency.
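The ToF-decoding bullet above lists phase extraction, unwrapping, and filtering. The sketch below shows what those steps compute for one pixel of a continuous-wave (indirect) ToF sensor, written in plain Python for readability rather than as DSP code. It assumes four phase-stepped samples per modulation frequency following a_k = B + A·cos(φ + k·90°), two assumed modulation frequencies (100 MHz and 80 MHz) for dual-frequency unwrapping solved by a brute-force search, and a simple amplitude threshold as the filter. All function names, including the hypothetical `simulate_samples` pixel model, are illustrative; they are not a Cadence or Tensilica API.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def phase_and_amplitude(a0, a90, a180, a270):
    """Four-phase demodulation, assuming a_k = B + A*cos(phi + k*90 degrees)."""
    i = a0 - a180    # equals 2*A*cos(phi)
    q = a270 - a90   # equals 2*A*sin(phi)
    phase = math.atan2(q, i) % (2.0 * math.pi)  # wrap into [0, 2*pi)
    amplitude = 0.5 * math.hypot(i, q)           # recovers A
    return phase, amplitude


def wrapped_depth(phase, f_mod):
    """Depth from a wrapped phase; ambiguous beyond c / (2 * f_mod)."""
    return C * phase / (4.0 * math.pi * f_mod)


def unwrap_two_freq(d1, f1, d2, f2, max_range):
    """Brute-force dual-frequency unwrapping.

    Try every wrap count up to max_range for each frequency, keep the pair
    whose unwrapped depths agree best, and return their average.
    """
    r1, r2 = C / (2.0 * f1), C / (2.0 * f2)  # per-frequency unambiguous ranges
    best_err, best_depth = math.inf, None
    for n1 in range(int(max_range // r1) + 1):
        for n2 in range(int(max_range // r2) + 1):
            c1, c2 = d1 + n1 * r1, d2 + n2 * r2
            if abs(c1 - c2) < best_err:
                best_err, best_depth = abs(c1 - c2), 0.5 * (c1 + c2)
    return best_depth


def amplitude_filter(depths, amplitudes, min_amplitude):
    """Confidence filter: mark weak-signal pixels as invalid (None)."""
    return [d if a >= min_amplitude else None for d, a in zip(depths, amplitudes)]


def simulate_samples(true_depth, f_mod, offset=100.0, amp=50.0):
    """Hypothetical noise-free pixel model, used only to exercise the sketch."""
    phi = 4.0 * math.pi * f_mod * true_depth / C
    return [offset + amp * math.cos(phi + k * math.pi / 2.0) for k in range(4)]


if __name__ == "__main__":
    f1, f2 = 100e6, 80e6  # assumed modulation frequencies
    p1, amp1 = phase_and_amplitude(*simulate_samples(5.0, f1))
    p2, amp2 = phase_and_amplitude(*simulate_samples(5.0, f2))
    d1, d2 = wrapped_depth(p1, f1), wrapped_depth(p2, f2)
    depth = unwrap_two_freq(d1, f1, d2, f2, max_range=7.0)
    print(d1, d2, depth)  # wrapped depths are ambiguous; the unwrapped one is ~5 m
    print(amplitude_filter([depth], [amp1], min_amplitude=10.0))
```

Every step is independent per pixel, which is why this workload maps well onto the SIMD and VLIW parallelism described above. With the assumed frequencies, the combined unambiguous range is c / (2 × 20 MHz), about 7.5 m, so `max_range` must stay below that. A production pipeline would also handle noise, multipath interference, and calibration, none of which this sketch models.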

The Road Ahead

Combining Time-of-Flight sensors with Tensilica Vision DSPs' AI capabilities is transforming vehicle safety and user interaction. This integration enables vehicles to interpret their surroundings more effectively, supporting safer and smarter autonomous driving. As automotive technology shifts toward software- and AI-driven systems, ToF sensors and Vision DSPs will become increasingly essential.
