Enhancing Edge AI with the Newest Class of Processor: Tensilica NeuroEdge 130 AICP

Artificial intelligence is rapidly expanding its reach into embedded systems and edge devices, driving the need for specialized processors that can efficiently handle complex AI workloads. While Neural Processing Units (NPUs) excel at accelerating neural network computations, the increasing demands of agentic and physical AI networks require a more comprehensive approach. This is where the Cadence Tensilica NeuroEdge 130 AI Co-Processor (AICP) comes in, designed to complement any NPU and enable end-to-end execution of these advanced AI applications.

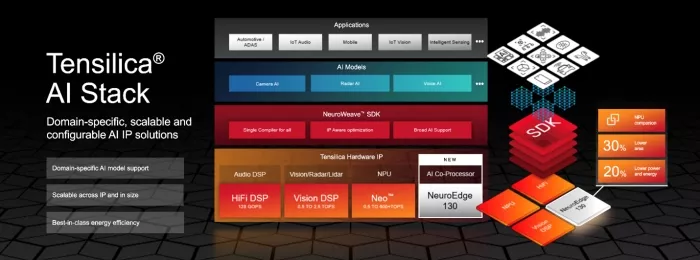

The NeuroEdge 130 AICP is a new class of processor built on the proven architecture of the Tensilica Vision DSP family with 30% lower area and 20% lower power and energy compared to the Vision DSPs. It is specifically engineered to handle the crucial pre-processing tasks for AI workloads, freeing up the NPU to focus on its core strengths in neural network inference. This heterogeneous processing approach leverages the strengths of both processor types, leading to optimized performance and efficiency in embedded AI systems.

But Why a Co-Processor for NPUs? Can't We Use a More Powerful NPU?

Sure, you could use a more powerful NPU. But ultimately, NPUs are optimized for the parallel processing of neural network computations, but they may not be as efficient or flexible for the diverse pre-processing tasks required by real-world AI applications. Also, these neural networks are composed of operations such as softmax, sigmoid, batch normalization, to name just a few, that are not suitable for execution on an NPU. Offloading these computationally intensive tasks to a specialized co-processor like the NeuroEdge 130 AICP offers significant advantages.

- Computational Offloading: By handling pre-processing tasks, these units allow the Neural Processing Unit (NPU) to dedicate its resources to demanding neural network inference.

- Performance and Latency Improvements: Parallel processing and efficient data handling within the signal processing unit reduce delays throughout the AI processing pipeline, leading to enhanced overall system performance.

- Energy Efficiency: Designed specifically for signal processing, these units consume less power for these operations compared to NPUs or general-purpose CPUs.

- Adaptability and Longevity: Their programmable and extensible nature enables customization and ensures they can adapt to future advancements in AI workloads.

What Makes NeuroEdge AICP So Special?

The NeuroEdge 130 AICP features a Very Long Instruction Word (VLIW) and Single Instruction, Multiple Data (SIMD) architecture, enabling a high degree of parallelism essential for efficiently handling data-intensive pre-processing stages like pixel-level computations. A key feature is its wide memory bus, capable of reaching up to 512 bits, which facilitates the rapid transfer of high-bandwidth data, avoiding bottlenecks in the AI pipeline. An integrated Direct Memory Access (DMA) engine further enhances efficiency by managing data movement without constant processor core intervention, reducing data access latency crucial for real-time pre-processing.

What Tasks Are Targeted for NeuroEdge 130 AICP to Handle?

The NeuroEdge 130 AICP can handle a wide range of pre-processing tasks, including:

- Non-Linear Operations: Accelerating non-linear operations such as ReLU, sigmoid, tanh, etc, by taking advantage of the optimized ISA and instructions specific for AI networks.

- Data Format Transformation: Efficiently converting data between different formats and handling various data types (8-bit to 32-bit, fixed-point and floating-point).

- Region of Interest (ROI) Extraction and Data Reduction: Identifying and extracting relevant regions and reducing the amount of data sent to the NPU.

Software Ecosystem

Cadence provides the NeuroWeave Software Development Kit (SDK) to facilitate the integration of NeuroEdge with NPUs, simplifying the development and deployment of AI applications on heterogeneous systems. Optimized libraries and neural network mapper toolsets further accelerate development and streamline the deployment of AI functionalities.

Beyond its architectural strengths, the NeuroEdge 130 AICP is highly programmable and extensible. It can be programmed with standard C/C++ and supports the Tensilica Instruction Extension (TIE) language for custom instruction sets, allowing developers to optimize the co-processor for specific pre-processing algorithms.

The NeuroEdge 130 AICP is well-suited for various real-world applications, including ADAS, surveillance systems, and industrial applications leveraging sensor fusion. The Tensilica NeuroEdge 130 AICP offers a compelling solution for the challenges of implementing complex AI workloads in embedded systems and edge devices, providing a high-performance, energy-efficient, and flexible platform for accelerating physical AI applications.

Learn more about Cadence's latest processor and how it's solving tomorrow's AI problems at the Embedded Vision Summit 2025. Amol Borkar, Product Marketing Director at Cadence, will present on Wednesday, May 21, at 1:30pm.

Learn more about the Tensilica NeuroEdge 130 AICP.

Related Semiconductor IP

- Dataflow AI Processor IP

- Powerful AI processor

- AI Processor Accelerator

- High-performance AI dataflow processor with scalable vector compute capabilities

- AI DSA Processor - 9-Stage Pipeline, Dual-issue

Related Blogs

- The Future of Technology: Transforming Industrial IoT with Edge AI and AR

- Upgrade the Raspberry Pi for AI with a Neuromorphic Processor

- Pushing the Boundaries of Memory: What’s New with Weebit and AI

- A New Era for Edge AI: Codasip’s Custom Vector Processor Drives the SYCLOPS Mission

Latest Blogs

- Leadership in CAN XL strengthens Bosch’s position in vehicle communication

- Validating UPLI Protocol Across Topologies with Cadence UALink VIP

- Cadence Tapes Out 32GT/s UCIe IP Subsystem on Samsung 4nm Technology

- LPDDR6 vs. LPDDR5 and LPDDR5X: What’s the Difference?

- DEEPX, Rambus, and Samsung Foundry Collaborate to Enable Efficient Edge Inferencing Applications