How a 16Gbps Multi-link, Multi-protocol SerDes PHY Can Transform Datacenter Connectivity

By Steve Brown, Cadence

The focus in mobile has increasingly shifted to cloud datacenters and the networking that moves data back and forth between those datacenters and the mobile device. Functions like voice recognition and mapping depend on splitting the work between the smartphone, which handles local processing such as encryption and compression, and the back end, where a large number of servers do the heavier lifting before returning the results.

There is also tremendous value in gathering and analyzing large data sets, such as information about consumer buying patterns and business trends. Big Data companies use frameworks such as Hadoop to automatically partition the data and computation, distribute right-sized tasks across the compute farm, analyze each data partition, and recombine the partial results into aggregated results.

Supporting this data-centric focus is an equipment market made up of several sub-segments:

- Compute

- Wired networks (Internet)

- Wireless and access (cellular, WiFi, etc.)

- Storage

How Will Zettabytes of Data Impact Compute Functions?

According to Cisco, “global IP traffic will pass the zettabyte (1000 exabytes) threshold by the end of 2016, and will reach 2 zettabytes per year by 2019” [1]. A large portion of this traffic consists of video data; Netflix downloads alone reportedly account for 37% of peak US Internet traffic [2]. How will these volumes impact the compute functions in a datacenter? Datacenters are hosting a lot of cloud software products and services, facilitating porting of applications to the cloud. The bulk of the compute cycles is spent on data analysis. The median dataset has grown from 6GB to 30GB [3]. Meanwhile, the data scientist is now considered the “sexiest job of the 21st century,” according to Forbes [4].

Datacenter Wired Networks

While the processor drives computation throughput, the wires between the machines are increasingly becoming a new kind of bottleneck. Large data analysis software partitions the algorithms and data across the datacenter compute servers, distributes and manages the jobs, and recombines the data. Some algorithms use data or results from “adjacent” partitions, so the speed of this inter-job communication can have a large impact on the overall throughput of the data analysis. This is one reason it can be beneficial to employ high-speed interfaces such as PCIe 4.0, 10G-KR, and USB 3.1 for server-to-server communication within the datacenter.

Datacenter Wireless and Access

Wireless networking, whether cellular or WiFi, depends on a mixture of radio interfaces (sometimes called the air interface) and wired infrastructure that connects to the Internet and backhauls traffic. Several trends are changing basestation architecture to optimize throughput.

Cloud-RAN (Radio Access Network), or C-RAN, is a particularly interesting candidate technology. By moving part of the basestation processing, with all the DSP and other processing required, out to the antennas, packets are expedited onto the backhaul. The challenge is that high-performance wireless standards such as LTE have latency requirements, not just throughput requirements. Because of the differing distances to the various basestations, the economics of the architecture may prove to be prohibitive.

Datacenter Storage

In storage, the biggest trend is probably the transition from rotating media to SSDs (solid-state drives) based on Flash memory. Scaling this technology from a notebook to the kind of functionality needed in large datacenters processing video and large datasets is challenging, mainly because Flash memory bits can only be written a limited number of times.

PCIe Gen4 for Datacenters

For datacenter planners and enterprise software architects, PCIe Gen4 is transforming servers and virtualization possibilities. The interface doubles the per-lane signaling rate of PCIe Gen3, from 8 GT/s to 16 GT/s, which raises the bandwidth available from server to server, switch to switch, and server to storage. That in turn enables even larger dataset analysis and more parallelism in other complex cloud services.
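To put a number on the bandwidth increase, the per-lane and per-link figures can be worked out from the signaling rate and the line encoding. The sketch below is a back-of-the-envelope calculation, not a vendor figure: it uses the standard 8 GT/s (Gen3) and 16 GT/s (Gen4) rates with 128b/130b encoding, and it ignores packet and protocol overhead, so real throughput will be lower. The function names are illustrative only.

```python
# Raw per-lane signaling rates in gigatransfers per second (GT/s).
RATE_GT_S = {"PCIe Gen3": 8.0, "PCIe Gen4": 16.0}

# Gen3 and Gen4 both use 128b/130b line encoding:
# 128 payload bits are carried in every 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130


def lane_bandwidth_gbytes(rate_gt_s):
    """Bandwidth of one lane, one direction, in GB/s (before protocol overhead)."""
    return rate_gt_s * ENCODING_EFFICIENCY / 8  # 8 bits per byte


def link_bandwidth_gbytes(rate_gt_s, lanes):
    """Bandwidth of a link with the given lane count, one direction, in GB/s."""
    return lane_bandwidth_gbytes(rate_gt_s) * lanes


if __name__ == "__main__":
    for name, rate in RATE_GT_S.items():
        print(f"{name}: x1 = {lane_bandwidth_gbytes(rate):.2f} GB/s, "
              f"x16 = {link_bandwidth_gbytes(rate, 16):.1f} GB/s")
```

Under these assumptions a Gen4 lane carries roughly 1.97 GB/s in each direction, and a x16 link about 31.5 GB/s, twice the Gen3 figures.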

High-speed SerDes technology is used to implement these connections, and it is often implemented in advanced process nodes such as 14/16 nm. However, it is increasingly difficult to create robust SerDes designs while meeting short project timescales.

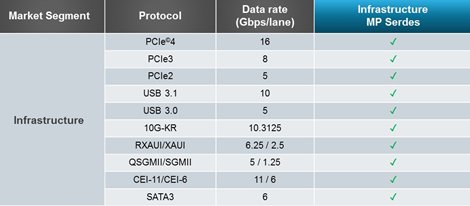

An alternative to in-house design is partnering with a leading IP provider. Cadence, for example, provides a 16Gbps PHY that supports multiple protocols (see Figure 1). Cadence is also the first to provide multi-link SerDes IP that supports different protocols running on links in the same bundle, or macro, of SerDes. This means that each link can be configured to one of the 14 protocols supported by the PHY. Two PLLs are embedded in each common block so that different protocols can be supported simultaneously.

Figure 1: Cadence 16Gbps multi-protocol PHY
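One way to picture a multi-link macro is as a map from lane ranges to protocols, checked against the shared PLL resources. The sketch below is a simplified planning model and not the Cadence configuration interface. The `LinkConfig` and `validate_bundle` names are hypothetical, the protocol table lists only a few well-known line rates rather than the PHY's full set of 14, and the rule that each distinct line rate occupies one PLL is a simplifying assumption (a real PLL can often serve several rates through dividers).

```python
from dataclasses import dataclass

# Nominal line rates in Gbps for a few common protocols (illustrative subset).
LINE_RATE_GBPS = {
    "PCIe Gen3": 8.0,
    "PCIe Gen4": 16.0,
    "10G-KR": 10.3125,
    "USB 3.1 Gen2": 10.0,
}

PLLS_PER_COMMON_BLOCK = 2  # stated in the article


@dataclass(frozen=True)
class LinkConfig:
    """Hypothetical description of one link inside a SerDes macro."""
    first_lane: int
    lane_count: int
    protocol: str


def validate_bundle(links, total_lanes):
    """Return a list of problems with the link map (empty if none were found)."""
    problems = []
    used = set()
    for link in links:
        if link.protocol not in LINE_RATE_GBPS:
            problems.append(f"unknown protocol: {link.protocol}")
        for lane in range(link.first_lane, link.first_lane + link.lane_count):
            if lane < 0 or lane >= total_lanes:
                problems.append(f"lane {lane} is outside the macro")
            elif lane in used:
                problems.append(f"lane {lane} is assigned twice")
            used.add(lane)
    # ASSUMPTION: every distinct line rate needs its own PLL.
    rates = {LINE_RATE_GBPS[l.protocol] for l in links
             if l.protocol in LINE_RATE_GBPS}
    if len(rates) > PLLS_PER_COMMON_BLOCK:
        problems.append(
            f"{len(rates)} distinct rates requested, "
            f"only {PLLS_PER_COMMON_BLOCK} PLLs available")
    return problems


if __name__ == "__main__":
    mixed = [LinkConfig(0, 2, "PCIe Gen4"), LinkConfig(2, 2, "10G-KR")]
    print(validate_bundle(mixed, total_lanes=4))  # prints []
```

In this model a four-lane macro split into a x2 PCIe Gen4 link and a x2 10G-KR link passes the check, while adding a third link at a third rate would be flagged.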

In addition, this configuration is software-controlled through registers. While the SoC is running, the SerDes can be quickly halted, reconfigured, reset, and restarted. The PHY also offers long reach and high power efficiency for green datacenters.
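The halt, reconfigure, reset, and restart sequence can be sketched as a series of register writes. Everything specific in this example is hypothetical: the register offsets, bit meanings, protocol codes, per-link address stride, and the `FakePhyRegisters` class are invented for illustration and do not describe the actual Cadence register map. Real firmware would also typically poll status bits (for example, PLL lock) between steps.

```python
class FakePhyRegisters:
    """In-memory stand-in for a memory-mapped register file (hypothetical)."""

    def __init__(self):
        self.regs = {}
        self.log = []  # ordered record of (address, value) writes

    def write(self, addr, value):
        self.regs[addr] = value
        self.log.append((addr, value))

    def read(self, addr):
        return self.regs.get(addr, 0)


# Hypothetical register offsets within one link's register window.
LINK_CTRL = 0x00      # bit 0: 1 = link running, 0 = halted
LINK_PROTOCOL = 0x04  # protocol select code
LINK_RESET = 0x08     # bit 0: 1 = hold link in reset

# Hypothetical protocol select codes.
PROTOCOL_CODE = {"PCIe Gen4": 0x1, "10G-KR": 0x2}

LINK_STRIDE = 0x100   # hypothetical address spacing between links


def reconfigure_link(regs, link, protocol):
    """Switch one link to a new protocol while the rest of the SoC keeps running."""
    base = link * LINK_STRIDE
    regs.write(base + LINK_CTRL, 0)                            # 1. halt
    regs.write(base + LINK_PROTOCOL, PROTOCOL_CODE[protocol])  # 2. reconfigure
    regs.write(base + LINK_RESET, 1)                           # 3. assert reset
    regs.write(base + LINK_RESET, 0)                           #    release reset
    regs.write(base + LINK_CTRL, 1)                            # 4. restart


if __name__ == "__main__":
    regs = FakePhyRegisters()
    reconfigure_link(regs, link=1, protocol="10G-KR")
    for addr, value in regs.log:
        print(f"write 0x{addr:03x} <- 0x{value:x}")
```

Only the targeted link's register window is touched, which mirrors the idea that one link can change protocol without disturbing its neighbors.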

Demo at DesignCon

Cadence will be demonstrating a PCIe Gen4 testchip operating at 16Gbps at the 2016 DesignCon Expo, held at the Santa Clara Convention Center this week.

About the Author

Steve Brown is Director of Product Marketing, IP Group, at Cadence Design Systems. He has held senior marketing and engineering management positions at Cadence, Verisity, Synopsys, and Mentor Graphics. He specializes in creating product and marketing plans to enter markets and drive products to positions of market leadership. Steve earned BSEE and MSEE degrees from Oregon State University and certificates in leadership and marketing strategy at Stanford, Berkeley, Harvard, Kellogg, and Wharton.

