Neural Network Accelerator IP

Compare 42 IP from 16 vendors (1 - 10)

Neural Network Accelerator

- The Neural Network Accelerator (NACC) improves the inference performance of neural networks.

- The NACC operates on INT8 data and accelerates im2col, convolution, depthwise convolution, average pooling, max pooling, fully connected, activation, and matrix multiplication operations.
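
To illustrate how im2col turns a convolution into the kind of matrix multiplication such an accelerator handles, here is a minimal pure-Python sketch. It assumes stride 1, no padding, and a single channel; the function names `im2col` and `conv2d_int8` are hypothetical and do not come from the NACC documentation, and the listing does not state how the hardware actually implements these steps.

```python
INT8_MIN, INT8_MAX = -128, 127


def im2col(image, kh, kw):
    """Unroll every kh x kw patch of a 2-D image into one row (stride 1, no padding)."""
    h, w = len(image), len(image[0])
    rows = []
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            rows.append([image[i + di][j + dj] for di in range(kh) for dj in range(kw)])
    return rows


def conv2d_int8(image, kernel):
    """Neural-network style convolution (cross-correlation) via im2col and dot products.

    Inputs must fit in INT8; Python integers act as a wide accumulator here,
    standing in for the wider accumulators typically used with INT8 data.
    """
    for row in image + kernel:
        for v in row:
            if not INT8_MIN <= v <= INT8_MAX:
                raise ValueError(f"value {v} is outside the INT8 range")
    kh, kw = len(kernel), len(kernel[0])
    flat_kernel = [v for row in kernel for v in row]
    out_w = len(image[0]) - kw + 1
    patches = im2col(image, kh, kw)
    # Each output pixel is one dot product: a patch row times the flattened kernel.
    flat = [sum(p * k for p, k in zip(patch, flat_kernel)) for patch in patches]
    return [flat[r * out_w:(r + 1) * out_w] for r in range(len(flat) // out_w)]


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [[1, 0], [0, -1]]
print(conv2d_int8(image, kernel))  # [[-4, -4], [-4, -4]]
```

Once the patches are unrolled, the whole layer reduces to one matrix product, which is why im2col and matrix multiplication tend to appear together in accelerator feature lists.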

PowerVR Neural Network Accelerator

- Flexible bit-depth data type support

- Lossless weight compression

- Advanced security enablement

PowerVR Neural Network Accelerator - The ultimate solution for high-end neural network acceleration

- Security

- Lossless weight compression

PowerVR Neural Network Accelerator - The perfect choice for cost-sensitive devices

- API support

- Framework Support

- Bus interface

- Memory system

PowerVR Neural Network Accelerator - The ideal choice for mid-range requirements

- API support

- Framework Support

- Bus interface

- Memory system

PowerVR Neural Network Accelerator - perfect choice for cost-sensitive devices

- API support

- Framework Support

- Bus interface

- Memory system

PowerVR Neural Network Accelerator - cost-sensitive solution for low power and smallest area

- API support

- Framework Support

- Bus interface

- Memory system

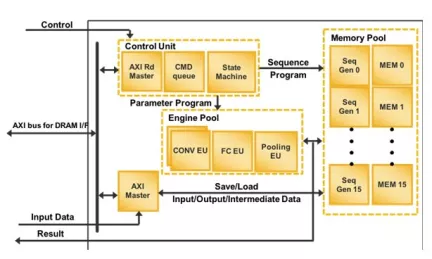

Run-time Reconfigurable Neural Network IP

- Customizable IP Implementation: Achieve desired performance (TOPS), size, and power for target implementation and process technology

- Optimized for Generative AI: Supports popular Generative AI models including LLMs and LVMs

- Efficient AI Compute: Achieves very high AI compute utilization, resulting in exceptional energy efficiency

- Real-Time Data Streaming: Optimized for low-latency operations with batch=1

Convolutional Neural Network (CNN) Compact Accelerator

- Supports convolution, max pooling, batch normalization, and fully connected layers

- Configurable weight bit width (16-bit or 1-bit)
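
As a rough illustration of what a 1-bit weight setting can mean, the sketch below binarizes weights to their sign and keeps one shared scale factor. This is a common binarization scheme chosen as an assumption; the listing does not say which scheme this IP uses, and the names `binarize` and `binary_dot` are hypothetical.

```python
def binarize(weights):
    """Reduce each weight to one bit (1 for non-negative, 0 for negative).

    A single scale (the mean absolute weight) is kept so the binary
    weights approximate the originals as +scale or -scale.
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    bits = [1 if w >= 0 else 0 for w in weights]
    return bits, scale


def binary_dot(activations, bits, scale):
    """Dot product with 1-bit weights: add or subtract each activation, then scale once."""
    acc = sum(a if b else -a for a, b in zip(activations, bits))
    return scale * acc


bits, scale = binarize([0.5, -0.25, 0.75, -0.5])
print(bits, scale)                             # [1, 0, 1, 0] 0.5
print(binary_dot([1, 2, 3, 4], bits, scale))   # -1.0
```

With 1-bit weights the multiplications disappear in favor of additions and subtractions, which is the usual motivation for offering such a mode alongside a wider format like 16-bit.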

Power efficient, high-performance neural network hardware IP for automotive embedded solutions

- Power efficient, high-performance

- For automotive embedded solutions