All-in-Memory Stochastic Computing using ReRAM

Abstract

As the demand for efficient, low-power computing in embedded and edge devices grows, traditional computing methods are becoming less effective for handling complex tasks. Stochastic computing (SC) offers a promising alternative by approximating complex arithmetic operations, such as addition and multiplication, using simple bitwise operations, like majority or AND, on random bit-streams. While SC operations are inherently fault-tolerant, their accuracy largely depends on the length and quality of the stochastic bit-streams (SBS). These bit-streams are typically generated by CMOS-based stochastic bit-stream generators that consume over 80% of the SC system’s power and area. Current SC solutions focus on optimizing the logic gates but often neglect the high cost of moving the bit-streams between memory and processor. This work leverages the physics of emerging ReRAM devices to implement the entire SC flow in place: ❶ generating low-cost true random numbers and SBSs, ❷ conducting SC operations, and ❸ converting SBSs back to binary. Considering the low reliability of ReRAM cells, we demonstrate how SC’s robustness to errors copes with ReRAM’s variability. Our evaluation shows significant improvements in throughput (1.39×, 2.16×) and energy consumption (1.15×, 2.8×) over state-of-the-art (CMOS- and ReRAM-based) solutions, respectively, with an average image quality drop of 5% across multiple SBS lengths and image processing tasks.

I. INTRODUCTION

The growing prevalence of embedded and edge devices has driven the demand for low-cost but efficient computing solutions. These devices, which often run complex applications like computer vision tasks in real-world environments, are constrained by computational resources and power budget, making traditional computing methods less effective. Stochastic computing (SC) and non-von Neumann paradigms have emerged as promising alternatives, offering trade-offs in computational density, energy efficiency, and error tolerance [1, 2, 3, 4].

In SC, data is represented by random bit-streams, where a value x ∈ [0,1] is encoded by the probability (Px) of a ‘1’ appearing in the stream. For example, the bit-stream ‘10101’ represents the value 3/5, where 5 is the bit-stream length (N). This unconventional representation enables complex computations like multiplication and addition to be approximated with simple logic operations such as AND and majority, respectively, substantially reducing area and power consumption without taking a high toll on computational accuracy. Additionally, since all bits in a bit-stream carry equal weight (there are no most- or least-significant bits), SC is naturally tolerant to noise, including bit flips and inaccuracies in the input data and computations. This makes SC particularly advantageous for a range of applications, including image processing [5], signal processing [6], and neural networks [7].
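To make the encoding concrete, the following is a minimal Python sketch of unipolar SC. It uses Python's pseudo-random generator purely for illustration (not a hardware RNG), and the helper names `encode`, `decode`, and `sc_multiply` are hypothetical. It checks the ‘10101’ example and multiplies two values with a bitwise AND of two independent streams.

```python
import random


def encode(x: float, n: int, rng: random.Random) -> list[int]:
    """Unipolar SC encoding: each of the n bits is 1 with probability x."""
    return [1 if rng.random() < x else 0 for _ in range(n)]


def decode(stream: list[int]) -> float:
    """Estimate the encoded value as the fraction of ones in the stream."""
    return sum(stream) / len(stream)


def sc_multiply(a: list[int], b: list[int]) -> list[int]:
    """Bitwise AND of two independent streams encodes the product of their values."""
    return [p & q for p, q in zip(a, b)]


# The example from the text: '10101' has three ones out of N = 5 bits.
print(decode([1, 0, 1, 0, 1]))  # 0.6

rng = random.Random(42)
n = 4096
a = encode(0.5, n, rng)
b = encode(0.6, n, rng)
print(decode(sc_multiply(a, b)))  # close to 0.5 * 0.6 = 0.3
```

Longer streams shrink the estimation error roughly as 1/√N, which is why accuracy depends on the bit-stream length.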

Stochastic bit-streams (SBSs) are conventionally generated using a CMOS-based structure, called a stochastic bit-stream generator, built from a pseudo-random (or, more recently, quasi-random [8]) number generator and a binary comparator. The accuracy and cost efficiency of SC systems depend heavily on this bit-stream generation unit. Presently, CMOS-based bit-stream generation accounts for up to 80% of the system’s total hardware cost and energy consumption [4, 9]. Additionally, SC implementations on classic von Neumann systems require extensive movement of bit-streams to and from memory, which is often overlooked in evaluations but can easily offset the benefits of the simpler SC operations. This has motivated significant research into non-von Neumann computing for SC using different memory technologies [10, 11, 12, 13, 14, 15, 16, 17].
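For reference, the conventional CMOS generator described above (pseudo-random source plus comparator) can be modeled in a few lines. This sketch assumes an 8-bit Galois LFSR with toggle mask 0xB8, a standard maximal-length choice, as the pseudo-random source; the function names `lfsr8` and `sbs_generator` are hypothetical and the model says nothing about hardware cost.

```python
from typing import Iterator


def lfsr8(seed: int) -> Iterator[int]:
    """8-bit Galois LFSR with toggle mask 0xB8 (maximal length, period 255)."""
    state = seed & 0xFF
    if state == 0:
        raise ValueError("an LFSR seed must be nonzero")
    while True:
        yield state
        lsb = state & 1
        state >>= 1
        if lsb:
            state ^= 0xB8


def sbs_generator(target: int, n: int, seed: int = 1) -> list[int]:
    """Comparator stage: emit 1 whenever the pseudo-random number is below target."""
    rng = lfsr8(seed)
    return [1 if next(rng) < target else 0 for _ in range(n)]


stream = sbs_generator(target=128, n=255)
print(sum(stream) / len(stream))  # 127/255, about 0.498
```

Over one full period the LFSR visits every nonzero 8-bit state once, so the stream holds exactly target − 1 ones and encodes roughly target/256. Reusing the same seed for two operands yields perfectly correlated streams, so an AND would return about min(x, y) rather than x·y; this is one reason correlation control matters.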

Most compute-in-memory (CIM) designs for SC use ReRAM, a nonvolatile memory technology that stores data in the resistance state of its devices [18]. ReRAM offers DRAM-comparable read latency, higher density, and significantly lower read energy, but it incurs expensive write operations, has limited write endurance, and suffers from non-linearities [19]. SC in ReRAM benefits from ReRAM’s high density for efficient storage and in-place processing of long bit-streams, while SC’s inherent robustness helps mitigate the effects of ReRAM’s non-linearities. In existing ReRAM-based CIM designs for SC, conventional CMOS-based random number generators (RNGs) are used for bit-stream generation, while ReRAM arrays handle in-place logic operations [10, 11, 12]. This increases parallelism and reduces data movement overhead, but the high cost of generating random bit-streams and SBSs remains a bottleneck. Techniques such as leveraging ReRAM’s inherent write noise [13] and employing DRAM-based lookup tables [20] have been explored to improve the performance of random bit-stream generation. However, SBS generation still faces challenges, including the high energy cost of ReRAM write operations and the limited scalability of DRAM-based methods.

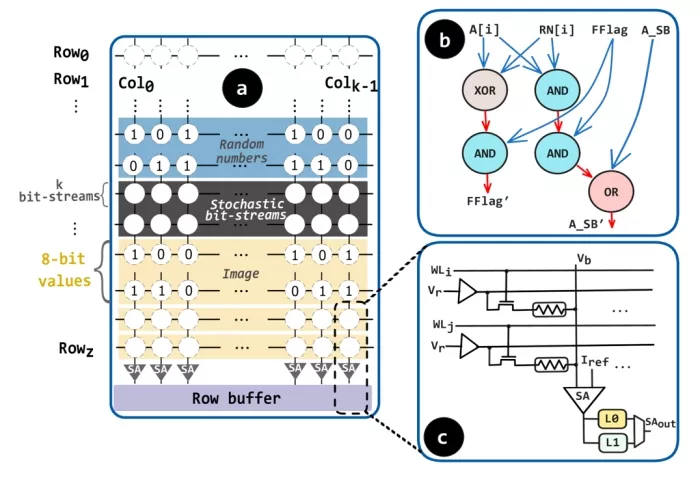

Figure 1: A high-level overview of our proposed in-memory SC solution: (a) ReRAM array, (b) Greater-than operation using basic logic gates, (c) Write latches in the peripheral circuitry.
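Figure 1(b) builds a greater-than operation from basic logic gates. As a hedged illustration of how such a comparison can carry the three-step flow (❶ SBS generation, ❷ SC operation, ❸ conversion to binary), the Python sketch below models each memory row as an n-bit integer and compares a binary target against k planes of unbiased random bits using only row-wide AND, OR, and NOT. Python's PRNG stands in for a ReRAM TRNG, and the bit-plane layout and the names `random_planes`, `greater_than_mask`, and `popcount` are assumptions, not the paper's circuit. Because each column holds a uniform k-bit number r, each output bit is 1 with probability target/2^k.

```python
import random


def random_planes(k: int, n: int, rng: random.Random) -> list[int]:
    """k rows of n unbiased random bits (50% ones), most significant plane first.

    Assumption: Python's PRNG stands in for a true RNG; each int models one row.
    """
    return [rng.getrandbits(n) for _ in range(k)]


def greater_than_mask(planes: list[int], target: int, n: int) -> int:
    """Bit j is 1 iff target > r_j, the k-bit number stored down column j.

    Uses only row-wide AND, OR and NOT, scanning from the MSB plane down.
    """
    mask = (1 << n) - 1
    k = len(planes)
    gt, eq = 0, mask  # no column decided yet; every column still equal so far
    for i, row in enumerate(planes):
        t_bit = (target >> (k - 1 - i)) & 1
        not_row = ~row & mask
        if t_bit:
            gt |= eq & not_row  # first difference: target has 1, r has 0
            eq &= row           # columns where r also has 1 stay equal
        else:
            eq &= not_row       # columns where r has 1 are now r > target
    return gt


def popcount(row: int) -> int:
    """Step 3: count the ones to convert an SBS back to a binary value."""
    return bin(row).count("1")


k, n = 8, 4096
rng = random.Random(7)
sbs_a = greater_than_mask(random_planes(k, n, rng), 96, n)   # step 1: encodes 96/256
sbs_b = greater_than_mask(random_planes(k, n, rng), 192, n)  # step 1: encodes 192/256
product = sbs_a & sbs_b                                      # step 2: AND multiplies
print(popcount(product) / n)  # close to 0.375 * 0.75 = 0.28125
```

Scanning from the most significant plane keeps only two running masks (`gt` and `eq`), so the number of row-wide operations grows linearly with k and is independent of the stream length n.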

To address these challenges, we propose a CIM accelerator that implements all steps of SC using ReRAM. We decouple the RNG from SBS generation, allowing compatibility with any RNG type, including general-purpose ReRAM-based true RNGs (TRNGs) [21]. This approach ensures (1) accurate SBS generation with target probabilities and (2) correlation control, despite ReRAM cell noise. We perform in-memory logic and comparison operations to convert true random numbers into SBSs (❶), conduct SC operations (❷), and convert the resulting values back into a binary representation (❸). Concretely, this work makes the following novel contributions:

- We propose a ReRAM-based accelerator for SC that implements all steps in place, including those often overlooked by current SC designs.

- We develop a novel in-memory method for converting true random binary sequences (50% ones) into SBSs with desired probabilities. To the best of our knowledge, this is the first such method reported in the literature.

- Our SBS generation approach is RNG-agnostic, leveraging in-memory comparison to produce SBSs even under substantial CIM failures caused by ReRAM variability.

- For SC operations typically implemented with MUXs, we propose novel alternatives that are CIM-friendly and achieve comparable accuracy.
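The last contribution replaces MUX-based operations, but this section does not detail the alternatives. The sketch below shows one well-known possibility, offered as an assumption rather than the authors' design: a three-input majority gate whose third input is an independent stream with probability 0.5 computes the same scaled sum as a MUX, because P(MAJ = 1) = xy + ½[x(1 − y) + (1 − x)y] = ½(x + y). The helper names are hypothetical.

```python
import random


def bernoulli_stream(p: float, n: int, rng: random.Random) -> list[int]:
    """n bits, each 1 with probability p."""
    return [1 if rng.random() < p else 0 for _ in range(n)]


def mux_add(a: list[int], b: list[int], sel: list[int]) -> list[int]:
    """Conventional scaled addition: pick a or b according to a 0.5 select stream."""
    return [bi if s else ai for ai, bi, s in zip(a, b, sel)]


def maj_add(a: list[int], b: list[int], r: list[int]) -> list[int]:
    """Majority-of-three with an independent 0.5 stream also yields (x + y) / 2."""
    return [1 if ai + bi + ri >= 2 else 0 for ai, bi, ri in zip(a, b, r)]


def value(stream: list[int]) -> float:
    return sum(stream) / len(stream)


rng = random.Random(3)
n = 8192
x, y = 0.2, 0.7
a = bernoulli_stream(x, n, rng)
b = bernoulli_stream(y, n, rng)
half = bernoulli_stream(0.5, n, rng)
print(value(mux_add(a, b, half)), value(maj_add(a, b, half)))  # both near 0.45
```

Majority is symmetric in its inputs and needs no select path, which makes it a natural candidate for bulk bitwise operations inside a memory array.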

Compared to state-of-the-art CMOS-based solutions, the proposed design requires only minimal changes to the memory periphery and, on average, reduces energy consumption by 1.15× and improves throughput by 1.39× across multiple image processing applications. Our design is also more robust than traditional arithmetic for CIM: in the presence of faults, it suffers only a 5% average quality drop, compared to a 47% drop with traditional arithmetic. It eliminates the need for protection schemes on unreliable ReRAM devices and provides better correlation control than previous in-memory SC designs.
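The robustness gap follows from the equal bit weighting of SC. A minimal illustration, using a hypothetical single-bit-flip model rather than the paper's fault model or evaluation: flip each bit of an encoded value in turn and record the worst-case error. In an 8-bit binary fraction a single flip can cost up to 0.5, whereas in a 256-bit SBS it costs at most 1/256.

```python
from typing import Callable


def binary_value(bits: list[int]) -> float:
    """Fixed-point fraction, MSB first: bit i weighs 2**-(i + 1)."""
    return sum(b / 2 ** (i + 1) for i, b in enumerate(bits))


def sc_value(stream: list[int]) -> float:
    """SC value: every bit weighs 1/N."""
    return sum(stream) / len(stream)


def worst_single_flip_error(bits: list[int], decode: Callable[[list[int]], float]) -> float:
    """Largest absolute value error caused by flipping any one bit."""
    base = decode(bits)
    worst = 0.0
    for i in range(len(bits)):
        flipped = list(bits)
        flipped[i] ^= 1
        worst = max(worst, abs(decode(flipped) - base))
    return worst


word = [1, 0, 0, 0, 0, 0, 0, 0]  # 0.5 as an 8-bit binary fraction
sbs = [1, 0] * 128               # 0.5 as a 256-bit SBS
print(worst_single_flip_error(word, binary_value))  # 0.5 (flipping the MSB)
print(worst_single_flip_error(sbs, sc_value))       # 0.00390625, i.e. 1/256
```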
