AI IP

789 IP from 140 vendors (1 - 10)

LLM AI IP Core

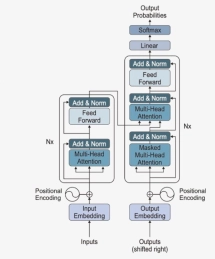

- For sophisticated workloads, DeepTransformCore is optimized for language and vision applications.

- Supporting both encoder and decoder transformer architectures, with flexible DRAM configurations and FPGA compatibility, DeepTransformCore removes the software integration burden so that customers can rapidly develop custom AI SoC (system-on-chip) designs.

RISC-V-Based, Open Source AI Accelerator for the Edge

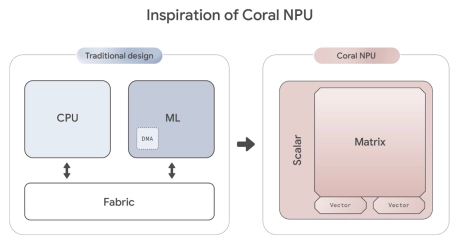

- Coral NPU is a machine learning (ML) accelerator core designed for energy-efficient AI at the edge.

- Based on the open-hardware RISC-V ISA, it is available as validated open-source IP for commercial silicon integration.

Dataflow AI Processor IP

- Revolutionary dataflow architecture optimized for AI workloads with spatial compute arrays, intelligent memory hierarchies, and runtime reconfigurable elements

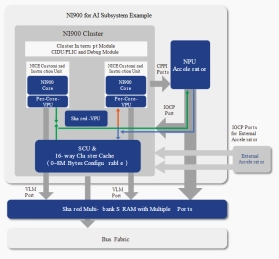

AI DSA Processor - 9-Stage Pipeline, Dual-issue

- NI900 is a DSA processor based on the 900 Series.

- NI900 is optimized with features specifically targeting AI applications.

224G SerDes PHY and controller for UALink for AI systems

- UALink, the standard for AI accelerator interconnects, helps AI systems scale by providing low-latency, high-bandwidth communication.

- As a member of the UALink Consortium, Cadence offers verified UALink IP subsystems, including controllers and silicon-proven PHYs, optimized for robust performance in both short and long-reach applications and delivering industry-leading power, performance, and area (PPA).

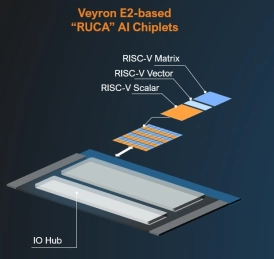

RISC-V AI Acceleration Platform - Scalable, standards-aligned soft chiplet IP

- Built on RISC-V and delivered as soft chiplet IP, the Veyron E2X provides scalable, standards-based AI acceleration that customers can integrate and customize freely.

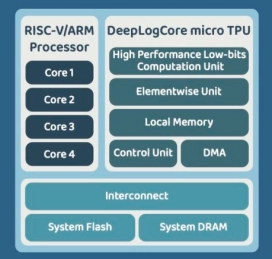

CNN AI IP Core

- DeepMentor has developed an AI IP that combines low-power and high-performance features with a RISC-V SoC.

- This integration allows customers to quickly create unique AI SoCs without worrying about software integration or system development issues.

- DeepLogCore supports both RISC-V and ARM systems, enabling faster and more flexible development.

AI SDK for Ceva-NeuPro NPUs

- Ceva-NeuPro Studio is a comprehensive software development environment designed to streamline the development and deployment of AI models on the Ceva-NeuPro NPUs.

- It offers a suite of tools optimized for the Ceva NPU architectures, providing network optimization, graph compilation, simulation, and emulation, so that developers can train, import, optimize, and deploy AI models with the highest efficiency and precision.

AI inference engine for real-time edge intelligence

- Flexible Models: Bring your own physical AI application, open-source model, or commercial model

- Easy Adoption: Based on the open-specification RISC-V ISA, which drives innovation and leverages the broad community of open-source and commercial tools

- Scalable Design: Turnkey enablement for AI inference compute from tens to thousands of TOPS

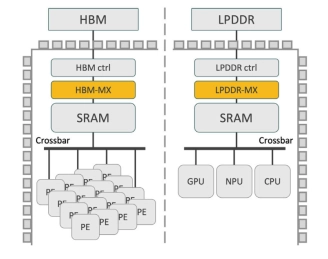

High-Performance Memory Expansion IP for AI Accelerators

- Expand Effective HBM Capacity by up to 50%

- Enhance AI Accelerator Throughput

- Boost Effective HBM Bandwidth

- Integrated Address Translation and memory management: