Neural Engine IP

67 IP from 21 vendors (1 - 10)

-

Neural engine IP - Tiny and Mighty

- The Origin E1 NPUs are individually customized to various neural networks commonly deployed in edge devices, including home appliances, smartphones, and security cameras.

- For products like these that require dedicated AI processing that minimizes power consumption, silicon area, and system cost, E1 cores offer the lowest power consumption and area in a 1 TOPS engine.

-

Neural engine IP - AI Inference for the Highest Performing Systems

- The Origin E8 is a family of NPU IP inference cores designed for the most performance-intensive applications, including automotive and data centers.

- With its ability to run multiple networks concurrently with zero-penalty context switching, the E8 excels when high performance, low latency, and efficient processor utilization are required.

- Unlike other IPs that rely on tiling to scale performance—introducing associated power, memory sharing, and area penalties—the E8 offers single-core performance of up to 128 TOPS, delivering the computational capability required by the most advanced LLM and ADAS implementations.

-

Neural engine IP - The Cutting Edge in On-Device AI

- The Origin E6 is a versatile NPU that is customized to match the needs of next-generation smartphones, automobiles, AR/VR, and consumer devices.

- With support for video, audio, and text-based AI networks, including standard, custom, and proprietary networks, the E6 is the ideal hardware/software co-designed platform for chip architects and AI developers.

- It offers broad native support for current and emerging AI models, and achieves ultra-efficient workload scheduling and memory management, with up to 90% processor utilization—avoiding dark silicon waste.

-

Neural engine IP - Balanced Performance for AI Inference

- The Origin™ E2 is a family of power- and area-optimized NPU IP cores designed for devices like smartphones and edge nodes.

- It supports video (at resolutions up to 4K and beyond), audio, and text-based neural networks, including public, custom, and proprietary networks.

-

Compact neural network engine offering scalable performance (32, 64, or 128 MACs) with a very low energy footprint

- Best-in-Class Energy

- Enables Compelling Use Cases and Advanced Concurrency

- Scalable IP for Various Workloads
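As a rough illustration of what the 32, 64, and 128 MAC configurations mean for peak throughput, the sketch below counts each MAC as two operations (one multiply, one add) per cycle. The 1 GHz clock is a hypothetical value chosen only to make the arithmetic concrete; the listing does not state a clock frequency, and the function name is illustrative.

```python
def peak_ops_per_second(macs_per_cycle: int, clock_hz: float) -> float:
    """Peak operations per second, counting one MAC as 2 ops (multiply + add)."""
    return 2 * macs_per_cycle * clock_hz


# Assumption: a 1 GHz clock, used purely for illustration (not a vendor figure).
ASSUMED_CLOCK_HZ = 1e9

for macs in (32, 64, 128):
    gops = peak_ops_per_second(macs, ASSUMED_CLOCK_HZ) / 1e9
    print(f"{macs} MACs -> {gops:.0f} GOPS peak at the assumed 1 GHz clock")
```

Real throughput depends on the actual clock, utilization, and data types, so these figures are upper bounds under the stated assumption.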

-

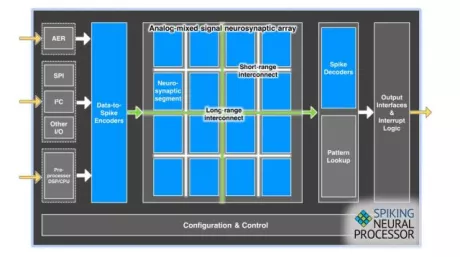

Ultra low power inference engine

- Neuromorphic processor

- Sub-milliwatt power

- Ultra-low power AI processing

-

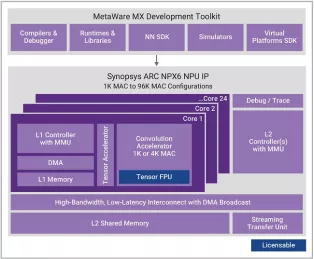

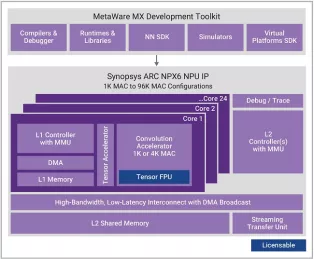

Enhanced Neural Processing Unit providing 98,304 MACs/cycle of performance for AI applications

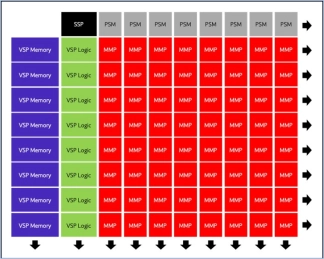

- Scalable real-time AI / neural processor IP with up to 3,500 TOPS performance

- Supports CNNs, transformers (including generative AI), recommender networks, RNNs/LSTMs, etc.

- Industry-leading power efficiency (up to 30 TOPS/W)

- One 1K MAC core or 1-24 cores of an enhanced 4K MAC/core convolution accelerator
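The MACs/cycle figures quoted for the entries in this family appear consistent with a whole number of 4K-MAC cores. The sketch below checks that arithmetic, assuming "4K" means 4,096 MACs per core; the function and constant names are illustrative and not part of any vendor API.

```python
# Assumption: "4K MAC/core" is read as 4 * 1024 = 4096 MACs per core.
MACS_PER_ENHANCED_CORE = 4096
MIN_CORES, MAX_CORES = 1, 24  # "1-24 cores" as stated in the listing


def cores_for(macs_per_cycle: int) -> int:
    """Return the core count implied by a MACs/cycle figure, or raise ValueError."""
    cores, remainder = divmod(macs_per_cycle, MACS_PER_ENHANCED_CORE)
    if remainder != 0 or not MIN_CORES <= cores <= MAX_CORES:
        raise ValueError(f"{macs_per_cycle} MACs/cycle is not 1-24 cores of 4096 MACs")
    return cores


# MACs/cycle figures taken from the listing entries.
for macs in (8_192, 65_536, 98_304):
    print(f"{macs:,} MACs/cycle -> {cores_for(macs)} cores")
```

Under that reading, the three listed configurations correspond to 2, 16, and 24 cores respectively.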

-

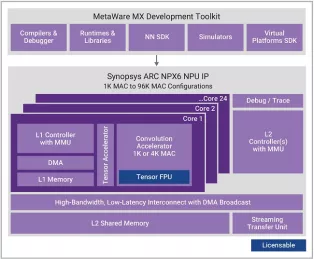

Enhanced Neural Processing Unit providing 8,192 MACs/cycle of performance for AI applications

- Scalable real-time AI / neural processor IP with up to 3,500 TOPS performance

- Supports CNNs, transformers (including generative AI), recommender networks, RNNs/LSTMs, etc.

- Industry-leading power efficiency (up to 30 TOPS/W)

- One 1K MAC core or 1-24 cores of an enhanced 4K MAC/core convolution accelerator

-

Enhanced Neural Processing Unit providing 65,536 MACs/cycle of performance for AI applications

- Scalable real-time AI / neural processor IP with up to 3,500 TOPS performance

- Supports CNNs, transformers (including generative AI), recommender networks, RNNs/LSTMs, etc.

- Industry-leading power efficiency (up to 30 TOPS/W)

- One 1K MAC core or 1-24 cores of an enhanced 4K MAC/core convolution accelerator