Why VAD and what solution to choose?

By Baptiste Tournoud, Dolphin Design

More than ever before, the attention to power consumption is paramount when developing new products. In this environment, voice activity detection has a major role to play for any new voice-controlled system. The main objective of VAD is to lower power consumption, saving on computation or data processing by deactivating part of the SoC when no speech patterns are detected.

Markets like home assistants and telecommunications can greatly benefit from this feature as the

always-on mode is not exactly suitable for wireless products or when privacy is a concern.

1) Different VADs and how to compare them

A VAD solution allows the system to detect when someone is speaking. When connected to a keyword-spotting DSP, you will be able to create complete speech processing solutions.

With that in mind, it is important to differentiate between voice detection, that identifies human speech patterns, and speech recognition, which is the overall function achieved by the system, ie. translating wavelengths into digital computable information. This document only aims to present the different voice detection systems currently available and their respective benefits and drawbacks.

One of the most widely used VAD techniques is to spot activity in the human audible spectrum, which includes more than just voice. The sounds that surround your product can create parasitic or conflicting noises working in that bandwidth, common examples being traffic, waves, construction sites, etc..

In order to compare the different solutions on a level playing field, some metrics are necessary. NDV stands for Noise Detected as Voice and will represent false positive. VDV stands for Voice Detected as Voice and are true positive. The last note-worthy metric is Detection Time which characterizes the amount of time taken by the VAD to identify and flag a sound as being human speech.

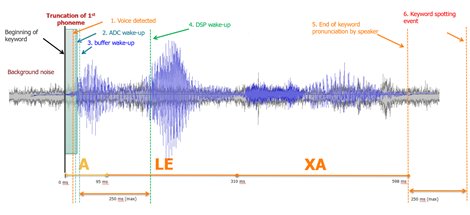

This detection time shows the clear limitation of VAD. It means that the first phoneme will be truncated and introduce a delay between the start of speech and the start of speech processing. Such a truncation could have an impact on the algorithm dealing with the speech synthesis if it is too long, and needs to be taken into account when adding a VAD into the system to lower its power consumption.

Figure 1: Detection time introduces delays

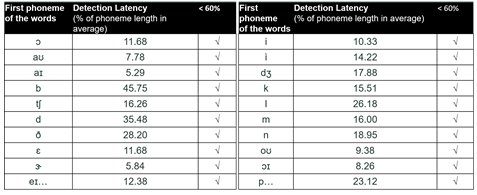

Lastly, how to compare performance of two VAD solutions? Benchmarking is a good way to go. Dolphin has developed MiWok benchmarking platform that assesses the performance of a VAD with key metrics like NDV, VDV and Detection Time.

Figure 2: Miwok results for English phonemes in far field conditions

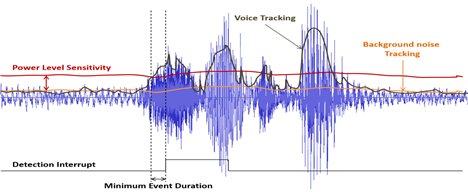

An interesting feature that Dolphin’s VAD offers is a self-adaptation to background environment noises, by comparing the amplified electrical signal with at least one threshold level. Its patented voice recognition circuit is operable by a wake-up signal which forms a true/false signal, enabling an easy to implement software solution.

Figure 3: Example of wake-up signal

2) What does the market offer at this point?

There are different solutions already available in the market today, ranging from all-in-one microphones to software solutions identifying specific structure (speech, glass breaking…) in the sound input.

The main solutions with their respective major benefits and possible short comings will now be discussed. In the following figures used to present these solutions, a yellow bubble means the IP is always on and a dark green bubble means that the IP can be shut down until a wake-up signal is sent.

![]()

Smart microphones are a plug and play, all-in-one solution that allows for an easy VAD solution and allows for the highest number of designers to be able to integrate this solution in their design.

However, it often comes at the cost of lack of configurability. For example, the system will have difficulties identifying voices in an environment it was not designed to be in (crowded noisy environments vs. quiet rooms). You must be able to clearly predict the environment your product will be used in, to set the voice threshold correctly for example. This lack of flexibility can be a deal-breaker if the system is meant to be used in different environments (smartphones, cars…).

Moreover, these solutions often detect a sound level and not specifically voice activity, thus have to wake the audio chain more often.

![]()

Figure 4: Audio chain illustration with a smart microphone

Another available solution is to implement a subsystem straight out of the microphone that includes processing capabilities, thus providing an easy implementation of a system that runs true Speech Recognition.

The main drawback of this solution is that the DSP is always-on and so the overall power consumption savings will be reduced compared to other solutions. In addition, some of these solutions need “training” to recognize specific triggers, instead of offering the possibility of being programmed.

![]()

Figure 5: Audio chain illustration with an integrated solution

The last hardware solution worth mentioning is IPs that can be integrated between the microphone and the ADC, thus enabling the maximum number of IPs to be put in sleep mode as illustrated in the figure below. These usually trigger interrupts to signal that an event has been detected. The most customizable ones will offer some configurability with the environment (background noises mainly) as well as different input levels (far field vs. near field).

Figure 6: Audio chain illustration with a dedicated VAD IP

Adopting this type of solution will need a hands-on approach in terms of integration and software programming, but will deliver the most power savings and the ultimate adaptable event detection solution out there.

Finally, you can also rely on an entirely software solution that can run true keyword spotting at any given time. This solution, however, needs the whole audio channel to run constantly, drastically limiting any power saving that a VAD system can offer.

![]()

Figure 7: Audio chain illustration with VAD running on the DSP

While all the solutions presented above allow you to run speech recognition within your DSP, choosing the solution most suitable for your application will be a balancing act between your application needs and the different limitations introduced by each of those solutions. Time to market and experience in SoC design might also be a decisive deal-breaker for some of them.

This table summarizes the main VAD differentiators for the above solutions, if performance metrics are equal:

Figure 8: Summary of the different solutions

3) WhisperTrigger Voice Activity Detector

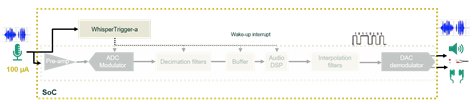

Dolphin provides two kinds of VAD named WhisperTrigger. The analog and digital solutions for VAD are called WT-a and WT-d respectively. The choice in the microphone technology (analog or digital) will drive the choice between these two solutions.

To link up with the solutions previously presented, this IP is to be integrated between the microphone and the ADC in the case of the WT-a, and between the microphone and the decimation filters in the case of WT-d in order to allow a maximum reduction of power consumption. This solution has the undeniable advantage of being able to operate without requiring any DSP resources.

Figure 9: WT-d integration example

Figure 10: WT-a integration example

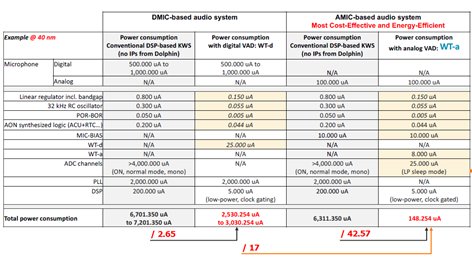

The two previous figures present the clear advantage of integrating either solution, as they allow to turn off most of the audio chain, thus cutting power consumption by more than 42x when compared to a standard always-on audio chain.

The following table highlights the power saving cuts possible by using a WhisperTrigger in 40nm:

Figure 11: WhisperTrigger power savings potential

Dolphin’s highly customizable and patented solution is offering on-the-fly customization capabilities that allow you to adapt to any kind of environment and optimize metrics like VDV and NDV. These features, highlighted in Figure 3 are referred to as the Voice Tracking and Background noise tracking, and they allow the IP to auto-adjust to voice and background noise levels that are different from the value specified initially. You can still set specific values, but this adaptivity allows for better detection of voice activity depending on the environment.

For example, when switching from a quiet environment to a busier one (walking into a bar, opening the window in a traffic jam) or when needing to operate in both Near-Field and Far-Field conditions, these features will allow the IP to adapt the defined threshold level, trigger the interrupt signal and maintain the highest amount of VDV while limiting the amount of NDV.

While being easily accessible, this solution might need a proactive approach into VLSI design. Furthermore, the solution delivers an interrupt to signal that a voice has been detected which eases the software implementation, but this type of interruption also needs to be considered while implementing the software running on the DSP.

That being said, we believe that our offering is perfectly positioned for you to be able to benefit from all of the power savings VAD can offer while being easily implemented into your SoC. If you want to learn more about our WhisperTrigger products, you can find additional information here.

WhisperTrigger is part of our BAT audio platform, enabling a seamless configuration and assembly of your high fidelity and low power audio device, offering the best analog performances with a high configurability of the digital filtering.

ABOUT THE AUTHOR

|

| Baptiste Tournoud graduated from a 3-year sandwich CSEE master course within STMicroelectronics where he contributed to the development of PDK’s DFM decks ranging from 130 to 28 nm. Then he spent a year as a software developer at Mentor Graphics. In 2015, he evolved into a technical support role within Arm to help designers develop memory compilers. In 2019, he joined Dolphin Design as a FAE for audio products. |

Related Semiconductor IP

- NPU IP Core for Mobile

- NPU IP Core for Edge

- Specialized Video Processing NPU IP

- HYPERBUS™ Memory Controller

- AV1 Video Encoder IP

Related White Papers

- Agile Analog's Approach to Analog IP Design and Quality --- Why "Silicon Proven" is NOT What You Think

- Why choose Linux for embedded development projects?

- The why, where and what of low-power SoC design

- The 'why' and 'what' of algorithmic synthesis

Latest White Papers

- Breaking the Memory Bandwidth Boundary. GDDR7 IP Design Challenges & Solutions

- Automating NoC Design to Tackle Rising SoC Complexity

- Memory Prefetching Evaluation of Scientific Applications on a Modern HPC Arm-Based Processor

- Nine Compelling Reasons Why Menta eFPGA Is Essential for Achieving True Crypto Agility in Your ASIC or SoC

- CSR Management: Life Beyond Spreadsheets