Vision AI Accelerator IP

21 IP from 7 vendors (1-10)

RISC-V-Based, Open Source AI Accelerator for the Edge

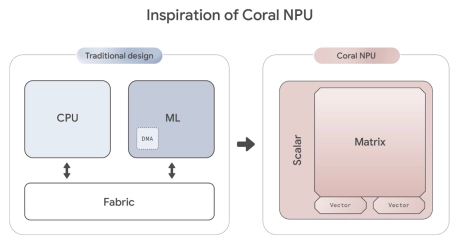

- Coral NPU is a machine learning (ML) accelerator core designed for energy-efficient AI at the edge.

- Based on the open hardware RISC-V ISA, it is available as validated open-source IP for commercial silicon integration.

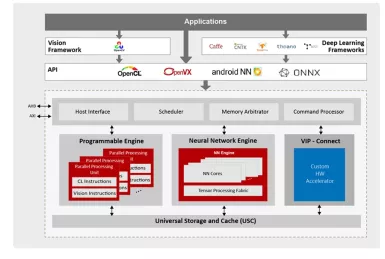

NPU IP for AI Vision and AI Voice

- 128-bit vector processing unit (shader + ext)

- OpenCL 3.0 shader instruction set

- Enhanced vision instruction set (EVIS)

- INT 8/16/32b, Float 16/32b
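As a rough illustration of what a 128-bit vector width implies for the listed data types, the sketch below computes how many elements of each width fit in one 128-bit vector. It assumes elements are densely packed with no padding; the listing does not describe the actual lane organization of this core, and `lanes_per_vector` is a hypothetical helper introduced only for this example.

```python
VECTOR_BITS = 128  # vector width taken from the listing


def lanes_per_vector(element_bits: int, vector_bits: int = VECTOR_BITS) -> int:
    """Number of elements of the given width that fit in one vector.

    Assumes dense packing (an assumption, not a documented property of the core).
    """
    if element_bits <= 0 or vector_bits % element_bits != 0:
        raise ValueError("element width must evenly divide the vector width")
    return vector_bits // element_bits


# Data types named in the listing: INT 8/16/32b and Float 16/32b.
for name, bits in [("INT8", 8), ("INT16", 16), ("INT32", 32), ("FP16", 16), ("FP32", 32)]:
    print(f"{name}: {lanes_per_vector(bits)} elements per 128-bit vector")
```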


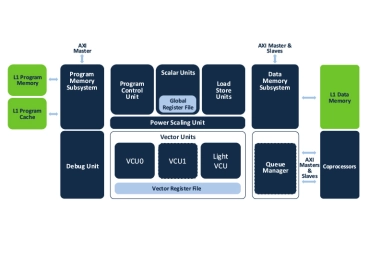

Vision AI DSP

- Ceva-SensPro is a family of DSP cores designed to combine vision, radar, and AI processing in a single architecture.

- The silicon-proven cores provide scalable performance for a wide range of applications that combine vision processing, radar/LiDAR processing, and AI inferencing to interpret their surroundings. These include automotive, robotics, surveillance, AR/VR, mobile devices, and smart homes.

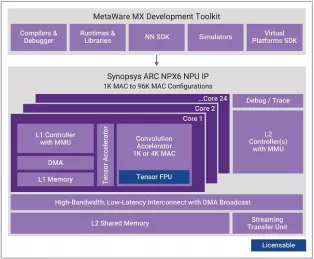

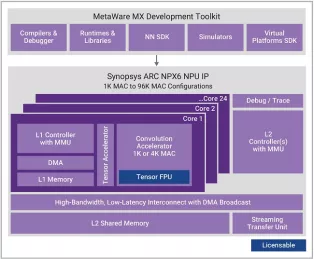

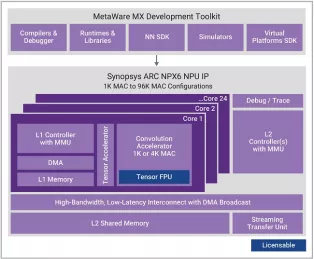

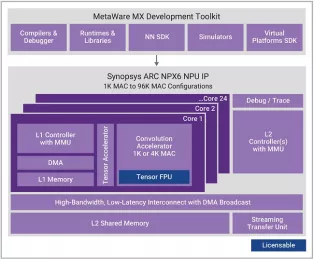

Enhanced Neural Processing Unit providing 98,304 MACs/cycle of performance for AI applications

- Scalable real-time AI / neural processor IP with up to 3,500 TOPS performance

- Supports CNNs, transformers, including generative AI, recommender networks, RNNs/LSTMs, etc.

- Industry-leading power efficiency (up to 30 TOPS/W)

- One 1K MAC core or 1-24 cores of an enhanced 4K MAC/core convolution accelerator

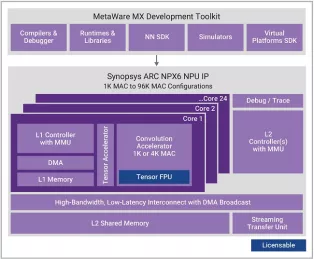

Enhanced Neural Processing Unit providing 8,192 MACs/cycle of performance for AI applications

- Scalable real-time AI / neural processor IP with up to 3,500 TOPS performance

- Supports CNNs, transformers, including generative AI, recommender networks, RNNs/LSTMs, etc.

- Industry-leading power efficiency (up to 30 TOPS/W)

- One 1K MAC core or 1-24 cores of an enhanced 4K MAC/core convolution accelerator

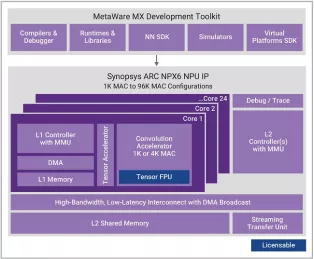

Enhanced Neural Processing Unit providing 65,536 MACs/cycle of performance for AI applications

- Scalable real-time AI / neural processor IP with up to 3,500 TOPS performance

- Supports CNNs, transformers, including generative AI, recommender networks, RNNs/LSTMs, etc.

- Industry-leading power efficiency (up to 30 TOPS/W)

- One 1K MAC core or 1-24 cores of an enhanced 4K MAC/core convolution accelerator

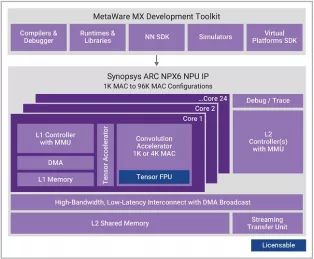

Enhanced Neural Processing Unit providing 49,152 MACs/cycle of performance for AI applications

- Scalable real-time AI / neural processor IP with up to 3,500 TOPS performance

- Supports CNNs, transformers, including generative AI, recommender networks, RNNs/LSTMs, etc.

- Industry-leading power efficiency (up to 30 TOPS/W)

- One 1K MAC core or 1-24 cores of an enhanced 4K MAC/core convolution accelerator

Enhanced Neural Processing Unit providing 4,096 MACs/cycle of performance for AI applications

- Scalable real-time AI / neural processor IP with up to 3,500 TOPS performance

- Supports CNNs, transformers, including generative AI, recommender networks, RNNs/LSTMs, etc.

- Industry-leading power efficiency (up to 30 TOPS/W)

- One 1K MAC core or 1-24 cores of an enhanced 4K MAC/core convolution accelerator

Enhanced Neural Processing Unit providing 32,768 MACs/cycle of performance for AI applications

- Scalable real-time AI / neural processor IP with up to 3,500 TOPS performance

- Supports CNNs, transformers, including generative AI, recommender networks, RNNs/LSTMs, etc.

- Industry-leading power efficiency (up to 30 TOPS/W)

- One 1K MAC core or 1-24 cores of an enhanced 4K MAC/core convolution accelerator

Enhanced Neural Processing Unit providing 24,576 MACs/cycle of performance for AI applications

- Scalable real-time AI / neural processor IP with up to 3,500 TOPS performance

- Supports CNNs, transformers, including generative AI, recommender networks, RNNs/LSTMs, etc.

- Industry-leading power efficiency (up to 30 TOPS/W)

- One 1K MAC core or 1-24 cores of an enhanced 4K MAC/core convolution accelerator
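The MACs/cycle figures in the Enhanced Neural Processing Unit listings line up with whole numbers of 4K-MAC cores (for example, 24 x 4,096 = 98,304). The sketch below works through that arithmetic and converts MACs/cycle into a peak dense throughput figure. It is illustrative only: the 1 GHz clock is an assumed value, not a vendor specification, each MAC is counted as two operations, and the function names are hypothetical. It does not attempt to reproduce the "up to 3,500 TOPS" figure, whose conditions the listings do not state.

```python
MACS_PER_CORE = 4096        # "4K MAC/core" from the listings
ASSUMED_CLOCK_HZ = 1e9      # assumption for illustration; not a vendor figure
LISTED_TOPS_PER_WATT = 30   # "up to 30 TOPS/W", a best-case figure

# MACs/cycle values as they appear in the listing titles.
LISTED_MACS = [98_304, 8_192, 65_536, 49_152, 4_096, 32_768, 24_576]


def cores_for(macs_per_cycle: int) -> int:
    """Number of 4K-MAC cores that yields the given MACs/cycle."""
    cores, remainder = divmod(macs_per_cycle, MACS_PER_CORE)
    if remainder:
        raise ValueError("not a whole number of 4K-MAC cores")
    return cores


def peak_tops(macs_per_cycle: int, clock_hz: float = ASSUMED_CLOCK_HZ) -> float:
    """Peak dense TOPS, counting one MAC as two operations (multiply and add)."""
    return 2 * macs_per_cycle * clock_hz / 1e12


def min_watts(tops: float, tops_per_watt: float = LISTED_TOPS_PER_WATT) -> float:
    """Lower bound on power at the best-case efficiency figure."""
    return tops / tops_per_watt


for macs in LISTED_MACS:
    tops = peak_tops(macs)
    print(f"{macs:>6} MACs/cycle = {cores_for(macs):>2} cores, "
          f"{tops:.1f} TOPS at the assumed 1 GHz, "
          f"at least {min_watts(tops):.2f} W at 30 TOPS/W")
```

Because "up to 30 TOPS/W" is a best-case figure, `min_watts` gives only a lower bound; real power depends on process node, clock, and workload, none of which the listings specify.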