LLM Accelerator IP

3 IP from 3 vendors (1-3)

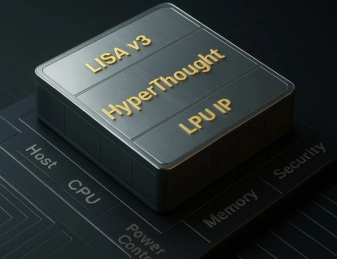

LLM Accelerator IP for Multimodal, Agentic Intelligence

- HyperThought is a cutting-edge LLM accelerator IP designed to revolutionize AI applications.

- Built for the demands of multimodal and agentic intelligence, HyperThought delivers unparalleled performance, efficiency, and security.

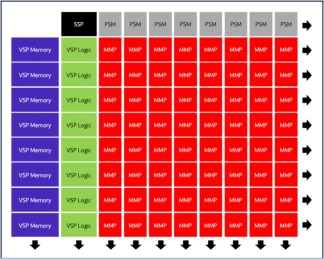

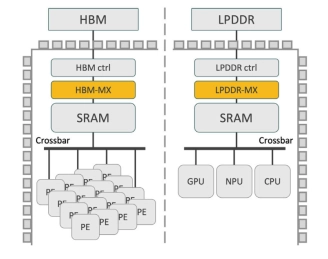

High-Performance Memory Expansion IP for AI Accelerators

- Expand Effective HBM Capacity by up to 50%

- Enhance AI Accelerator Throughput

- Boost Effective HBM Bandwidth

- Integrated Address Translation and Memory Management:
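The capacity claim is easiest to read as a ceiling: "up to 50%" means the effective capacity is at most 1.5× the physical HBM. The sketch below shows that arithmetic. The 80 GB stack and the 100 GB model footprint are hypothetical numbers, and the vendor does not state how the expansion is achieved or how the real gain varies with workload.

```python
def effective_capacity_gb(physical_gb: float, expansion: float = 0.5) -> float:
    """Upper bound on effective capacity given a fractional expansion (0.5 = 'up to 50%')."""
    return physical_gb * (1.0 + expansion)


def fits(model_gb: float, physical_gb: float, expansion: float = 0.5) -> bool:
    """True if a model footprint fits within the (best-case) effective capacity."""
    return model_gb <= effective_capacity_gb(physical_gb, expansion)


# Hypothetical example: an 80 GB HBM configuration and a 100 GB model footprint.
print(effective_capacity_gb(80))   # 120.0 GB best case
print(fits(100, 80))               # True with the full 50% expansion
print(fits(100, 80, 0.0))          # False without any expansion
```

Because the figure is an upper bound, a capacity plan built on it should treat `expansion` as a workload-dependent parameter rather than a fixed 0.5.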

Neural Engine IP - AI Inference for the Highest-Performing Systems

- The Origin E8 is a family of NPU IP inference cores designed for the most performance-intensive applications, including automotive and data centers.

- With its ability to run multiple networks concurrently with zero-penalty context switching, the E8 excels when high performance, low latency, and efficient processor utilization are required.

- Unlike other IP cores that scale performance by tiling, which introduces power, memory-sharing, and area penalties, the E8 offers single-core performance of up to 128 TOPS, delivering the computational capability required by the most advanced LLM and ADAS implementations.
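To put the 128 TOPS figure in hardware terms, the sketch below uses the common peak-throughput convention in which one multiply-accumulate (MAC) counts as two operations, so peak TOPS = MAC units × 2 × clock / 10^12. The listing states neither the E8's clock frequency nor its MAC count, and the vendor may count operations differently; the 1 GHz clock below is a hypothetical value chosen only to illustrate the relationship.

```python
def peak_tops(mac_units: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak tera-operations per second, counting one MAC as ops_per_mac operations."""
    return mac_units * ops_per_mac * clock_hz / 1e12


def macs_needed(target_tops: float, clock_hz: float, ops_per_mac: int = 2) -> float:
    """MAC units required to reach a target peak TOPS at a given clock."""
    return target_tops * 1e12 / (ops_per_mac * clock_hz)


# Hypothetical 1 GHz clock (not stated in the listing).
print(macs_needed(128, 1e9))   # 64000.0 MAC units
print(peak_tops(64000, 1e9))   # 128.0 TOPS
```

Peak TOPS is a theoretical ceiling; sustained throughput on a real LLM or ADAS network also depends on utilization, which is the property the listing highlights for a single large core versus tiled designs.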