From data centers to autonomous cars, the most demanding AI applications need high-performance NPUs with the lowest possible latency. With its highly customizable architecture, the Origin™ E8 delivers performance that scales to 128 TOPS in a single core and to PetaOps with multiple cores.

The Origin E8 is a family of NPU IP inference cores designed for the most performance-intensive applications, including automotive and data centers. Because it can run multiple networks concurrently with zero-penalty context switching, the E8 excels when high performance, low latency, and efficient processor utilization are required. Other IPs rely on tiling to scale performance, which introduces power, memory-sharing, and area penalties. The E8 instead offers single-core performance of up to 128 TOPS, delivering the computational capability required by the most advanced LLM and ADAS implementations.
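As a rough cross-check of the headline figure, peak throughput for a MAC array is conventionally computed as MACs × 2 operations × clock frequency. The sketch below assumes 2 operations per MAC (multiply plus accumulate) and a 1 GHz clock, which is the clock quoted for the YOLOv3 example in the specification table. The clock behind the 128 TOPS figure is not stated, so treat the result as an approximation. The function name `peak_tops` is hypothetical.

```python
# Peak-throughput arithmetic for a MAC array (illustrative sketch).
# Assumptions: 1 MAC = 2 operations (multiply + add), each MAC unit completes
# one MAC per cycle, and the clock is 1 GHz.

def peak_tops(num_macs: int, clock_ghz: float) -> float:
    """Return peak tera-operations per second for num_macs MAC units."""
    ops_per_cycle = 2 * num_macs                  # multiply and add counted separately
    ops_per_second = ops_per_cycle * clock_ghz * 1e9
    return ops_per_second / 1e12                  # convert ops/s to TOPS


# "64K" may mean 65,536 (binary) or 64,000 (decimal) MACs, so show both.
configs = [("16K (binary)", 16 * 1024), ("64K (binary)", 64 * 1024), ("64K (decimal)", 64_000)]
for label, macs in configs:
    print(f"{label:>14}: {peak_tops(macs, 1.0):7.3f} TOPS at 1 GHz")
```

Under these assumptions the largest configuration lands between 128 and roughly 131 TOPS, depending on how "64K" is read, which is in line with the quoted single-core figure of up to 128 TOPS.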

Innovative Architecture

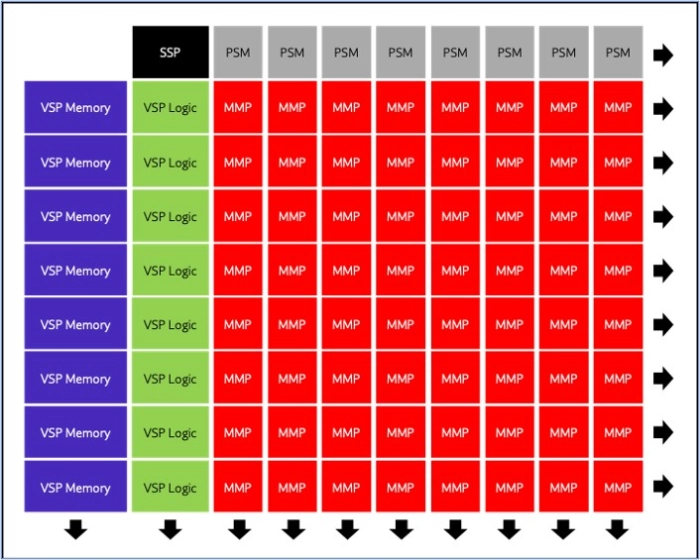

The Origin E8 neural engine uses Expedera’s unique packet-based architecture, which is far more efficient than common layer-based architectures. The architecture enables parallel execution across multiple layers, achieving better resource utilization and deterministic performance. It also eliminates the need for hardware-specific optimizations, allowing customers to run their trained neural networks unchanged, without reducing model accuracy. The result is higher performance with lower power, area, and latency.

Specifications

| Feature | Details |
| --- | --- |
| Compute Capacity | 16K to 64K INT8 MACs |
| Multi-tasking | Runs more than 10 simultaneous jobs |
| Power Efficiency | 18 TOPS/W effective; no pruning, sparsity, or compression required (though supported) |
| Example Networks Supported | Llama2-7B, YOLOv3, YOLOv5, RetinaNet, Panoptic-DeepLab, PlainLite, ResNeXt, ResNet-50, Inception v3, RNN-T, MobileNet V1, MobileNet SSD, BERT, EfficientNet, FSRCNN, CPN, CenterNet, U-Net, ShuffleNet V2, Swin, SSD-ResNet34, DETR, others |
| Example Performance | YOLOv3 (608 × 608): 626 IPS, 115.6 IPS/W (N7 process, 1 GHz, no sparsity, pruning, or compression applied) |
| Layer Support | Standard NN functions, including Conv, Deconv, FC, activations, Reshape, Concat, elementwise, pooling, Softmax, and others. Programmable general FP functions, including Sigmoid, Tanh, Sine, Cosine, Exp, and others. Custom operators supported. |
| Data Types | INT4/INT8/INT10/INT12/INT16 activations/weights; FP16/BFloat16 activations/weights |
| Quantization | Channel-wise quantization (TFLite specification); the software toolchain supports Expedera, customer-supplied, or third-party quantization |
| Latency | Deterministic performance guarantees, no back pressure |
| Frameworks | TensorFlow, TFLite, ONNX, others supported |
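The quantization row cites channel-wise quantization per the TFLite specification. In that scheme, weights get one scale per output channel, the zero point is fixed at 0, and INT8 values are restricted to [-127, 127]. The sketch below illustrates the idea in plain Python. The function names are hypothetical, and this is not Expedera's toolchain.

```python
# Per-channel (per-axis) symmetric INT8 weight quantization, following the
# conventions of the TensorFlow Lite quantization specification:
# one scale per output channel, zero point fixed at 0, values in [-127, 127].
# Illustrative sketch only; function names are hypothetical.
from typing import List, Tuple


def quantize_per_channel(weights: List[List[float]]) -> Tuple[List[List[int]], List[float]]:
    """Quantize a [out_channels][elements] weight matrix channel by channel."""
    q_weights: List[List[int]] = []
    scales: List[float] = []
    for channel in weights:
        max_abs = max(abs(w) for w in channel)
        # Map the channel's largest magnitude to 127; guard all-zero channels.
        scale = max_abs / 127.0 if max_abs > 0 else 1.0
        q = [max(-127, min(127, round(w / scale))) for w in channel]
        q_weights.append(q)
        scales.append(scale)
    return q_weights, scales


def dequantize_per_channel(q_weights: List[List[int]], scales: List[float]) -> List[List[float]]:
    """Recover approximate float weights: real_value = scale * int_value (zero point is 0)."""
    return [[q * s for q in ch] for ch, s in zip(q_weights, scales)]


# Two output channels with very different magnitudes.
weights = [[0.6, -1.0, 0.3], [0.01, 0.02, -0.04]]
q, s = quantize_per_channel(weights)
print(q, s)
print(dequantize_per_channel(q, s))
```

Giving the small-magnitude second channel its own scale preserves far more of its precision than one tensor-wide scale derived from the first channel's maximum of 1.0 would.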
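The example performance row quotes both throughput (IPS) and efficiency (IPS/W), so the implied power draw and energy per inference follow by simple division. The sketch below assumes both figures were measured under the same conditions (N7, 1 GHz, no sparsity); the outputs are derived numbers, not figures published by Expedera.

```python
# Derive implied power and energy per inference from the quoted YOLOv3 figures.
# Assumption: IPS and IPS/W come from the same measurement, so
# power = IPS / (IPS/W). These are derived values, not published ones.

ips = 626.0           # inferences per second, YOLOv3 at 608 x 608
ips_per_watt = 115.6  # inferences per second per watt

power_w = ips / ips_per_watt           # watts
energy_mj = 1000.0 / ips_per_watt      # millijoules per inference (1 / (IPS/W) joules)
interval_ms = 1000.0 / ips             # average time between completed inferences

print(f"implied power: {power_w:.2f} W")
print(f"energy per inference: {energy_mj:.2f} mJ")
print(f"average interval between inferences: {interval_ms:.2f} ms")
```

Note that the interval is a throughput figure; per-inference latency can differ if frames overlap in the pipeline.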