The Expedera TimbreAI T3 is an ultra-low-power artificial intelligence (AI) inference engine designed for noise reduction use cases in power-constrained devices such as headsets. TimbreAI achieves best-in-class power consumption of <300 µW thanks to Expedera’s unique packet-based architecture. TimbreAI requires no external memory access, which saves system power while increasing performance and reducing chip size, processing latency, and system BOM costs.

Designed for quick and seamless deployment into customer hardware, TimbreAI provides optimal performance within the strict power and area requirements of today’s advanced audio devices. TimbreAI’s unified compute pipeline architecture enables highly efficient hardware scheduling and advanced memory management to achieve unsurpassed end-to-end low-latency performance. This minimizes die area, saves power, and maximizes performance.

AI Accelerator (NPU) IP - 3.2 GOPS for Audio Applications

Overview

Key Features

- 3.2 GOPS; ideally suited for audio AI applications such as active noise reduction

- Ultra-low <300 µW power consumption (TSMC 22nm)

- Low latency

- Supported neural networks include RNN, LSTM, and GRU

- Supported data types include INT8 x INT8, INT16 x INT8, and INT16 x INT16

- Use familiar open-source platforms like TFLite, ONNX, and TVM

- Delivered as soft IP: portable to any process
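To put the headline figures above in context, the following is a minimal back-of-the-envelope sketch in Python. It uses only the 3.2 GOPS and <300 µW numbers from the feature list. It assumes, without confirmation from this datasheet, that the power figure applies at peak throughput, and it uses a hypothetical 10 ms audio frame purely for illustration. The function names are illustrative and not part of any Expedera SDK.

```python
# Back-of-the-envelope figures derived from the datasheet numbers.
# Assumption: the <300 uW figure holds while running at the full 3.2 GOPS.

PEAK_OPS_PER_SECOND = 3.2e9  # 3.2 GOPS, from the feature list
POWER_WATTS = 300e-6         # upper bound: "<300 uW" on TSMC 22nm
FRAME_SECONDS = 0.010        # hypothetical 10 ms audio frame (not from the datasheet)


def ops_per_watt(ops_per_second: float, watts: float) -> float:
    """Throughput per watt; a lower bound when `watts` is an upper bound."""
    return ops_per_second / watts


def ops_per_frame(ops_per_second: float, frame_seconds: float) -> float:
    """Operations available to process one audio frame in real time."""
    return ops_per_second * frame_seconds


def energy_per_frame_joules(watts: float, frame_seconds: float) -> float:
    """Energy spent per frame; an upper bound when `watts` is an upper bound."""
    return watts * frame_seconds


if __name__ == "__main__":
    efficiency = ops_per_watt(PEAK_OPS_PER_SECOND, POWER_WATTS)
    budget = ops_per_frame(PEAK_OPS_PER_SECOND, FRAME_SECONDS)
    energy = energy_per_frame_joules(POWER_WATTS, FRAME_SECONDS)
    print(f"Efficiency: at least {efficiency / 1e12:.2f} TOPS/W")
    print(f"Budget per frame: {budget / 1e6:.0f} million operations")
    print(f"Energy per frame: at most {energy * 1e6:.1f} microjoules")
```

Under these assumptions, the figures work out to roughly 10.7 TOPS/W, about 32 million operations per 10 ms frame, and at most 3 µJ per frame. Actual values depend on the workload, process node, and operating conditions.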

Benefits

- Ultra-low power implementation for battery-powered devices.

- Drastically reduces memory requirements.

- Portable to any process.

- No DRAM required.

- Run trained models unchanged, without the need for hardware-dependent optimizations.

- Deterministic, real-time performance.

- Improved performance for your workloads, while still running a breadth of models.

- Delivered as soft IP.

- Simple software stack.

- Achieve the same accuracy as your trained model.

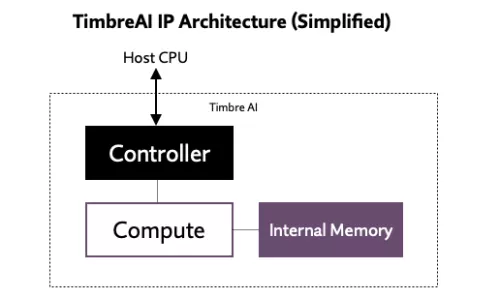

Block Diagram

Applications

- Battery-powered headset

- Hearables

Deliverables

- RTL or GDS

- SDK (TVM-based)

- Documentation

Technical Specifications

Maturity

In production

Availability

In production
